Quantum computing promises to transform decision-making, optimisation and simulation across many industries, yet realising this potential requires navigating a complex and rapidly evolving field. Emily L. Tucker of Clemson University and Mohammadhossein Mohammadisiahroudi of the University of Maryland, Baltimore County, together with their colleagues, address this challenge with a comprehensive overview of quantum computing tailored to industrial engineering and operations research. The work sets out foundational principles, surveys current hardware and software, and details the algorithmic advances most relevant to practical problem-solving, including approaches to linear algebra, optimisation and stochastic simulation. By bridging quantum theory and real-world applications, and by emphasising the interplay between hardware and algorithmic development, the authors argue that industrial engineers are uniquely positioned to drive the future of quantum computing and to deliver tangible benefits for both academia and industry.

Quantum Algorithms and Variational Optimisation Methods

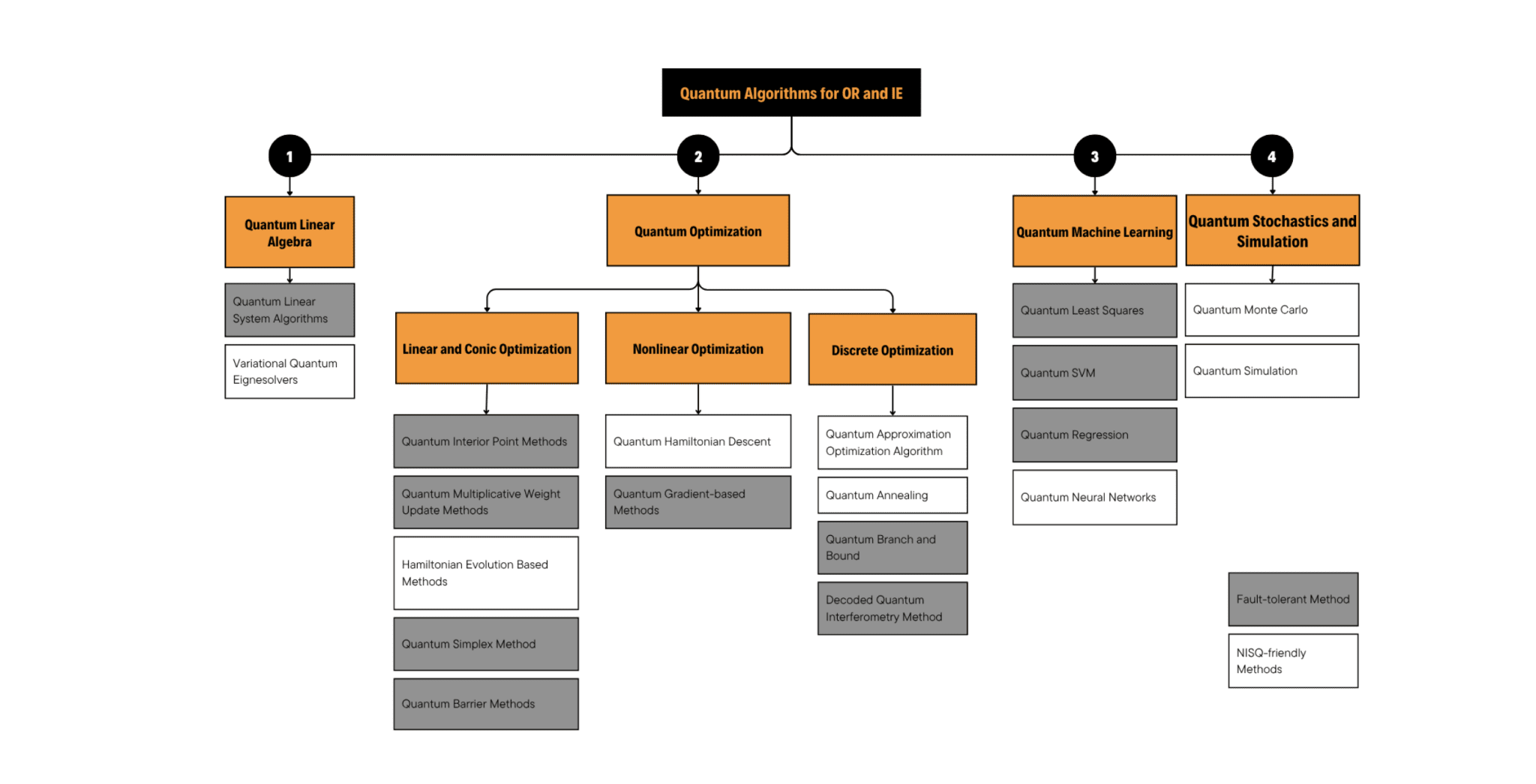

This work surveys the growing field of quantum computing and its potential to transform optimisation and problem-solving. Researchers are exploring quantum algorithms such as Shor’s algorithm for integer factoring, quantum support vector machines for machine learning, and quantum linear system algorithms for solving systems of linear equations. Variational algorithms, including the Quantum Approximate Optimisation Algorithm (QAOA) and the Variational Quantum Eigensolver (VQE), are receiving significant attention as promising approaches for near-term devices: a short parameterised quantum circuit prepares a trial state, and a classical optimiser tunes the circuit parameters to minimise a measured cost. Quantum simulation, quantum semidefinite programming (SDP) solvers and quantum annealing further expand the toolkit for tackling hard computational problems.
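To make the variational idea concrete, the following is a minimal NumPy sketch of a VQE-style loop, not code from the paper. It assumes a hypothetical one-qubit Hamiltonian H = Z + 0.5X, a single-parameter R_y ansatz, and exact state-vector expectation values in place of the sampled measurements a real device would return. The gradient uses the parameter-shift rule, which is exact for R_y rotations.

```python
import numpy as np

# Pauli matrices
X = np.array([[0, 1], [1, 0]], dtype=float)
Z = np.array([[1, 0], [0, -1]], dtype=float)

# Hypothetical toy Hamiltonian chosen for illustration: H = Z + 0.5 X
H = Z + 0.5 * X


def ansatz(theta):
    """Trial state Ry(theta)|0> = [cos(theta/2), sin(theta/2)] (real amplitudes)."""
    return np.array([np.cos(theta / 2), np.sin(theta / 2)])


def energy(theta):
    """<psi(theta)|H|psi(theta)>; on hardware this would be estimated from measurement shots."""
    psi = ansatz(theta)
    return float(psi @ H @ psi)


def parameter_shift_grad(theta):
    """Exact derivative of energy() for an Ry rotation via the parameter-shift rule."""
    return 0.5 * (energy(theta + np.pi / 2) - energy(theta - np.pi / 2))


def vqe(theta0=0.1, lr=0.2, steps=200):
    """Classical outer loop: plain gradient descent on the single circuit parameter."""
    theta = theta0
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, energy(theta)


theta_opt, e_opt = vqe()
exact = np.linalg.eigvalsh(H)[0]  # smallest eigenvalue, for comparison
print(f"VQE energy {e_opt:.4f}, exact ground energy {exact:.4f}")
```

Because H here is real, a real-amplitude R_y ansatz can reach its ground state, so the loop should recover the exact ground energy of about -1.118; larger Hamiltonians need deeper ansatz circuits with more parameters.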

A major focus is applying these algorithms to combinatorial optimisation problems such as the Travelling Salesman Problem, resource allocation and scheduling. Quantum algorithms are being investigated to accelerate linear programming and semidefinite programming, both convex optimisation problems, as well as non-convex integer programming. Researchers encode problems as Ising models, in which binary decisions become spins and the objective becomes an energy function, so that they can be tackled by quantum annealing, and they are exploring graph partitioning techniques for these problems. Applications span numerous industries, including logistics and supply chain management, where quantum optimisation is being tested on problems such as vehicle routing and port optimisation.
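As an illustration of the Ising encoding, the sketch below (a toy written for this summary, not taken from the paper) expresses Max-Cut, a graph partitioning problem, on a hypothetical five-edge graph as an Ising energy with couplings J_ij = 1, so that minimising the energy maximises the cut. A brute-force search over all spin assignments stands in for the annealer; this is only feasible for a handful of variables.

```python
import itertools

# Hypothetical example graph: a 4-cycle 0-1-2-3-0 plus the chord (0, 2)
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
N_NODES = 4


def ising_energy(spins, edges=EDGES):
    """Ising energy H(s) = sum over edges of J_ij * s_i * s_j, with J_ij = 1 and no local fields."""
    return sum(spins[i] * spins[j] for i, j in edges)


def cut_size(spins, edges=EDGES):
    """Edges whose endpoints get opposite spins, i.e. the sum of (1 - s_i * s_j) / 2."""
    return sum((1 - spins[i] * spins[j]) // 2 for i, j in edges)


# Brute force over all 2^n spin assignments (stand-in for an annealer; viable only for tiny n)
all_spins = list(itertools.product((-1, 1), repeat=N_NODES))
best = min(all_spins, key=ising_energy)

print("lowest-energy spins:", best)
print("energy:", ising_energy(best), "cut size:", cut_size(best))
```

Each edge contributes +1 to the energy when its endpoints share a spin and -1 when they differ, so the energy equals the number of edges minus twice the cut size. An annealer would be given the same couplings and asked for a low-energy spin configuration.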

Scheduling problems, such as the scheduling of television commercials, are also being studied. In finance, quantum algorithms and tensor networks are being applied to portfolio optimisation, while in machine learning, quantum support vector machines show promise for big-data classification. More broadly, quantum approaches are being tested on resource-constrained project scheduling and general project timeline optimisation. Current quantum hardware, notably systems from D-Wave and IBM Quantum, provides platforms for implementing and testing these algorithms. Although scaling quantum processors remains challenging, researchers continue to develop new algorithms and improve existing ones, driven by the goal of quantum advantage: demonstrating that quantum computers can solve problems that are intractable for classical computers. Emerging trends include quantum interior point methods, hybrid quantum-classical algorithms, quantum transfer learning, and studies of how data encoding affects model performance.

Quantum Operations Research Foundations and Principles

This work lays a foundation for industrial engineers and operations researchers entering quantum computing, a field the authors term Quantum Operations Research (QOR). It begins with the fundamental unit of quantum computation, the qubit, which, unlike a classical bit, can exist in a superposition of the states 0 and 1. A single-qubit state can be visualised as a point on the Bloch sphere, which helps in understanding superposition and related phenomena; measuring the qubit yields 0 or 1 with probabilities given by the squared magnitudes of its amplitudes. Beyond superposition, the study highlights entanglement, in which the measurement outcomes of two or more qubits are correlated in ways no classical description can reproduce, regardless of the distance between them, and interference, in which amplitudes add or cancel so that an algorithm can amplify the probability of correct answers and suppress incorrect ones.
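The following NumPy sketch, written for this summary rather than taken from the paper, computes the Bloch-sphere coordinates and measurement probabilities of single-qubit states. It also hints at entanglement: tracing out one qubit of the Bell state (|00> + |11>)/sqrt(2) leaves the other with a zero-length Bloch vector, meaning that qubit on its own has no definite state. All function names are hypothetical.

```python
import numpy as np

PAULIS = (
    np.array([[0, 1], [1, 0]], dtype=complex),     # X
    np.array([[0, -1j], [1j, 0]], dtype=complex),  # Y
    np.array([[1, 0], [0, -1]], dtype=complex),    # Z
)


def bloch_vector(rho):
    """Bloch coordinates (x, y, z) = (Tr(rho X), Tr(rho Y), Tr(rho Z)) of a 2x2 density matrix."""
    return np.array([np.trace(rho @ p).real for p in PAULIS])


def pure_density(state):
    """Density matrix |psi><psi| of a normalised single-qubit state vector."""
    psi = np.asarray(state, dtype=complex)
    return np.outer(psi, psi.conj())


def measurement_probs(state):
    """Born rule: probabilities of reading 0 and 1 in the computational basis."""
    return np.abs(np.asarray(state)) ** 2


def reduced_density_qubit0(two_qubit_state):
    """Trace out qubit 1 of a two-qubit pure state (basis order |00>, |01>, |10>, |11>)."""
    psi = np.asarray(two_qubit_state, dtype=complex).reshape(2, 2)  # psi[a, b] = amplitude of |ab>
    return psi @ psi.conj().T


zero = np.array([1, 0])
plus = np.array([1, 1]) / np.sqrt(2)        # equal superposition of 0 and 1
bell = np.array([1, 0, 0, 1]) / np.sqrt(2)  # (|00> + |11>) / sqrt(2)

print(bloch_vector(pure_density(zero)))            # north pole, (0, 0, 1)
print(bloch_vector(pure_density(plus)))            # close to (1, 0, 0), on the equator
print(measurement_probs(plus))                     # roughly 50/50 outcomes
print(bloch_vector(reduced_density_qubit0(bell)))  # close to the zero vector: maximally mixed
```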

The authors then describe the circuit model, the standard approach to quantum computation, in which algorithms are built from sequences of quantum gates, much as classical circuits are built from logic gates. They survey the current landscape of quantum hardware, acknowledging its limitations and the need to translate theoretical concepts for readers with an operations research background. The work proposes a pathway for building competence in QOR, emphasising the importance of applied questions and the cyclical relationship between theory and application. The authors argue that industrial engineers are uniquely positioned to lead this interdisciplinary space, bridging theoretical advances and practical problem-solving, which requires translating complex quantum concepts into actionable insights for real-world applications.
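For small registers, a circuit can be simulated classically by multiplying the state vector by one unitary matrix per gate. The sketch below, a hypothetical helper not drawn from the paper, applies a Hadamard gate and then a CNOT to |00>, producing an entangled Bell state, and samples 1,000 measurement shots with a fixed random seed. Qubit 0 is assumed to be the most significant bit of the basis index; frameworks differ on this ordering convention.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)  # Hadamard gate
I2 = np.eye(2)                                 # identity on one qubit
CNOT = np.array([[1, 0, 0, 0],                 # control qubit 0, target qubit 1
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]])


def run_circuit(gates, n_qubits=2):
    """Apply full-register unitaries, in order, to the all-zeros state |0...0>."""
    state = np.zeros(2 ** n_qubits)
    state[0] = 1.0
    for U in gates:
        state = U @ state
    return state


circuit = [np.kron(H, I2), CNOT]  # H on qubit 0, then CNOT
bell = run_circuit(circuit)
probs = np.abs(bell) ** 2         # Born-rule outcome probabilities

rng = np.random.default_rng(seed=0)
shots = rng.choice(4, size=1000, p=probs)
counts = {format(k, "02b"): int(np.sum(shots == k)) for k in range(4)}
print(bell)
print(counts)
```

Only the outcomes 00 and 11 appear, in roughly equal numbers: each qubit is individually random, but the two are perfectly correlated. The cost of this approach doubles with every added qubit, which is why state-vector simulation is limited to small instances.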

NISQ Hardware and the Road to Fault Tolerance

The authors chart a course through this emerging field, outlining foundational principles and surveying algorithmic advances relevant to industrial engineering and operations research. They stress understanding hardware constraints before deploying algorithms, and using simulators to validate quantum approaches on small instances and to develop error mitigation strategies. Current hardware belongs to the “Noisy Intermediate-Scale Quantum” (NISQ) era, in which devices contain tens to a few hundred physical qubits that are prone to errors and have limited coherence times. Despite these limitations, NISQ devices such as Google’s Sycamore and IBM’s Eagle processors have reached notable milestones, performing specific, narrowly defined computations that were reported to outpace classical supercomputers.
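One widely used error mitigation strategy that can be prototyped on a simulator is zero-noise extrapolation: run the circuit at deliberately amplified noise levels (for example by gate folding, where a gate is followed by its inverse and then applied again), then extrapolate the measured expectation value back to zero noise. The sketch below is a toy under stated assumptions, not the paper’s method: it assumes an ideal expectation value of 1.0 and a hypothetical noise model in which each of 20 gates shrinks the signal by a factor (1 - p) with p = 0.01, and it fits a quadratic through noise scales 1, 2 and 3.

```python
import numpy as np


def noisy_expectation(scale, ideal=1.0, p=0.01, n_gates=20):
    """Hypothetical noise model: each gate multiplies the signal by (1 - p); scale amplifies the noise."""
    return ideal * (1 - p) ** (scale * n_gates)


scales = np.array([1.0, 2.0, 3.0])
values = np.array([noisy_expectation(s) for s in scales])

# Richardson-style extrapolation: exact quadratic fit through three points, evaluated at zero noise
coeffs = np.polyfit(scales, values, deg=2)
zne_estimate = float(np.polyval(coeffs, 0.0))
raw = float(values[0])  # unmitigated result at the native noise level

print(f"raw: {raw:.4f}, zero-noise extrapolated: {zne_estimate:.4f}, ideal: 1.0000")
```

Under this model the extrapolated estimate is about 0.994, against about 0.818 for the raw value. Real hardware noise is not exactly exponential, and the extrapolation also amplifies shot noise, so gains in practice are smaller.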

Because errors accumulate with every operation, these devices can run only short gate sequences, which has prompted work on error mitigation techniques and hybrid quantum-classical algorithms. The long-term goal remains fault-tolerant quantum computers, which use quantum error correction to encode stable logical qubits capable of running deep, complex algorithms. Achieving fault tolerance is expected to require millions of physical qubits, with estimates of around 100 physical qubits per logical qubit, depending on physical error rates. The threshold theorem underpins this approach: if physical error rates fall below a certain threshold, logical error rates can be suppressed to arbitrarily low levels by using larger error-correcting codes.
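The link between physical error rates and overhead can be made concrete with a rough calculation. The sketch below assumes the rotated surface code, which uses 2d^2 - 1 physical qubits per logical qubit at code distance d, and the common rule of thumb p_L ≈ 0.1 (p/p_th)^((d+1)/2) with a threshold p_th = 1%. These constants and the error budget are illustrative assumptions, not figures from the paper, and full resource estimates include further overheads.

```python
def logical_error_rate(p_phys, d, p_th=1e-2, prefactor=0.1):
    """Rule-of-thumb surface-code logical error rate per round (assumed constants)."""
    return prefactor * (p_phys / p_th) ** ((d + 1) / 2)


def required_distance(p_phys, p_target, p_th=1e-2, prefactor=0.1):
    """Smallest odd code distance whose estimated logical error rate meets p_target."""
    if p_phys >= p_th:
        raise ValueError("error correction only helps below the threshold")
    d = 3
    while logical_error_rate(p_phys, d, p_th, prefactor) > p_target:
        d += 2
    return d


def physical_per_logical(d):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


target = 5e-12  # hypothetical per-round logical error budget
for p in (1e-3, 5e-4, 1e-4):
    d = required_distance(p, target)
    print(f"p = {p:.0e}: distance {d}, {physical_per_logical(d)} physical qubits per logical qubit")
```

Lower physical error rates shrink the required distance quickly, which is why the overhead per logical qubit, and hence the total machine size, depends so strongly on hardware quality. For reference, a distance-7 code already needs 97 physical qubits per logical qubit.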

Several qubit technologies are in use. Superconducting qubits currently lead the field, with gate operations taking tens of nanoseconds, while trapped-ion qubits offer long coherence times but slower gates. To scale beyond roughly 100 qubits, researchers are exploring modular architectures that link smaller processors together. Other technologies, including photonic qubits, neutral atoms and semiconductor spin qubits, are also under investigation. Beyond gate-based machines, quantum annealers, such as those made by D-Wave Systems, take a distinct approach to optimisation: the cost function is encoded in the energy of a physical system, which is evolved slowly so that it settles into a low-energy state corresponding to a good solution.

Quantum Computing For Industrial Applications

This work presents a comprehensive overview of quantum computing and its potential impact on industrial engineering and operations research. The authors argue that, although the technology is still nascent, it offers significant opportunities for advances in decision-making, optimisation and simulation across many industries. They detail the foundational principles of quantum computing, the current hardware and software landscape, and key algorithmic developments, including approaches to linear algebra, optimisation and stochastic simulation. They also distinguish between current noisy intermediate-scale quantum (NISQ) devices and the future prospect of scalable, fault-tolerant quantum computers.

Achieving fault tolerance, that is, reliable operation despite inherent errors, remains a major engineering challenge, with ongoing research on topological qubits and on improved superconducting and trapped-ion qubits. The authors weigh the strengths and weaknesses of the leading technologies: superconducting qubits offer fast gates but shorter coherence times, whereas trapped-ion qubits offer long coherence times but slower gates. Scaling either technology to large numbers of qubits remains difficult. The study also notes a limitation of current quantum annealers: they are designed for specific optimisation problems rather than general-purpose computation.

While acknowledging these challenges, the authors emphasise that industrial engineers are uniquely positioned to bridge theoretical advances and practical applications, and so to shape how quantum computing is used for real-world problem-solving. Future research will likely focus on overcoming the hardware limitations of current qubit technologies and on exploring new applications of quantum computing across diverse industrial sectors.

👉 More information

🗞 A Gateway to Quantum Computing for Industrial Engineering

🧠 ArXiv: https://arxiv.org/abs/2510.20620