Reservoir computing offers a compelling approach to machine learning, particularly for analysing sequential data, and is well suited to implementation on emerging quantum technologies. Emanuele Ricci, Francesco Monzani, and Luca Nigro, all from the University of Milan, together with their colleagues, now demonstrate a method that enhances this technique by introducing controllable damping into the quantum system. Their research addresses a key limitation of current reservoir computing designs: the difficulty of keeping operation stable and tunable over long periods. By inducing controlled damping on each qubit, the team achieves stable, tunable, circuit-level amplification of the zero state. This counteracts information loss and enables the processing of arbitrarily long data sequences, far beyond the coherence time of individual qubits. The approach also exploits correlations between qubits to improve memory, paving the way for robust and scalable quantum reservoir computing on fault-tolerant hardware.

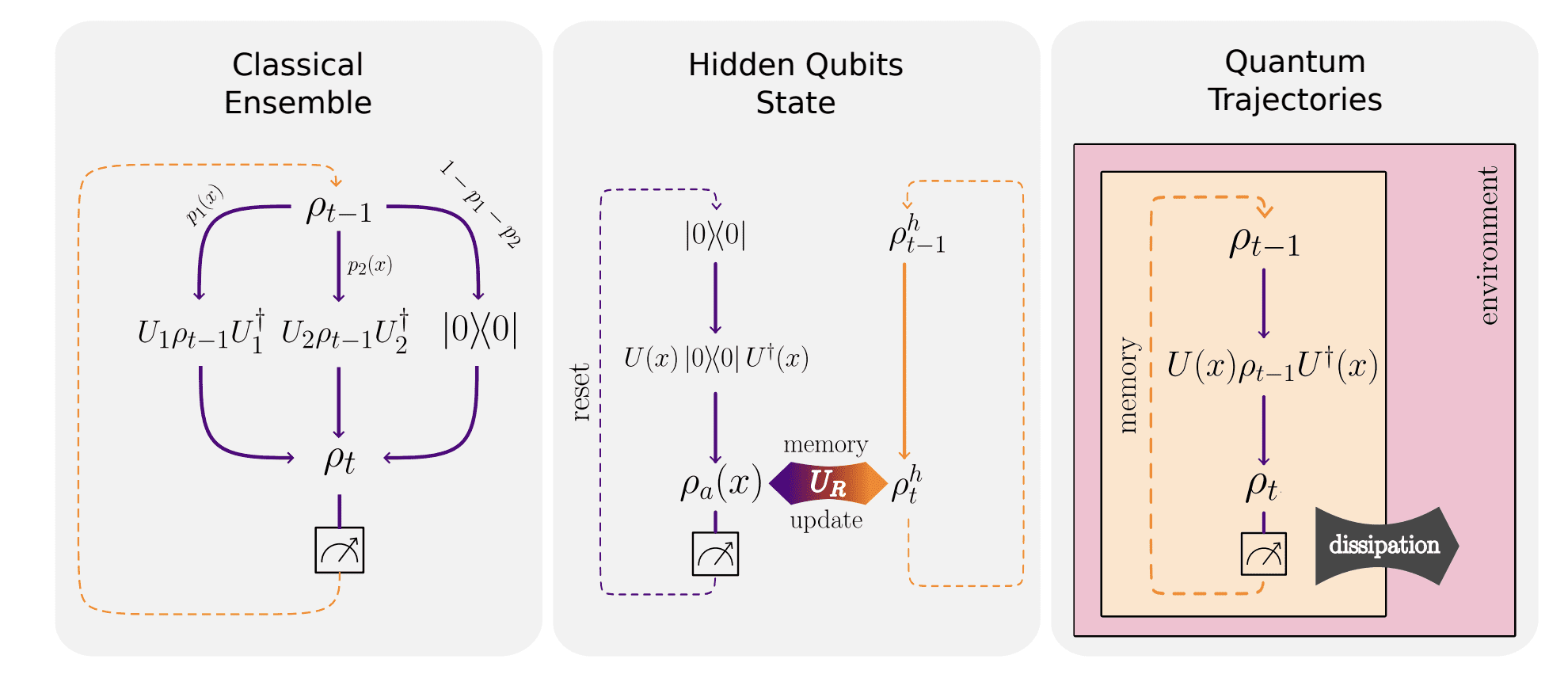

Training variational quantum circuits remains challenging, which is one motivation for reservoir computing, where only a classical readout is trained. This work proposes an algorithm that induces damping by applying a controlled rotation to each qubit in the reservoir. The result is tunable, circuit-level amplification of the zero state that keeps the system away from the maximally mixed (completely random) state. This controlled damping counteracts the information loss caused by repeated mid-circuit measurements and, importantly, allows the system to process arbitrarily long input sequences.
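At the level of a single qubit, the "amplification of the zero state" can be pictured as an amplitude-damping channel, which moves population from |1⟩ to |0⟩. The sketch below illustrates this under two assumptions that do not come from the paper. First, the damping is modeled with the textbook amplitude-damping Kraus operators. Second, the damping probability is tied to a rotation angle θ through γ = sin²(θ/2), the relation produced by one common controlled-rotation construction. The function names are hypothetical.

```python
import math


def damping_strength(theta: float) -> float:
    """Hypothetical parametrization: damping probability gamma = sin^2(theta / 2)."""
    return math.sin(theta / 2) ** 2


def amplitude_damp(rho, gamma: float):
    """Apply a single-qubit amplitude-damping channel to a 2x2 density matrix.

    Kraus operators K0 = [[1, 0], [0, sqrt(1 - gamma)]] and K1 = [[0, sqrt(gamma)], [0, 0]]
    move a fraction gamma of the |1> population into |0> and shrink the coherences.
    """
    p0, coh, p1 = rho[0][0], rho[0][1], rho[1][1]
    shrink = math.sqrt(1 - gamma)
    return [[p0 + gamma * p1, shrink * coh],
            [shrink * coh.conjugate(), (1 - gamma) * p1]]


# Start in |+><+| and apply the channel a few times: the |0> population grows.
rho = [[0.5 + 0j, 0.5 + 0j], [0.5 + 0j, 0.5 + 0j]]
gamma = damping_strength(math.pi / 2)  # about 0.5
for step in range(3):
    rho = amplitude_damp(rho, gamma)
    print(step, round(rho[0][0].real, 4))
```

A smaller θ gives gentler damping, so in this picture the rotation angle is the knob that tunes how strongly the zero state is favored.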

Quantum Reservoir Computing Implementation Details

The paper also provides detailed methodological information on quantum reservoir computing, a quantum version of reservoir computing. Reservoir computing is itself a form of recurrent neural network and is well suited to processing time-series data. Quantum reservoir computing aims to exploit quantum properties such as superposition and entanglement to improve performance. The authors discuss the challenges of training and evaluating these systems and explain the techniques they use. Quantum systems are fragile: noise causes them to lose coherence. Depolarization, a specific type of noise, drives the state toward the maximally mixed state, effectively randomizing it.
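A common way to model the randomizing effect of depolarization is the single-qubit depolarizing channel ρ → (1 - p)ρ + p·I/2. This channel shrinks the Bloch vector by a factor (1 - p) each time it is applied. The sketch below uses a hypothetical per-step probability p = 0.1 and shows repeated applications driving the state toward the maximally mixed state at the center of the Bloch sphere. Treating each measurement round as one depolarizing step is an illustrative assumption, not the paper's noise model.

```python
def depolarize(bloch, p: float):
    """Depolarizing channel rho -> (1 - p) rho + p I/2, written on a Bloch vector (x, y, z)."""
    return tuple((1 - p) * component for component in bloch)


def bloch_length(bloch) -> float:
    """Length of the Bloch vector: 1 for a pure state, 0 for the maximally mixed state."""
    return sum(c * c for c in bloch) ** 0.5


# Start in |0> (Bloch vector pointing to +z) and depolarize 50 times with p = 0.1.
state = (0.0, 0.0, 1.0)
for _ in range(50):
    state = depolarize(state, 0.1)
print(bloch_length(state))  # 0.9**50, roughly 0.005: almost completely random
```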

Repeated measurements on a qubit, without mitigation, lead to depolarization. This is the central difficulty with mid-circuit measurements: they are needed to read out the reservoir at each step, but they also degrade its state. The paper then explains how these issues are addressed. A second concern is overfitting, which occurs when a model fits the training data, including its noise, so closely that it performs poorly on unseen data. The risk depends on the number of trainable parameters relative to the amount of training data; a model is likely to overfit when the number of parameters approaches the number of data points. This is why the authors carefully control the number of observables used as readout, and why adding observables can improve performance as long as the parameter count stays well below the number of training samples.
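The parameter-counting argument can be made concrete. The sketch below assumes a hypothetical readout built from single-qubit expectation values ⟨Z_i⟩, optionally extended with two-qubit correlators ⟨Z_i Z_j⟩, plus a bias term. It flags a configuration when the number of readout weights exceeds a hypothetical fraction (here 0.5) of the training samples. Neither the observable set nor the threshold comes from the paper.

```python
from math import comb


def readout_weights(n_qubits: int, use_correlators: bool = True) -> int:
    """Count linear readout weights: one per observable plus one bias.

    Hypothetical observable set: <Z_i> for every qubit and, optionally,
    <Z_i Z_j> for every unordered pair of qubits.
    """
    n_observables = n_qubits
    if use_correlators:
        n_observables += comb(n_qubits, 2)
    return n_observables + 1


def likely_to_overfit(n_weights: int, n_train: int, max_ratio: float = 0.5) -> bool:
    """Flag configurations whose parameter count approaches the number of training samples."""
    return n_weights / n_train > max_ratio


for n in (4, 8, 16):
    w = readout_weights(n)
    print(n, "qubits:", w, "weights, overfit risk with 200 samples:", likely_to_overfit(w, 200))
```

With pairwise correlators, the feature count grows quadratically with the number of qubits. The training set therefore has to grow with it before the extra observables can pay off.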

The NARMA (Nonlinear AutoRegressive Moving Average) task is a standard benchmark for testing whether recurrent networks can learn temporal dependencies. The authors detail how the task was implemented and evaluated. They show that the quantum reservoir computer learns it even for high orders, which indicates that it captures long-range temporal dependencies. Artificial damping counteracts the depolarization caused by mid-circuit measurements: by deliberately introducing controlled damping, the authors stabilize the quantum state and prevent it from becoming completely randomized.
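For reference, a minimal generator for the NARMA task is sketched below. It uses the widely used NARMA10-style recurrence y(t+1) = 0.3 y(t) + 0.05 y(t) Σ_{i=0}^{N-1} y(t-i) + 1.5 u(t-N+1) u(t) + 0.1, with inputs u(t) drawn uniformly from [0, 0.5], generalized to order N. The coefficients the authors used for each order are not stated here and may differ, and with these coefficients the recurrence may become unstable at large N. The normalized mean squared error (NMSE) helper is a common evaluation choice, assumed rather than taken from the paper.

```python
import random


def narma(order: int, length: int, seed: int = 0):
    """Return inputs u and NARMA-`order` targets y (NARMA10-style coefficients)."""
    rng = random.Random(seed)
    u = [rng.uniform(0.0, 0.5) for _ in range(length)]
    y = [0.0] * length
    # Start once `order` past inputs and outputs are available.
    for t in range(order - 1, length - 1):
        window = sum(y[t - i] for i in range(order))  # y(t) + ... + y(t - order + 1)
        y[t + 1] = (0.3 * y[t]
                    + 0.05 * y[t] * window
                    + 1.5 * u[t - order + 1] * u[t]
                    + 0.1)
    return u, y


def nmse(predictions, targets) -> float:
    """Mean squared error normalized by the variance of the targets."""
    n = len(targets)
    mean = sum(targets) / n
    variance = sum((t - mean) ** 2 for t in targets) / n
    mse = sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n
    return mse / variance


u, y = narma(order=10, length=500)
print(round(nmse([sum(y) / len(y)] * len(y), y), 3))  # the mean predictor has NMSE 1 by construction
```

A trained reservoir readout is judged by how far below 1 its NMSE falls on held-out data.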

The authors also describe the circuit elements that implement the artificial damping, explaining how the controlled rotation gate sets the damping rate. Taken together, the work identifies the problems that mid-circuit measurements pose for quantum reservoir computing and offers artificial damping as a novel solution. Overfitting is kept in check by controlling the number of trainable parameters relative to the amount of training data. The NARMA results show that the quantum reservoir computer can learn complex temporal dependencies.
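One standard way to realize amplitude damping with a controlled rotation is sketched below as a small state-vector simulation. An ancilla prepared in |0⟩ is rotated by RY(θ) only when the reservoir qubit is |1⟩. A CNOT from the ancilla back onto the reservoir qubit then transfers that amplitude to |0⟩, and the ancilla is discarded or reset. This reproduces the damping channel with γ = sin²(θ/2), so θ sets the damping rate. The gate sequence and the function name are assumptions for illustration; the paper's circuit may differ in detail.

```python
import math


def damp_via_ancilla(alpha, beta, theta: float):
    """Reduced density matrix of alpha|0> + beta|1> after one ancilla-assisted damping step.

    Assumed circuit: CRY(theta) (control = system, target = ancilla in |0>),
    then CNOT (control = ancilla, target = system), then the ancilla is traced out.
    """
    # Amplitudes indexed by (system bit, ancilla bit).
    amp = {(0, 0): alpha, (0, 1): 0j, (1, 0): beta, (1, 1): 0j}
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    # CRY: rotate the ancilla only in the branch where the system is |1>.
    a0, a1 = amp[(1, 0)], amp[(1, 1)]
    amp[(1, 0)], amp[(1, 1)] = c * a0 - s * a1, s * a0 + c * a1
    # CNOT: flip the system in the branch where the ancilla is |1>.
    amp[(0, 1)], amp[(1, 1)] = amp[(1, 1)], amp[(0, 1)]
    # Partial trace over the ancilla: rho[i][j] = sum_a amp(i, a) * conj(amp(j, a)).
    return [[sum(amp[(i, a)] * amp[(j, a)].conjugate() for a in (0, 1))
             for j in (0, 1)] for i in (0, 1)]


rho = damp_via_ancilla(1 / math.sqrt(2), 1 / math.sqrt(2), math.pi / 2)
print(round(rho[0][0].real, 4), round(rho[1][1].real, 4))  # 0.75 0.25, i.e. gamma = 0.5
```

Setting θ = 0 leaves the qubit untouched, while θ = π resets it fully to |0⟩; intermediate angles interpolate between the two.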

Controlled Damping Stabilizes Quantum Reservoir Computing

Reservoir computing represents a promising approach to machine learning, particularly for processing sequential data using quantum devices. This method leverages the computational power of qubits without the demanding training procedures typically associated with quantum circuits. Researchers have developed a new technique for stabilizing and tuning the evolution of these quantum reservoirs, addressing a key challenge in the field. The innovation centers on inducing controlled damping within the reservoir, effectively amplifying the zero state and preventing information loss caused by repeated measurements.
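In reservoir computing only the readout is trained, typically by linear or ridge regression from measured features to targets. The sketch below assumes that setting. Each row of `features` holds hypothetical measured expectation values for one time step plus a constant bias entry. The weights solve the regularized normal equations (XᵀX + λI)w = Xᵀy by Gaussian elimination. It illustrates the general technique and is not the authors' training code.

```python
def ridge_readout(features, targets, reg: float = 1e-6):
    """Fit weights w minimizing ||X w - y||^2 + reg * ||w||^2 (ridge regression).

    features: list of rows, one per time step (include a constant 1.0 for a bias).
    targets: list of target values, one per row.
    """
    n = len(features[0])
    # Normal equations: (X^T X + reg * I) w = X^T y.
    A = [[sum(row[i] * row[j] for row in features) + (reg if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(row[i] * t for row, t in zip(features, targets)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (b[i] - sum(A[i][j] * w[j] for j in range(i + 1, n))) / A[i][i]
    return w


def predict(weights, features):
    """Linear readout: dot product of the weights with each feature row."""
    return [sum(w * x for w, x in zip(weights, row)) for row in features]


xs = [i / 10 for i in range(10)]
feats = [[1.0, x] for x in xs]  # bias entry plus one hypothetical measured feature
w = ridge_readout(feats, [2.0 + 3.0 * x for x in xs])
print([round(v, 3) for v in w])  # close to [2.0, 3.0]
```

Because the quantum part is never optimized, training reduces to this single linear solve, which is the practical advantage the paragraph above describes.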

This controlled damping allows the reservoir to retain information about past inputs without classical memory buffers and enables stable computation over extended periods. Unlike previous methods, which are limited by the coherence time of individual qubits, this approach can in principle process arbitrarily long input sequences. The team found that correlations between qubits further enhance memory retention, highlighting the system's potential as a robust computational reservoir. Standard benchmarks demonstrate robust and scalable reservoir computing on fault-tolerant hardware, a significant step towards practical quantum machine learning.

The induced dissipation counteracts the information decay caused by repeated measurements, so the reservoir retains memory of past inputs far longer than before and sustains its computational performance over time. In tests on superconducting hardware, the algorithm outperformed existing approaches that rely on intrinsic noise, including on newer devices. Controlled qubit damping, combined with correlations between qubits, lets the reservoir effectively “remember” past inputs while staying in a stable computational state, paving the way for more complex and reliable quantum machine learning applications. The results demonstrate a robust strategy for scalable, noise-independent quantum reservoir computing compatible with future fault-tolerant quantum devices.
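Why added dissipation sustains the state can be seen on the Bloch z-component of a single qubit. Assume depolarizing noise z → (1 - p)z followed by amplitude damping z → (1 - γ)z + γ, both illustrative single-qubit models with hypothetical values of p and γ. The per-step map then has the fixed point z* = γ / (1 - (1 - γ)(1 - p)). This value is strictly positive whenever γ > 0, so the qubit no longer drifts to the maximally mixed state at z = 0.

```python
def step_z(z: float, p_depol: float, gamma: float) -> float:
    """One reservoir step on the Bloch z-component: depolarizing noise, then amplitude damping."""
    z = (1 - p_depol) * z           # depolarization shrinks z toward 0
    return (1 - gamma) * z + gamma  # damping pulls z toward +1 (the |0> state)


def fixed_point(p_depol: float, gamma: float) -> float:
    """Stationary value z* solving z = step_z(z, p_depol, gamma)."""
    denom = 1 - (1 - gamma) * (1 - p_depol)
    if denom == 0:
        raise ValueError("no unique fixed point when p_depol = gamma = 0")
    return gamma / denom


for gamma in (0.0, 0.2):
    z = 1.0  # start in |0>
    for _ in range(200):
        z = step_z(z, p_depol=0.1, gamma=gamma)
    print(gamma, round(z, 4), round(fixed_point(0.1, gamma), 4))
# gamma = 0 decays to 0.0; gamma = 0.2 settles near 0.7143
```

The damping strength trades off against noise: a larger γ holds the state further from the random state, while too large a γ would erase the input-dependent part of the state.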

Controlled Dissipation Extends Quantum Memory Capacity

This research introduces a new method for enhancing quantum reservoir computing, a promising approach for processing sequential data on emerging quantum devices. The team induces controlled dissipation within the quantum reservoir, amplifying the zero state and counteracting the information loss caused by repeated measurements. This controlled damping keeps the system stable over extended periods, allowing it to process arbitrarily long input sequences, beyond the coherence time of individual qubits. The results show that the method improves the retention of information within the reservoir and that correlations between qubits further enhance its memory. Through standard benchmarks, the researchers demonstrate robust and scalable reservoir computing on fault-tolerant hardware. Importantly, they also validated the algorithm on currently available superconducting quantum computers, where it performed better than approaches relying solely on intrinsic noise.
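Retention of past inputs is often quantified in reservoir computing by the short-term memory capacity. For each delay k, a readout is trained to reproduce u(t - k), and the squared correlation between its output and the true delayed input is summed over k. The sketch below computes that sum from given predictions. Whether the paper uses this exact metric is an assumption, and the toy inputs are hypothetical.

```python
def squared_correlation(a, b) -> float:
    """Squared Pearson correlation between two equal-length sequences (0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov * cov / (var_a * var_b)


def memory_capacity(inputs, predictions_by_delay) -> float:
    """Sum over delays k of r^2 between u(t - k) and the readout trained to recall it.

    predictions_by_delay maps k to a list aligned with `inputs`; entry t estimates
    inputs[t - k], so only t >= k is scored.
    """
    total = 0.0
    for k, preds in predictions_by_delay.items():
        total += squared_correlation(inputs[:len(inputs) - k], preds[k:])
    return total


# Toy check: perfect recall for delays 1 to 3 and a useless constant readout for delay 4.
inputs = [((7 * i) % 11) / 10 for i in range(60)]
preds = {k: [0.0] * k + inputs[:len(inputs) - k] for k in (1, 2, 3)}
preds[4] = [0.5] * len(inputs)
print(round(memory_capacity(inputs, preds), 6))  # 3.0
```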

👉 More information

🗞 Quantum reservoir computing induced by controllable damping

🧠 ArXiv: https://arxiv.org/abs/2508.14621