Fault-tolerant quantum computers must correct the errors that inevitably arise in quantum hardware. Distributed computing, in which smaller quantum modules are networked together, offers a promising path toward the scale this requires. Nitish Kumar Chandra from the University of Pittsburgh, Eneet Kaur from Cisco Quantum Lab, and Kaushik P. Seshadreesan, along with their colleagues, investigate how architectural choices affect the resource demands of distributed quantum computers. Their work systematically analyzes three architectures, each with its own strategy for connecting quantum modules and operating on them, and shows how these choices affect the number of entangled qubit pairs required and the effort needed to generate them. The comparison offers guidance for designing practical fault-tolerant quantum computers that can work around the limitations of current hardware within realistic resource budgets.

Distributed Quantum Computing and Error Correction

Scientists are exploring methods to build larger, more powerful quantum computers by connecting smaller quantum processors. This approach, known as distributed quantum computing, aims to overcome the limitations of building a single, massive quantum processor. A central challenge is maintaining the integrity of quantum information across these connected systems, requiring robust methods for detecting and correcting errors. Researchers are investigating how to distribute error correction across multiple processing units, accounting for the imperfections of real-world components and the challenges of transmitting quantum information.

The work draws on several key concepts. Quantum error correction protects logical information, with surface codes a leading candidate, and lattice surgery is a technique for performing logical operations between surface-code patches. Quantum networking provides the infrastructure that connects distributed processors: entanglement distillation (also called purification) improves the fidelity of shared entangled states, and multiplexing raises the rate at which they are generated. GHZ states and quantum repeaters are also central to this research, as are the constraints imposed by today's noisy intermediate-scale quantum (NISQ) devices.
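Heralded entanglement generation is probabilistic, which is where multiplexing helps. The Python sketch below is a simplified model, not taken from the paper: it assumes each attempt on each of `m` parallel channels succeeds independently with probability `p`, so a time step succeeds with probability 1 - (1 - p)^m and the waiting time is geometrically distributed. The function names are hypothetical.

```python
import math

def success_prob_per_step(p, m):
    """Probability that at least one of m independent parallel attempts heralds success."""
    return 1 - (1 - p) ** m

def expected_steps(p, m=1):
    """Mean number of time steps until the first heralded Bell pair (geometric distribution)."""
    return 1 / success_prob_per_step(p, m)

def steps_for_confidence(p, m, target):
    """Smallest number of steps n such that P(at least one success within n steps) >= target."""
    q = success_prob_per_step(p, m)  # assumes q < 1
    return math.ceil(math.log(1 - target) / math.log(1 - q))

if __name__ == "__main__":
    p = 0.05  # hypothetical per-attempt success probability
    for m in (1, 4, 16):
        print(m, round(expected_steps(p, m), 2), steps_for_confidence(p, m, 0.99))
```

Under this model, multiplexing shortens the expected wait by roughly a factor of `m` when `m * p` is small, because 1 - (1 - p)^m is then close to `m * p`.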

GHZ-Based Distributed Quantum Computation Analysis

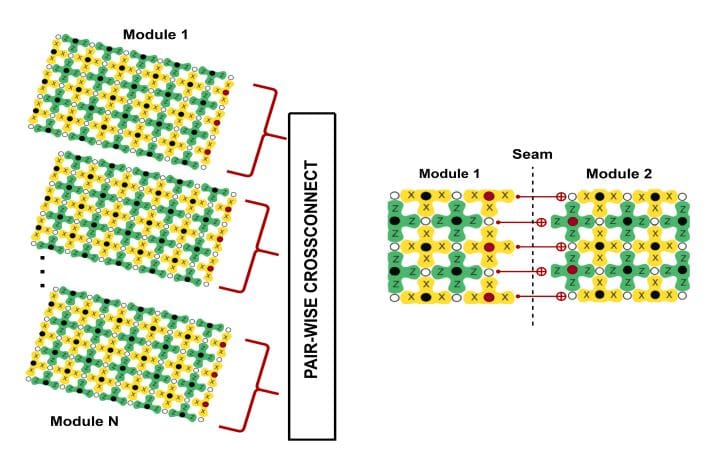

The researchers analyze three distinct architectures for fault-tolerant distributed quantum computers, each addressing the challenge of scaling quantum computation beyond the limits of a single processor. The study compares how resource requirements, such as the number of entangled pairs, scale with increasing code distance, which gives a measure of each design's efficiency. In the first architecture, small quantum modules are connected via Greenberger-Horne-Zeilinger (GHZ) states, which enable nonlocal measurements across modules. Each module uses electron spins for communication and nuclear spins as stable memory, a layout particularly suited to platforms such as nitrogen-vacancy centers in diamond.
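To make the GHZ-based approach concrete, here is an idealized, noiseless Python sketch of how a weight-four Z-type stabilizer can be measured nonlocally. Each of four modules holds one data qubit and one communication qubit, the communication qubits share a GHZ state, each module applies a local controlled-Z gate and measures its communication qubit in the X basis, and the product of the four ±1 outcomes equals the stabilizer eigenvalue. The one-data-qubit-per-module layout, the function names, and the absence of noise are assumptions for illustration; in practice the GHZ state is itself noisy and must be built from heralded entanglement between modules.

```python
import itertools
import math
import random
from collections import defaultdict

# Assumed layout: indices 0..k-1 are data qubits (one per module),
# indices k..2k-1 are the matching communication qubits that hold the GHZ state.

def data_plus_ghz(data_bits):
    """Data qubits in a Z-basis state, communication qubits in (|0...0> + |1...1>)/sqrt(2)."""
    k = len(data_bits)
    amp = 1 / math.sqrt(2)
    return {tuple(data_bits) + (0,) * k: amp, tuple(data_bits) + (1,) * k: amp}

def apply_cz(state, a, b):
    """Controlled-Z: flip the sign of basis states in which qubits a and b are both 1."""
    return {bits: (-amp if bits[a] and bits[b] else amp) for bits, amp in state.items()}

def apply_h(state, q):
    """Hadamard on qubit q of a sparse state vector (dict: bit tuple -> amplitude)."""
    out = defaultdict(complex)
    for bits, amp in state.items():
        for new in (0, 1):
            sign = -1 if (bits[q] == 1 and new == 1) else 1
            out[bits[:q] + (new,) + bits[q + 1:]] += sign * amp / math.sqrt(2)
    return dict(out)

def sample_communication_qubits(state, k, rng):
    """Sample a Z-basis outcome for the communication qubits (indices k..2k-1)."""
    probs = defaultdict(float)
    for bits, amp in state.items():
        probs[bits[k:]] += abs(amp) ** 2
    outcomes = [o for o, p in probs.items() if p > 1e-12]
    return rng.choices(outcomes, weights=[probs[o] for o in outcomes])[0]

def measure_z_stabilizer(data_bits, rng):
    """Nonlocally measure Z x Z x ... x Z on the data qubits using a shared GHZ state."""
    k = len(data_bits)
    state = data_plus_ghz(data_bits)
    for i in range(k):
        state = apply_cz(state, k + i, i)  # local two-qubit gate inside module i
    for i in range(k):
        state = apply_h(state, k + i)  # H followed by Z readout = X-basis measurement
    outcome = sample_communication_qubits(state, k, rng)
    return (-1) ** sum(outcome)  # product of the +/-1 X-basis outcomes

if __name__ == "__main__":
    rng = random.Random(1)
    for bits in itertools.product((0, 1), repeat=4):
        print(bits, measure_z_stabilizer(bits, rng))
```

Because the data qubits start in Z-basis states here, the measured parity is deterministic even though the individual outcomes are random; for a superposition the same circuit would project the data onto a stabilizer eigenspace.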

To generate high-fidelity entangled states, the team examined several protocols that trade resource consumption against accuracy: simpler protocols require fewer resources, while more complex ones that add multiple purification rounds achieve higher fidelity. The second architecture distributes a single large quantum error-correcting code across multiple modules. Operations that span module boundaries are implemented with entanglement-mediated gates, and errors in these boundary regions are still effectively suppressed. In the third architecture, each node holds an entire logical code block, and fault-tolerant operations between nodes use techniques such as lattice surgery and teleportation; the team also describes distributed lattice surgery between surface code blocks located on different processors.
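The fidelity-versus-cost trade-off of repeated purification can be illustrated with the textbook BBPSSW recurrence protocol for Werner states (Bennett et al., 1996). This is a stand-in chosen for illustration, not necessarily one of the protocols analyzed in the paper. The sketch assumes that each round consumes two pairs of equal fidelity, that the output is twirled back to Werner form, that a failed attempt discards both pairs, and that memory decoherence can be ignored; `bbpssw_round` and `purify` are hypothetical names.

```python
def bbpssw_round(f):
    """One BBPSSW round on two Werner pairs of fidelity f.

    Returns (output fidelity, success probability)."""
    e = (1 - f) / 3  # weight of each of the three unwanted Bell states
    p_success = f**2 + 2 * f * e + 5 * e**2
    f_out = (f**2 + e**2) / p_success
    return f_out, p_success

def purify(f0, rounds):
    """Iterate the recurrence, tracking expected raw pairs consumed per output pair."""
    f, cost = f0, 1.0
    history = [(0, f, cost)]
    for r in range(1, rounds + 1):
        f, p = bbpssw_round(f)
        # two input pairs per attempt, and on average 1/p attempts until success
        cost = 2 * cost / p
        history.append((r, f, cost))
    return history

if __name__ == "__main__":
    for r, f, cost in purify(0.9, 3):
        print(f"round {r}: fidelity {f:.4f}, expected raw pairs {cost:.1f}")
```

Under these assumptions each extra round raises the fidelity (for starting fidelities above 1/2) but multiplies the raw-pair cost by 2/p, which is the kind of trade-off between simpler and more complex protocols described in the paragraph above.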

Distributed Quantum Computation Resource Scaling and Noise

The research provides a comprehensive analysis of the resources needed for fault-tolerant distributed quantum computation across the three architectural types. Using the planar surface code and the toric code as representative examples, the researchers examined how the number of entangled pairs, and the number of attempts needed to generate them, scale with increasing code distance, offering guidance for near-term hardware operating under tight resource constraints. The analysis also shows that noise directly limits performance: the probability of obtaining a correct result falls as noise levels rise. This quantitative relationship between noise and error probability underscores that minimizing noise is essential for high-fidelity quantum computation.
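A back-of-the-envelope version of this scaling analysis can be sketched for a GHZ-based layout. The model below is our own simplification, not the paper's: it assumes every data qubit sits in its own module, so every stabilizer must be measured nonlocally with a GHZ state, and that a weight-w GHZ state is assembled from at least w - 1 Bell pairs with no purification. It uses the standard stabilizer counts of the unrotated planar surface code (d^2 + (d - 1)^2 data qubits, 4(d - 1) weight-3 and 2(d - 1)(d - 2) weight-4 checks) and of the distance-d toric code (2d^2 data qubits, 2d^2 weight-4 checks). The function names and the generation probability are hypothetical.

```python
def planar_surface_code(d):
    """Unrotated planar surface code: (data qubits, {check weight: number of checks})."""
    data = d**2 + (d - 1) ** 2
    return data, {3: 4 * (d - 1), 4: 2 * (d - 1) * (d - 2)}

def toric_code(d):
    """Toric code on a d x d torus: all vertex and plaquette checks have weight 4."""
    return 2 * d**2, {4: 2 * d**2}

def bell_pairs_per_round(checks):
    """Lower bound: a weight-w GHZ state needs at least w - 1 Bell pairs."""
    return sum(count * (w - 1) for w, count in checks.items())

def expected_attempts(pairs, p_gen):
    """Expected heralded generation attempts if each succeeds independently with p_gen."""
    return pairs / p_gen

if __name__ == "__main__":
    p_gen = 0.1  # hypothetical per-attempt success probability
    for d in (3, 5, 7, 9):
        for name, code in (("planar", planar_surface_code), ("toric", toric_code)):
            data, checks = code(d)
            # assume d rounds of syndrome extraction per logical cycle
            pairs = bell_pairs_per_round(checks) * d
            print(name, d, data, pairs, expected_attempts(pairs, p_gen))
```

In this toy model the Bell-pair count per round grows quadratically in d for both codes (cubically per logical cycle of d rounds), and the number of attempts scales inversely with the assumed generation probability.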

Entanglement Scaling Reveals Architectural Tradeoffs

The study classifies three architectural approaches to distributed quantum computation by how they manage quantum information and perform operations. For each architecture, the researchers determined how the number of required entangled pairs, and the attempts needed to generate them, grow with code distance, and found that some architectures require significantly more entanglement than others. The classification highlights promising pathways toward scalable, fault-tolerant distributed quantum computing and underscores the need to consider entanglement generation, code choice, hardware limitations, and network protocols together. The authors note that precise resource requirements depend on implementation details and noise characteristics, and that further work is needed to optimize these architectures for near-term hardware.
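One way to see why entanglement demands can differ so sharply between architectures is to count nonlocal checks under two idealized layouts. The Python sketch below builds a distance-d rotated surface code, using an assumed but common convention with X-type weight-2 checks on the top and bottom edges and Z-type ones on the left and right. It then compares the d^2 - 1 checks that would all be nonlocal if every data qubit had its own module with the checks that straddle a single vertical seam when the same code is split between two modules. It counts checks rather than Bell pairs, since the true entanglement cost depends on how each nonlocal check is implemented. The function names are hypothetical.

```python
def rotated_surface_code(d):
    """Checks of a distance-d rotated surface code as (type, list of (row, col)) pairs."""
    assert d % 2 == 1, "this sketch assumes an odd code distance"
    checks = []
    for i in range(-1, d):
        for j in range(-1, d):
            # face with corners (i, j) .. (i + 1, j + 1), clipped to the d x d data grid
            support = [(r, c) for r in (i, i + 1) for c in (j, j + 1)
                       if 0 <= r < d and 0 <= c < d]
            kind = "X" if (i + j) % 2 == 0 else "Z"  # checkerboard assignment
            if len(support) == 4:
                checks.append((kind, support))
            elif len(support) == 2:
                top_or_bottom = i in (-1, d - 1)
                # assumed convention: X-type edge checks on top/bottom, Z-type on left/right
                if (kind == "X") == top_or_bottom:
                    checks.append((kind, support))
    return checks

def seam_crossing(checks, cut_col):
    """Checks with data qubits on both sides of a vertical cut (left module: col < cut_col)."""
    return [ch for ch in checks
            if any(c < cut_col for _, c in ch[1]) and any(c >= cut_col for _, c in ch[1])]

if __name__ == "__main__":
    for d in (3, 5, 7, 9):
        checks = rotated_surface_code(d)
        # all checks nonlocal (one qubit per module) vs. checks crossing one seam
        print(d, len(checks), len(seam_crossing(checks, d // 2)))
```

For odd d, a single vertical seam in this layout is crossed by exactly d checks, so the nonlocal overhead of splitting one code across two modules grows linearly in d, while the fully modular layout's grows quadratically.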

👉 More information

🗞 Architectural Approaches to Fault-Tolerant Distributed Quantum Computing and Their Entanglement Overheads

🧠 ArXiv: https://arxiv.org/abs/2511.13657