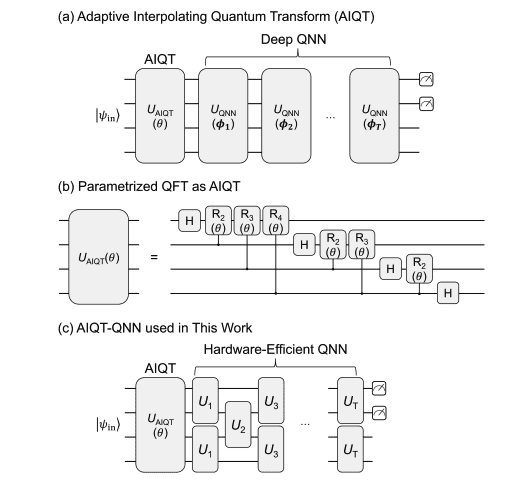

Quantum computation promises to revolutionise many fields, but realising this potential requires efficient methods for processing information. Current deep variational circuits often struggle to scale, because the number of parameters they need grows rapidly with circuit size. Gekko Budiutama from Quemix Inc., Shunsuke Daimon from the National Institutes for Quantum Science and Technology, and Hirofumi Nishi from Quemix Inc., alongside their colleagues, address this challenge by introducing the Adaptive Interpolating Quantum Transform (AIQT), a framework designed for flexible and efficient quantum computation. AIQT constructs trainable quantum operations by smoothly interpolating between existing transforms, such as the Hadamard and quantum Fourier transforms, so that complex calculations require significantly fewer parameters. The team demonstrates that this approach achieves high performance while remaining scalable and interpretable, offering a promising alternative to deep variational circuits and paving the way for more practical quantum algorithms.

Machine learning on quantum computers has attracted attention for its potential to deliver computational speedups across a range of tasks. However, deep variational quantum circuits require a number of trainable parameters that grows with both qubit count and circuit depth, often rendering training infeasible. AIQT instead defines a trainable unitary that interpolates between quantum transforms, such as the Hadamard and quantum Fourier transforms. This approach enables flexible manipulation of quantum states while keeping the number of trainable parameters manageable.

Mitigating Barren Plateaus in Quantum Neural Networks

This research paper covers neural networks broadly, focusing in particular on techniques that improve their performance and address challenges such as barren plateaus in quantum neural networks. The work investigates where classical machine learning meets quantum computing: how quantum circuits can serve as layers within neural networks, and how such hybrid architectures can be optimized. A major focus is mitigating the barren plateau problem, in which gradients become vanishingly small (typically exponentially so in the number of qubits) and the learning process stalls. The research explores various initialization strategies, circuit designs, and optimization techniques to overcome this challenge.
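To make the vanishing-gradient problem concrete, the sketch below estimates the variance of one cost-function gradient over random parameter initialisations, a standard diagnostic for barren plateaus. It is a generic illustration, not an experiment from the paper: the ansatz (layers of RY rotations followed by a chain of CZ gates), the global cost ⟨Z ⊗ Z ⊗ … ⊗ Z⟩, the sample count, the depth-equals-qubit-count choice, and all function names are assumptions picked for simplicity. Gradients are computed with the parameter-shift rule.

```python
import numpy as np


def ry(theta):
    """RY(theta) = exp(-i * theta * Y / 2); its matrix is real."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def apply_1q(state, gate, q, n):
    """Apply a 2x2 gate to qubit q (qubit 0 = most significant) of an n-qubit state."""
    psi = np.tensordot(gate, state.reshape([2] * n), axes=([1], [q]))
    return np.moveaxis(psi, 0, q).reshape(-1)


def basis_bits(n):
    """Bit table: row i holds the n bits of basis index i, most significant first."""
    idx = np.arange(2 ** n)
    return (idx[:, None] >> (n - 1 - np.arange(n))) & 1


def cost(thetas, n, cz_diag, z_diag):
    """Global cost <Z x Z x ... x Z> after layers of RY rotations and CZ chains."""
    state = np.zeros(2 ** n)
    state[0] = 1.0
    for layer in thetas:
        for q in range(n):
            state = apply_1q(state, ry(layer[q]), q, n)
        state = cz_diag * state  # CZ on every neighbouring pair (q, q + 1)
    return float(np.sum(z_diag * state ** 2))


def gradient_variance(n, layers, samples=200, seed=0):
    """Variance of dC/dtheta[0, 0] over uniformly random initialisations."""
    rng = np.random.default_rng(seed)
    bits = basis_bits(n)
    cz_diag = (-1.0) ** np.sum(bits[:, :-1] & bits[:, 1:], axis=1)
    z_diag = (-1.0) ** np.sum(bits, axis=1)
    grads = []
    for _ in range(samples):
        thetas = rng.uniform(0.0, 2 * np.pi, size=(layers, n))
        plus, minus = thetas.copy(), thetas.copy()
        plus[0, 0] += np.pi / 2  # parameter-shift rule for RY gates
        minus[0, 0] -= np.pi / 2
        grads.append(0.5 * (cost(plus, n, cz_diag, z_diag)
                            - cost(minus, n, cz_diag, z_diag)))
    return float(np.var(grads))


if __name__ == "__main__":
    for n in range(2, 7):
        print(n, gradient_variance(n, layers=n))
```

With this setup the estimated variance should shrink as the qubit count grows, which is the signature of a barren plateau; mitigation strategies such as careful initialisation or layerwise training aim to keep it from collapsing.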

Beyond the quantum aspects, the paper covers enhancements to classical neural networks, including novel architectures, activation functions, and training methods. It emphasizes the importance of effective feature extraction and representation learning for both classical and quantum neural networks, and explores transforms such as the Fourier, discrete cosine, wavelet, and Legendre transforms for preprocessing data and improving model accuracy. The research targets the Noisy Intermediate-Scale Quantum (NISQ) era, acknowledging the limitations of current quantum hardware and focusing on practical, implementable solutions. At the core of its quantum neural network research are variational quantum circuits (VQCs): parameterized quantum circuits used as layers within a neural network.
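As a concrete picture of transform-based preprocessing, the sketch below builds an orthonormal discrete cosine transform (DCT-II) matrix, keeps only the lowest-frequency coefficients of a signal as features, and inverts the transform to check how much information survives. It is a generic illustration, not the paper's pipeline; the function names, the test signal, and the number of kept coefficients are assumptions. The matrix matches what `scipy.fft.dct(x, norm="ortho")` computes, but it is written out in NumPy so the example needs no SciPy.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    mat = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    mat[0, :] /= np.sqrt(2.0)  # rescale the constant (DC) row so rows are orthonormal
    return mat


def compress(signal, keep):
    """Return the `keep` lowest-frequency DCT coefficients and the reconstruction from them."""
    c = dct_matrix(len(signal))
    coeffs = c @ signal
    truncated = coeffs.copy()
    truncated[keep:] = 0.0
    # The matrix is orthonormal, so its transpose is its inverse.
    return coeffs[:keep], c.T @ truncated


if __name__ == "__main__":
    x = np.linspace(0.0, 1.0, 32) ** 2  # a smooth test signal
    features, recon = compress(x, keep=8)
    print(features.shape, np.linalg.norm(recon - x) / np.linalg.norm(x))
```

For smooth signals most of the energy lands in the first few coefficients, which is why truncated transforms like this are popular as compact input features.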

The work discusses the hardware-efficient ansatz, a common way of designing VQCs that suit near-term quantum hardware. To address barren plateaus, it explores initialization strategies, circuit design, and optimization techniques, including layerwise learning and channel attention, to improve training stability and performance. The research also covers topological quantum machine learning, investigating symmetry-protected topological phases and using the edge modes of topological systems as features for machine learning models. It also discusses using Bloch vectors to represent the states of qubits and of qudits (quantum systems with more than two levels) in machine learning algorithms.
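The Bloch-vector representation can be sketched in a few lines for a single qubit: a density matrix ρ maps to the real vector r with components r_i = Tr(ρσ_i), and back via ρ = (I + r·σ)/2, so three real numbers could serve as input features for a classical model. The helper names below are hypothetical. For qudits the same construction uses the d² − 1 generalized Gell-Mann matrices in place of the three Pauli matrices, which this sketch does not implement.

```python
import numpy as np

# Pauli matrices, keyed by axis name.
PAULIS = {
    "x": np.array([[0, 1], [1, 0]], dtype=complex),
    "y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def bloch_vector(rho):
    """Return (r_x, r_y, r_z) with r_i = Tr(rho @ sigma_i) for a 2x2 density matrix."""
    return np.array([np.real(np.trace(rho @ PAULIS[a])) for a in "xyz"])


def density_from_bloch(r):
    """Inverse map: rho = (I + r_x X + r_y Y + r_z Z) / 2."""
    rho = np.eye(2, dtype=complex) / 2
    for comp, a in zip(r, "xyz"):
        rho = rho + comp * PAULIS[a] / 2
    return rho


if __name__ == "__main__":
    plus = np.array([1, 1]) / np.sqrt(2)            # the |+> state
    print(bloch_vector(np.outer(plus, plus.conj())))  # points along +x
```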

Specific techniques and algorithms, such as a general transform, DCT-Former, Wavelet Networks, and Tree Tensor Networks, are also investigated. The paper provides a broad overview of the state of the art in hybrid classical-quantum machine learning, focusing on techniques feasible on near-term quantum hardware. Its exploration of strategies for addressing barren plateaus is a significant contribution, since barren plateaus are a major obstacle to training quantum neural networks. The new architectures and algorithms it introduces expand the toolkit available to machine learning researchers, and its treatment of topological quantum machine learning opens new avenues for research and innovation.

This document is aimed at researchers and practitioners in quantum computing, machine learning, artificial intelligence, physics, and data science. In conclusion, it is a substantial and well-researched document that makes significant contributions to the field of hybrid classical-quantum machine learning.

Deep quantum networks, while potentially powerful, often require a large number of parameters, which makes them difficult to train and to implement on current quantum hardware. AIQT allows the quantum network to learn effectively while significantly reducing the number of trainable parameters, a substantial advantage over traditional deep quantum circuits.

By embedding a minimal set of trainable parameters within the quantum operation itself, AIQT provides a structured approach to learning that inherits the benefits of its constituent transforms while remaining flexible. The framework is designed to recover these known transforms for particular parameter values, while remaining a physically valid (unitary) and interpretable operation. The key to AIQT's efficiency is its ability to adaptively combine the strengths of different quantum transforms: it can simplify the network when appropriate and exploit known quantum advantages when beneficial. This contrasts with many existing approaches, which struggle with parameter efficiency and with training large quantum circuits that suffer from problems such as vanishing gradients.
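One simple way to build an operator that recovers the Hadamard transform and the quantum Fourier transform (QFT) at the two ends of a parameter range is sketched below. It is an assumption for illustration, not necessarily the parameterisation used in the paper: it takes the standard QFT gate sequence (Hadamard gates plus controlled-phase gates, omitting the final qubit-reversal swaps) and scales every controlled-phase angle by a single trainable parameter `alpha`. With `alpha = 0` every controlled phase becomes the identity, leaving the Hadamard transform H^{⊗n}; with `alpha = 1` the circuit equals the QFT up to a bit reversal of the output index. All function names are hypothetical, and the dense matrices limit the sketch to a handful of qubits.

```python
from functools import reduce

import numpy as np

H1 = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)


def on_qubit(gate, q, n):
    """Embed a 2x2 gate acting on qubit q (qubit 0 = most significant) into n qubits."""
    out = np.array([[1.0 + 0j]])
    for k in range(n):
        out = np.kron(out, gate if k == q else np.eye(2))
    return out


def controlled_phase(phi, a, b, n):
    """Diagonal gate applying exp(i * phi) when qubits a and b are both 1."""
    idx = np.arange(2 ** n)
    both = ((idx >> (n - 1 - a)) & 1) & ((idx >> (n - 1 - b)) & 1)
    return np.diag(np.exp(1j * phi * both))


def interpolated_transform(alpha, n):
    """Hypothetical interpolation: QFT gate sequence (no final swaps) with every
    controlled-phase angle 2*pi/2**k scaled by alpha."""
    U = np.eye(2 ** n, dtype=complex)
    for q in range(n):
        U = on_qubit(H1, q, n) @ U
        # Controlled-R_k from each later qubit onto qubit q, k = 2, 3, ...
        for k, ctrl in enumerate(range(q + 1, n), start=2):
            U = controlled_phase(alpha * 2 * np.pi / 2 ** k, ctrl, q, n) @ U
    return U


def hadamard_transform(n):
    """Reference Walsh-Hadamard transform H^{(x)n}."""
    return reduce(np.kron, [H1] * n)


def dft(n):
    """Reference unitary DFT on N = 2**n points: F[y, x] = exp(2*pi*i*x*y/N) / sqrt(N)."""
    N = 2 ** n
    k = np.arange(N)
    return np.exp(2j * np.pi * np.outer(k, k) / N) / np.sqrt(N)


def bit_reverse_perm(n):
    """Index list mapping each output index y to its n-bit reversal."""
    return [int(format(y, f"0{n}b")[::-1], 2) for y in range(2 ** n)]


if __name__ == "__main__":
    n = 3
    print(np.allclose(interpolated_transform(0.0, n), hadamard_transform(n)))
    print(np.allclose(interpolated_transform(1.0, n)[bit_reverse_perm(n)], dft(n)))
```

Because every intermediate `alpha` still yields a product of unitary gates, the operator stays physically valid along the whole path. A richer variant could give each of the n(n − 1)/2 controlled-phase gates its own angle, trading a few more parameters for more flexibility.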

The researchers demonstrate that AIQT can achieve high performance with a fraction of the parameters typically required by deep quantum networks, paving the way for more practical and scalable quantum machine learning applications. By providing a meaningful, structured inductive bias, AIQT lets the quantum model learn the best transformation within a constrained subspace of unitary operations, offering a powerful new tool for quantum computation and potentially accelerating progress in areas such as classification, feature extraction, and generative modeling.

Because AIQT's trainable operator blends known quantum transforms, it can manipulate quantum states flexibly while keeping the number of necessary parameters manageable, and it inherits the benefits of the transforms it combines, such as the ability to efficiently process information with symmetries or local interactions. Testing on a quantum phase classification task shows that AIQT-enhanced models outperform both standard quantum neural networks and models that use a fixed quantum Fourier transform.

This improvement stems from AIQT's capacity to adaptively control entanglement and information mixing within the quantum state, which lets the model learn relevant representations without running into the optimization difficulties caused by excessive circuit complexity. The authors acknowledge that AIQT's performance depends on the specific instantiation chosen, since different base transforms suit different types of quantum learning tasks. Future work could explore how best to select and combine these transforms for particular applications.

👉 More information

🗞 Adaptive Interpolating Quantum Transform: A Quantum-Native Framework for Efficient Transform Learning

🧠 arXiv: https://arxiv.org/abs/2508.14418