Combinatorial optimisation underpins critical decision-making across numerous scientific, engineering, and industrial disciplines, yet its inherent computational complexity often restricts practical application. Rudraksh Sharma, Ravi Katukam, and Arjun Nagulapally, all from AIONOS, present a comprehensive review of quantum annealing (QA), a specialised analogue quantum computing approach that leverages quantum tunnelling to explore solution spaces and address these challenges. This work is significant because it consolidates the theoretical foundations of QA, details current hardware architectures like Chimera and Pegasus, and critically assesses benchmarking protocols and embedding strategies. Their analysis reveals that embedding and encoding overhead currently represent the most substantial limitations to scalability and performance, often reducing effective problem capacity by as much as 92% and impacting solution quality.

Quantum annealing limitations and the challenge of problem embedding often hinder practical applications

Scientists are tackling the inherent limitations of solving complex combinatorial optimization problems with a specialized form of quantum computing known as quantum annealing. These problems, ubiquitous in fields ranging from logistics to molecular design, become exponentially more difficult as the number of variables increases, quickly exceeding the capabilities of classical computers.

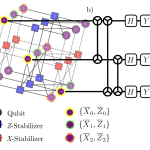

The review details quantum annealing's theoretical foundations, hardware designs, and practical implementation strategies. The authors develop a unified framework connecting adiabatic quantum dynamics, Ising and QUBO models, and flux-qubit annealer architectures, including the Chimera, Pegasus, and Zephyr topologies.
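The Ising and QUBO models named above are two notations for the same optimisation problem, linked by the standard substitution x = (1 + s) / 2 between binary variables x ∈ {0, 1} and spins s ∈ {-1, +1}. The following is a minimal sketch of that conversion, not code from the review; the dictionary layout and the function names `qubo_to_ising`, `qubo_energy`, and `ising_energy` are assumptions made for illustration.

```python
from itertools import product


def qubo_to_ising(Q):
    """Convert a QUBO {(i, j): weight} into Ising fields h, couplings J, and an offset.

    Uses x_i = (1 + s_i) / 2, so the QUBO and Ising energies agree for matching states.
    """
    h, J, offset = {}, {}, 0.0
    for (i, j), w in Q.items():
        if i == j:
            # Linear term: w * x_i = w/2 + (w/2) * s_i
            h[i] = h.get(i, 0.0) + w / 2
            offset += w / 2
        else:
            # Quadratic term: w * x_i * x_j = (w/4) * (1 + s_i + s_j + s_i * s_j)
            J[(i, j)] = J.get((i, j), 0.0) + w / 4
            h[i] = h.get(i, 0.0) + w / 4
            h[j] = h.get(j, 0.0) + w / 4
            offset += w / 4
    return h, J, offset


def qubo_energy(Q, x):
    """Energy of a binary assignment x (sequence of 0/1 values)."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


def ising_energy(h, J, offset, s):
    """Energy of a spin assignment s (sequence of -1/+1 values)."""
    linear = sum(b * s[i] for i, b in h.items())
    quadratic = sum(w * s[i] * s[j] for (i, j), w in J.items())
    return linear + quadratic + offset


if __name__ == "__main__":
    # Toy two-variable problem (hypothetical weights).
    Q = {(0, 0): -1.0, (1, 1): -1.0, (0, 1): 2.0}
    h, J, offset = qubo_to_ising(Q)
    for x in product((0, 1), repeat=2):
        s = [2 * b - 1 for b in x]
        assert abs(qubo_energy(Q, x) - ising_energy(h, J, offset, s)) < 1e-9
```

The brute-force check at the end confirms that both forms assign the same energy to every state of the toy problem, which is the property any such conversion needs to preserve.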

This study reveals that the primary bottleneck to scaling quantum annealing lies not simply in the number of qubits, but in the overhead associated with embedding and encoding problems onto the quantum hardware. Minor embeddings, a common technique, typically require between 5 and 12 physical qubits to represent a single logical variable, reducing effective problem capacity by 80-92% and potentially compromising solution quality due to chain-breaking errors.
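The 80-92% figure follows directly from the chain lengths: if each logical variable occupies a chain of k physical qubits, only 1/k of the device carries distinct variables. The short sketch below works through that arithmetic. The 5,000-qubit device size is a hypothetical number chosen for illustration, and the assumption that every chain has the same length is a simplification.

```python
def effective_capacity(physical_qubits: int, chain_length: int) -> int:
    """Logical variables that fit if every variable needs a chain of the given length."""
    return physical_qubits // chain_length


def capacity_reduction(chain_length: int) -> float:
    """Fraction of physical qubits consumed by embedding overhead."""
    return 1.0 - 1.0 / chain_length


if __name__ == "__main__":
    physical_qubits = 5000  # hypothetical device size, for illustration only
    for chain_length in (5, 12):
        logical = effective_capacity(physical_qubits, chain_length)
        loss = capacity_reduction(chain_length)
        print(f"chain length {chain_length}: {logical} logical variables, {loss:.0%} reduction")
```

A chain length of 5 gives a reduction of 1 - 1/5 = 80%, and a chain length of 12 gives 1 - 1/12 ≈ 92%, matching the range quoted in the review.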

Through systematic analysis of applications in transportation logistics, energy systems optimization, robotics, finance, molecular design, and machine learning, the research demonstrates that quantum annealing functions best as a hybrid refinement method that enhances classical solutions, rather than as a standalone solver. The work also critically assesses current benchmarking protocols, identifying shortcomings such as selective reporting and inadequate classical baselines, which distort performance comparisons.

Analysis also reveals structural connections between quantum annealing and gate-based variational algorithms like QAOA and VQE, highlighting constraints imposed by stoquastic conditions. Priority research directions are established, including the development of guaranteed-performance embedding algorithms, standardized benchmarking, and methods for achieving non-stoquastic control, ultimately aiming to define annealing hardness and demonstrate quantifiable quantum advantage. This review serves as a valuable resource for researchers and practitioners in the field of quantum-enhanced combinatorial optimization.

Embedding limitations and their impact on quantum annealing performance are significant challenges in practical applications

A detailed analysis of embedding and encoding overhead establishes its role as the primary determinant of scalability and performance in quantum annealing. Minor embeddings typically require between 5 and 12 physical qubits per logical variable, reducing effective problem capacity by 80-92% and potentially compromising solution quality through chain-breaking errors.
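A chain "breaks" when the physical qubits representing one logical variable disagree at readout, so the sample must be repaired before it can be interpreted. One common repair strategy is a majority vote over each chain. The sketch below illustrates that idea only: the article does not say which strategy the review discusses, and the function name, data layout, and tie-breaking rule (ties resolve to +1) are assumptions.

```python
def unembed_majority_vote(sample, embedding):
    """Map a physical-qubit sample back to logical spins by majority vote.

    sample:    {physical_qubit: spin}, with spins in {-1, +1}
    embedding: {logical_variable: [physical_qubits in its chain]}
    Returns the logical spins and the fraction of chains that were broken.
    """
    logical = {}
    broken = 0
    for var, chain in embedding.items():
        spins = [sample[q] for q in chain]
        total = sum(spins)
        # A chain is intact only if all of its qubits agree.
        if abs(total) != len(spins):
            broken += 1
        # Majority vote; ties resolve to +1 (an arbitrary choice for this sketch).
        logical[var] = 1 if total >= 0 else -1
    return logical, broken / len(embedding)


if __name__ == "__main__":
    embedding = {"a": [0, 1, 2], "b": [3, 4, 5, 6, 7]}
    sample = {0: 1, 1: 1, 2: -1, 3: -1, 4: -1, 5: -1, 6: -1, 7: -1}
    spins, break_fraction = unembed_majority_vote(sample, embedding)
    print(spins, break_fraction)
```

Here chain "a" is broken (two qubits read +1, one reads -1) and is resolved to +1, while chain "b" is intact. Longer chains offer more opportunities for such disagreement, which is one way embedding overhead can degrade solution quality.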

This work develops a unified framework connecting adiabatic quantum dynamics, Ising and QUBO models, stoquastic and non-stoquastic Hamiltonians, and diabatic transitions to current flux annealers. Specifically, the study examines Chimera, Pegasus, and Zephyr topologies alongside emergent architectures like Lechner-Hauke-Zoller systems and Rydberg atom platforms, as well as hybrid quantum-classical computation approaches.

The research systematically investigates applications across transportation logistics, energy system optimisation, robotics, finance, molecular design, and machine learning to assess quantum annealing’s empirical utility as a hybrid refinement method. Benchmarking protocols were critically analysed, revealing methodological shortcomings such as selective reporting of results and inadequate classical baselines.

Furthermore, the study addresses the omission of preprocessing overhead in performance comparisons, which distorts accurate evaluations. Investigation extends to the structural connections between quantum annealing and gate-based variational algorithms, including QAOA and VQE, exploring their behaviour under fine-grained control and the limitations imposed by stoquastic constraints.

The review of current benchmarking practices identifies a need for standardized protocols and principled measures of quantum advantage. The study prioritises future research directions, including a definition of annealing hardness that holds irrespective of problem size, the development of non-stoquastic control methods, and the creation of performance-guaranteed embedding algorithms.

Embedding overhead limits quantum annealing as a hybrid optimisation technique, especially for large problem instances

Minor embeddings routinely require between 5 and 12 physical qubits per logical variable, resulting in an 80-92% reduction in effective problem capacity. This embedding overhead is the largest determinant of both scalability and performance. The analysis reveals that quantum annealing functions best as a hybrid refinement method rather than a standalone solver, as demonstrated through systematic study across transportation logistics, energy systems optimisation, robotics, finance, molecular design, and machine learning.

Current benchmarking practices suffer from shortcomings including selective reporting of results and inadequate classical baselines. Furthermore, the exclusion of preprocessing overhead distorts genuine performance comparisons. The research establishes a structural connection between quantum annealing and gate-based variational algorithms such as QAOA and VQE, examining their behaviour under fine-grained control and the limitations imposed by stoquastic constraints.

This work identifies priority research directions including a definition of annealing hardness that holds irrespective of problem size, the development of non-stoquastic control mechanisms, and the creation of performance-guaranteed embedding algorithms. Standardised benchmarking protocols and principled measures of quantum advantage are also highlighted as crucial areas for future investigation.

The study details how adiabatic quantum dynamics, Ising and QUBO models, stoquastic and non-stoquastic Hamiltonians, and diabatic transitions relate to modern flux annealers utilising Chimera, Pegasus, and Zephyr topologies. These findings also extend to emergent architectures like Lechner-Hauke-Zoller systems, Rydberg atom platforms, and hybrids of quantum and classical computation.

Embedding limitations currently overshadow quantum coherence challenges in practical applications

Quantum annealing represents a distinct approach to combinatorial optimization, differing fundamentally from both classical meta-heuristics and gate-based variational quantum algorithms. Its strengths lie in continuous-time analogue evolution, quantum tunnelling, and inherent sampling of low-energy states, making it potentially well-suited to problems with complex energy landscapes.
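For readers who want the formula behind "continuous-time analogue evolution", the textbook transverse-field form of the annealing Hamiltonian is sketched below. It is the standard presentation rather than a formula quoted from the review; the schedule functions $A(s)$ and $B(s)$ and the normalised time $s = t/T$ are conventional notation assumed here, and hardware vendors often include extra constant factors.

$$
H(s) = -A(s) \sum_i \sigma^x_i + B(s) \left( \sum_i h_i \, \sigma^z_i + \sum_{i<j} J_{ij} \, \sigma^z_i \sigma^z_j \right), \qquad s = t/T \in [0, 1]
$$

At $s = 0$ the transverse-field term dominates ($A(0) \gg B(0)$) and the system starts in an equal superposition of all bit strings. At $s = 1$ the problem term dominates ($A(1) \ll B(1)$) and low-energy states encode good solutions of the Ising problem defined by $h_i$ and $J_{ij}$. The $\sigma^x$ term is what permits tunnelling between classical configurations, and a Hamiltonian of this form is stoquastic.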

Current limitations to scalability are primarily determined by the overhead associated with embedding and encoding problems onto quantum annealers, rather than simply the number of available qubits. Embedding costs typically consume 80 to 92% of physical qubits, effectively reducing problem capacity, while high-density problem formulations demand coefficient precision beyond the capabilities of present hardware.

Classical overheads, therefore, currently pose a greater obstacle to achieving practical quantum advantage than decoherence and limited coherence times. Observed experimental advantages are largely confined to hybrid models where quantum annealing functions as an optimization subroutine following substantial classical pre-processing.

Stand-alone quantum annealing currently struggles to compete with established classical solvers at industrial scales for many problem types. Future progress hinges on addressing embedding and encoding inefficiencies, alongside the development of non-stoquastic control techniques. Rigorous benchmarking, incorporating comprehensive timing, statistical analysis, and comparison to industrial-grade classical solvers, is essential for accurately assessing performance gains. Research priorities include automating embedding, exploring non-stoquastic quantum annealing, establishing advantage metrics, and defining principles for optimal hybrid architectures integrating quantum and classical computation.
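To make "comprehensive timing" concrete, one metric widely used in the annealing literature is time-to-solution: the expected time needed to observe the target solution at least once with a given confidence, typically 99%. The sketch below adds a one-off classical overhead term (for example, embedding and preprocessing) to that metric. Whether the review itself uses this metric is not stated in this article, and the function and parameter names are assumptions for illustration.

```python
import math


def repetitions_needed(p_success: float, target: float = 0.99) -> int:
    """Anneals needed to see the solution at least once with probability `target`."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    if p_success >= target:
        return 1
    # Solve 1 - (1 - p)^R >= target for the smallest integer R.
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_success))


def time_to_solution(anneal_time_us: float, p_success: float,
                     overhead_us: float = 0.0, target: float = 0.99) -> float:
    """Total time in microseconds, including one-off classical overhead."""
    return overhead_us + anneal_time_us * repetitions_needed(p_success, target)


if __name__ == "__main__":
    # Hypothetical numbers: 20 us anneals, 50% success rate, 1 ms of preprocessing.
    print(time_to_solution(20.0, 0.5))                      # annealing time only
    print(time_to_solution(20.0, 0.5, overhead_us=1000.0))  # with classical overhead
```

With these hypothetical numbers, seven anneals take 140 µs, but the total rises to 1,140 µs once the overhead is counted. Reporting only the first figure is an instance of the distortion that arises when preprocessing overhead is left out of performance comparisons.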

👉 More information

🗞 Quantum Annealing for Combinatorial Optimization: Foundations, Architectures, Benchmarks, and Emerging Directions

🧠 ArXiv: https://arxiv.org/abs/2602.03101