Quantum machine learning promises to revolutionise data analysis, but current methods process training data sequentially, which becomes time-consuming for large datasets. Mehdi Ramezani from the Centre for Quantum Engineering and colleagues present a framework that removes this bottleneck by processing an entire training dataset in a single operation. The team's approach draws a parallel between feature extraction and parameter optimisation, embedding the data in a quantum superposition so that all samples can be classified in parallel. The method reduces the theoretical complexity of loss function evaluation, offers substantial time savings, and achieves classification accuracy comparable to that of conventional quantum circuits. It represents a significant step towards scalable and efficient quantum machine learning.

Building on the structural analogy between feature extraction in foundational quantum algorithms and parameter optimisation in quantum machine learning, the researchers embed a standard parameterised quantum circuit into an integrated architecture. This architecture encodes all training samples in a quantum superposition and applies the classifier to them in parallel, rather than evaluating them one at a time. The approach reduces the theoretical complexity of loss function evaluation from O(N²), proportional to the square of the dataset size N, to O(N), proportional to the dataset size itself, a substantial improvement in scalability. Numerical simulations indicate that the speedup grows as the dataset size increases.
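To see why the gap widens with dataset size, it helps to write the two scalings with explicit constants. The symbols below are assumptions for illustration: T_seq and T_par denote the loss-evaluation costs of the conventional and parallel approaches, and c₁, c₂ are unspecified constant factors that the article does not report.

$$
T_{\text{seq}}(N) \approx c_1 N^2, \qquad T_{\text{par}}(N) \approx c_2 N
\quad\Longrightarrow\quad
\frac{T_{\text{seq}}(N)}{T_{\text{par}}(N)} \approx \frac{c_1}{c_2}\,N .
$$

For example, growing the dataset from N = 1,000 to N = 10,000 samples multiplies the sequential cost by 100 but the parallel cost by only 10, so the nominal advantage grows tenfold. Real speedups also depend on the constants and on overheads such as state preparation, which this back-of-the-envelope estimate ignores.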

Parallel Quantum Classification with Variational Circuits

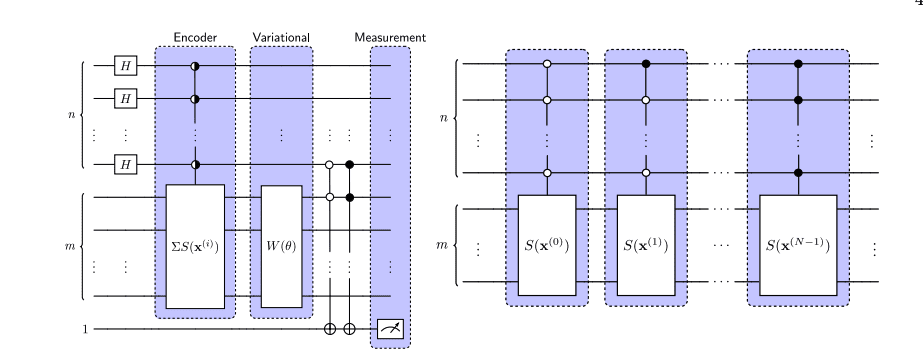

The researchers investigated the framework on both binary and multi-class classification problems. Its central feature is parallel data processing, a significant departure from traditional approaches that handle training data sequentially. A quantum register represents all training samples simultaneously, so the model can evaluate many data points within a single quantum operation. The framework is built on variational quantum circuits, which combine a feature map that encodes classical data into quantum states, a trainable circuit that performs the classification, and data re-uploading, a technique that encodes the input repeatedly throughout the circuit to increase its expressiveness.
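A minimal single-qubit sketch of a variational classifier with data re-uploading, simulated as a state vector with NumPy, makes the three ingredients concrete. The gate choices (RY for the feature map, an RZ-RY trainable block), the three-layer depth, and the 0.5 decision threshold are assumptions for illustration, not the circuit used in the paper.

```python
import numpy as np

def ry(angle):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rz(angle):
    """Single-qubit rotation about the Z axis."""
    return np.array([[np.exp(-1j * angle / 2), 0],
                     [0, np.exp(1j * angle / 2)]], dtype=complex)

def reuploading_state(x, thetas):
    """Prepare the classifier state for one scalar feature x.

    Assumed layout: each layer re-uploads x with RY(x) (the feature map) and
    then applies a trainable RZ-RY block parameterised by one row of `thetas`.
    """
    state = np.array([1.0, 0.0], dtype=complex)   # start in |0>
    for theta_z, theta_y in thetas:
        state = ry(x) @ state                       # feature map, re-uploaded every layer
        state = ry(theta_y) @ rz(theta_z) @ state   # trainable block
    return state

def predict_proba(x, thetas):
    """Probability of measuring |1>, read here as P(class 1 | x)."""
    return float(np.abs(reuploading_state(x, thetas)[1]) ** 2)

rng = np.random.default_rng(0)
thetas = rng.uniform(0, 2 * np.pi, size=(3, 2))    # 3 layers, 2 angles per layer
for x in (-1.0, 0.0, 1.0):
    p1 = predict_proba(x, thetas)
    print(f"x={x:+.1f}  P(1)={p1:.3f}  predicted class={int(p1 > 0.5)}")
```

Training would adjust `thetas` to minimise a loss over the dataset. In a conventional setup, each sample requires its own circuit evaluation, which is the sequential cost the framework aims to remove.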

The main strength the researchers report is a substantial potential speedup in training. Numerical simulations on several datasets support the framework's performance. Although the results are promising, the team acknowledges that scalability, error mitigation, and hardware requirements need further investigation.

Parallel Quantum Training Accelerates Model Development

The researchers have developed a quantum machine learning framework that substantially accelerates the training of quantum models. It targets a key bottleneck in current techniques: training often requires a large number of circuit evaluations. Drawing a parallel with foundational quantum algorithms, the team devised a method that processes an entire dataset in a single operation by exploiting superposition and quantum parallelism. In conventional quantum machine learning, by contrast, data are typically processed sequentially or in small batches.

The method encodes all training samples in a quantum superposition, allowing the model to evaluate its classifications in parallel. This reduces the theoretical complexity of loss function evaluation, a critical step in training, from a level proportional to the square of the dataset size to one proportional to the dataset size itself, a substantial improvement in scalability. Numerical simulations on various datasets show that the method achieves classification accuracies comparable to those of existing quantum circuits while substantially reducing training time, highlighting its potential for practical applications. The work opens new avenues for quantum models that can be trained more quickly and efficiently, bringing the promise of quantum machine learning closer to realisation.
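The core idea of evaluating every sample from one superposed state can be illustrated with a small classical state-vector simulation. This is a sketch of the general principle under stated assumptions, not the authors' construction: it assumes a hypothetical data-loading routine that prepares (1/√N) Σᵢ |i⟩|yᵢ⟩|ψ(xᵢ)⟩ over index, label, and data registers, a toy one-qubit classifier RY(θ)RY(x), and a score defined as the probability that the measured data bit matches the label bit. The point it checks is that this single-state statistic equals the average of the per-sample scores obtained one circuit at a time.

```python
import numpy as np

def ry(angle):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def classify_state(x, theta):
    """Toy one-qubit classifier (assumed): feature map RY(x), then trainable RY(theta)."""
    return ry(theta) @ ry(x) @ np.array([1.0, 0.0], dtype=complex)

def sequential_score(xs, ys, theta):
    """Conventional route: one circuit per sample, then average P(data bit == label)."""
    scores = [np.abs(classify_state(x, theta)[y]) ** 2 for x, y in zip(xs, ys)]
    return float(np.mean(scores))

def parallel_state(xs, ys, theta):
    """Joint state (1/sqrt(N)) sum_i |i>|y_i>|psi(x_i)>, stored as [index, label bit, data bit].

    Assumption: a hypothetical oracle loads (x_i, y_i) for every index i at once;
    its cost is not modelled in this simulation.
    """
    n = len(xs)
    state = np.zeros((n, 2, 2), dtype=complex)
    for i, (x, y) in enumerate(zip(xs, ys)):
        state[i, y, :] = classify_state(x, theta) / np.sqrt(n)
    return state

def parallel_score(state):
    """Probability that one measurement of the joint state finds data bit == label bit."""
    probs = np.abs(state) ** 2
    return float(probs[:, 0, 0].sum() + probs[:, 1, 1].sum())

rng = np.random.default_rng(1)
xs = rng.uniform(-np.pi, np.pi, size=8)   # 8 samples, i.e. a 3-qubit index register
ys = (xs > 0).astype(int)                 # toy labels for illustration
theta = 0.3
state = parallel_state(xs, ys, theta)
print(sequential_score(xs, ys, theta), parallel_score(state))
```

On hardware the parallel score would be estimated from repeated measurement shots rather than read off exactly, and preparing the superposed dataset is itself a non-trivial step; neither cost is captured by this simulation.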

Parallel Quantum Training Reduces Complexity Significantly

This work introduces a quantum machine learning framework that addresses a key limitation shared by classical and quantum approaches: the sequential processing of training data. By encoding all training samples in a quantum superposition, the method enables parallel processing, theoretically reducing the complexity of loss function evaluation from a level proportional to the square of the dataset size to one proportional to the dataset size itself. Numerical simulations across various binary and multi-class classification datasets show that the approach achieves classification accuracies comparable to those of conventional circuits while significantly reducing training time. The core contribution lies not in improving classification accuracy but in making existing algorithms more efficient. This shift allows effective quantum circuits to be applied to larger datasets without prohibitive computational costs, which could make quantum machine learning applications scalable. Although the authors acknowledge that the method's performance has so far been demonstrated only in simulation, they highlight its potential for implementation on near-term quantum hardware.

👉 More information

🗞 Parallel Data Processing in Quantum Machine Learning

🧠 arXiv: https://arxiv.org/abs/2508.12006