Understanding how quantum circuits respond to real-world hardware imperfections is a crucial step towards building practical, fault-tolerant quantum computers. Matthew Girling, Ben Criger, and Cristina Cîrstoiu, all from Quantinuum, have developed a new method to characterise the errors that occur in detector regions. These are the parts of an error-correction circuit, built from multiple syndrome measurements, in which errors are identified during a quantum computation. Their approach directly measures logical errors conditioned on the outcomes of error detection. This gives a detailed picture of noise behaviour that can inform more effective decoding strategies. Combined with noise mitigation techniques, the method yields significant improvements in standard fault-tolerance diagnostic tests. This brings scalable quantum computation closer by enabling more accurate and robust error correction.

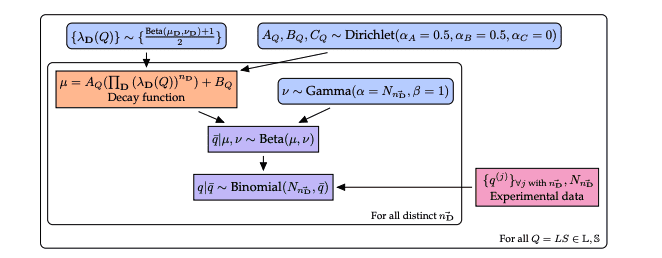

Researchers address limitations in the assumptions currently made about noise in quantum error correction. They introduce a protocol that directly estimates the errors affecting logical qubits, together with related imperfections, within detector regions comprising multiple syndrome measurements. The method works conditionally: it estimates the noise given that a specific pattern of syndrome outcomes was observed. It is robust against common errors in quantum systems, which makes it particularly suitable for architectures that use flag-based syndrome measurement schemes. To process the experimental data, the team uses a Bayesian modelling approach. They validate the protocol with a small error-detecting code on Quantinuum H1-1, a trapped-ion device. Results show significant improvements in several fault-tolerance-relevant noise diagnostic tests when noise tailoring and mitigation strategies are employed.
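The article does not spell out the Bayesian model. As a minimal sketch of the general idea, assume each post-selected shot independently shows a logical flip with one unknown probability p, and place a Beta prior on p. The conjugate update then gives a posterior mean and an uncertainty. The function name `beta_posterior` and the shot counts are hypothetical, and the paper's actual model is almost certainly richer than this.

```python
import math


def beta_posterior(flips: int, shots: int, a: float = 1.0, b: float = 1.0):
    """Return the posterior mean and standard deviation of a logical flip probability.

    Assumes every accepted shot independently shows a logical flip with the same
    unknown probability p, and places a Beta(a, b) prior on p (a = b = 1 is uniform).
    """
    if not 0 <= flips <= shots:
        raise ValueError("need 0 <= flips <= shots")
    # Conjugacy: a Beta prior plus binomial data gives a Beta posterior.
    a_post = a + flips
    b_post = b + (shots - flips)
    total = a_post + b_post
    mean = a_post / total
    # Variance of Beta(a_post, b_post) is a*b / ((a+b)^2 (a+b+1)).
    std = math.sqrt(a_post * b_post / (total * total * (total + 1)))
    return mean, std


# Hypothetical data: 3 logical flips observed in 10,000 post-selected shots.
mean, std = beta_posterior(3, 10_000)
print(f"posterior p = {mean:.2e} +/- {std:.1e}")
```

With very few observed flips, the prior visibly shifts the estimate away from the raw frequency 3/10,000. This is one reason a Bayesian treatment is attractive for the small error rates quoted below.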

Logical Error Detection with the [[4, 2, 2]] Code

This work presents results from experiments and simulations aimed at characterizing and estimating error rates in a quantum error-correcting code, specifically the [[4, 2, 2]] code. Key concepts underpinning this research include:

- Quantum error correction, which protects quantum information from noise.
- LSD-DRT (Logical Syndrome Detection with Random Twists), a method for detecting logical errors by applying random adjustments to the circuit and measuring the resulting syndrome.

The syndrome is the outcome of a measurement that reveals which errors have occurred without revealing the encoded quantum information. The analysis distinguishes physical errors, which occur on individual physical qubits, from logical errors, which affect the encoded quantum information.
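The four-qubit error-detecting code discussed here, the [[4, 2, 2]] code, encodes two logical qubits in four physical qubits with distance 2. Its stabilizer generators are XXXX and ZZZZ. A Pauli error's syndrome records which generators it anticommutes with. The sketch below computes that syndrome for a Pauli string. The helper names `anticommutes` and `syndrome` are illustrative only, not taken from the paper.

```python
STABILIZERS = ("XXXX", "ZZZZ")  # stabilizer generators of the [[4, 2, 2]] code


def anticommutes(p: str, q: str) -> bool:
    """Pauli strings anticommute iff they clash (both non-identity, different) on an odd number of qubits."""
    clashes = sum(1 for a, b in zip(p, q) if a != "I" and b != "I" and a != b)
    return clashes % 2 == 1


def syndrome(error: str) -> tuple:
    """Syndrome bits (XXXX check, ZZZZ check) triggered by a Pauli error on four qubits."""
    if len(error) != 4 or set(error) - set("IXYZ"):
        raise ValueError("expected a 4-character Pauli string over I, X, Y, Z")
    return tuple(int(anticommutes(error, s)) for s in STABILIZERS)


for e in ("XIII", "ZIII", "YIII", "XXII"):
    print(e, syndrome(e))
```

A single-qubit X error trips only the ZZZZ check, and a Y error trips both. A weight-two error such as XXII trips neither check, so it acts undetected on the encoded information. Distance 2 means the code can detect, but not correct, single-qubit errors.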

The research also uses specific circuits within the error-correction scheme, known as gadgets, to perform tasks such as syndrome extraction or error correction. Leakage-protected gadgets are designed to guard against leakage errors, in which a qubit leaves its computational subspace and can compromise the error-correction process. The data come from both experimental measurements and numerical simulations, allowing for direct comparison and validation. The researchers estimate the probabilities of different error types, giving a detailed characterization of the code's performance. The workflow involves measuring syndromes, estimating error rates, and validating the results against simulations.
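The final step, checking estimates against simulation, can be illustrated with a deliberately simple toy model. It is not the paper's noise model and makes three assumptions:

- Each of the four qubits independently suffers an X flip with probability p.
- Syndrome measurements are perfect.
- Shots are post-selected on a trivial ZZZZ parity.

Odd-weight X errors are then detected and discarded. Every weight-two X pattern is an undetected logical operator, and XXXX is a stabilizer and therefore harmless. A Monte Carlo estimate should therefore match a closed-form expression. The names `simulate` and `analytic` are hypothetical.

```python
import random


def simulate(p: float, shots: int, seed: int = 1) -> float:
    """Monte Carlo estimate of the logical error rate after post-selection (toy model)."""
    rng = random.Random(seed)
    accepted = logical = 0
    for _ in range(shots):
        weight = sum(rng.random() < p for _ in range(4))  # number of qubits hit by X
        if weight % 2 == 1:
            continue  # odd weight flips the ZZZZ parity: detected, so the shot is discarded
        accepted += 1
        if weight == 2:
            logical += 1  # every weight-2 X error is an undetected logical operator
    if accepted == 0:
        raise RuntimeError("no shots survived post-selection")
    return logical / accepted


def analytic(p: float) -> float:
    """Exact post-selected logical error rate for the same toy model."""
    q = 1.0 - p
    undetected_logical = 6 * p**2 * q**2  # six weight-2 X patterns
    accepted = q**4 + undetected_logical + p**4  # XXXX is a stabilizer, so it is harmless
    return undetected_logical / accepted


print(simulate(0.05, 100_000), analytic(0.05))
```

Real experiments also include faulty syndrome readouts and correlated errors, which this toy model ignores. Those effects are exactly why the paper characterizes noise directly rather than relying on an idealized model.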

Syndrome Outcomes Reveal Detector Error Characteristics

Researchers have developed a new method for precisely characterizing errors in quantum circuits, a crucial step towards building practical, fault-tolerant quantum computers. The technique focuses on understanding how errors manifest within the process of quantum error correction itself, specifically by analyzing the outcomes of syndrome measurements, the checks used to detect and correct errors without directly measuring the quantum information. This detailed analysis allows for a more accurate assessment of the underlying noise affecting quantum computations. The core of the approach involves a protocol to directly measure the impact of errors associated with detector regions, which are built from multiple syndrome measurements.

By conditioning the analysis on specific syndrome outcomes, researchers can pinpoint which types of errors occur, and with what probabilities, for each observed syndrome. This matters because errors arise not only on the data qubits but also in the error-correction process itself, for example as faulty syndrome readings. The method is robust against common errors in quantum systems and is well suited to architectures that use flag-based syndrome measurement schemes. To validate the technique, the team applied it to a small error-detecting code on Quantinuum's H1-1 trapped-ion device.
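Conditioning on syndrome outcomes amounts to grouping shots by their observed syndrome pattern before estimating anything. The sketch below assumes each shot is recorded as a pair `(syndrome, flipped)`, where the syndrome tuple could also include flag bits. It returns a separate logical flip rate for each pattern. The record format, the function name, and the data are all hypothetical.

```python
from collections import defaultdict


def conditional_flip_rates(records):
    """Group shots by syndrome and return {syndrome: (logical flip rate, shot count)}.

    `records` is an iterable of (syndrome, flipped) pairs, where `syndrome` is any
    hashable outcome pattern (flag bits can be included) and `flipped` is a bool.
    """
    totals = defaultdict(int)
    flips = defaultdict(int)
    for syn, flipped in records:
        totals[syn] += 1
        flips[syn] += int(flipped)
    return {syn: (flips[syn] / totals[syn], totals[syn]) for syn in totals}


# Hypothetical shot records: mostly trivial syndromes, plus a few flagged shots.
records = ([((0, 0), False)] * 96 + [((0, 0), True)]
           + [((0, 1), True)] * 2 + [((0, 1), False)])
for syn, (rate, n) in sorted(conditional_flip_rates(records).items()):
    print(syn, f"rate={rate:.3f}", f"n={n}")
```

Keeping per-syndrome statistics is what lets a decoder treat rare, non-trivial syndromes differently from the common trivial one. The small counts in non-trivial groups are also where a Bayesian treatment of uncertainty becomes useful.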

They demonstrated significant improvements in several noise diagnostic tests when employing noise mitigation strategies. These included protection against leakage errors and randomization to tailor the noise. After post-selection and ideal decoding, they obtained precise estimates of the logical error rates:

- approximately 2 × 10⁻⁴ for X-type errors;
- 1 × 10⁻⁴ for Y-type errors;
- 3.7 × 10⁻⁵ for Z-type errors.

These results show that the small error-detecting code, combined with effective noise mitigation, can preserve quantum information for extended periods and also improve the overall fidelity of the computation. Accurately characterizing and mitigating errors within the error-correction process itself is a significant advance towards more reliable and scalable quantum computers capable of tackling complex problems.
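The X, Y and Z rates quoted above are probabilities of a Pauli error channel. Many Pauli-noise characterization schemes first estimate Pauli fidelities, the channel's eigenvalues, which can be extracted from decay rates in a way insensitive to state-preparation and measurement errors. They then convert those fidelities into error probabilities. The sketch below shows that standard conversion for a single-qubit Pauli channel. It is not necessarily the paper's estimator, and the full logical channel of the [[4, 2, 2]] code acts on two logical qubits, with 16 Pauli terms rather than 4. The quoted rates are used only as illustrative input.

```python
def fidelities_from_probs(p):
    """Pauli fidelities (f_X, f_Y, f_Z) of a single-qubit Pauli channel (p_I, p_X, p_Y, p_Z)."""
    p_i, p_x, p_y, p_z = p
    # f_P sums p_Q with sign +1 if Q commutes with P and -1 if it anticommutes.
    return (p_i + p_x - p_y - p_z,
            p_i - p_x + p_y - p_z,
            p_i - p_x - p_y + p_z)


def probs_from_fidelities(f):
    """Invert the relation above (a 4-point Walsh-Hadamard transform, with f_I = 1)."""
    f_x, f_y, f_z = f
    return ((1 + f_x + f_y + f_z) / 4,
            (1 + f_x - f_y - f_z) / 4,
            (1 - f_x + f_y - f_z) / 4,
            (1 - f_x - f_y + f_z) / 4)


# Illustrative input: the approximate X, Y, Z rates quoted in the article.
p_x, p_y, p_z = 2e-4, 1e-4, 3.7e-5
probs = (1 - p_x - p_y - p_z, p_x, p_y, p_z)
fids = fidelities_from_probs(probs)
recovered = probs_from_fidelities(fids)
print(fids)
print(recovered)
```

The round trip recovers the input probabilities, which is a quick sanity check that the sign pattern in both functions is consistent.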

Logical Qubit Errors Characterized by Syndrome Outcomes

This research introduces a new method for directly measuring how errors affect logical qubits, focusing on regions of quantum circuits that extract syndrome information. The protocol estimates the probability of specific errors occurring, conditioned on the outcomes of syndrome measurements, and is particularly well-suited for systems employing flag-based syndrome extraction. Demonstrations on a trapped-ion device show that this approach improves the accuracy of noise diagnostics, crucial for validating the assumptions underpinning quantum error correction. The findings highlight the importance of directly characterizing noise at the logical level, as current circuit-level noise models appear insufficient to fully explain observed errors. Future work will focus on extending the protocol to larger regions of quantum circuits, incorporating mid-circuit measurement benchmarking, and refining the Bayesian modelling approach to improve the precision of error characterization.

👉 More information

🗞 Characterization of syndrome-dependent logical noise in detector regions

🧠 ArXiv: https://arxiv.org/abs/2508.08188