Predicting how the world changes over time is a core challenge in artificial intelligence, and researchers are now extending this capability to explore ‘what if’ scenarios, rather than simply forecasting the most likely future. Yiqing Shen, Aiza Maksutova, Chenjia Li, and Mathias Unberath, all from Johns Hopkins University, present a new framework that allows AI systems to answer counterfactual questions about videos, such as predicting what would happen if an object were removed from a scene. Their work introduces a method that builds ‘digital twins’, structured text representations of observed scenes, and uses these to reason about the effects of hypothetical interventions. This approach transforms standard video diffusion models into powerful ‘counterfactual world models’, achieving state-of-the-art performance and demonstrating the potential of alternative video representations for more flexible and controllable AI simulations.

Text-to-Video and Learned World Models

This research explores the rapidly evolving field of video generation and understanding. A dominant theme is text-to-video generation, with ongoing efforts to improve video quality, controllability, and realism from text prompts. Significant progress is also being made in developing world models, artificial intelligence systems that learn an internal representation of the environment and can predict future states, often linked to reinforcement learning and planning. Researchers are also addressing challenges in video editing and manipulation, enabling tasks like object removal and style transfer. A growing trend involves using digital twins, virtual representations of real-world environments, to enhance the reasoning and planning capabilities of AI systems.

Large language models (LLMs) are increasingly central to these systems, providing the ability to understand instructions and generate coherent content. Diffusion models have emerged as a leading approach for text-to-video generation because they produce high-quality results. Attention mechanisms are widely used to help models focus on the most relevant parts of the input. Researchers are actively developing new benchmarks and evaluation metrics to assess the performance of video generation models, while also addressing concerns about fairness and bias. There is a growing emphasis on developing scalable and efficient models capable of handling high-resolution videos and complex scenes. Connecting world models to embodied agents is enabling AI systems to interact with the physical world, and some researchers speculate that these models could serve as general-purpose world simulators, potentially contributing to the development of Artificial General Intelligence (AGI). Key contributors to this field include Yiqing Shen and Mathias Unberath, Uriel Singer and his team at Meta AI, Eloi Alonso and François Fleuret, and Vincent Micheli, with landmark achievements including OpenAI’s video generation models, Shen’s work on digital twins, Singer’s Make-A-Video, and Valevski’s diffusion-based world models.

Counterfactual Video Prediction via Digital Twins

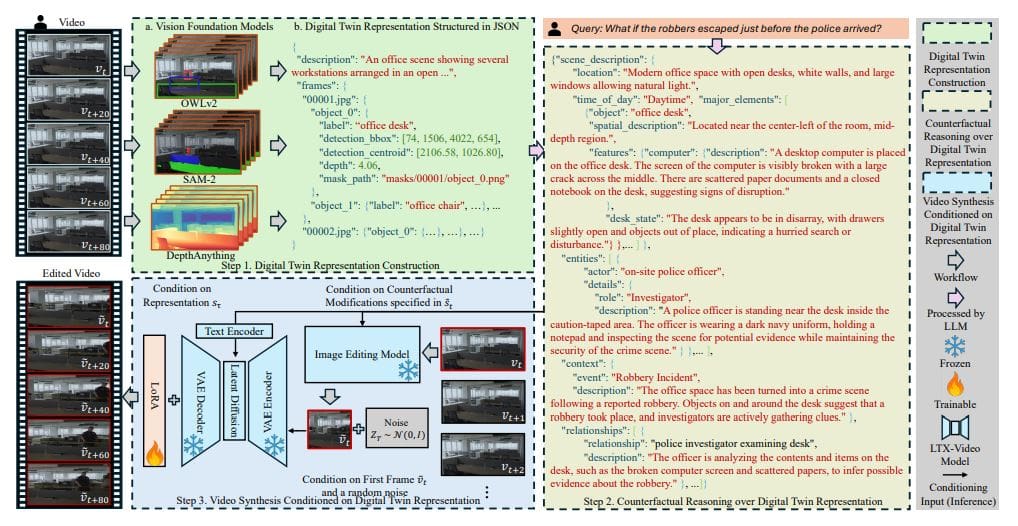

This research introduces a novel counterfactual world model (CWMDT) that predicts how visual scenes evolve under hypothetical changes. Unlike traditional world models that focus on factual observations, CWMDT allows researchers to explore “what if” scenarios, such as predicting the outcome of removing an object from a scene. This is achieved through the introduction of digital twin representations, which explicitly encode objects and their relationships as structured text, enabling targeted interventions impossible with pixel-based approaches. The process begins by transforming each video frame into a digital twin representation using several vision foundation models.

SAM-2 performs object segmentation and tracks objects across frames, while DepthAnything calculates the spatial positioning of each object. OWLv2 assigns semantic categories to detected instances, and Qwen2.5-VL generates natural language descriptions of object attributes. The resulting digital twin representation is serialized in JSON format, shifting the problem from visual reasoning to textual analysis. The core innovation lies in the intervention mapping, where a large language model (LLM) analyzes the given intervention and predicts its temporal evolution within the digital twin representation.
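To make the idea of a JSON-serialized digital twin more concrete, the following is a minimal sketch of what one such record might look like. The class names, field names, and units (`TwinObject`, `FrameTwin`, `bbox`, `depth`, and so on) are assumptions chosen for illustration; the paper's actual schema may differ, and the values here are typed in by hand rather than produced by any vision model.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class TwinObject:
    """One tracked object in one frame (hypothetical schema)."""
    object_id: int      # track ID, of the kind a segmentation/tracking model could assign
    category: str       # semantic label, of the kind an open-vocabulary detector could assign
    description: str    # attribute text, of the kind a vision-language model could produce
    bbox: list[float]   # assumed layout: [x_min, y_min, x_max, y_max] in pixels
    depth: float        # assumed unit-free relative depth


@dataclass
class FrameTwin:
    """All objects observed in a single frame (hypothetical schema)."""
    frame_index: int
    objects: list[TwinObject] = field(default_factory=list)


def serialize_twin(frames: list[FrameTwin]) -> str:
    """Turn a sequence of per-frame twins into a JSON string an LLM could read."""
    return json.dumps([asdict(frame) for frame in frames], indent=2)


# Hand-written example values; a real pipeline would fill these from model outputs.
example = [
    FrameTwin(
        frame_index=0,
        objects=[
            TwinObject(1, "car", "a red sedan driving left", [10.0, 20.0, 110.0, 80.0], 0.42),
            TwinObject(2, "tree", "a tall tree beside the road", [200.0, 5.0, 260.0, 150.0], 0.77),
        ],
    )
]
print(serialize_twin(example))
```

Because the scene is now plain structured text, an intervention such as "remove the car" becomes an edit to this record rather than an edit to pixels.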

The LLM identifies affected objects and relationships, then forecasts how these changes propagate through time, leveraging its embedded world knowledge. Finally, the modified digital twin representation is fed into a video diffusion model, which generates a counterfactual video sequence reflecting the predicted changes. This end-to-end system decomposes the counterfactual world model into three consecutive mappings, enabling precise control over simulated environments. Evaluations on benchmark datasets demonstrate that CWMDT achieves state-of-the-art performance, suggesting that explicit representations like digital twins offer powerful control signals for video forward simulation.
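The three consecutive mappings described above (video to digital twin, digital twin to modified digital twin, modified digital twin to video) can be sketched as a simple function composition. This is only an illustration under stated assumptions: `counterfactual_pipeline`, `remove_object`, and the dictionary layout are hypothetical names, the perception and rendering stages are passed in as plain callables, and the intervention step is a rule-based stand-in rather than the LLM reasoning the paper describes.

```python
from typing import Any, Callable

# Assumed layout: one dict per frame, e.g. {"frame_index": 0, "objects": [{"category": "car"}]}
Twin = list[dict]


def remove_object(twin: Twin, category: str) -> Twin:
    """Rule-based stand-in for the intervention step: drop every object of `category`.

    A real system would ask an LLM to predict how the edit propagates over time;
    this sketch only deletes the object from each frame and returns new frames.
    """
    return [
        {**frame, "objects": [o for o in frame["objects"] if o["category"] != category]}
        for frame in twin
    ]


def counterfactual_pipeline(
    video: Any,
    perceive: Callable[[Any], Twin],    # mapping 1: video -> digital twin
    intervene: Callable[[Twin], Twin],  # mapping 2: digital twin -> modified digital twin
    render: Callable[[Twin], Any],      # mapping 3: modified digital twin -> video
) -> Any:
    """Compose the three mappings in order."""
    twin = perceive(video)
    modified_twin = intervene(twin)
    return render(modified_twin)


# Stub stages so the sketch runs without any vision or diffusion model.
def fake_perceive(video: Any) -> Twin:
    return [{"frame_index": 0, "objects": [{"category": "car"}, {"category": "tree"}]}]


def fake_render(twin: Twin) -> Any:
    return twin  # a real renderer would condition a video diffusion model on the twin


result = counterfactual_pipeline(
    "input.mp4",  # placeholder; never opened by the stubs
    fake_perceive,
    lambda twin: remove_object(twin, "car"),
    fake_render,
)
print(result)
```

Keeping each stage behind its own callable mirrors the decomposition in the text: the intervention logic can be swapped or ablated without touching perception or rendering.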

Digital Twins Enhance Counterfactual Scene Prediction

Researchers have developed a framework, CWMDT, to enhance counterfactual world modeling, enabling systems to predict how scenes would evolve under hypothetical changes. Unlike existing approaches that directly manipulate pixel data, CWMDT constructs detailed digital twins of observed scenes, explicitly encoding objects and their relationships as structured text. This allows for targeted interventions on specific scene properties, a capability lacking in traditional world models. Experiments on the RVEBench benchmark reveal that CWMDT significantly outperforms existing video generative models across all metrics and complexity levels.

Specifically, CWMDT achieves a score of 33.33% on the GroundingDINO metric at the highest complexity level, demonstrating its ability to precisely localize intervention targets. Furthermore, CWMDT achieves 64.06% on the LLM-as-a-Judge metric, indicating a substantial improvement in aligning counterfactual outcomes with the intended intervention semantics. Ablation studies reveal the critical importance of both digital twin representations and LLM-based intervention reasoning for accurate counterfactual modeling, with removing either component leading to a noticeable decrease in performance. The research demonstrates that CWMDT can generate diverse and plausible counterfactual scenarios, such as simulating traffic congestion or a tree falling onto a road, while maintaining realistic physical constraints and temporal consistency.

👉 More information

🗞 Counterfactual World Models via Digital Twin-conditioned Video Diffusion

🧠 ArXiv: https://arxiv.org/abs/2511.17481