The pursuit of more efficient machine learning algorithms continues to drive innovation in computational paradigms, with a growing focus on reducing energy consumption and resource demands. Researchers are exploring methods that move beyond conventional architectures, seeking alternatives that achieve comparable performance with significantly less overhead. Martin F. X. Mauser, Solène Four, and colleagues report the successful implementation of a data re-uploading scheme on a photonic integrated processor, demonstrating high accuracy in image classification tasks. Their work, detailed in the article ‘Experimental data re-uploading with provable enhanced learning capabilities’, showcases a potentially resource-efficient optical implementation and also provides analytical proof of the algorithm’s universality, trainability, and ability to generalise to unseen data, establishing a foundation for more sustainable machine learning techniques.

The pursuit of quantum computing stems from the theoretical possibility of surpassing the limitations of classical computation for specific problems. Algorithms such as Shor’s, designed for factoring large numbers, and Grover’s, for unstructured search, form the foundational basis of the field. Despite these advances, building quantum computers capable of tackling practically relevant problems remains a significant challenge, as current devices are limited in scale and prone to errors. Consequently, research is increasingly focused on identifying near-term applications where quantum computers can offer an advantage, particularly at the intersection of quantum computing and machine learning, where the aim is to use quantum capabilities to enhance existing algorithms or develop entirely new approaches.

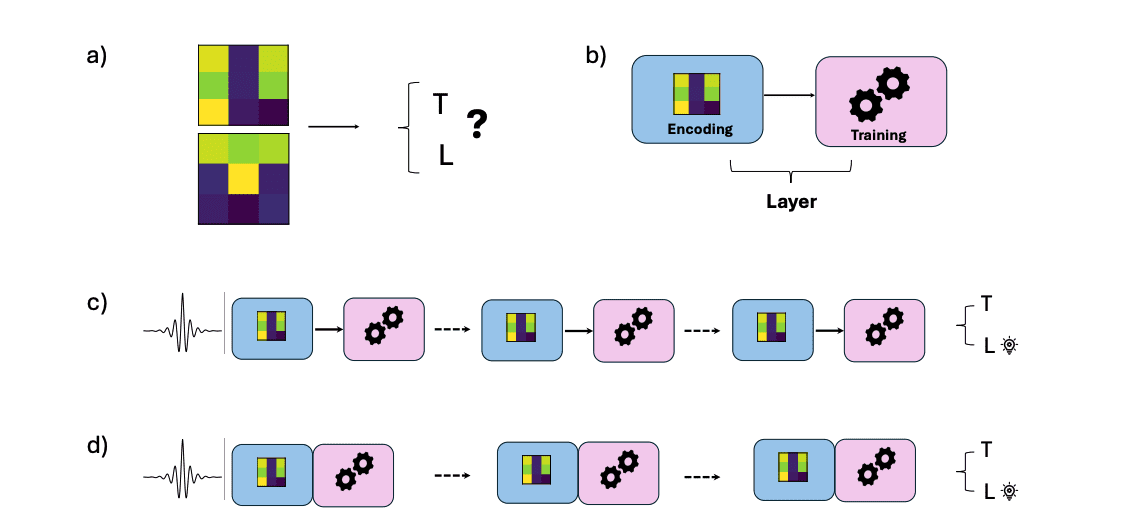

Data re-uploading is one such algorithm. It offers a potentially resource-efficient way to create complex mappings of input data by repeatedly applying quantum operations that encode the data, in contrast to traditional kernel methods, which rely on transforming data into higher-dimensional spaces. The no-cloning theorem, a fundamental principle of quantum mechanics, prevents the perfect copying of unknown quantum states, so encoded data cannot simply be duplicated inside the circuit. Data re-uploading sidesteps this restriction by feeding the classical input data into the quantum circuit again at each layer.

Researchers now present an implementation of data re-uploading on a photonic integrated processor, demonstrating high accuracy in image classification tasks and providing analytical proof that this implementation functions as a universal classifier and an effective learner, capable of generalising to unseen data. This research not only showcases a potentially resource-efficient optical implementation of data re-uploading but also offers new theoretical insights into its trainability and generalisation properties, paving the way for more sophisticated quantum machine learning models.

Much of the interest in quantum approaches to machine learning comes from the prospect of better resource efficiency, particularly lower energy consumption. The data re-uploading scheme studied here runs on a photonic integrated processor that uses Mach-Zehnder interferometers (MZIs) as the fundamental building blocks of the quantum machine learning model. The MZIs manipulate and encode quantum information, forming a network capable of learning complex patterns from data.
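To make the building block concrete, here is a minimal sketch of the 2×2 transfer matrix of a single MZI. It assumes a common textbook convention: two 50:50 beam splitters enclosing an internal phase `theta`, preceded by an external phase `phi`. The convention, the helper names, and the single-photon readout are illustrative assumptions, not the layout of the processor used in the paper.

```python
import cmath
import math


def matmul(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def phase(angle):
    """Phase shifter acting on mode 0 only."""
    return [[cmath.exp(1j * angle), 0], [0, 1]]


S = 1 / math.sqrt(2)
BEAM_SPLITTER = [[S, 1j * S], [1j * S, S]]  # symmetric 50:50 beam splitter


def mzi(theta, phi):
    """External phase phi, beam splitter, internal phase theta, beam splitter."""
    inner = matmul(BEAM_SPLITTER, matmul(phase(theta), BEAM_SPLITTER))
    return matmul(inner, phase(phi))


def output_probabilities(theta, phi):
    """Detection probabilities for a single photon injected into mode 0."""
    u = mzi(theta, phi)
    # The input state (1, 0) picks out the first column of the matrix.
    return [abs(u[0][0]) ** 2, abs(u[1][0]) ** 2]


probabilities = output_probabilities(math.pi / 2, 0.3)
```

Under this convention the internal phase alone sets the splitting ratio: a photon entering mode 0 leaves in mode 0 with probability sin²(θ/2), while the external phase only changes the relative phase between the two outputs.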

The core principle is to encode the classical data into the quantum state repeatedly, interleaved with trainable operations whose parameters are adjusted during training to improve predictive accuracy. A key result of this research is the universality of the model, meaning its ability to approximate any continuous function. The researchers establish this through a rigorous mathematical proof based on Fourier series analysis, a technique that decomposes complex functions into simpler sine and cosine waves.
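The link between repeated encoding and Fourier series can be illustrated numerically. The sketch below is a toy two-mode, single-photon model, assuming the data enters as a phase `x` on one mode between trainable MZI-like blocks; the circuit layout, function names, and parameter values are all hypothetical and are not taken from the paper. It estimates the Fourier coefficients of the model output and shows that frequencies above the number of uploads do not appear.

```python
import cmath
import math


def matmul(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def phase(angle):
    """Phase shifter acting on mode 0 only."""
    return [[cmath.exp(1j * angle), 0], [0, 1]]


S = 1 / math.sqrt(2)
BEAM_SPLITTER = [[S, 1j * S], [1j * S, S]]


def trainable_block(theta, phi):
    """MZI-like block with two tunable phases (assumed convention)."""
    inner = matmul(BEAM_SPLITTER, matmul(phase(theta), BEAM_SPLITTER))
    return matmul(inner, phase(phi))


def model(x, params):
    """Probability of detecting the photon in mode 0.

    params holds one (theta, phi) pair per trainable block. The input x is
    re-uploaded between consecutive blocks: len(params) - 1 uploads in total.
    """
    u = trainable_block(*params[0])
    for theta, phi in params[1:]:
        u = matmul(phase(x), u)                      # re-upload the same input
        u = matmul(trainable_block(theta, phi), u)   # then apply a trainable block
    return abs(u[0][0]) ** 2


def fourier_coefficient(f, k, samples=64):
    """Discrete estimate of the k-th Fourier coefficient of a 2*pi-periodic f."""
    total = 0
    for j in range(samples):
        x = 2 * math.pi * j / samples
        total += f(x) * cmath.exp(-1j * k * x)
    return total / samples


params = [(0.7, 0.2), (1.9, -0.4), (0.3, 1.1)]  # arbitrary values, two uploads
spectrum = [abs(fourier_coefficient(lambda x: model(x, params), k))
            for k in range(5)]
```

With two uploads, the entries of `spectrum` for frequencies 3 and 4 vanish up to rounding error, because each upload can raise the highest frequency of the output by at most one. In this toy model, adding layers widens the accessible Fourier spectrum, which is the intuition behind Fourier-based universality arguments.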

Furthermore, the model’s complexity is quantified using the Vapnik-Chervonenkis (VC) dimension, a measure of its capacity to learn intricate patterns. The researchers establish bounds on the VC dimension, which provide insight into how well the model generalises without overfitting to the training data. Training such a quantum machine learning model requires careful consideration of gradient estimation techniques. As in conventional machine learning, the model’s parameters are adjusted iteratively to minimise the difference between its predictions and the desired outputs. Estimating these gradients on quantum hardware presents unique challenges, however, and researchers are exploring methods such as parameter shift rules and finite difference methods to optimise the model’s parameters efficiently.
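As an illustration of a parameter shift rule, the sketch below applies it to a toy output, the sin²(θ/2) response of a single interferometer phase. The toy function and names are assumptions made for the example. The rule is exact for any output of the form a + b·cos(θ) + c·sin(θ), and it needs only two evaluations of the device at shifted parameter values.

```python
import math


def mode0_probability(theta):
    """Toy model output: sin^2(theta / 2), i.e. (1 - cos(theta)) / 2."""
    return math.sin(theta / 2) ** 2


def parameter_shift(f, theta, shift=math.pi / 2):
    """Derivative of f at theta from two shifted evaluations.

    Exact when f(theta) = a + b*cos(theta) + c*sin(theta), since then
    f(theta + s) - f(theta - s) = 2*sin(s) * f'(theta).
    """
    return (f(theta + shift) - f(theta - shift)) / (2 * math.sin(shift))


theta = 0.8
estimated = parameter_shift(mode0_probability, theta)
analytic = math.sin(theta) / 2  # derivative of sin^2(theta / 2)
```

Unlike a finite difference, the shift here is large (π/2 by default), so on real hardware the two evaluations differ by a macroscopic amount and are less sensitive to measurement noise than a difference taken over a tiny step.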

Numerical simulations, conducted using software libraries such as TensorFlow and Strawberry Fields, play a crucial role in validating these techniques and optimising the training process before implementation on actual quantum hardware. The successful implementation of this data re-uploading scheme on a photonic integrated processor represents a significant step towards realising practical quantum machine learning applications, combining theoretical analysis with experimental validation and demonstrating the potential of this approach to deliver resource-efficient algorithms capable of tackling complex classification tasks. The insights gained from this work lay the groundwork for developing more sophisticated quantum machine learning models and integrating them into broader computational frameworks, potentially revolutionising fields ranging from image recognition to medical diagnostics.

The findings indicate that increasing the number of layers within the model enhances its capacity, allowing it to represent more complex functions, while the model’s connection to Fourier series reinforces its theoretical underpinnings. Training relies on software tools including TensorFlow and Strawberry Fields, with the Adam optimiser and a Linear Discriminant Analysis (LDA) loss function proving effective in optimising the model’s performance. On-chip training experiments compare forward, backward, and central finite differences as gradient estimation techniques.
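The three finite-difference schemes differ in where they evaluate the function and in how quickly their error shrinks with the step size. The sketch below compares them on a toy sin²(θ/2) output; the function, the step size `h`, and the evaluation point are illustrative assumptions, not values from the experiment.

```python
import math


def forward_difference(f, x, h):
    """(f(x + h) - f(x)) / h, truncation error of order h."""
    return (f(x + h) - f(x)) / h


def backward_difference(f, x, h):
    """(f(x) - f(x - h)) / h, truncation error of order h."""
    return (f(x) - f(x - h)) / h


def central_difference(f, x, h):
    """(f(x + h) - f(x - h)) / (2h), truncation error of order h squared."""
    return (f(x + h) - f(x - h)) / (2 * h)


def output(theta):
    """Toy model output: sin^2(theta / 2)."""
    return math.sin(theta / 2) ** 2


theta, h = 1.0, 1e-2
exact = math.sin(theta) / 2  # analytic derivative of the toy output

estimators = {
    "forward": forward_difference,
    "backward": backward_difference,
    "central": central_difference,
}
errors = {name: abs(estimate(output, theta, h) - exact)
          for name, estimate in estimators.items()}
```

In this noiseless setting the central difference is the most accurate of the three. The trade-off is cost: forward and backward differences can reuse the unshifted evaluation across all parameters, while the central difference needs two new evaluations per parameter. On hardware, measurement noise also limits how small `h` can usefully be.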

Future work should focus on expanding the scope of image classification tasks and exploring the model’s performance on more diverse datasets. Reducing the number of qubits required for equivalent performance remains a key area for optimisation. Comparative studies against other quantum machine learning models, such as variational quantum circuits, will further clarify the advantages and disadvantages of this photonic approach, while extending the model’s application to other machine learning problems, including regression and clustering, presents a promising avenue for research. Integrating this data re-uploading scheme as a subroutine within more complex quantum algorithms could unlock further resource efficiencies, and experimental validation with increasingly sophisticated photonic integrated circuits is essential to demonstrate the practical viability and scalability of this approach.

👉 More information

🗞 Experimental data re-uploading with provable enhanced learning capabilities

🧠 DOI: https://doi.org/10.48550/arXiv.2507.05120