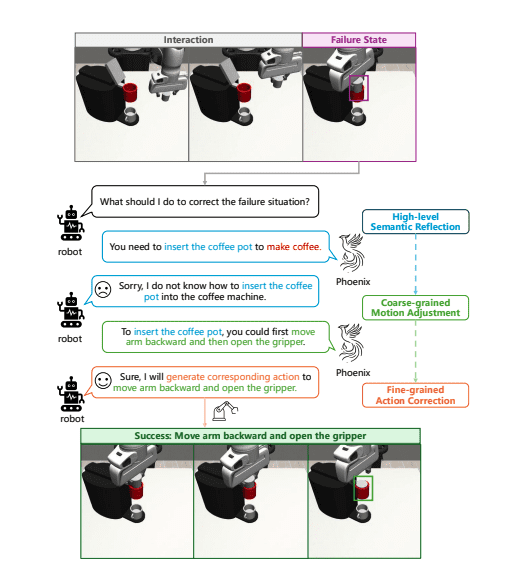

On April 20, 2025, researchers Wenke Xia, Ruoxuan Feng, Dong Wang, and Di Hu introduced Phoenix, a motion-based self-reflection framework that lets robots make fine-grained action corrections by linking high-level semantic reflection with low-level motion adjustment. The framework uses Multimodal Large Language Models (MLLMs) to adjust coarse-grained motion instructions and relies on visual observations for high-frequency corrections. In both simulation and real-world experiments, it showed superior generalization across dynamic environments.

Phoenix addresses a specific gap: MLLMs can reflect on a failure in semantic terms, but translating that reflection into a fine-grained corrective action is difficult. The framework uses motion instructions as the bridge between the two levels. A dual-process mechanism makes coarse adjustments to the motion instructions, and a multi-task diffusion policy turns them into precise corrections. By shifting the generalization burden onto the MLLM-driven motion adjustment model, Phoenix supports robust manipulation across diverse tasks. Experiments in simulation and real-world settings validate its superior performance.
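
The division of labor described above, with a slow coarse adjustment and a fast observation-driven correction, can be illustrated with a toy two-rate loop. This is a minimal sketch under stated assumptions, not Phoenix's implementation: `coarse_reflect` is a hypothetical rule-based stand-in for the MLLM that adjusts motion instructions, `fine_action` is a hypothetical stand-in for the motion-conditioned diffusion policy, and the 2-D point "robot", `MotionInstruction`, and `replan_every` are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionInstruction:
    """Hypothetical coarse instruction such as 'move +x' or 'move -y'."""
    axis: int    # 0 = x, 1 = y
    sign: float  # +1.0 or -1.0


def coarse_reflect(pos, target):
    """Slow loop (stand-in for the MLLM, assumption): reflect on the remaining
    error and instruct motion along the axis where it is largest."""
    errors = [target[i] - pos[i] for i in range(2)]
    axis = 0 if abs(errors[0]) >= abs(errors[1]) else 1
    return MotionInstruction(axis, 1.0 if errors[axis] >= 0 else -1.0)


def fine_action(pos, target, instr, max_step=0.05, gain=0.1):
    """Fast loop (stand-in for the motion-conditioned policy, assumption):
    follow the instructed direction, using the current observation to avoid
    overshooting and to correct drift on the other axis."""
    errors = [target[i] - pos[i] for i in range(2)]
    action = [0.0, 0.0]
    remaining = errors[instr.axis] * instr.sign  # distance left along the instruction
    action[instr.axis] = instr.sign * min(max_step, max(remaining, 0.0))
    other = 1 - instr.axis
    action[other] = gain * errors[other]  # small proportional correction
    return action


def run_episode(start, target, steps=40, replan_every=5):
    pos = list(start)
    instructions = []
    instr = None
    for t in range(steps):
        if t % replan_every == 0:  # low-frequency coarse adjustment
            instr = coarse_reflect(pos, target)
            instructions.append(instr)
        action = fine_action(pos, target, instr)  # high-frequency correction
        pos = [p + a for p, a in zip(pos, action)]
    return pos, instructions


final_pos, instructions = run_episode((0.0, 0.0), (0.3, -0.2))
print(len(instructions), math.dist(final_pos, (0.3, -0.2)))
```

In this toy, the coarse loop only picks a direction every few steps, while the fast loop decides how far to move from the latest observation. That loosely mirrors the idea of letting the high-level model handle generalization and the low-level policy handle precision.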

Recent robotics research has explored integrating large language models (LLMs) into tasks that require precise manipulation. In this line of work, LLMs handle both perception and reasoning, helping robots carry out complex actions more reliably.

The method centers on a motion prediction module that uses a multimodal LLM to generate motion instructions from camera images. The module turns the observed scene into actionable commands for tasks such as placing an object or pressing a button. To improve performance, a human-in-the-loop process supplies corrections for failed attempts, gradually improving the robot's adaptability and precision.
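
The correction loop in this paragraph can be pictured as a data-collection step. This is a hypothetical illustration, not code from the paper: the `Attempt` record, the string-valued instructions, and the `ask_human` callback are all assumptions. The sketch shows only the basic idea that failed attempts are routed to a person, whose corrected instructions become (observation, instruction) pairs that could then be used to further fine-tune the motion prediction module.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Attempt:
    """One execution attempt (hypothetical record format)."""
    observation: str            # stand-in for a camera frame, e.g. a scene ID
    predicted_instruction: str  # instruction proposed by the prediction module
    succeeded: bool


def build_correction_set(
    attempts: List[Attempt],
    ask_human: Callable[[Attempt], Optional[str]],
) -> Tuple[List[Tuple[str, str]], int]:
    """Route failed attempts to a human and keep their corrected instructions.

    Returns (observation, corrected_instruction) pairs plus the number of
    human queries made.
    """
    pairs: List[Tuple[str, str]] = []
    queries = 0
    for attempt in attempts:
        if attempt.succeeded:
            continue  # successful attempts need no correction
        queries += 1
        fix = ask_human(attempt)
        # Skip if the human declined or repeated the original instruction.
        if fix is not None and fix != attempt.predicted_instruction:
            pairs.append((attempt.observation, fix))
    return pairs, queries


# Usage: a dictionary stands in for the human operator.
attempts = [
    Attempt("cup_left_of_plate", "move forward", True),
    Attempt("button_partly_occluded", "move down", False),
    Attempt("drawer_handle_high", "move left", False),
]
human_fixes = {"button_partly_occluded": None, "drawer_handle_high": "move up"}
pairs, queries = build_correction_set(attempts, lambda a: human_fixes.get(a.observation))
print(pairs, queries)
```

Counting `queries` makes the cost of this design visible: in the sketch, every failure requires one human interaction, whether or not it yields a usable correction.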

Real-world tests with an xArm robot and a fine-tuned TinyLLaVA model achieved high success rates. Human feedback proved crucial, allowing the system to keep improving beyond its initial command generation. In this dual-module design, one module generates instructions and the other adjusts them based on feedback, which helps the robot cope with real-world unpredictability.

The method shows promise, but scalability remains an open question: relying on a human correction for every failure limits autonomy, and future research aims to automate parts of this feedback process. Overall, combining LLMs with human feedback is a notable step forward for robotic manipulation. It improves task success rates and points toward more autonomous and versatile robotic applications.

👉 More information

🗞 Phoenix: A Motion-based Self-Reflection Framework for Fine-grained Robotic Action Correction

🧠 DOI: https://doi.org/10.48550/arXiv.2504.14588