Trillion-parameter artificial intelligence is about to expand beyond specialized labs and into the hands of more researchers and businesses. Today, Perplexity announced a breakthrough that enables trillion-parameter AI models, among the largest and most capable currently available, to be deployed on widely accessible cloud platforms such as AWS. Previously, these models were largely confined to specialized labs by their immense hardware requirements and their incompatibility with standard cloud infrastructure. The work opens this class of model to a broader audience, promising faster, more capable applications and quicker progress across the field, and Perplexity is making the technology freely available to the research community.

Overcoming Barriers to Trillion-Parameter Models

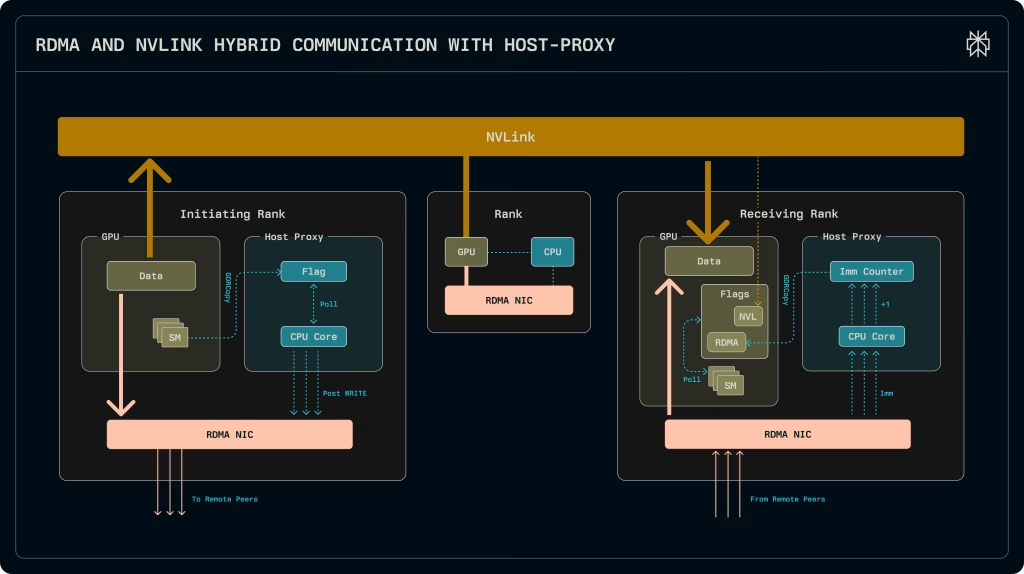

A significant barrier to wider access to cutting-edge AI has been the sheer scale of trillion-parameter models, which need computing power coordinated across multiple machines, something previously practical only on specialized hardware. Perplexity has broken this barrier by achieving viable performance on AWS Elastic Fabric Adapter (EFA), which had not been done before. Solutions already existed for high-end NVIDIA networking, but they did not translate to common cloud platforms like AWS, effectively locking advanced models within research labs. Perplexity’s newly developed kernels optimize communication between machines, allowing models like the trillion-parameter Kimi-K2 to be deployed and served at practical speeds on AWS. That makes advanced AI capabilities available for real-world applications.
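To make the scale concrete, here is a rough back-of-the-envelope sketch of why a trillion-parameter model cannot sit on a single machine. The figures of 80 GB per GPU and 8 GPUs per machine are illustrative assumptions, not numbers from Perplexity’s announcement, and the estimate counts model weights only, ignoring activations and caches.

```python
import math

def nodes_needed(num_params, bytes_per_param, gpu_mem_gb=80, gpus_per_node=8):
    """Lower bound on machines needed just to hold the model weights.

    gpu_mem_gb and gpus_per_node are assumed, illustrative values.
    """
    weight_bytes = num_params * bytes_per_param
    node_bytes = gpu_mem_gb * 10**9 * gpus_per_node
    return math.ceil(weight_bytes / node_bytes)

# One trillion parameters stored at 8-bit and 16-bit precision.
for label, nbytes in [("8-bit", 1), ("16-bit", 2)]:
    print(label, "weights need at least", nodes_needed(10**12, nbytes), "machines")
```

Under these assumptions the weights alone span more than one machine at either precision, so the speed of the network between machines directly limits how fast the model can be served.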

Perplexity’s Solution and Future Development

With this work, Perplexity has become the first to deploy trillion-parameter models such as Kimi-K2 in a way that is portable across cloud platforms, with viable performance on AWS Elastic Fabric Adapter (EFA). Until now, infrastructure limitations and high costs kept these models largely inside research labs. Perplexity’s kernels outperform existing communication solutions for Mixture-of-Experts (MoE) models, an architecture in which each token is routed to a small subset of expert sub-networks, and they reach practical deployment speeds even without specialized NVIDIA networking hardware. As a result, a wider range of researchers and developers can build on advanced models without reinventing complex infrastructure. Perplexity expects further optimizations from continued collaboration with AWS.
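The announcement does not describe how the kernels work, but a toy simulation can suggest why communication between machines dominates MoE serving. The sketch below is not Perplexity’s method: it uses a uniform random router as a stand-in for a learned one, and the expert count, top-k value, and machine count are hypothetical. It measures how often a token must be sent to an expert hosted on a different machine.

```python
import random

def remote_dispatch_fraction(num_tokens, num_experts, top_k, num_nodes, seed=0):
    """Fraction of token-to-expert assignments that cross machines.

    Experts are split evenly across machines, tokens start on machines in
    round-robin order, and a random router stands in for a trained one.
    """
    if num_experts % num_nodes != 0:
        raise ValueError("num_experts must be divisible by num_nodes")
    rng = random.Random(seed)
    experts_per_node = num_experts // num_nodes
    remote = 0
    for token in range(num_tokens):
        home_node = token % num_nodes
        # Each token is sent to top_k distinct experts.
        chosen = rng.sample(range(num_experts), top_k)
        remote += sum(1 for e in chosen if e // experts_per_node != home_node)
    return remote / (num_tokens * top_k)

# Hypothetical configuration: 64 experts, 8 experts per token, 4 machines.
print(remote_dispatch_fraction(10_000, num_experts=64, top_k=8, num_nodes=4))
```

In this toy setup most assignments leave the token’s home machine, and the share grows as experts are spread over more machines, which is why the performance of the inter-machine network matters so much for models of this kind.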