Researchers are increasingly focused on optimising Retrieval-Augmented Generation (RAG) systems for dependable question answering, yet the role of document chunking remains underexplored. Sofia Bennani from École polytechnique and Charles Moslonka from Artefact Research Center, MICS, CentraleSupélec, Université Paris-Saclay, present a systematic analysis of chunking strategies, covering token, sentence, semantic, and code-based approaches, to determine their impact on RAG reliability. Their end-to-end evaluation, which uses a standard industrial setup with SPLADE and Mistral-8B on the Natural Questions dataset, yields actionable guidance for cost-effective deployment and challenges common heuristics, showing for example that overlap offers no measurable benefit. The work gives developers concrete guidance on chunk size and context length: it identifies a ‘context cliff’ beyond which quality diminishes and shows that the optimal context length depends on the goal.

Experiments show a distinct “context cliff”: quality diminishes once the context exceeds roughly 2,500 tokens, which makes context length a parameter worth tuning. The researchers used a standard industrial setup, with SPLADE for retrieval and Mistral-8B as the generator, so that the findings apply directly to real-world deployments. Because the best context length depends on the metric being targeted, configurations can be tailored to the requirements of each application.

The study tested four chunking methods across a grid of chunk sizes, with overlaps of 0% or 20%, and retrieved into context budgets ranging from 500 to 10,000 tokens. Metrics included BERTScore for semantic quality, Exact Match for factual accuracy, and None Ratio for abstention rate, which together give a rounded view of reliability. The findings consistently show that adding overlap does not improve performance but does increase indexing costs, so the authors recommend dropping it. The research also identifies a marked performance drop-off beyond 2,500 tokens, termed the “context cliff”, suggesting that excessive context dilutes the signal. Finally, the team shows that context size should be tuned to the specific goal: smaller contexts favour semantic quality, larger contexts improve factual accuracy, and context size also influences how often the model abstains when the context is insufficient. Together these findings provide compact, deployable guidance for building robust and efficient RAG systems for knowledge access and user support.
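To see why overlap raises indexing cost, consider a minimal sketch of fixed-size token chunking. The function name `chunk_tokens` and the sliding-window scheme are illustrative assumptions, not the authors' implementation; the point is only that a 20% overlap shortens the stride to 80% of the chunk size, so the same document produces more chunks to index.

```python
def chunk_tokens(tokens, size, overlap_ratio=0.0):
    """Split a token list into windows of `size` tokens.

    `overlap_ratio` is the fraction of each chunk repeated at the start of
    the next one (hypothetical parameterisation, assumed for illustration).
    """
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0.0 <= overlap_ratio < 1.0:
        raise ValueError("overlap_ratio must be in [0, 1)")
    # The window advances by the non-overlapping part of each chunk.
    stride = max(1, int(round(size * (1.0 - overlap_ratio))))
    chunks = []
    for start in range(0, len(tokens), stride):
        chunks.append(tokens[start:start + size])
        if start + size >= len(tokens):
            break  # the last window already reaches the end of the document
    return chunks


# Toy document of 1,000 placeholder tokens.
tokens = [f"t{i}" for i in range(1000)]
no_overlap = chunk_tokens(tokens, size=100, overlap_ratio=0.0)
with_overlap = chunk_tokens(tokens, size=100, overlap_ratio=0.2)
print(len(no_overlap), len(with_overlap))
```

On this toy input the overlapping variant indexes more chunks for the same text, which is the extra cost the study found to bring no measurable gain.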

RAG Pipeline Chunking with SPLADE and Mistral-8B

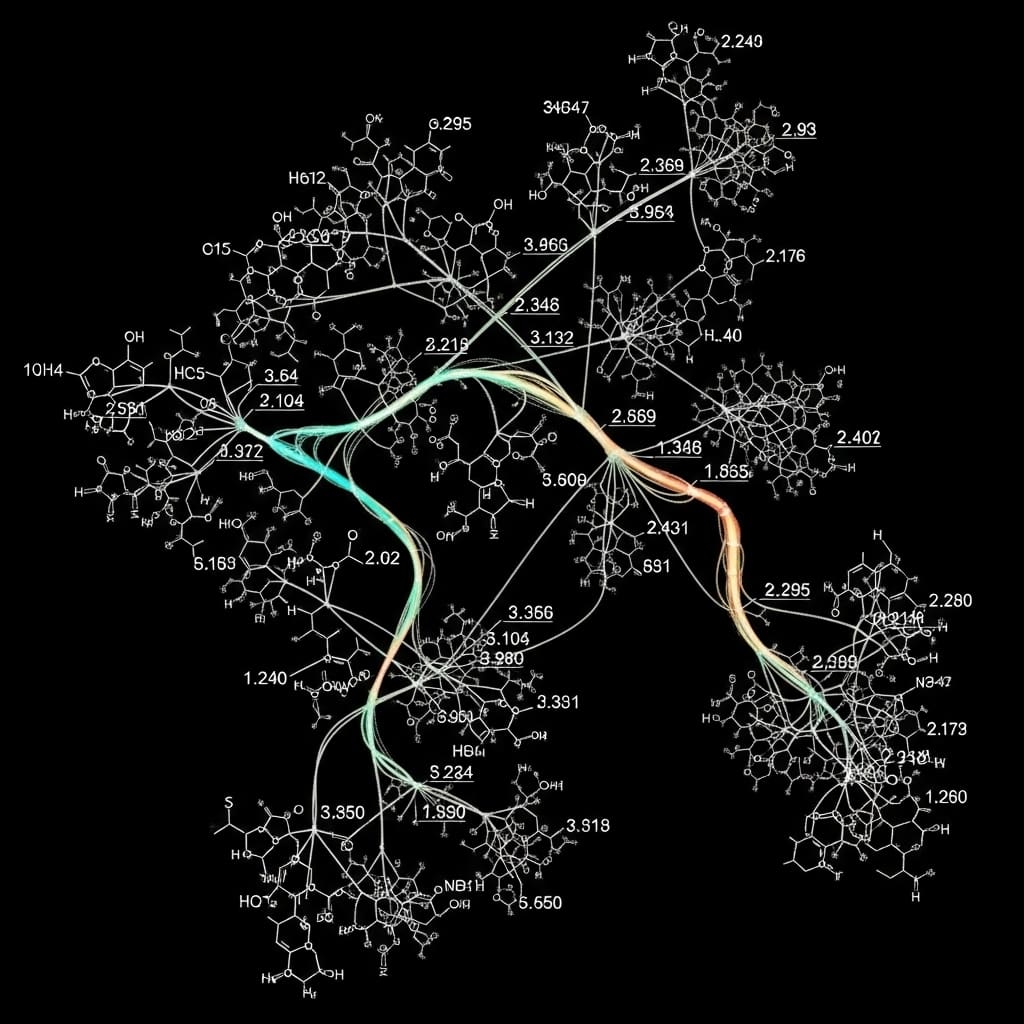

The research team built a two-stage RAG pipeline, a standard industrial setup that begins with sparse retrieval using SPLADE and ends with text generation by the Mistral-8B model, to assess how chunking choices affect question answering performance. Experiments used the Natural Questions short-answer dataset with English Wikipedia as the underlying corpus; documents were chunked and indexed according to each strategy before retrieval. To ensure a fair comparison, the study adopted a “fill-to-budget” policy: ranked chunks are appended to the context, counted with the generator’s tokenizer, until the token budget C (context length) is reached, which avoids the bias a fixed top-K would introduce across chunk sizes and methods. Semantic chunking merged adjacent sentences whose cosine similarity (computed with all-MiniLM-L12-v2) exceeded 0.5, up to a target size S. Code chunking applied structure-aware parsing designed for source code, although the dataset was primarily text-centric.
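The two policies described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's code: the function names are hypothetical, a whitespace split stands in for the generator's tokenizer, a word-overlap (Jaccard) score stands in for embedding cosine similarity, filling stops at the first chunk that would overflow the budget, and each sentence is compared with the last sentence already in the chunk. The article does not specify these details.

```python
def count_tokens(text):
    # Stand-in for the generator's tokenizer (assumption: whitespace split).
    return len(text.split())


def fill_to_budget(ranked_chunks, budget, count=count_tokens):
    """Append chunks in rank order until the next one would exceed `budget`."""
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = count(chunk)
        if used + cost > budget:
            break  # assumed policy: stop rather than truncate or skip ahead
        selected.append(chunk)
        used += cost
    return selected


def toy_similarity(a, b):
    # Stand-in for embedding cosine similarity (assumption: Jaccard on words).
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0


def merge_semantic(sentences, target_size, threshold=0.5,
                   similarity=toy_similarity, count=count_tokens):
    """Merge adjacent sentences whose similarity exceeds `threshold`,
    as long as the merged chunk stays within `target_size` tokens."""
    chunks, current = [], []
    for sent in sentences:
        fits = count(" ".join(current + [sent])) <= target_size
        if current and fits and similarity(current[-1], sent) > threshold:
            current.append(sent)
        else:
            if current:
                chunks.append(" ".join(current))
            current = [sent]
    if current:
        chunks.append(" ".join(current))
    return chunks


ranked = ["a b c", "d e", "f g h i", "j"]
context = fill_to_budget(ranked, budget=5)

sentences = ["the cats chase mice", "the cats chase birds", "stocks fell sharply"]
merged = merge_semantic(sentences, target_size=10)
```

Because the budget is expressed in tokens rather than in number of chunks, small and large chunk sizes compete on equal footing: many small chunks or a few large ones fill the same context length.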

The researchers varied chunk size S from 50 to 500 in increments of 50 and tested overlaps O of 0% or 20%, alongside context budgets C of {500, 1k, 2.5k, 5k, 10k} tokens, creating a comprehensive parameter grid. The generation phase used Mistral-8B-Instruct-2410 with low-temperature decoding (T=0.1); the model was prompted to answer strictly from the context and to output “NONE” if the information was insufficient, with output length capped to keep answers concise. Performance was quantified using BERTScore for semantic quality, Exact Match (EM) with standard normalisation, and None Ratio, the proportion of queries resulting in “NONE” outputs. 95% bootstrap confidence intervals were calculated to determine which differences were statistically significant.
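Two of these metrics and the confidence intervals are simple enough to sketch in plain Python. The version below is illustrative only: it assumes SQuAD-style answer normalisation (lowercasing, stripping punctuation and English articles), which the article refers to only as “standard normalisation”, and a percentile bootstrap over per-query scores. All function names are hypothetical. BERTScore is omitted because it requires a pretrained model.

```python
import random
import re
import string


def normalize_answer(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold_answers):
    """1.0 if the normalised prediction equals any normalised gold answer."""
    pred = normalize_answer(prediction)
    return float(any(pred == normalize_answer(g) for g in gold_answers))


def none_ratio(predictions):
    """Share of predictions where the model abstained with "NONE"."""
    return sum(p.strip().upper() == "NONE" for p in predictions) / len(predictions)


def bootstrap_ci(scores, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `scores`."""
    rng = random.Random(seed)
    n = len(scores)
    # Resample the per-query scores with replacement and record each mean.
    means = sorted(sum(rng.choices(scores, k=n)) / n for _ in range(n_resamples))
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


predictions = ["The Eiffel Tower.", "NONE", "Rome", " none "]
golds = [["Eiffel Tower"], ["Paris"], ["Paris"], ["Berlin"]]
em_scores = [exact_match(p, g) for p, g in zip(predictions, golds)]
low, high = bootstrap_ci(em_scores)
```

Two configurations can then be compared by checking whether their intervals overlap, which is one common way to read such differences as statistically meaningful.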

Sentence chunking optimises RAG performance and cost effectively

Experiments used a standard industrial setup combining SPLADE and a Mistral-8B model to assess performance under diverse conditions. Results show that overlap provides no measurable benefit while increasing indexing costs, a clear directive for efficient deployment. The data also reveal a distinct “context cliff”, with quality declining beyond a context length of around 2,500 tokens. By measuring the effect of each of these variables on accuracy and efficiency, the study provides actionable guidance for real-world applications.

The tests indicate that avoiding overlap streamlines indexing, and that defaulting to sentence chunking offers a good balance between cost and performance. The researchers found that tuning context length to the task, whether it prioritises semantic quality or exact matching, is crucial for RAG system effectiveness. Keeping the context at or below the 2,500-token threshold in particular improves robustness. The result is compact, deployable guidance for building reliable and cost-effective RAG agents. The work, funded by BPIFrance as part of the ArGiMi project, has already improved the robustness of client-facing agents in practice. The researchers used a low temperature of 0.1 to minimise generation variance, and report that key trends persisted even under bootstrap resampling with a value of 0.4.

👉 More information

🗞 A Systematic Analysis of Chunking Strategies for Reliable Question Answering

🧠 ArXiv: https://arxiv.org/abs/2601.14123