Researchers are tackling a significant problem hindering progress in Diffusion-Augmented Interactive Text-to-Image Retrieval (DAI-TIR): the tendency of diffusion models to introduce misleading visual ‘hallucinations’ that degrade performance. Zhuocheng Zhang from Hunan University, Kangheng Liang and Paul Henderson from the University of Glasgow, along with Guanxuan Li, Richard McCreadie and Zijun Long, demonstrate empirically that these inaccuracies can substantially reduce retrieval effectiveness. Their new framework, Diffusion-aware Multi-view Contrastive Learning (DMCL), is a training approach that jointly optimises representations of query intent and target images, filtering out hallucinated cues and improving the alignment of textual and visual information. DMCL consistently improves multi-round retrieval accuracy across five benchmarks, with gains of up to 7.37% over existing methods, paving the way for more reliable DAI-TIR systems.

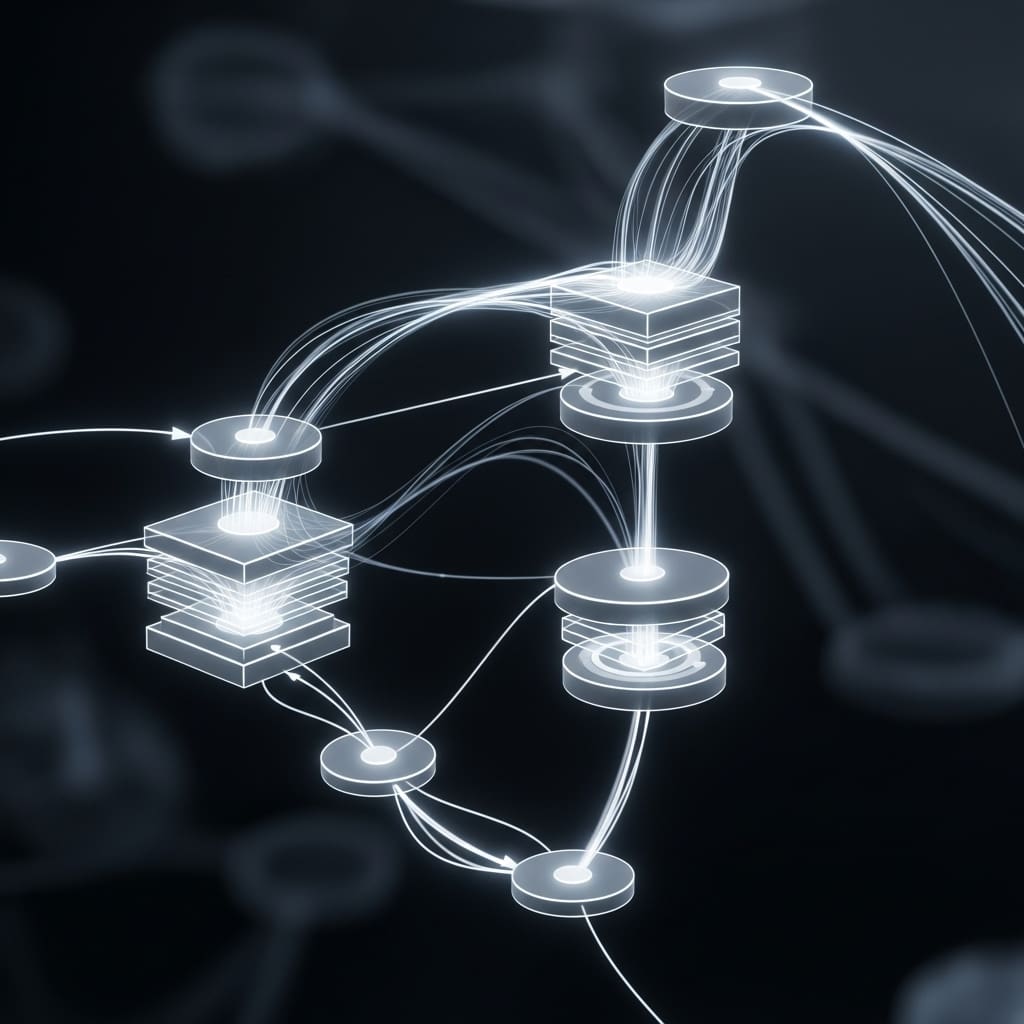

Current DAI-TIR methods use diffusion models to generate additional views of a user’s intent, which is expressed as a text query. However, the team found that these generated images can contain unintended visual cues, termed “hallucinations”, that conflict with the original text query. The researchers show empirically that these hallucinated cues significantly reduce the accuracy of DAI-TIR systems. Training with DMCL yields an encoder that acts as a semantic filter: it maps hallucinated cues into a null space, making the representation of the user’s intended search more robust. Attention visualisations and geometric analyses of the embedding space support this filtering behaviour, indicating that the model learns to disregard irrelevant visual information. The work thus provides a robust framework for handling diffusion-generated content in image retrieval.
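The phrase “mapping hallucinated cues into a null space” has a simple linear-algebra reading. The toy sketch below is an illustration only, not DMCL’s encoder: it uses a hypothetical 2x3 linear map `W` whose null space contains a made-up “hallucination” direction, so adding any amount of that cue to the input leaves the embedding unchanged. In DMCL the filtering is learned during training rather than hard-coded, so treat this purely as intuition.

```python
import numpy as np

# Hypothetical toy encoder: a linear map from 3-d features to 2-d embeddings.
# Feature axis 2 stands in for a hallucinated cue; W ignores it entirely.
W = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])


def null_space_basis(matrix, tol=1e-10):
    """Return an orthonormal basis (as rows) for the null space of `matrix`."""
    _, singular_values, vt = np.linalg.svd(matrix)
    rank = int(np.sum(singular_values > tol))
    return vt[rank:]


intended = np.array([0.8, 0.6, 0.0])       # features the user actually asked for
hallucination = np.array([0.0, 0.0, 1.0])  # spurious cue added by the generator

basis = null_space_basis(W)
clean_emb = W @ intended
noisy_emb = W @ (intended + 2.5 * hallucination)

print(basis)                              # one null-space direction, +/-[0, 0, 1]
print(np.allclose(clean_emb, noisy_emb))  # True: the cue is filtered out
```

A learned encoder can only approximate this behaviour, since real hallucinations are not confined to a single known feature axis.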

DMCL casts DAI-TIR as the joint optimisation of two representations: the user’s query intent and the target image. To do so, it introduces two complementary training objectives: a Multi-View Query-Target Alignment objective and a Text-Diffusion Consistency objective. The alignment objective encourages the model to emphasise cues shared across views while filtering out inconsistencies; the consistency objective increases agreement between the text and diffusion queries, reducing sensitivity to mismatches and generative hallucinations. Together, these objectives produce a shared embedding space that reliably supports DAI-TIR, even as user intent shifts over multiple rounds of interaction.
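The two objectives can be pictured with standard InfoNCE-style contrastive losses. This is a minimal NumPy sketch under stated assumptions, not the authors’ implementation: the function names, the temperature `tau`, the weight `lam`, the use of an additively fused query as a third view, and the way the terms are combined are all hypothetical. Row i of every input array belongs to the same training example.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def info_nce(anchors, positives, tau=0.07):
    """InfoNCE loss: row i of `anchors` should match row i of `positives`;
    every other row in the batch serves as a negative."""
    logits = l2_normalize(anchors) @ l2_normalize(positives).T / tau
    # Numerically stable row-wise log-softmax.
    row_max = logits.max(axis=1, keepdims=True)
    log_norm = row_max + np.log(np.exp(logits - row_max).sum(axis=1, keepdims=True))
    log_probs = logits - log_norm
    return float(-np.mean(np.diag(log_probs)))


def multi_view_alignment_loss(text_q, diff_q, targets, tau=0.07):
    """Hypothetical query-target alignment: contrast every query view (text,
    diffusion, and their additive fusion) against the batch of target images."""
    fused_q = l2_normalize(text_q) + l2_normalize(diff_q)
    views = (text_q, diff_q, fused_q)
    return sum(info_nce(v, targets, tau) for v in views) / len(views)


def text_diffusion_consistency_loss(text_q, diff_q, tau=0.07):
    """Hypothetical consistency term: symmetric InfoNCE between the text view
    and the diffusion view of the same query."""
    return 0.5 * (info_nce(text_q, diff_q, tau) + info_nce(diff_q, text_q, tau))


def total_loss(text_q, diff_q, targets, lam=0.5, tau=0.07):
    """Weighted sum of the two objectives; `lam` is an assumed weight."""
    return (multi_view_alignment_loss(text_q, diff_q, targets, tau)
            + lam * text_diffusion_consistency_loss(text_q, diff_q, tau))


rng = np.random.default_rng(0)
targets = l2_normalize(rng.normal(size=(4, 8)))
text_q = targets + 0.1 * rng.normal(size=(4, 8))  # queries close to their targets
diff_q = targets + 0.1 * rng.normal(size=(4, 8))
print(total_loss(text_q, diff_q, targets))
```

Because every other example in the batch acts as a negative, a diffusion view whose hallucinated details pull it towards the wrong target is penalised by both terms.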

The attention visualisations and geometric embedding-space analyses also show how DMCL reshapes the embedding space, strengthening cross-modal alignment and suppressing conflicting signals. At the core of the method are its semantic-consistency and diffusion-aware contrastive objectives, which align the textual and diffusion-based query views while suppressing hallucinated signals. The result is a robust training framework that makes interactive image retrieval more accurate and reliable.
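One simple way to probe this kind of embedding geometry is to compare how strongly each text query agrees with its own diffusion view against how strongly it agrees with other queries’ views. The sketch below is a hypothetical diagnostic of that kind, not the analysis reported in the paper; the function names and the matched/mismatched cosine summary are assumptions. A consistency objective like the one DMCL uses should, in principle, widen the gap between the two numbers.

```python
import numpy as np


def cosine_matrix(a, b):
    """Pairwise cosine similarities between the rows of `a` and `b`."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def view_agreement(text_emb, diff_emb):
    """Return (mean matched cosine, mean mismatched cosine) between text-query
    embeddings and diffusion-view embeddings; row i of each is the same query."""
    sims = cosine_matrix(text_emb, diff_emb)
    n = sims.shape[0]
    matched = float(np.trace(sims) / n)
    off_diag_mask = ~np.eye(n, dtype=bool)
    mismatched = float(sims[off_diag_mask].mean())
    return matched, mismatched


rng = np.random.default_rng(1)
text_emb = rng.normal(size=(5, 16))
diff_emb = text_emb + 0.3 * rng.normal(size=(5, 16))  # views that mostly agree
matched, mismatched = view_agreement(text_emb, diff_emb)
print(f"matched={matched:.3f} mismatched={mismatched:.3f} gap={matched - mismatched:.3f}")
```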

The authors acknowledge that their current implementation uses a simple additive fusion scheme for query integration, and future research will focus on exploring more sophisticated fusion techniques to further enhance retrieval performance. A large-scale DAI-TIR training dataset has also been released to facilitate further investigation in this area. Ultimately, DMCL represents a significant step towards building more reliable and accurate text-to-image retrieval systems by mitigating the impact of hallucinated visual cues.
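The simple additive fusion scheme mentioned above can be pictured as summing the normalised text-query and diffusion-query embeddings and ranking gallery images by cosine similarity to the result. The sketch below assumes exactly that, plus a Hits@K check for each interaction round; the function names, the choice of K and the toy gallery are hypothetical, and the paper’s benchmarks may define multi-round accuracy differently.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale vectors (rows, or a single vector) to unit length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def fuse_additive(text_emb, diff_emb):
    """Additive fusion: sum the unit-norm text and diffusion query embeddings."""
    return l2_normalize(l2_normalize(text_emb) + l2_normalize(diff_emb))


def rank_of_target(query, gallery, target_idx):
    """1-based rank of the target image when the gallery is sorted by
    cosine similarity to the query (most similar first)."""
    scores = l2_normalize(gallery) @ l2_normalize(query)
    order = np.argsort(-scores)
    return int(np.where(order == target_idx)[0][0]) + 1


def hits_at_k_per_round(rounds, gallery, target_idx, k=10):
    """For each round's (text_emb, diff_emb) pair, report whether the target
    appears in the top-k results of the fused query."""
    return [rank_of_target(fuse_additive(t, d), gallery, target_idx) <= k
            for t, d in rounds]


gallery = np.eye(4)  # four toy gallery embeddings
rounds = [
    (np.array([0.2, 1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.1, 0.0])),  # round 1: off target
    (np.array([1.0, 0.2, 0.0, 0.0]), np.array([0.9, 0.0, 0.1, 0.0])),  # round 2: refined
]
print(hits_at_k_per_round(rounds, gallery, target_idx=0, k=1))  # [False, True]
```

Additive fusion gives the text and diffusion views equal weight, which is one reason a hallucinated diffusion view can drag the fused query away from the target; a learned or gated fusion is the kind of alternative the authors point to for future work.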

👉 More information

🗞 Eliminating Hallucination in Diffusion-Augmented Interactive Text-to-Image Retrieval

🧠 arXiv: https://arxiv.org/abs/2601.20391