Sparse matrix-dense matrix multiplication (SpMM) is a cornerstone of modern scientific computing and of increasingly important graph learning applications. Kelun Lei, Hailong Yang, and Kaige Zhang, from Beihang University, alongside their colleagues, present a new framework, LOOPS, designed to accelerate these calculations on Arm SME architectures. The team addresses the challenge of efficiently utilising both standard SIMD instructions and the new Scalable Matrix Extension (SME), achieving substantial performance gains for unstructured sparse data. Results show that LOOPS running on Apple’s M4Pro processor surpasses existing CPU methods, with average speedups as high as 14.4x in 64-bit precision, and also outperforms leading GPU implementations while being significantly more energy efficient. This advance offers a high-performance, energy-conscious solution for sparse matrix operations in data-intensive applications.

Sparse Matrix Efficiency for Graph Neural Networks

This collection of research papers focuses on optimizing sparse matrix computations, crucial for applications like graph neural networks (GNNs) and broader machine learning tasks. The core challenge lies in the fact that sparse matrices, containing mostly zero values, are common in these fields, offering potential for efficiency if handled correctly. GNNs, in particular, heavily rely on efficient sparse matrix operations, driving significant research in this area. Researchers are employing several key optimization techniques. Different sparse matrix storage formats, like Compressed Sparse Row/Column (CSR/CSC), balance storage space and access speed.
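To make the CSR format concrete, here is a minimal pure-Python sketch of CSR storage and a row-wise SpMM loop. It is an illustration only, not code from LOOPS or any of the libraries mentioned; the function names `dense_to_csr` and `csr_spmm` are hypothetical.

```python
def dense_to_csr(rows):
    """Convert a dense matrix (list of lists) into the three CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in rows:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)   # nonzero value
                col_idx.append(j)  # its column index
        row_ptr.append(len(values))  # where the next row starts
    return values, col_idx, row_ptr


def csr_spmm(values, col_idx, row_ptr, B):
    """Compute C = A @ B, with A given in CSR form and B dense."""
    n_cols_b = len(B[0])
    C = []
    for i in range(len(row_ptr) - 1):
        out = [0.0] * n_cols_b
        # Only the stored nonzeros of row i are visited.
        for k in range(row_ptr[i], row_ptr[i + 1]):
            a, b_row = values[k], B[col_idx[k]]
            for j in range(n_cols_b):
                out[j] += a * b_row[j]
        C.append(out)
    return C


A = [[1, 0, 0, 2],
     [0, 0, 0, 0],
     [0, 3, 0, 0]]
B = [[1, 2], [3, 4], [5, 6], [7, 8]]

values, col_idx, row_ptr = dense_to_csr(A)
C = csr_spmm(values, col_idx, row_ptr, B)
```

The empty second row of `A` costs nothing beyond one repeated entry in `row_ptr`, which is where the storage and compute savings of CSR come from.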

Algorithms are being tailored for sparse matrices, utilizing techniques like tiling and row/column skipping. Hardware acceleration plays a vital role: GPUs leverage massive parallelism through specialized libraries like cuSPARSE, and Tensor Cores are increasingly used to accelerate sparse operations. Custom accelerators, including FPGAs and ASICs, offer further performance and energy efficiency gains. Optimization also extends to Arm processors, utilizing features like the Scalable Vector Extension (SVE) and runtime code generation.

Several frameworks and libraries are contributing to these advancements. FeatGraph and Deep Graph Library (DGL) are graph processing frameworks designed for efficient GNN training and inference. Intel MKL provides optimized sparse matrix routines, FusedMM fuses the SDDMM and SpMM kernels used in graph learning, and Rosko applies row-skipping outer products to sparse-dense multiplication. TC-GNN bridges sparse GNN computation with dense Tensor Cores on GPUs. JITSpMM generates code at runtime for accelerated sparse matrix-matrix multiplication, and specialized accelerator designs like ExTensor, Sextans, HotTiles, and SPADE are pushing the boundaries of performance. Emerging trends include hybrid approaches, automated optimization tools, and sparse-to-dense conversion. Ultimately, this research aims to overcome the challenges of sparse matrix computations, enabling faster and more scalable machine learning applications, especially in the field of graph neural networks.

Hybrid Sparse Format for Matrix Acceleration

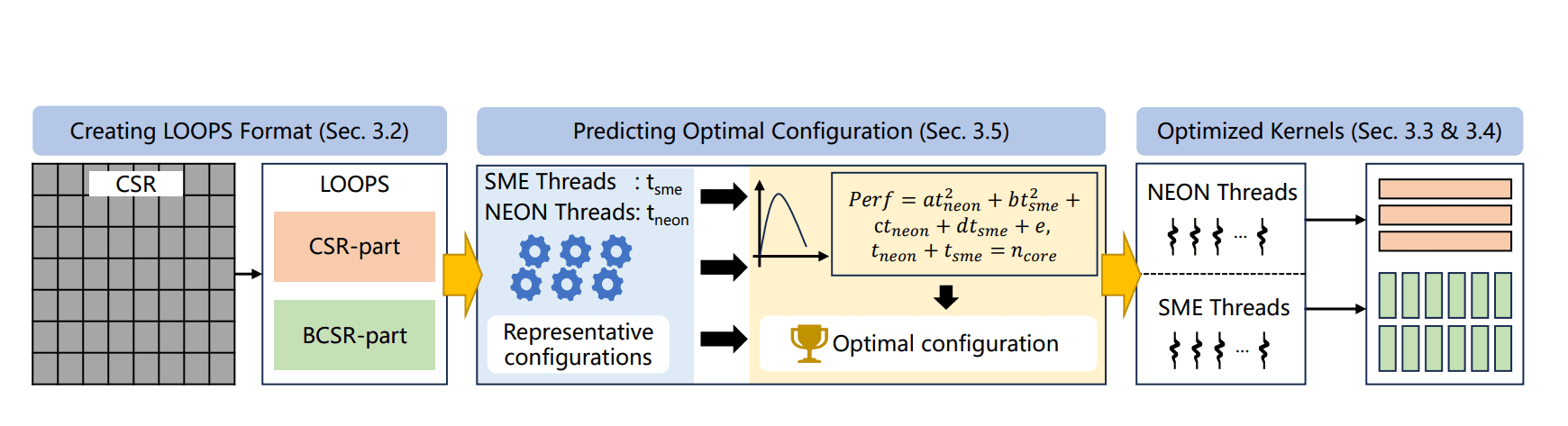

Scientists have developed LOOPS, a novel framework to unlock the potential of modern CPUs for sparse matrix computations. LOOPS stores the sparse matrix in a hybrid layout that combines a row-wise CSR part with a vector-wise BCSR part. Standard vector instructions, such as NEON, process the CSR portion, while the BCSR portion is executed with SME matrix instructions, which reduces computational overhead and keeps both kinds of hardware units busy. LOOPS also employs a two-level parallel execution model to further enhance performance.
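The idea of a hybrid CSR/BCSR layout can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the authors' implementation: it assumes the split is made at a single row boundary (`split_row`), that the BCSR part uses column vectors of height `block_h`, and all function names are hypothetical. On real hardware the CSR loop would map to vector instructions and the per-block update, which is an outer product of a column vector with a row of B, would map to matrix instructions.

```python
def split_hybrid(A, split_row, block_h):
    """Split dense A into a CSR top part and a vector-wise BCSR bottom part."""
    # CSR part: rows [0, split_row)
    values, col_idx, row_ptr = [], [], [0]
    for row in A[:split_row]:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    # BCSR part: panels of block_h rows; within a panel, keep every
    # column that has at least one nonzero as a dense block_h x 1 vector.
    panels = []
    for start in range(split_row, len(A), block_h):
        panel_rows = A[start:start + block_h]
        blocks = []
        for j in range(len(A[0])):
            vec = [r[j] for r in panel_rows]
            if any(v != 0 for v in vec):
                vec += [0] * (block_h - len(vec))  # zero-pad the last panel
                blocks.append((j, vec))
        panels.append((start, blocks))
    return (values, col_idx, row_ptr), panels


def hybrid_spmm(csr_part, panels, n_rows, block_h, B):
    """Compute C = A @ B from the two parts produced by split_hybrid."""
    values, col_idx, row_ptr = csr_part
    n = len(B[0])
    C = [[0.0] * n for _ in range(n_rows)]
    # CSR part: one sparse row at a time.
    for i in range(len(row_ptr) - 1):
        for k in range(row_ptr[i], row_ptr[i + 1]):
            for j in range(n):
                C[i][j] += values[k] * B[col_idx[k]][j]
    # BCSR part: each stored vector contributes an outer product
    # (block_h x 1 column) * (1 x n row of B) to a panel of C.
    for start, blocks in panels:
        height = min(block_h, n_rows - start)
        for col, vec in blocks:
            for r in range(height):
                for j in range(n):
                    C[start + r][j] += vec[r] * B[col][j]
    return C


A = [[1, 0, 0, 2],
     [0, 0, 3, 0],
     [4, 0, 0, 0],
     [0, 0, 5, 6],
     [0, 7, 0, 0]]
B = [[1, 2], [3, 4], [5, 6], [7, 8]]

csr_part, panels = split_hybrid(A, split_row=2, block_h=2)
C = hybrid_spmm(csr_part, panels, len(A), 2, B)
```

The blocked part stores some explicit zeros (for example the padding in the last panel), which is the usual trade-off of block formats: a little wasted work in exchange for regular, dense-looking updates.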

At the coarse level, the CSR and BCSR kernels are assigned to separate thread groups, enabling parallel processing of the different sparse formats. Within each kernel, fine-grained thread parallelism, implemented with a customized approach, accelerates computation even further. To optimize workload distribution and thread allocation, scientists developed a lightweight quadratic performance model, calibrated during initial warm-up runs, which predicts the ideal configuration for a given matrix. Experiments employing the entire SuiteSparse dataset on an Apple M4Pro CPU demonstrate the effectiveness of LOOPS.
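A quadratic performance model of this kind can be illustrated with a small sketch. The article does not say which variable the model uses, so the following assumes, purely for illustration, that runtime is modelled as a quadratic function of the number of threads given to the CSR kernel, with the remaining threads going to the BCSR kernel. The timings below are made-up placeholders, not measurements, and the function names are hypothetical.

```python
def fit_quadratic(samples):
    """Fit t(x) = a*x^2 + b*x + c exactly through three (x, t) samples."""
    (x0, t0), (x1, t1), (x2, t2) = samples
    # Newton divided differences give the coefficients directly.
    d01 = (t1 - t0) / (x1 - x0)
    d12 = (t2 - t1) / (x2 - x1)
    a = (d12 - d01) / (x2 - x0)
    b = d01 - a * (x0 + x1)
    c = t0 - a * x0 * x0 - b * x0
    return a, b, c


def best_thread_split(samples, total_threads):
    """Pick the CSR/BCSR thread split with the lowest predicted runtime."""
    a, b, c = fit_quadratic(samples)
    # Leave at least one thread for each of the two kernels.
    candidates = range(1, total_threads)
    csr_threads = min(candidates, key=lambda x: a * x * x + b * x + c)
    return csr_threads, total_threads - csr_threads


# Hypothetical warm-up timings: (threads given to the CSR kernel, runtime in ms).
warmup = [(2, 9.0), (5, 4.5), (8, 9.0)]
split = best_thread_split(warmup, total_threads=10)
```

Three warm-up runs are enough to pin down a quadratic, which keeps calibration cheap; a production model would likely use more samples and a least-squares fit to smooth out timing noise.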

The results show that LOOPS achieves average speedups of 9.93x for FP32 and 14.4x for FP64 computations compared to the CPU baseline TACO, and even more substantial gains of 71.3x (FP32) and 54.8x (FP64) relative to the Armadillo library.

Notably, LOOPS also outperforms GPU-based methods, delivering average speedups between 19.8x and 33.5x when compared to cuSPARSE and Magicube running on an NVIDIA A100 GPU, while simultaneously exhibiting superior energy efficiency. LOOPS uniquely combines a row-wise Compressed Sparse Row (CSR) format, processed by standard vector instructions, with a vector-wise Block Compressed Sparse Row (BCSR) format, executed using SME, to efficiently utilize both types of processing resources. This hybrid approach minimizes computational overhead and maximizes hardware utilization for sparse workloads. Experiments conducted on the entire SuiteSparse dataset demonstrate substantial performance gains.

On an Apple M4Pro CPU, LOOPS achieves an average speedup of 9.93x over the TACO CPU baseline and 71.3x over Armadillo in FP32 precision. In FP64 precision, it delivers average speedups of 14.44x over TACO and 54.57x over Armadillo. The framework supports multi-precision computation, including FP16, and achieves significant performance improvements in this format as well. LOOPS also outperforms state-of-the-art GPU implementations: compared to cuSPARSE and Magicube running on an NVIDIA A100 GPU, LOOPS on the Apple M4Pro achieves average speedups ranging from 19.79x to 33.49x, depending on the precision. These results indicate that LOOPS not only accelerates SpMM on CPUs but also delivers superior performance and energy efficiency compared to leading GPU-based solutions. The framework employs a lightweight performance model to optimize workload partitioning and thread allocation, further enhancing its efficiency and adaptability.

Hybrid Layouts Boost Matrix Multiplication Performance

LOOPS represents a significant advance in sparse matrix-dense matrix multiplication, a fundamental operation in scientific computing and graph learning. Its hybrid CSR/BCSR layout allows different parts of the calculation to be mapped to the most appropriate hardware: vector instructions for the CSR part and SME matrix instructions for the BCSR part. Experimental results show considerable speedups over existing CPU and GPU methods across FP16, FP32, and FP64 precision, together with better energy efficiency than the GPU implementations it was compared against.

👉 More information

🗞 LOw-cOst yet High-Performant Sparse Matrix-Matrix Multiplication on Arm SME Architectures

🧠 ArXiv: https://arxiv.org/abs/2511.08158