Researchers at UC San Francisco have enabled a paralyzed man to control a robotic arm using a brain-computer interface (BCI) that translates his thoughts into movement. Unlike previous BCIs, which required frequent recalibration, this system functioned effectively for seven months, thanks to an artificial intelligence (AI) model that adapts to shifts in brain activity over time.

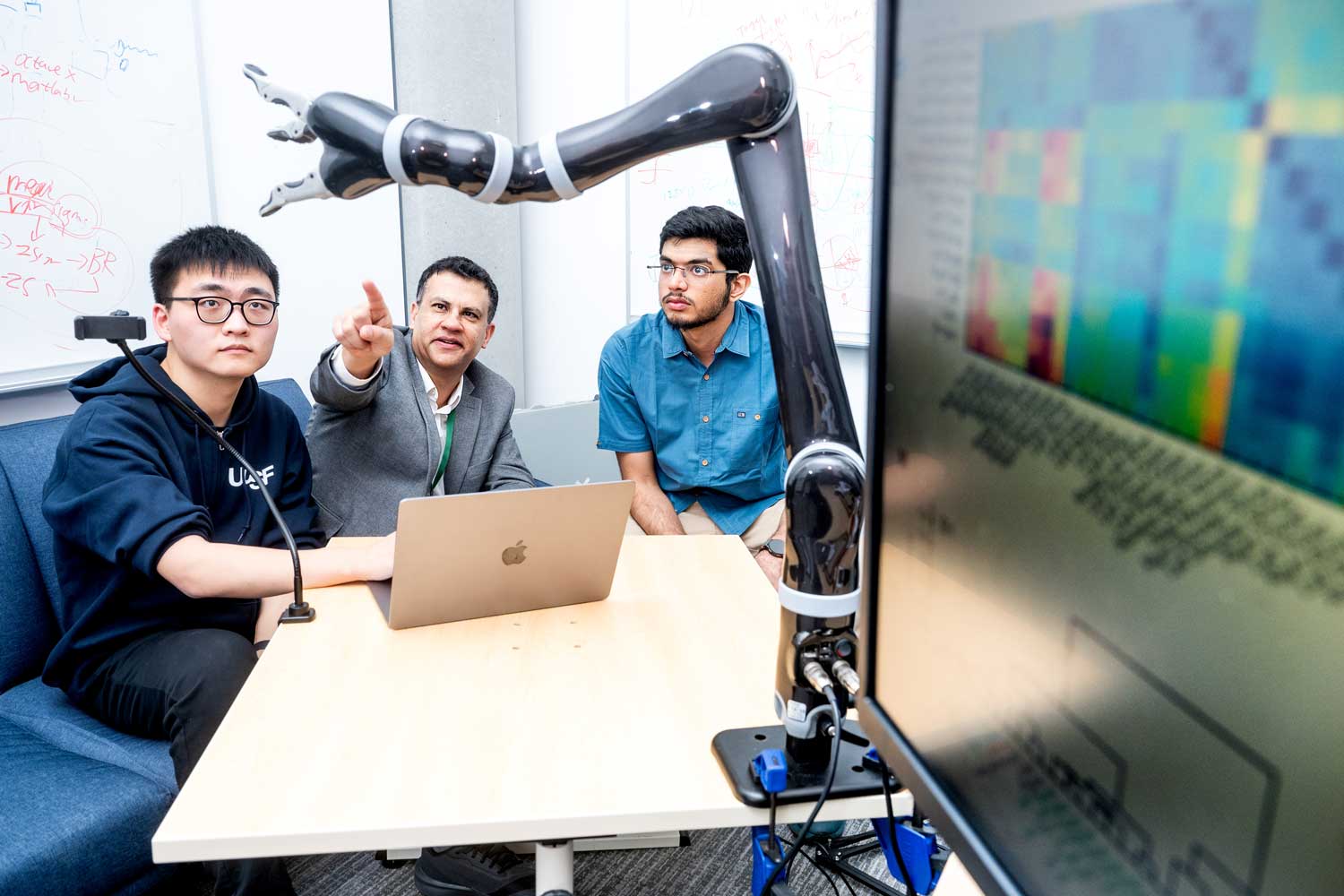

The study, led by neurologist Karunesh Ganguly, PhD, and neurology researcher Nikhilesh Natraj, PhD, explored how brain activity changes as movements, whether real or imagined, are repeated. The participant, who had been paralyzed by a stroke, had tiny sensors implanted in his brain to capture neural signals. In initial training he imagined moving his hands, feet, or head, and the recordings revealed that while brain activity patterns remained consistent in shape, their locations shifted slightly each day. By accounting for this day-to-day variability, the AI model maintained the BCI’s effectiveness over time.

To refine control, the participant first trained with a virtual robotic arm before transitioning to a real one. After practice, he was able to pick up and move objects, open a cabinet, and retrieve a cup. Even months later, he could still use the robotic arm with only a brief recalibration session.

This research represents a significant advancement in assistive technology for people with paralysis. Future work will focus on improving the speed and smoothness of the robotic arm’s movements and testing the system in home environments. The study was published in Cell and funded by the National Institutes of Health and the UCSF Weill Institute for Neurosciences.
