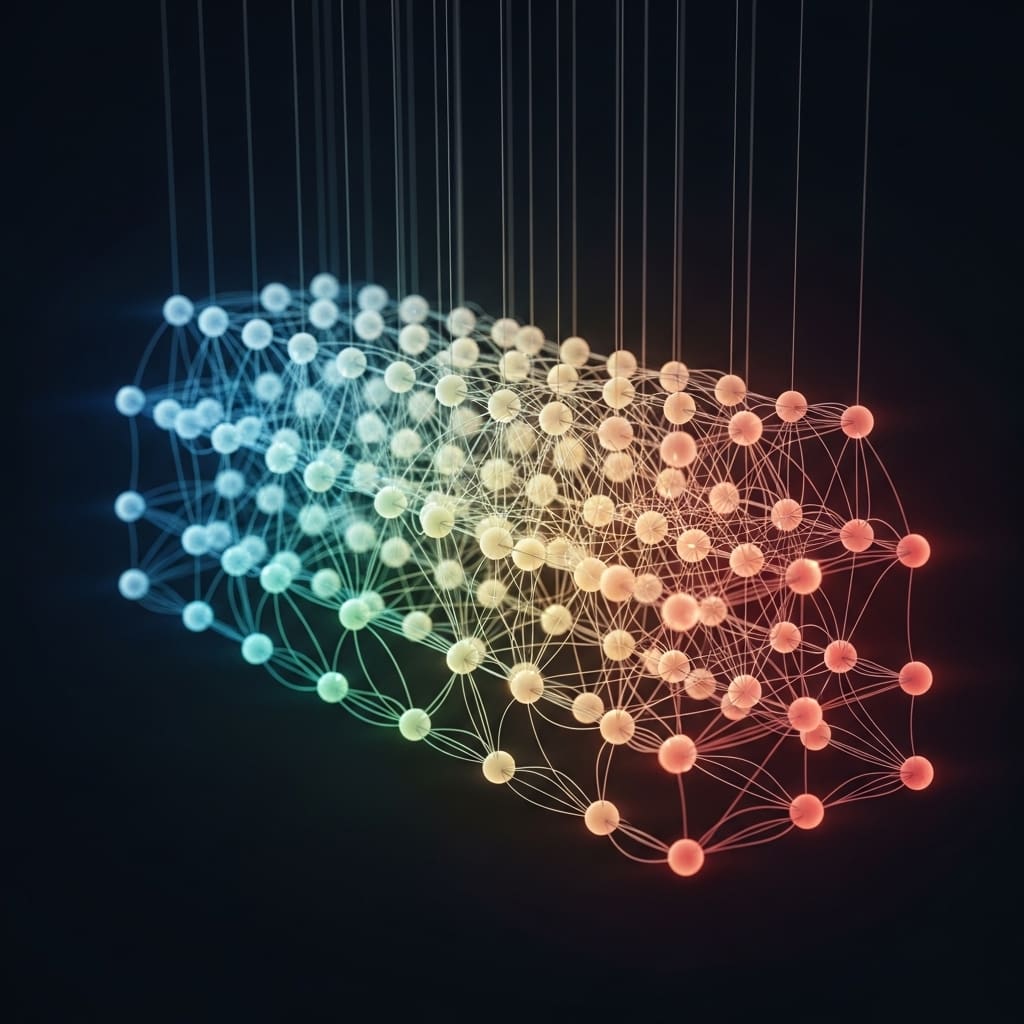

Reservoir Computing’s limitations in handling large datasets and sequential data processing have long hindered its wider application. Now, Matteo Pinna, Giacomo Lagomarsini, Andrea Ceni, and Claudio Gallicchio, all from the University of Pisa, present ParalESN, a novel approach that unlocks parallel information processing within this powerful paradigm. Their research introduces a method for building high-dimensional, efficient reservoirs using diagonal linear recurrence in the complex plane, effectively circumventing the traditional sequential processing bottleneck. This work is significant because it not only preserves the core theoretical properties of Echo State Networks but also delivers substantial computational savings and reduced energy consumption, demonstrated through competitive results on time series benchmarks and pixel-level classification tasks, paving the way for scalable and practical Reservoir Computing implementations.

The method constructs high-dimensional, efficient reservoirs from a diagonal linear recurrence with complex-valued entries, which allows temporal data to be processed in parallel.

The team achieved this by revisiting RC through structured operators and state space modelling, fundamentally rethinking reservoir construction. Because the model's recurrence is linear and diagonal, it can be parallelized via an associative scan, which significantly accelerates processing.
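The idea of parallelizing a diagonal linear recurrence with a scan can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the function names, the zero initial state, and the Hillis-Steele scan variant are assumptions made for the example. Each step of the recurrence is the affine map h → a·h + b, and composing two such maps gives another map of the same form, so all prefixes can be combined in about log2(T) vectorized sweeps.

```python
import numpy as np

def sequential_recurrence(lam, u):
    """Reference loop: h_t = lam * h_{t-1} + u_t, starting from h_0 = 0."""
    h = np.zeros_like(u[0])
    states = []
    for u_t in u:
        h = lam * h + u_t
        states.append(h)
    return np.stack(states)

def scan_recurrence(lam, u):
    """Same recurrence computed with an inclusive scan over pairs (a, b).

    Composing an earlier map (a1, b1) with a later map (a2, b2) gives
    (a2 * a1, a2 * b1 + b2), which is an associative operation.
    """
    T = u.shape[0]
    a = np.broadcast_to(lam, u.shape).astype(complex)  # astype copies, so writable
    b = u.astype(complex)
    shift = 1
    while shift < T:
        # Combine every position t >= shift with position t - shift (old values).
        new_a = a[shift:] * a[:-shift]
        new_b = a[shift:] * b[:-shift] + b[shift:]
        a[shift:], b[shift:] = new_a, new_b
        shift *= 2  # about log2(T) sweeps in total
    return b

rng = np.random.default_rng(0)
T, N = 37, 4
lam = 0.9 * np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, N))  # diagonal entries
u = rng.normal(size=(T, N)) + 1j * rng.normal(size=(T, N))  # driving terms
h_loop = sequential_recurrence(lam, u)
h_scan = scan_recurrence(lam, u)
```

On parallel hardware each sweep is a single elementwise operation over the whole sequence. This simple variant performs more total work than the loop, and libraries such as JAX offer a work-efficient primitive (`jax.lax.associative_scan`) for the same purpose.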

On 1-D pixel-level classification tasks, ParalESN achieves competitive accuracy compared to fully trainable neural networks, but with orders of magnitude reduction in computational costs and energy consumption. This breakthrough establishes a promising, scalable, and principled pathway for integrating RC into the broader deep learning landscape.

The work opens new avenues for real-time temporal data analysis and resource-constrained applications, offering a powerful alternative to traditional recurrent neural network architectures. Researchers demonstrate that by combining the dynamical richness of RC with linear recurrence from Linear Recurrent Units, they bridge a gap between classical dynamical systems-inspired learning and contemporary large-scale modelling.

This innovative approach leverages structured operators to create reservoirs that are both powerful and computationally efficient, paving the way for more sophisticated and sustainable machine learning systems. Experiments employed time series benchmarks to validate ParalESN’s predictive accuracy, achieving performance comparable to traditional RC methods, but with substantial computational savings.

The team rescaled the reservoir to achieve a desired spectral radius, typically constrained to be less than 1, and utilized a linear readout layer defined by $y_t = W_{\text{out}} h_t + b_{\text{out}}$, where $y_t$ represents the network output and $W_{\text{out}}$ and $b_{\text{out}}$ are the readout weight matrix and bias vector, respectively. Readout weights were optimised using ridge regression or least squares methods.
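The two steps described above, rescaling to a target spectral radius and fitting a linear readout by ridge regression, can be sketched in NumPy. This is an illustrative sketch under stated assumptions: the function names, the regularisation value, and the choice to leave the bias unpenalised are not taken from the paper.

```python
import numpy as np

def rescale_spectral_radius(W, rho):
    """Scale W so that its largest eigenvalue modulus equals rho."""
    current = np.max(np.abs(np.linalg.eigvals(W)))
    return W * (rho / current)

def fit_ridge_readout(H, Y, reg=1e-6):
    """Fit y_t = W_out h_t + b_out by ridge regression.

    H: (T, N) reservoir states, Y: (T, D) targets.
    Returns W_out with shape (D, N) and b_out with shape (D,).
    """
    H1 = np.hstack([H, np.ones((H.shape[0], 1))])  # extra column for the bias
    penalty = reg * np.eye(H1.shape[1])
    penalty[-1, -1] = 0.0  # assumption: the bias term is not regularised
    theta = np.linalg.solve(H1.T @ H1 + penalty, H1.T @ Y)
    return theta[:-1].T, theta[-1]

def predict(H, W_out, b_out):
    """Apply the readout to every row of H."""
    return H @ W_out.T + b_out
```

Only the readout is trained; the reservoir weights stay fixed after rescaling, which is what keeps training cheap in Reservoir Computing.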

To ensure well-defined behaviour, the reservoir was initialised to satisfy the Echo State Property, under which states asymptotically depend only on the inputs. The property is assessed by monitoring the convergence of states started from different initial points: the norm $\lVert F(h_{t-1}, x_t) - F(h'_{t-1}, x_t) \rVert_2$ must approach zero as time increases.
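A simple numerical check of this convergence criterion drives two copies of a reservoir from different initial states with the same input and tracks the distance between them. The sketch below is an assumption-laden illustration: `state_gaps` is a hypothetical helper, and the state map `F` is a toy diagonal linear reservoir chosen for the example, not the exact model from the paper.

```python
import numpy as np

def state_gaps(F, h0, h0_alt, inputs):
    """Return ||F(h_{t-1}, x_t) - F(h'_{t-1}, x_t)||_2 for every time step."""
    h, h_alt = h0, h0_alt
    gaps = []
    for x in inputs:
        h, h_alt = F(h, x), F(h_alt, x)  # same input, different histories
        gaps.append(np.linalg.norm(h - h_alt))
    return np.array(gaps)

rng = np.random.default_rng(0)
n = 16
lam = 0.95 * np.exp(1j * rng.uniform(0.0, 2.0 * np.pi, n))  # moduli below 1
w_in = rng.normal(size=n)

def F(h, x):
    """Toy diagonal linear state map (illustrative assumption)."""
    return lam * h + w_in * x

gaps = state_gaps(F, rng.normal(size=n), rng.normal(size=n), rng.normal(size=300))
```

For a diagonal linear map the input terms cancel in the difference, so the gap shrinks by a factor of at most the largest eigenvalue modulus at every step. This is why keeping all diagonal entries strictly inside the unit circle is enough for the states to forget their initial condition.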

On 1-D pixel-level classification tasks, ParalESN attained competitive accuracy with fully trainable neural networks, while reducing computational costs and energy consumption by orders of magnitude. This approach enables a scalable and principled integration of RC into broader learning landscapes, offering a promising pathway for efficient temporal data processing.

Complex diagonal reservoirs enable efficient and scalable temporal data processing for diverse applications

Scientists have developed ParalESN, a novel reservoir computing architecture that addresses limitations in scalability and efficiency inherent in traditional methods. Data shows that ParalESN achieves predictive accuracy comparable to traditional reservoir computing on time series benchmarks, while delivering substantial computational savings.

Specifically, tests on 1-dimensional pixel-level classification tasks demonstrate that ParalESN attains competitive accuracy with fully trainable neural networks. Measurements confirm a reduction in computational costs and energy consumption by orders of magnitude using this new approach. The breakthrough delivers a scalable and principled pathway for integrating reservoir computing into broader learning landscapes.

The team measured the time required to perform recurrence in ParalESN and traditional ESNs for increasing sequence lengths, finding that ParalESN scales logarithmically, while traditional ESNs scale linearly. Results demonstrate that with 128 recurrent neurons and five layers, ParalESN significantly outperforms traditional ESNs in processing longer sequences.

Further tests involved scaling both ParalESN and traditional ESNs to high-dimensional reservoirs on the sMNIST task, revealing that traditional ESNs encountered out-of-memory errors at approximately 100,000 reservoir neurons. Measurements confirm that ParalESN, due to its reduced memory footprint from diagonal transition matrices, successfully fit into memory even with larger reservoir sizes.
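A back-of-the-envelope calculation shows why a dense recurrent matrix becomes a memory problem at this scale while a diagonal one does not. The numeric types used here (32-bit floats for the dense matrix, 64-bit complex numbers for the diagonal) and the helper names are assumptions for illustration; the article does not state the precision or hardware used.

```python
def dense_bytes(n, bytes_per_entry=4):
    """Memory for an n x n dense transition matrix (assumed float32)."""
    return n * n * bytes_per_entry

def diagonal_bytes(n, bytes_per_entry=8):
    """Memory for n diagonal entries (assumed complex64)."""
    return n * bytes_per_entry

n = 100_000
dense = dense_bytes(n)        # 40_000_000_000 bytes, i.e. 40 GB
diagonal = diagonal_bytes(n)  # 800_000 bytes, i.e. 0.8 MB
ratio = dense // diagonal     # the dense matrix is 50_000 times larger
```

The dense cost grows quadratically with the number of neurons and the diagonal cost only linearly, so the gap widens as the reservoir is scaled further.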

The architecture employs a linear diagonal recurrence, similar to that of Linear Recurrent Units, together with a mixing function that combines reservoir states. The reservoir dynamics are described by an equation incorporating a leak rate $\tau$, a diagonal transition matrix $\Lambda_h$, a bias vector, and an input weight matrix, with the effective transition matrix given by $(1 - \tau) I + \tau \Lambda_h$, where $I$ is the identity matrix. Empirical evaluations demonstrate that ParalESN achieves predictive accuracy comparable to traditional RC on time series benchmarks, alongside significant computational savings.
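One way to read the effective transition matrix is as the result of a leaky update applied to a diagonal recurrence. The sketch below assumes the update has the form h_t = (1 − τ) h_{t−1} + τ (Λ_h h_{t−1} + W_in x_t + b); this exact form, the sampling scheme for the diagonal entries, and all function names are assumptions for illustration, since the article only names the ingredients of the equation.

```python
import numpy as np

def sample_diagonal(n, rho, rng):
    """Sample n complex diagonal entries uniformly over the disk of radius rho."""
    modulus = rho * np.sqrt(rng.uniform(0.0, 1.0, n))
    phase = rng.uniform(0.0, 2.0 * np.pi, n)
    return modulus * np.exp(1j * phase)

def leaky_step(h, x, lam, W_in, b, tau):
    """Assumed leaky update with a diagonal transition stored as the vector lam."""
    return (1.0 - tau) * h + tau * (lam * h + W_in @ x + b)

def effective_step(h, x, lam, W_in, b, tau):
    """Same update written with the effective diagonal (1 - tau) + tau * lam."""
    lam_eff = (1.0 - tau) + tau * lam
    return lam_eff * h + tau * (W_in @ x + b)

rng = np.random.default_rng(0)
n, d, tau = 8, 3, 0.3
lam = sample_diagonal(n, 0.9, rng)
W_in = rng.normal(size=(n, d))
b = rng.normal(size=n)
h = rng.normal(size=n) + 1j * rng.normal(size=n)
x = rng.normal(size=d)
lam_eff = (1.0 - tau) + tau * lam
```

Because the transition is diagonal, the matrix product reduces to an elementwise multiplication, and for a leak rate between 0 and 1 the effective entries stay inside the unit circle whenever the original entries do.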

On 1-D pixel-level classification tasks, the model attains competitive accuracy with fully trainable neural networks, while substantially reducing computational costs and energy consumption. Future research directions include exploring the application of ParalESN to more complex datasets and investigating its potential for integration with other machine learning paradigms. These findings suggest ParalESN offers a promising, scalable, and principled approach to integrating RC into broader learning landscapes, potentially enabling efficient temporal data processing in resource-constrained environments.

👉 More information

🗞 ParalESN: Enabling parallel information processing in Reservoir Computing

🧠 ArXiv: https://arxiv.org/abs/2601.22296