Calculating the weight enumerator polynomial is essential for characterizing quantum error correction codes, but it becomes increasingly difficult as codes grow more complex. Balint Pato, June Vanlerberghe, and Kenneth R. Brown, all from Duke University, investigate how to overcome this computational challenge by optimizing contraction schedules in the Quantum LEGO framework, a tensor network approach to this calculation. Their work reveals that intermediate calculations within these networks are often far less demanding than previously assumed, allowing for significant speed increases. The team developed a new, highly accurate method for predicting computational cost based on the underlying structure of the code and demonstrated improvements of up to several orders of magnitude in calculation speed. This achievement, facilitated by a new open-source implementation called PlanqTN, validates hyper-optimized contraction as a crucial technique for designing better quantum error correction codes and exploring the possibilities within this vital field.

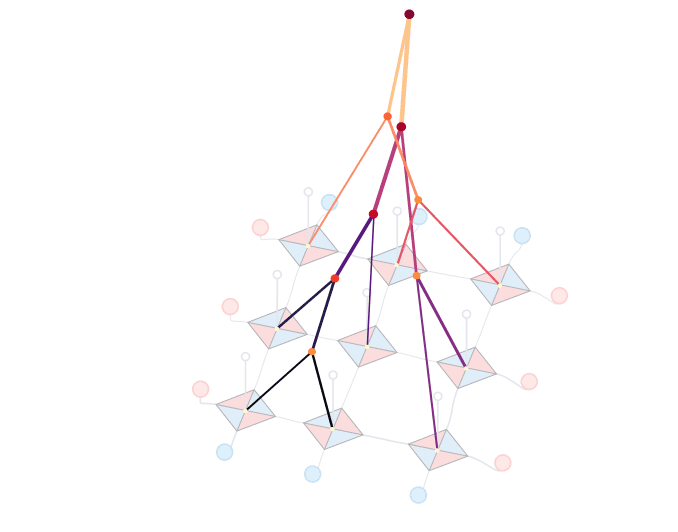

Tensor Network Contraction Cost Function Comparison

Scientists have refined methods for contracting tensor networks, a crucial process in fields like quantum physics, by comparing different approaches to estimating computational cost. The research focuses on minimizing the number of operations required to contract these networks and compares two cost functions: a standard function that assumes dense tensors, and a new Sparse Stabilizer Tensor (SST) cost function designed for the specific structure of networks used in quantum error correction. Two optimization algorithms were also compared: a fast one, and a more sophisticated one that searches for better contraction schedules. The results show that schedules optimized with the SST cost function consistently reach significantly lower contraction costs than those optimized with the standard function, particularly for stabilizer tensor networks.
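To make the notion of contraction cost concrete, the sketch below counts operations under a dense cost model for two different contraction orders of the same small network. The tensor shapes, index names, and the `schedule_cost` helper are illustrative assumptions, not part of PlanqTN or the paper; each pairwise step is simply charged the product of the dimensions of every index involved.

```python
from math import prod

def schedule_cost(tensors, schedule, dims):
    """Total dense cost of a pairwise contraction schedule.

    tensors  : list of index tuples, one per tensor
    schedule : list of (i, j) positions; each result is appended to the pool
    dims     : dict mapping index name -> dimension
    Assumes every index appears on at most two tensors (no hyperedges).
    """
    pool = [frozenset(t) for t in tensors]
    total = 0
    for i, j in schedule:
        a, b = pool[i], pool[j]
        # Dense model: one multiply-add per combination of all involved indices.
        total += prod(dims[idx] for idx in a | b)
        # Shared indices are summed over; the remaining ones stay open.
        pool.append(a ^ b)
    return total

# Hypothetical chain of three tensors: A(i,j) - B(j,k) - C(k,l)
dims = {"i": 10, "j": 100, "k": 5, "l": 50}
tensors = [("i", "j"), ("j", "k"), ("k", "l")]

cost_ab_first = schedule_cost(tensors, [(0, 1), (3, 2)], dims)  # (A*B)*C
cost_bc_first = schedule_cost(tensors, [(1, 2), (0, 3)], dims)  # A*(B*C)
print(cost_ab_first, cost_bc_first)
```

In this toy case the first order costs 7,500 operations and the second 75,000, a tenfold gap from ordering alone. A schedule optimizer searches over such orders, so the quality of its result depends on how well the cost function reflects the real work.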

The more sophisticated algorithm generally finds lower-cost solutions, although it requires more computational time. For smaller examples, both algorithms achieve results very close to the theoretically optimal solution. Analysis of the networks reveals that many intermediate tensors are sparse, containing a large proportion of zero elements, which invalidates the assumptions of standard cost functions designed for dense tensors. The SST cost function addresses this by using the rank of the parity check matrices of these intermediate tensors, which gives a more accurate estimate of computational cost and yields substantial speedups, often orders of magnitude, especially for larger networks.
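The gap between a dense size estimate and a rank-based one can be illustrated with a small sketch. It assumes a tensor with one four-valued Pauli index per leg whose nonzero entries are labelled by stabilizer group elements, so that a parity check matrix of rank r over GF(2) gives 2^r nonzero entries out of 4^n. The `gf2_rank` helper and the choice of the five-qubit code are illustrative assumptions, and this is not the SST cost function itself, only the counting idea behind it.

```python
def gf2_rank(rows):
    """Rank over GF(2) of a binary matrix given as a list of 0/1 lists."""
    rows = [list(r) for r in rows]
    ncols = len(rows[0]) if rows else 0
    rank = 0
    for col in range(ncols):
        # Find a row at or below the current rank with a 1 in this column.
        pivot = next((r for r in range(rank, len(rows)) if rows[r][col]), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        # Clear this column in every other row (addition mod 2 is XOR).
        for r in range(len(rows)):
            if r != rank and rows[r][col]:
                rows[r] = [a ^ b for a, b in zip(rows[r], rows[rank])]
        rank += 1
    return rank

# Five-qubit code generators in symplectic (X | Z) form:
# XZZXI, IXZZX, XIXZZ, ZXIXZ
H = [
    [1, 0, 0, 1, 0,  0, 1, 1, 0, 0],
    [0, 1, 0, 0, 1,  0, 0, 1, 1, 0],
    [1, 0, 1, 0, 0,  0, 0, 0, 1, 1],
    [0, 1, 0, 1, 0,  1, 0, 0, 0, 1],
]
# A redundant row (sum of the first two) must not change the rank.
redundant = [a ^ b for a, b in zip(H[0], H[1])]

n_legs = len(H[0]) // 2
dense_entries = 4 ** n_legs            # what a dense cost model assumes
nonzero_entries = 2 ** gf2_rank(H)     # stabilizer group elements
print(dense_entries, nonzero_entries)
```

For this example the dense model assumes 1,024 entries while only 16 are nonzero, and the rank is found by Gaussian elimination in polynomial time without building the tensor.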

Quantum Weight Enumerator via Tensor Networks

Researchers have developed a new methodology for calculating the quantum weight enumerator polynomial, a vital tool for characterizing quantum error-correcting codes. This work addresses computational challenges associated with complex codes by harnessing the Quantum LEGO framework, a tensor network approach, with the potential for substantial speedups over traditional methods. The team analyzed various stabilizer code families to determine optimal computational strategies, revealing that intermediate tensors within these networks are often highly sparse. This sparsity invalidates the assumptions of standard cost functions designed for dense tensors, prompting the scientists to introduce the Sparse Stabilizer Tensor (SST) cost function.

This function, based on the rank of parity check matrices, can be computed in polynomial time and correlates perfectly with the actual contraction cost, offering a significant improvement over existing methods. Optimizing contraction schedules with the SST cost function reduced contraction cost by up to several orders of magnitude compared to using a dense tensor cost function. The precise cost estimate provided by the SST function also serves as an efficient metric for deciding whether the tensor network approach is computationally superior to brute force for a given code layout. This work was enabled by PlanqTN, a new open-source implementation, and validates hyper-optimized contraction as a crucial technique for exploring the design space of quantum error-correcting codes.
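For reference, the brute-force baseline can be sketched in a few lines: enumerate every product of the stabilizer generators and tally the Pauli weights. The sketch below computes the unnormalized stabilizer weight enumerator under the assumption that the generators are independent; the helper names are hypothetical, and this is the brute-force approach, not the tensor network method.

```python
from itertools import product

def to_bits(pauli):
    """Split a Pauli string such as 'XZZXI' into X and Z bit lists."""
    x = [1 if p in "XY" else 0 for p in pauli]
    z = [1 if p in "ZY" else 0 for p in pauli]
    return x, z

def stabilizer_weight_counts(generators):
    """Return counts[w] = number of stabilizer elements of Pauli weight w.

    Assumes the generators are independent; phases are ignored because
    they do not affect the weight.
    """
    n = len(generators[0])
    gens = [to_bits(g) for g in generators]
    counts = [0] * (n + 1)
    # Loop over all 2^k subsets of the k generators: exponential in k.
    for picks in product((0, 1), repeat=len(gens)):
        x, z = [0] * n, [0] * n
        for use, (gx, gz) in zip(picks, gens):
            if use:
                x = [a ^ b for a, b in zip(x, gx)]
                z = [a ^ b for a, b in zip(z, gz)]
        # A qubit contributes to the weight if it carries X, Y, or Z.
        weight = sum(1 for a, b in zip(x, z) if a or b)
        counts[weight] += 1
    return counts

five_qubit = ["XZZXI", "IXZZX", "XIXZZ", "ZXIXZ"]
counts = stabilizer_weight_counts(five_qubit)
print(counts)  # coefficients of z^0 .. z^5
```

For the five-qubit code this gives the enumerator 1 + 15z^4. The loop visits 2^k group elements for k generators, which is the exponential growth that makes a reliable cost estimate useful when choosing between brute force and a tensor network contraction.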

Sparse Stabilizer Rank Dramatically Speeds Calculations

Scientists have achieved significant advancements in calculating weight enumerator polynomials for quantum error correction codes, a crucial step in designing more reliable quantum computers. The research team developed a hyper-optimized contraction schedule framework that dramatically improves the efficiency of these calculations using the Quantum LEGO framework. Experiments revealed that standard cost functions often overestimate the computational effort due to the sparse nature of the tensors involved in stabilizer codes. To address this, the team introduced a new, exact cost function, the Sparse Stabilizer Tensor (SST) cost function, based on the rank of the parity check matrices for intermediate tensors.

Measurements confirm that the SST cost function correlates perfectly with the actual contraction cost, providing a substantial advantage over the default cost function. Optimizing contraction schedules with the SST cost function reduced contraction cost by up to several orders of magnitude compared to using a dense tensor cost function. Furthermore, the precise cost estimation provided by the SST function offers a reliable metric for determining whether the tensor network approach is computationally superior to brute-force methods for a given code layout. The team’s work, enabled by the new open-source implementation, PlanqTN, validates hyper-optimized contraction as a critical technique for exploring the design space of quantum error-correcting codes.

Sparse Stabilizer Tensor Predicts Contraction Cost

This work presents a significant advancement in the efficient calculation of weight enumerator polynomials, a crucial step in characterizing quantum error-correcting codes. Researchers developed a framework leveraging Quantum LEGO and tensor networks to address the computational challenges associated with complex codes, often achieving substantial speedups compared to traditional methods. A key achievement is the introduction of a new cost function, the Sparse Stabilizer Tensor (SST) cost function, which accurately predicts the computational cost of tensor network contraction by accounting for the sparsity of intermediate tensors. The team demonstrated that this cost function correlates perfectly with actual contraction costs, offering a marked improvement over existing methods.

Through extensive testing across various code families, they found that hyper-optimized contraction schedules guided by the new cost function consistently outperform schedules optimized with a dense tensor cost function, and in many cases surpass the efficiency of brute-force calculations. Notably, networks employing a Message Passing Sparse layout often outperformed Tanner networks, even with a greater number of tensors. While the results indicate a substantial improvement in computational efficiency, the authors acknowledge that increasing the sample size within the optimization process may further reduce computational costs. The developed tools and findings, implemented within the open-source PlanqTN framework, validate hyper-optimized contraction as a vital technique for exploring the design space of quantum error-correcting codes and pave the way for the development of more effective codes in the future.

👉 More information

🗞 Hyper-optimized Quantum Lego Contraction Schedules

🧠 ArXiv: https://arxiv.org/abs/2510.08210