Integrating artificial intelligence applications with enterprise data is increasingly important for businesses that want to retrieve information at scale. Retrieval-augmented generation (RAG) addresses this need by using embedding and reranking models to connect large language models (LLMs) to large corpora of enterprise data. With RAG pipelines, developers can build applications that deliver context-aware responses and actionable insights grounded in relevant data.

A recently introduced AI blueprint provides a foundational starting point for building scalable, customizable retrieval pipelines. It offers OpenAI-compatible APIs, multi-turn conversations, multilingual support, configurable NIM selection and endpoints, and optimized data storage. The architecture can help improve decision-making and productivity, and it can be deployed with Docker or Kubernetes, making it an attractive option for enterprises that want to get more value from their data.

Introduction to Retrieval-Augmented Generation Pipelines

Retrieval-augmented generation (RAG) pipelines have gained significant attention in recent years, particularly for applying large language models (LLMs) in enterprise settings. A RAG pipeline connects AI applications to large amounts of enterprise data: it retrieves information relevant to a query and uses that information to generate context-aware responses. Because it relies on embedding and reranking models to retrieve information at scale, RAG has become a common building block of modern AI systems.

The NVIDIA AI Blueprint for RAG provides a foundational framework for building scalable and customizable retrieval pipelines. It is designed for high accuracy and throughput, letting developers build RAG applications that connect LLMs to large corpora of enterprise data. Grounding responses in relevant data yields actionable insights that can improve decision-making and productivity. The blueprint can be used on its own or combined with other NVIDIA Blueprints for more advanced use cases, such as digital humans and AI assistants for customer service.

Key features of the NVIDIA AI Blueprint for RAG include OpenAI-compatible APIs, multi-turn conversations, and support for multiple collections and sessions. It also offers multilingual and cross-lingual retrieval, optimized data storage, and configurable NIM selection and NIM endpoints. In addition, it supports reranking, which makes results more accurate and relevant. Together, these features make the blueprint a robust foundation for enterprise-grade RAG pipelines.
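To illustrate what OpenAI compatibility and multi-turn conversations mean in practice, the sketch below builds a request body in the OpenAI chat-completions format, where the conversation so far travels with each request as a list of role-tagged messages. It only builds the payload; no request is sent. The function name `build_chat_request` is hypothetical, the model ID is an assumption (the style of ID used in NVIDIA's API catalog), and the endpoint you would POST this to depends on your deployment.

```python
import json
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def build_chat_request(
    history: List[Message],
    user_turn: str,
    model: str = "meta/llama-3.1-70b-instruct",  # assumed model ID
) -> str:
    """Return a JSON body in the OpenAI chat-completions format.

    Prior turns are sent with every request; that is how a follow-up such
    as "How many of them?" can be interpreted in context.
    """
    # Copy the history so the caller's list is not mutated.
    messages = list(history) + [{"role": "user", "content": user_turn}]
    body = {"model": model, "messages": messages, "stream": False}
    return json.dumps(body)


history = [
    {"role": "user", "content": "Which GPUs does the blueprint recommend?"},
    {"role": "assistant", "content": "H100 or A100 GPUs."},
]
payload = build_chat_request(history, "How many of them?")
print(json.loads(payload)["messages"][-1]["content"])  # How many of them?
```

Because the format is the standard chat-completions shape, existing OpenAI-style clients and tooling can, in principle, produce or consume it without custom glue code.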

Architecture and Components of RAG Pipelines

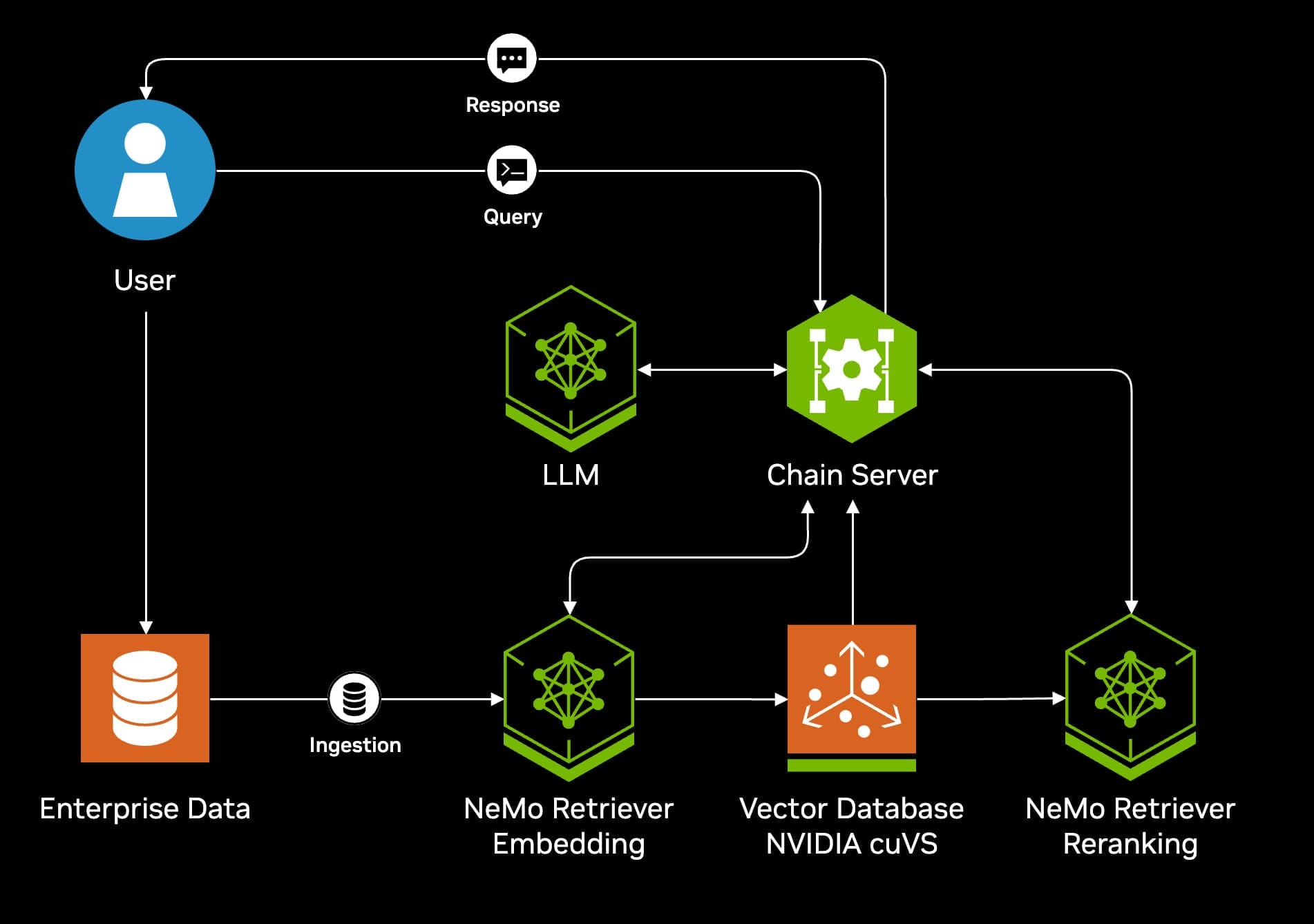

A RAG pipeline typically has three main components: a retriever, a ranker, and a generator. The retriever fetches candidate documents from a large corpus, the ranker reorders those candidates by relevance to the input query, and the generator (an LLM) uses the top-ranked documents as context to produce a response. In the NVIDIA AI Blueprint for RAG, these roles are filled by NIM microservices: the NeMo Retriever Llama 3.2 Embedding NIM for retrieval, the NeMo Retriever Llama 3.2 Reranking NIM for reranking, and an LLM NIM (Llama 3.1 70B in the recommended configuration) for generation.
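The three roles can be sketched as a small pipeline of pluggable functions. This is a minimal illustration, not the blueprint's code: `RagPipeline`, `fetch_k`, `keep_k`, and the `toy_*` functions are hypothetical, and the toy generator returns the grounded prompt an LLM would receive instead of calling a model.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Set

# Each stage is a plain callable. In a real deployment these would wrap the
# embedding, reranking, and LLM services; here they are toys.
Retriever = Callable[[str, int], List[str]]     # (query, k) -> candidates
Ranker = Callable[[str, List[str]], List[str]]  # (query, docs) -> reordered docs
Generator = Callable[[str, List[str]], str]     # (query, context) -> response


@dataclass
class RagPipeline:
    retrieve: Retriever
    rerank: Ranker
    generate: Generator
    fetch_k: int = 20  # candidates requested from the retriever
    keep_k: int = 4    # reranked passages handed to the generator

    def answer(self, query: str) -> str:
        candidates = self.retrieve(query, self.fetch_k)
        ranked = self.rerank(query, candidates)[: self.keep_k]
        return self.generate(query, ranked)


def tokens(text: str) -> Set[str]:
    # Lowercase alphanumeric words, punctuation dropped.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


DOCS = [
    "Milvus stores document embeddings.",
    "The reranker reorders candidate passages.",
    "Docker and Kubernetes are supported deployment options.",
]


def toy_retrieve(query: str, k: int) -> List[str]:
    # Placeholder retriever: ignores the query and returns the first k docs.
    return DOCS[:k]


def toy_rerank(query: str, docs: List[str]) -> List[str]:
    # Placeholder ranker: more words shared with the query ranks higher.
    q = tokens(query)
    return sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)


def toy_generate(query: str, context: List[str]) -> str:
    # Placeholder generator: builds the grounded prompt instead of calling an LLM.
    bullets = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{bullets}\n\nQuestion: {query}"


pipeline = RagPipeline(toy_retrieve, toy_rerank, toy_generate, fetch_k=3, keep_k=1)
print(pipeline.answer("Which deployment options are supported?"))
```

Keeping each stage behind a plain callable means a toy stage can be swapped for a client of a real service without changing the orchestration in `answer`.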

NeMo Retriever is central to the NVIDIA AI Blueprint for RAG because it provides efficient, scalable retrieval over large datasets. The embedding model maps documents and queries into a shared high-dimensional vector space, so relevant documents can be found quickly with vector similarity search. The reranking NIM then uses a separate model to rescore and reorder the retrieved candidates by their relevance to the query. This two-stage design balances speed and accuracy: fast vector search narrows a large corpus to a small candidate set, and the more precise but more expensive reranking model is applied only to that set.
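The two-stage idea can be shown with toy numbers. In this sketch (all names hypothetical), stage 1 ranks a corpus by cosine similarity between hand-made 2-D vectors standing in for embeddings, and stage 2 applies made-up reranker scores to only the top `k` candidates. A real reranking model would score the query and passage text together rather than look up a fixed number.

```python
import math
from typing import Callable, Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def two_stage_search(
    query_vec: Sequence[float],
    corpus: Dict[str, List[float]],
    rerank_score: Callable[[str], float],
    k: int,
    top_n: int,
) -> List[str]:
    # Stage 1: cheap vector similarity over every document in the corpus.
    candidates = sorted(corpus, key=lambda d: cosine(query_vec, corpus[d]), reverse=True)[:k]
    # Stage 2: the costlier reranker scores only the k surviving candidates.
    return sorted(candidates, key=rerank_score, reverse=True)[:top_n]


# Toy 2-D "embeddings" and made-up reranker scores, for illustration only.
corpus = {
    "doc_a": [1.0, 0.0],
    "doc_b": [0.9, 0.1],
    "doc_c": [0.0, 1.0],
    "doc_d": [0.7, 0.7],
}
RERANK_SCORES = {"doc_a": 0.2, "doc_b": 0.9, "doc_c": 0.99, "doc_d": 0.5}
reranker_calls: List[str] = []


def fake_reranker(doc_id: str) -> float:
    # Stand-in for a reranking model; the query is implicit here, and each
    # call is recorded so we can see how much work stage 2 did.
    reranker_calls.append(doc_id)
    return RERANK_SCORES[doc_id]


result = two_stage_search([1.0, 0.0], corpus, fake_reranker, k=3, top_n=2)
print(result)          # ['doc_b', 'doc_d']
print(reranker_calls)  # the reranker ran on 3 of the 4 documents
```

Note that `doc_c`, which the fake reranker would score highest, never reaches stage 2 because stage 1 filtered it out. The first stage's recall bounds what the reranker can recover, which is one reason to fetch more candidates than you finally keep.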

The NVIDIA AI Blueprint for RAG also builds on software components such as LangChain and the Milvus vector database accelerated with NVIDIA cuVS. LangChain is a framework for building LLM-powered applications, including conversational ones, while Milvus stores document embeddings and performs similarity search over them, with cuVS providing GPU-accelerated vector search.
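To make the vector database's role concrete, here is a toy in-memory store that keeps separate named collections (echoing the blueprint's multi-collection support) and returns the records most similar to a query vector. It is a hypothetical stand-in, not the Milvus API: `ToyVectorStore` and `Record` are invented for this sketch, and it uses a brute-force cosine scan where Milvus with cuVS would use GPU-accelerated index types such as CAGRA.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Record:
    id: int
    vector: List[float]
    text: str


def _cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database; NOT the Milvus API.

    Keeps named collections of (id, vector, text) records and answers
    similarity queries with a brute-force scan.
    """

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.collections: Dict[str, List[Record]] = defaultdict(list)

    def insert(self, collection: str, record: Record) -> None:
        # Reject vectors whose dimension doesn't match the store's.
        if len(record.vector) != self.dim:
            raise ValueError(f"expected dimension {self.dim}, got {len(record.vector)}")
        self.collections[collection].append(record)

    def search(self, collection: str, query: List[float], limit: int) -> List[Tuple[int, str, float]]:
        # .get() avoids silently creating an empty collection on lookup.
        records = self.collections.get(collection, [])
        scored = [(r.id, r.text, _cosine(query, r.vector)) for r in records]
        scored.sort(key=lambda hit: hit[2], reverse=True)
        return scored[:limit]


store = ToyVectorStore(dim=2)
store.insert("product_docs", Record(1, [1.0, 0.0], "Installation guide"))
store.insert("product_docs", Record(2, [0.0, 1.0], "Release notes"))
store.insert("hr_policies", Record(3, [1.0, 0.0], "Leave policy"))
print(store.search("product_docs", [0.9, 0.1], limit=1))
```

Searching `product_docs` never returns the HR record, even though its vector matches the query just as well; keeping corpora in separate collections scopes retrieval to the data a given application should see.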

Deployment and System Requirements

A RAG pipeline can be deployed in several ways, including with Docker and Kubernetes. The NVIDIA AI Blueprint for RAG supports both, which simplifies deploying and managing pipelines across environments. For self-hosted deployments, the blueprint recommends five H100 or A100 GPUs to run the Llama 3.1 70B NIM, the NeMo Retriever embedding and reranking NIMs, and the Milvus database accelerated with NVIDIA cuVS.

When the pipeline is self-hosted, GPUs supply the compute needed to serve these models and search large datasets. The recommended operating system is Ubuntu 22.04. By default, however, the blueprint calls hosted API endpoints, so you can try it without any local GPUs. As development progresses, you will likely want to self-host the NIM microservices, which requires the GPU resources described above.
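The move from hosted endpoints to self-hosted NIM microservices often comes down to configuration. The sketch below picks the LLM base URL from an environment mapping, falling back to a hosted API unless a self-hosted URL is set. Assumptions: `HOSTED_BASE_URL` is the OpenAI-compatible base URL of NVIDIA's API catalog, `http://localhost:8000/v1` is a typical address for a locally running NIM, and the variable name `LLM_SELF_HOSTED_URL` is hypothetical rather than one of the blueprint's settings.

```python
import os
from typing import Mapping

# Assumed hosted base URL (NVIDIA API catalog, OpenAI-compatible).
HOSTED_BASE_URL = "https://integrate.api.nvidia.com/v1"

# Hypothetical variable name for this sketch; not a blueprint setting.
SELF_HOSTED_ENV_VAR = "LLM_SELF_HOSTED_URL"


def resolve_llm_base_url(env: Mapping[str, str]) -> str:
    """Use a self-hosted NIM when configured, else fall back to the hosted API."""
    local = env.get(SELF_HOSTED_ENV_VAR, "").strip()
    # Drop a trailing slash so paths can be appended consistently.
    return local.rstrip("/") if local else HOSTED_BASE_URL


# Early development: nothing configured, so no local GPUs are needed.
print(resolve_llm_base_url({}))
# Later: point the pipeline at a self-hosted NIM (assumed local address).
print(resolve_llm_base_url({SELF_HOSTED_ENV_VAR: "http://localhost:8000/v1/"}))
# In a real process, read the actual environment.
current = resolve_llm_base_url(os.environ)
```

Routing the choice through one function keeps the rest of the pipeline unchanged when you switch from hosted endpoints to self-hosted microservices.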

Applications and Use Cases of RAG Pipelines

RAG pipelines have a wide range of applications, particularly in enterprise settings. A primary use case is customer service, where RAG pipelines power AI assistants that give context-aware answers to customer inquiries. These assistants draw on large corpora such as knowledge bases and FAQs to provide customers with accurate, relevant information.

Another use case is digital humans, where RAG pipelines enable more realistic and engaging interactions between people and machines. Grounding responses in large amounts of data lets digital humans answer more accurately and in context. RAG pipelines also support other applications, such as language translation, text summarization, and question answering.

RAG pipelines also enable more advanced analytics and insights. Because the LLM draws on retrieved enterprise data rather than only on what it learned during training, its results tend to be more accurate and relevant. RAG can also make AI systems more transparent and explainable, since each response can be traced back to the retrieved passages it was based on.
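One concrete way RAG supports transparency is to number the retrieved passages in the prompt, ask the model to cite them, and map the citation markers in its answer back to their sources. The sketch below shows that bookkeeping under stated assumptions: the function names, the `[n]` citation convention, and the sample passages and reply are all hypothetical. It demonstrates traceability only; a model can still cite the wrong passage, so this does not guarantee faithfulness.

```python
import re
from typing import List, Tuple

Passage = Tuple[str, str]  # (source identifier, passage text)


def build_cited_prompt(question: str, passages: List[Passage]) -> str:
    """Number each retrieved passage so the model can cite it as [n]."""
    numbered = [f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(passages, start=1)]
    return (
        "Answer using only the sources below and cite them as [n].\n\n"
        + "\n".join(numbered)
        + f"\n\nQuestion: {question}"
    )


def cited_sources(answer: str, passages: List[Passage]) -> List[str]:
    """Map [n] markers in a model's answer back to source identifiers."""
    numbers = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    # Ignore markers that don't correspond to any retrieved passage.
    return [passages[n - 1][0] for n in numbers if 1 <= n <= len(passages)]


passages = [
    ("faq.md", "Refunds are issued within 14 days."),
    ("policy.pdf", "Gift cards are non-refundable."),
]
prompt = build_cited_prompt("Can I get a refund on a gift card?", passages)
# A made-up model reply, used only to exercise the parser.
reply = "No. Gift cards are non-refundable [2]; other refunds take up to 14 days [1]."
print(cited_sources(reply, passages))  # ['faq.md', 'policy.pdf']
```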
