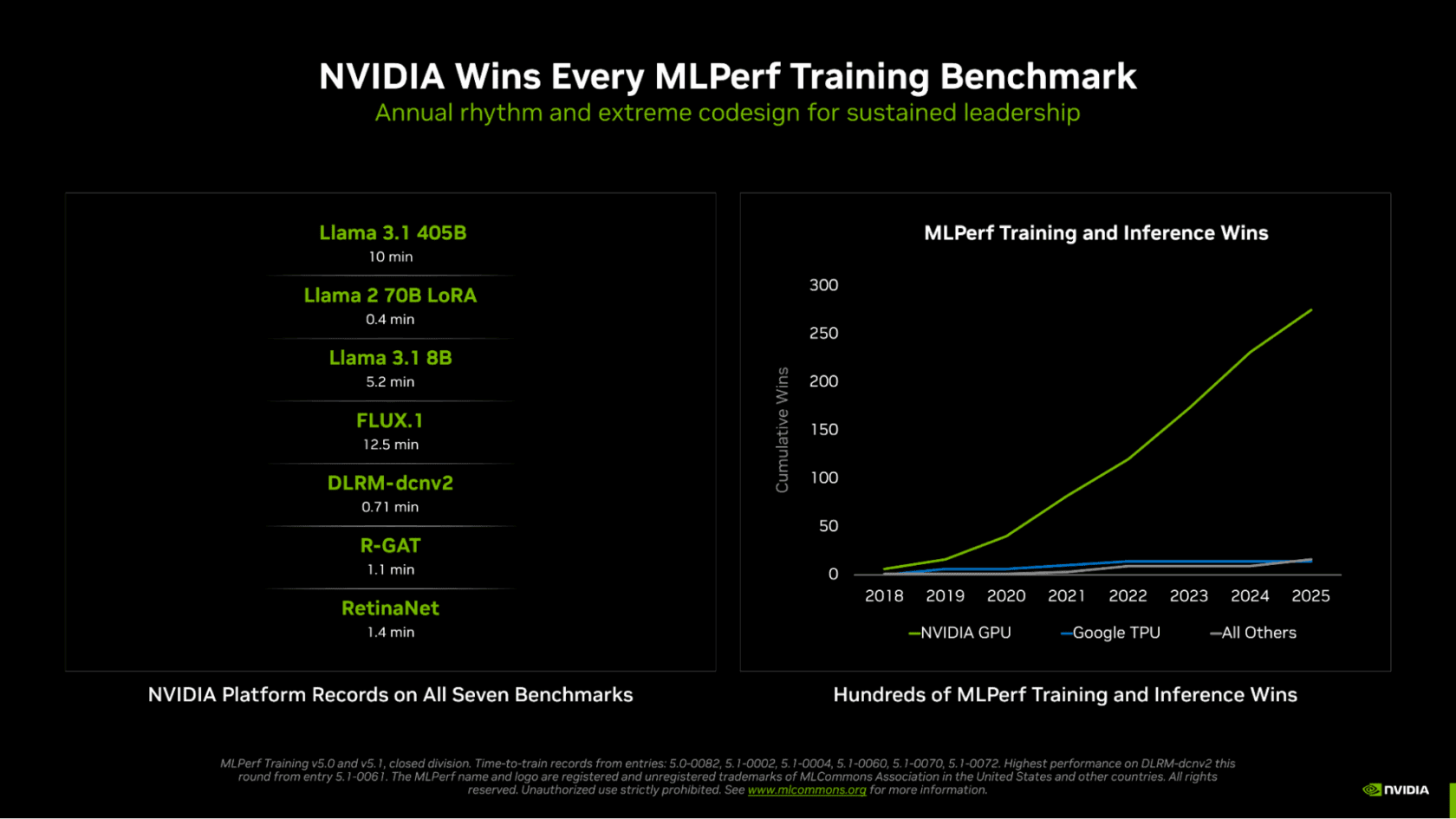

NVIDIA swept the MLPerf Training v5.1 benchmarks, posting the fastest training times on all seven tests, which span large language models, image generation, recommender systems, computer vision, and graph neural networks. The newly debuted GB300 NVL72 rack-scale system, powered by the NVIDIA Blackwell Ultra GPU architecture, delivered over 4x the Llama 3.1 405B pretraining performance and nearly 5x the Llama 2 70B LoRA fine-tuning performance of the prior Hopper generation. These gains came from architectural enhancements, including 15 petaflops of NVFP4 AI compute and 279GB of HBM3e memory, and from training methods built around the new NVFP4 precision format.

NVIDIA Blackwell Ultra Dominates MLPerf Training v5.1

NVIDIA’s Blackwell Ultra GPU architecture led the MLPerf Training v5.1 benchmark suite, achieving the fastest times across all seven tests, including large language models, image generation, and recommender systems. NVIDIA was also the only vendor to submit results for every test, which reflects the breadth of its CUDA software and the programmability of its GPUs. This round marked the debut of the GB300 NVL72 system powered by Blackwell Ultra, which set a new performance baseline for AI model training.

A key driver of Blackwell Ultra’s results was the use of NVFP4 precision for LLM training, a first in MLPerf history. With new Tensor Cores capable of 15 petaflops of NVFP4 compute, NVIDIA achieved over 4x faster Llama 3.1 405B pretraining and nearly 5x faster Llama 2 70B LoRA fine-tuning than the previous-generation Hopper architecture, all while meeting the benchmark’s accuracy requirements. Each Blackwell Ultra GPU also carries 279GB of HBM3e memory, which contributed to these gains.

NVIDIA set a record time-to-train of 10 minutes on Llama 3.1 405B using over 5,000 Blackwell GPUs. It also set records on the newly added Llama 3.1 8B and FLUX.1 benchmarks. The Quantum-X800 InfiniBand platform contributed to these results by doubling scale-out networking bandwidth. A broad ecosystem of partners also submitted results on NVIDIA hardware.

NVFP4 Precision Unlocks Accelerated LLM Performance

NVIDIA swept all seven tests in MLPerf Training v5.1, and a key factor was the introduction of NVFP4 precision. FP4 calculations run at double the compute rate of FP8, and at up to triple that rate on the new Blackwell Ultra architecture, which substantially accelerates LLM training. NVIDIA is the only platform to date to have submitted MLPerf Training results using FP4 precision while meeting the benchmark’s accuracy requirements.
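
To give a feel for why 4-bit training needs care to hold accuracy, here is a minimal sketch of block-scaled 4-bit "fake quantization" in plain Python. It is an illustration under stated assumptions, not NVIDIA's implementation: it assumes a 4-bit E2M1 value grid and a block size of 16, keeps the per-block scale in full precision for simplicity, and the names `E2M1_GRID`, `BLOCK_SIZE`, `quantize_block`, and `quantize` are hypothetical.

```python
import math

# Assumption: magnitudes representable by a 4-bit E2M1 float
# (1 sign bit, 2 exponent bits, 1 mantissa bit).
E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)

# Assumption: number of values that share one scale factor.
BLOCK_SIZE = 16


def quantize_block(block):
    """Round one block to the 4-bit grid and map it back to real values."""
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return [0.0] * len(block)
    # Choose the scale so the largest magnitude lands on the top grid value.
    # A real format would store this scale in low precision as well.
    scale = amax / E2M1_GRID[-1]
    out = []
    for x in block:
        # Snap the scaled magnitude to the nearest representable value.
        mag = min(E2M1_GRID, key=lambda g: abs(abs(x) / scale - g))
        out.append(math.copysign(mag * scale, x))
    return out


def quantize(values, block_size=BLOCK_SIZE):
    """Quantize a flat list block by block, each with its own scale."""
    out = []
    for start in range(0, len(values), block_size):
        out.extend(quantize_block(values[start:start + block_size]))
    return out
```

Because each block gets its own scale, one outlier only coarsens the values that share its block rather than the whole tensor. That is one reason small block sizes help low-precision formats stay accurate.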

The GB300 NVL72 system, powered by Blackwell Ultra, delivered over 4x faster Llama 3.1 405B pretraining and nearly 5x faster Llama 2 70B LoRA fine-tuning than the previous Hopper generation. This leap is not only a hardware result. It also depends on software and algorithms designed to exploit the 15 petaflops of NVFP4 compute that Blackwell Ultra offers, together with its 279GB of HBM3e memory.

NVIDIA achieved a record 10-minute time-to-train for Llama 3.1 405B using over 5,000 Blackwell GPUs. A separate submission using 2,560 GPUs achieved a 45% speedup over the previous round, which points to efficient scaling and the benefits of NVFP4 precision. NVIDIA also set new records on newly added benchmarks such as FLUX.1.
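
Speedup figures like "4x" and "45%" are easy to misread as time saved. The short sketch below shows the conversion, assuming "45% speedup" means a 1.45x speedup factor; the helper names `speedup` and `time_reduction` are hypothetical, and the run times in the usage lines are made-up placeholders, not benchmark results.

```python
def speedup(old_minutes, new_minutes):
    """Speedup factor: how many times faster the new run finishes."""
    return old_minutes / new_minutes


def time_reduction(speedup_factor):
    """Fraction of wall-clock time saved at a given speedup factor."""
    return 1.0 - 1.0 / speedup_factor


# Placeholder times only: a run that drops from 40 to 10 minutes is 4x faster.
print(speedup(40.0, 10.0))
# A 4x speedup saves 75% of the time; a 1.45x speedup saves roughly 31%.
print(time_reduction(4.0), round(time_reduction(1.45), 3))
```

Under that reading, a 45% speedup shortens time-to-train by about a third, not by 45%.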

Record-Breaking Performance Across Diverse Benchmarks

NVIDIA swept all seven tests in the latest MLPerf Training v5.1 benchmarks, setting records across large language models (LLMs), image generation, and more. NVIDIA was also the only platform to submit results for every test, highlighting the versatility of its CUDA software and the programmability of its GPUs. The sweep shows that the platform delivers leading results consistently across diverse AI workloads.

The new Blackwell Ultra architecture, debuting in the GB300 NVL72 system, delivered over 4x faster Llama 3.1 405B pretraining and nearly 5x faster Llama 2 70B LoRA fine-tuning compared to the prior Hopper generation – all using the same number of GPUs. This leap is driven by innovations like new Tensor Cores offering 15 petaflops of NVFP4 AI compute and 279GB of HBM3e memory. Coupled with the Quantum-X800 InfiniBand platform (doubling networking bandwidth), Blackwell Ultra represents a significant architectural advancement.

A key enabler of these results was the adoption of NVFP4 precision, a first in MLPerf Training history. NVIDIA achieved a record Llama 3.1 405B training time of just 10 minutes using over 5,000 Blackwell GPUs. Using NVFP4 required careful design to maintain accuracy with lower-precision calculations. On Blackwell Ultra, FP4 calculations run at up to 3x the rate of FP8, which substantially increases available AI compute.