The increasing sophistication of generative artificial intelligence raises fundamental questions about how humans perceive and interact with these systems. New research explores a phenomenon called ‘noosemia’: the tendency to attribute intentionality, and even an inner life, to AI. Enrico De Santis and Antonello Rizzi, both of the University of Rome “La Sapienza”, lead a study that formalises this cognitive process. They argue that it arises not from physical similarity but from the complex linguistic performance and inherent unpredictability of large language models. The work proposes a multidisciplinary framework linking meaning-making in AI to human cognition. It positions noosemia alongside established psychological concepts such as pareidolia and the intentional stance, while highlighting what sets it apart from them. Understanding noosemia, and its inverse, ‘a-noosemia’, offers insight into the evolving relationship between humans and artificial intelligence, with implications for philosophy, epistemology, and the social impact of these technologies.

Documenting and Analysing Attributed AI Agency

The research investigates ‘noosemia’, a newly described phenomenon that arises during interactions with advanced artificial intelligence systems. Instead of traditional experimental methods, the investigation adopts an observational and phenomenological approach, rooted in detailed analysis of personal experiences and reported interactions. The authors chose this methodology because the phenomenon is subtle and subjective, which makes direct measurement unsuitable at this early stage. The core of the research is the careful documentation of instances in which individuals exhibit a ‘noosemic’ response: a feeling that the AI possesses agency or understanding beyond its technical capabilities.

These observations stem from the authors’ own encounters with large language models and from accounts by other people using these systems, particularly on complex tasks. The team focused on moments when the AI’s responses evoked surprise or a sense of genuine comprehension in the human observer, which suggests that the observer was attributing mental states to the machine. Given the novelty of the phenomenon and the lack of existing frameworks, the researchers treated personal experience as initial data. They recount specific interactions with AI and analyse instances in which it displayed unexpected abilities.

AI Dialogue Triggers Sense of Mind

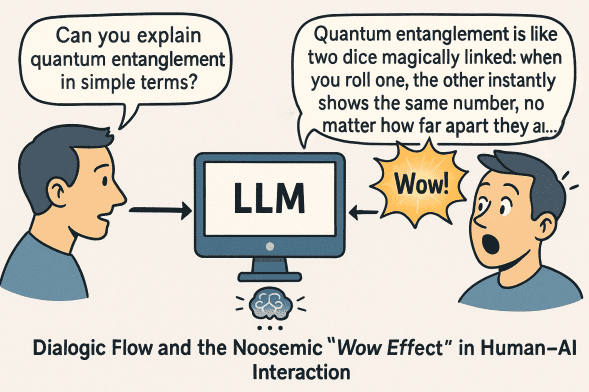

Researchers are investigating a newly identified phenomenon called “noosemia”, which describes how people attribute minds, intentions, and even interior lives to artificial intelligence systems, particularly those capable of complex dialogue. It is not simply a belief that the AI is intelligent. It is an immediate, experiential sense of being understood by an autonomous intelligence and interacting with it, triggered by surprisingly coherent or creative responses. According to the study, this attribution of mind arises not from physical resemblance but from the AI’s linguistic performance and the way it responds within a conversation, which creates a sense of dialogic resonance.

The study notes that large language models can convincingly simulate an understanding of another person’s perspective, often performing on par with young children on standard theory-of-mind tests. However, they do so by exploiting statistical patterns learned from vast datasets rather than through genuine mental-state modeling. Crucially, the models’ performance is sensitive to subtle changes in phrasing or context and often fails in unexpected scenarios, revealing a fundamental difference from human cognition. Interestingly, the experience of attributing mind to AI closely resembles the way humans engage with fictional narratives. This points to a deep-seated human tendency to project agency and understanding onto external entities.

Noosemia, AI Intentionality and Meaning Holism

This research introduces and formalizes the concept of noosemia, a cognitive phenomenon that occurs when people interact with generative AI systems. The study argues that users attribute intentionality, agency, and even interiority to these systems not because of physical resemblance. Instead, the attribution stems from the systems’ linguistic performance, the opacity of their mechanisms, and their inherent complexity. The authors build a multidisciplinary framework linking philosophy of mind, semiotics, and cognitive science. Within it, they distinguish noosemia from related concepts such as anthropomorphism, pareidolia, and the uncanny valley, and they ground it in the particular way generative models create and respond to meaning. A key contribution is a form of meaning holism adapted to large language models. The authors pair it with the formalization of the LLM Contextual Cognitive Field, which describes how meaning emerges through dynamic relationships between tokens.

This dual framework connects philosophical insights with computational mechanisms, offering a rigorous way to analyse how agency is attributed during human-AI interaction. The authors emphasize that understanding noosemia has practical implications. It can help improve human-AI interaction, foster trust, enhance explainability, and inform the design of future artificial agents, because the resulting sense of cognitive resonance influences user engagement and dependence. They acknowledge that noosemia requires further investigation, particularly into how user engagement and trust evolve as AI systems become more sophisticated.

👉 More information

🗞 Noosemia: toward a Cognitive and Phenomenological Account of Intentionality Attribution in Human-Generative AI Interaction

🧠 arXiv: https://arxiv.org/abs/2508.02622