Anthropic has proposed new statistical techniques to improve the evaluation of language models, the AI systems that generate human-like text. Current evaluation methods often report scores without error bars, which can lead to inaccurate or misleading conclusions.

A team of researchers has proposed five recommendations to address these issues, including analyzing paired differences between models, using power analysis to determine the number of questions needed to detect significant differences, and generating multiple answers per question to reduce randomness in scoring.
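
To make the power-analysis idea concrete, here is a minimal sketch that estimates how many questions an eval would need. It uses the textbook normal-approximation formula for a two-sided paired test, which is an assumption on my part and not necessarily the exact formula from the paper. The function name `questions_needed` and its parameters are hypothetical.

```python
import math
from statistics import NormalDist


def questions_needed(min_diff, sd_diff, alpha=0.05, power=0.80):
    """Approximate number of paired questions needed to detect a mean
    score difference of `min_diff` between two models.

    min_diff: smallest difference in mean score worth detecting (assumed).
    sd_diff:  assumed standard deviation of per-question score differences.
    alpha:    two-sided significance level.
    power:    desired probability of detecting a true difference of min_diff.
    """
    if min_diff <= 0 or sd_diff <= 0:
        raise ValueError("min_diff and sd_diff must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value of the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the target power
    n = ((z_alpha + z_beta) * sd_diff / min_diff) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Illustrative inputs only: a 3-point gap and a difference SD of 0.5.
    print(questions_needed(min_diff=0.03, sd_diff=0.5))
```

Halving the difference you want to detect roughly quadruples the number of questions, which is why small evals struggle to separate closely matched models.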

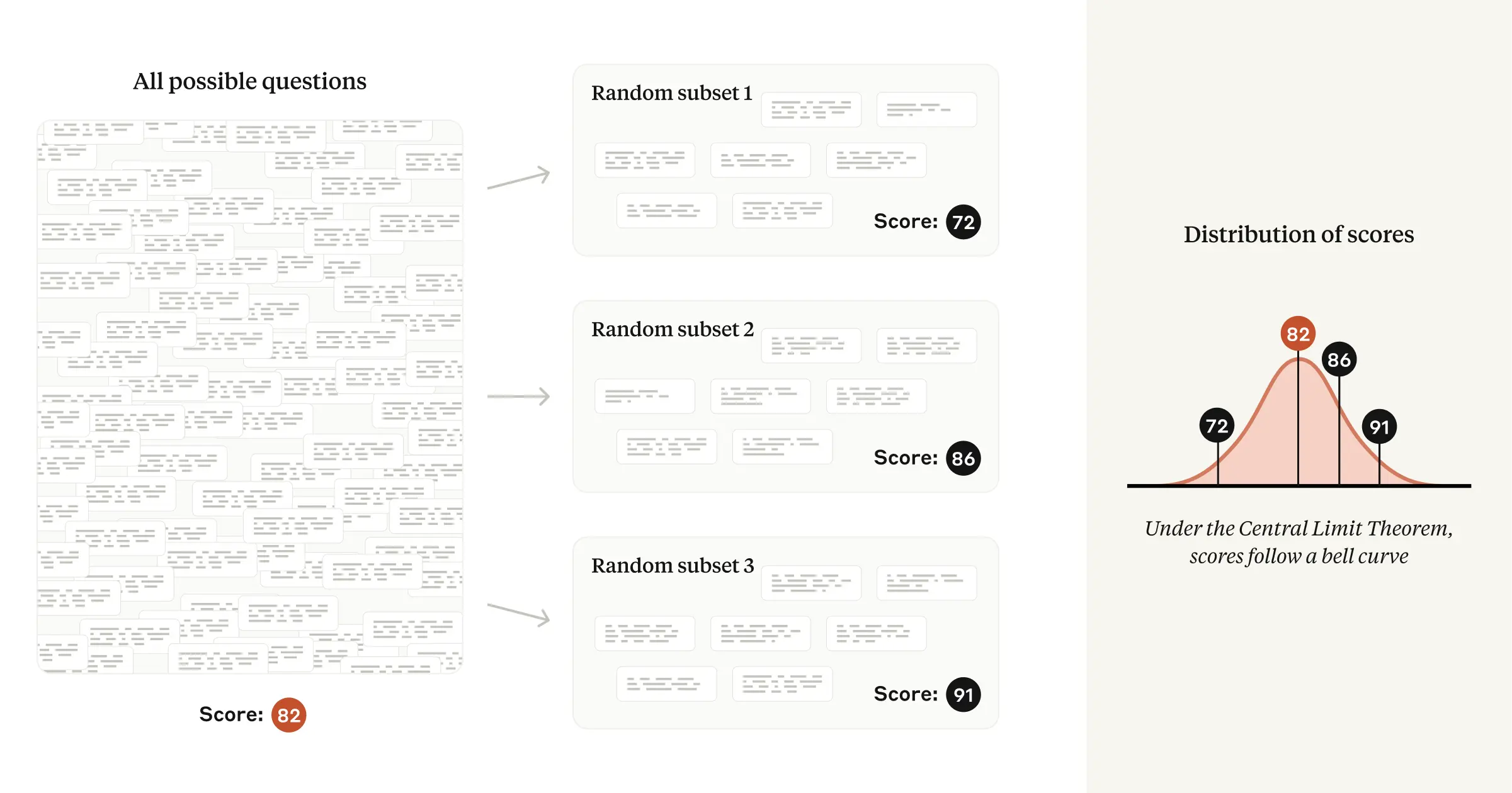

Comparing models question by question removes the variance due to question difficulty and isolates the variance in responses, giving a more precise signal from the same data. The researchers’ paper, “Adding Error Bars to Evals: A Statistical Approach to Language Model Evaluations,” aims to provide more precise and clear evaluations of language models, which are critical for advancing AI research.
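
As a short derivation of why pairing helps, assume two models A and B answer the same $n$ independently drawn questions, with per-question score variances $\sigma_A^2$ and $\sigma_B^2$ and correlation $\rho$ between their per-question scores. These symbols are introduced here for illustration and are not taken from the paper. The variance of the difference in mean scores is then:

$$
\mathrm{Var}\left(\bar{s}_A - \bar{s}_B\right) = \frac{\sigma_A^2 + \sigma_B^2 - 2\rho\,\sigma_A\sigma_B}{n}
$$

An unpaired comparison effectively treats $\rho$ as zero. When both models tend to find the same questions hard, $\rho$ is positive, so the paired estimate has a smaller variance and a tighter confidence interval for the same number of questions.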

The five recommendations outlined in the article are spot on. Let me break them down for you:

- Recommendation #1: Report standard errors and confidence intervals

This is a no-brainer. Without error bars, eval scores are essentially meaningless. By reporting standard errors and confidence intervals, researchers can provide a more accurate picture of their models’ performance.

- Recommendation #2: Use chain-of-thought reasoning (CoT) with resampling

I completely agree with the authors on this one. CoT is an excellent technique for evaluating language models, and resampling answers from the same model multiple times can help reduce the spread in eval scores.

- Recommendation #3: Analyze paired differences

This is a crucial point. By conducting paired-differences tests, researchers can eliminate the variance in question difficulty and focus on the variance in responses between models. The correlation coefficient between two models’ question scores can also provide valuable insights into their performance.

- Recommendation #4: Use power analysis

Power analysis is an essential tool for evaluating language models. By calculating the number of questions required to detect a statistically significant difference between models, researchers can design more effective evals and avoid wasting resources on underpowered studies.

- Recommendation #5: Report pairwise information

Finally, reporting pairwise information such as mean differences, standard errors, confidence intervals, and correlations can provide a more comprehensive understanding of how different models perform relative to each other.
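
To show what such a report might contain, here is a rough sketch that computes the mean difference, its standard error, a normal-approximation 95% confidence interval, and the correlation between two models' per-question scores. The function names and the toy scores are hypothetical, and the z-based interval is a simplification that assumes independent questions and a reasonably large eval.

```python
import math
from statistics import NormalDist, mean, stdev


def pearson(xs, ys):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def paired_report(scores_a, scores_b, confidence=0.95):
    """Summarize how model A compares with model B on the same questions."""
    if len(scores_a) != len(scores_b):
        raise ValueError("both models must be scored on the same questions")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    mean_diff = mean(diffs)
    # Standard error of the mean difference (sample SD over sqrt(n)).
    std_err = stdev(diffs) / math.sqrt(len(diffs))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "mean_diff": mean_diff,
        "std_err": std_err,
        "ci": (mean_diff - z * std_err, mean_diff + z * std_err),
        "correlation": pearson(scores_a, scores_b),
    }


# Toy per-question scores (1 = correct, 0 = incorrect); far too few
# questions for a real eval, used here only to exercise the function.
scores_a = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
scores_b = [1, 0, 0, 1, 0, 1, 0, 0, 1, 1]
report = paired_report(scores_a, scores_b)

if __name__ == "__main__":
    for key, value in report.items():
        print(key, value)
```

If the confidence interval for the mean difference includes zero, as it does with this toy data, the eval has not shown that one model is better than the other.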

In conclusion, I wholeheartedly endorse the recommendations outlined in this article. By adopting these statistical best practices, researchers can elevate the field of language model evaluations and gain a deeper understanding of AI capabilities.
