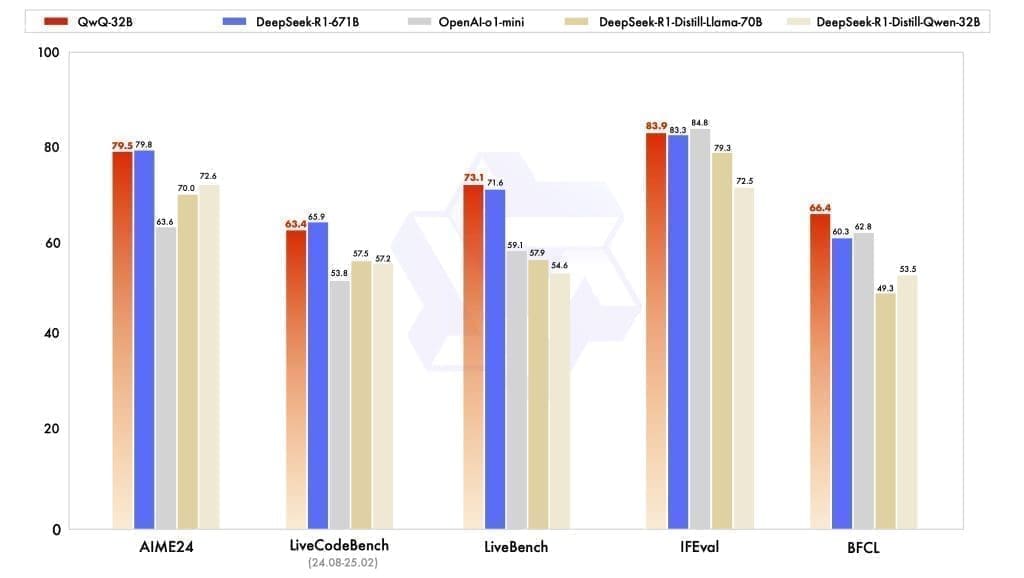

The Qwen Team has introduced QwQ-32B, a 32-billion-parameter large language model that uses reinforcement learning (RL) to reach performance comparable to DeepSeek-R1. It does so with far fewer parameters: DeepSeek-R1 has 671 billion, of which 37 billion are activated per token. The result highlights the effectiveness of scaling RL on strong foundation models pre-trained with extensive knowledge.

The model integrates agent-related capabilities, enabling it to think critically while using tools and to adapt its reasoning based on environmental feedback. QwQ-32B is released as an open-weight model under the Apache 2.0 license and is accessible via Hugging Face, ModelScope, and Qwen Chat. It has been evaluated on benchmarks for math, coding, and general problem-solving. The development underscores the potential of RL to enhance model capabilities and move closer to artificial general intelligence (AGI).
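For readers who want to try the Hugging Face release, the following is a minimal sketch of loading the model with the `transformers` library. It assumes the repository id `Qwen/QwQ-32B`, an installed `transformers` and `accelerate`, and enough GPU memory for a 32B model; the helper names `build_messages` and `generate_answer` and the token budget are illustrative choices, not part of any official example.

```python
MODEL_ID = "Qwen/QwQ-32B"  # assumed Hugging Face repository id


def build_messages(question: str) -> list:
    """Wrap a user question in the chat-message format used by chat templates."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, max_new_tokens: int = 1024) -> str:
    """Load the model and generate a reply. Downloads large weights on first use."""
    # Imported lazily so the lightweight helper above works without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",   # use the dtype stored in the checkpoint
        device_map="auto",    # spread layers across available devices (needs accelerate)
    )

    # Render the conversation with the model's own chat template.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_answer("How many r's are in 'strawberry'?")` would return the model's reply as a string. Reasoning models tend to produce long outputs, so a generous `max_new_tokens` is usually needed.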

Scaling Reinforcement Learning for Enhanced Intelligence

Scaling RL plays a crucial role in enhancing the reasoning capabilities of models like QwQ-32B. By applying RL on top of pre-trained language models, researchers can significantly improve performance on tasks such as math and coding. This approach allows models to learn from interactions and adapt strategies over time, leading to more robust decision-making processes.

Through the effective use of scaled RL, QwQ-32B reaches reasoning performance comparable to DeepSeek-R1, a much larger model. Integrating agents with RL further extends these capabilities by enabling long-horizon reasoning, which is essential for solving complex problems that require sequential decision-making over extended periods.

Looking ahead, the team aims to combine stronger foundation models with RL powered by scaled computational resources to advance towards AGI. The results so far suggest that RL is an important tool for developing more sophisticated AI systems.

Model Architecture and Scaled RL Implementation

The QwQ-32B model incorporates a robust architecture designed to support scaled reinforcement learning (RL), enabling enhanced reasoning capabilities. This architecture allows the model to process complex tasks by leveraging distributed computing resources, ensuring efficient scaling of RL processes.

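A key aspect of this implementation is the training procedure, which improves the model iteratively. According to the Qwen Team's announcement, RL training started from a cold-start checkpoint and was driven by outcome-based rewards: an accuracy verifier checked the final answers to math problems, and a code execution server checked whether generated code passed predefined test cases. A later RL stage targeted general capabilities using a general reward model together with rule-based verifiers.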
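To make the idea of learning from a pass/fail signal concrete, here is a minimal sketch of outcome-based rewards of the kind often used in RL for reasoning models. Everything in it is an illustrative assumption rather than the Qwen Team's implementation: the function names, the `\boxed{...}` answer convention, the binary 0/1 reward values, and the use of in-process `exec`, which a real system would replace with a sandboxed execution service.

```python
import re
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the text, or None if absent."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def math_reward(completion: str, reference: str) -> float:
    """Reward 1.0 only when the extracted final answer matches the reference exactly."""
    answer = extract_final_answer(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


def code_reward(source: str, func_name: str, test_cases: list) -> float:
    """Reward 1.0 only when the generated function passes every test case.

    test_cases is a list of (args_tuple, expected_result) pairs.
    WARNING: exec runs arbitrary code; this is for illustration only.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)  # define the candidate function
        func = namespace[func_name]
        passed = all(func(*args) == expected for args, expected in test_cases)
        return 1.0 if passed else 0.0
    except Exception:
        # Syntax errors, missing functions, and runtime errors all score zero.
        return 0.0
```

In a training loop, rewards like these would score each sampled completion, and a policy-gradient method would increase the probability of completions that score well. The reward depends only on the final outcome, not on the intermediate reasoning steps.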

Furthermore, the integration of agents within the RL framework is pivotal for handling long-horizon reasoning tasks. This setup enables the model to manage sequential decisions effectively, addressing intricate problems that require extended analysis and strategic planning.
