bitnet.cpp, a new inference framework designed specifically for 1-bit Large Language Models (LLMs), marks a notable step forward for efficient AI. It enables fast and lossless inference of 1.58-bit models on central processing units (CPUs), with support for neural processing units (NPUs) and graphics processing units (GPUs) to follow.
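The "1.58-bit" label comes from ternary weights. Each weight takes one of three values, -1, 0, or +1, which carries log2(3) ≈ 1.58 bits of information. The Python sketch below shows one way floating-point weights can be mapped to ternary values. It assumes the absmean scheme described for BitNet b1.58: scale by the mean absolute weight, round, then clip to [-1, 1]. The function name and the `eps` default are illustrative. This is not code from bitnet.cpp, which runs models whose weights are already ternary.

```python
import math

# Each ternary weight takes one of three values, so it carries log2(3) bits.
BITS_PER_TERNARY_WEIGHT = math.log2(3)  # about 1.585


def absmean_ternary(weights, eps=1e-5):
    """Map float weights to {-1, 0, +1} plus one shared scale.

    Follows the absmean idea: divide by the mean absolute weight,
    round to the nearest integer, then clip to [-1, 1].
    """
    if not weights:
        raise ValueError("weights must be non-empty")
    gamma = sum(abs(w) for w in weights) / len(weights)
    # eps keeps the division safe when every weight is zero
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma  # approximate reconstruction: w ~= q * gamma


if __name__ == "__main__":
    q, gamma = absmean_ternary([0.42, -0.07, -0.9, 0.15, 0.0, -0.31])
    print(q, round(gamma, 4))  # [1, 0, -1, 0, 0, -1] 0.3083
```

Small weights collapse to 0 and large ones saturate at ±1, so a single float scale per tensor is all that survives of the original magnitudes.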

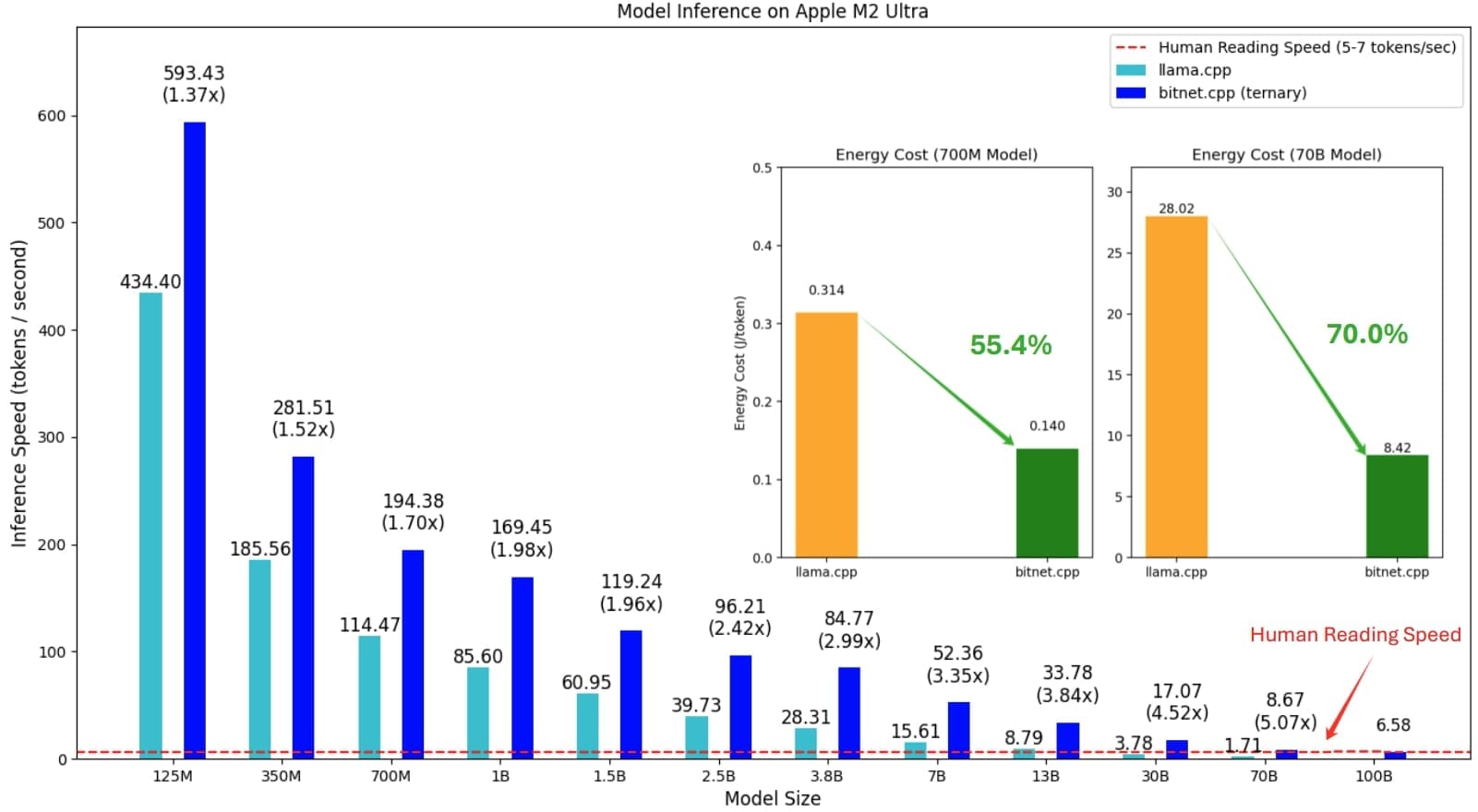

The initial release of bitnet.cpp delivers speedups of 1.37x to 5.07x on ARM CPUs and 2.37x to 6.17x on x86 CPUs. It also cuts energy consumption by 55.4% to 70.0% on ARM CPUs and by 71.9% to 82.2% on x86 CPUs. Together, these gains make it practical to run LLMs on local devices such as smartphones and laptops, at generation speeds comparable to human reading.

Efficient Inference Framework for 1-Bit Large Language Models

bitnet.cpp is an inference framework for 1-bit LLMs such as BitNet b1.58. It is built for fast and lossless inference across hardware platforms. Its initial release targets CPUs, and support for NPUs and GPUs is planned.

This development matters because it makes efficient LLM inference possible on local devices, without depending on a remote server. Running LLMs locally has so far been held back mainly by their computational intensity and memory requirements. bitnet.cpp addresses both problems with a suite of optimized kernels that execute 1-bit LLMs efficiently on CPUs.
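The Python sketch below puts rough numbers on the memory argument. It compares weight storage at 16 bits per parameter with ternary storage. It assumes 2 bits per weight for a simple packed layout and uses log2(3) bits as the theoretical floor. It counts weights only and ignores activations, the KV cache, and any layers kept at higher precision. The figures are illustrative arithmetic, not measurements of bitnet.cpp.

```python
import math


def weight_bytes(num_params: int, bits_per_weight: float) -> float:
    """Storage for the weights alone, in bytes (no activations or KV cache)."""
    return num_params * bits_per_weight / 8


if __name__ == "__main__":
    n = 100_000_000_000  # a 100B-parameter model
    for label, bits in [
        ("FP16", 16),
        ("2-bit packed ternary", 2),
        ("ideal ternary", math.log2(3)),
    ]:
        print(f"{label:>22}: {weight_bytes(n, bits) / 1e9:6.1f} GB")
```

For 100B parameters this prints 200.0 GB for FP16, 25.0 GB for 2-bit packing, and 19.8 GB at the log2(3) floor. That size difference is why ternary weights can fit in ordinary system RAM.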

Benchmarks show that bitnet.cpp achieves significant speedups and energy savings compared to existing inference frameworks. On ARM CPUs, it achieves speedups of 1.37x to 5.07x, with larger models seeing greater gains, and reduces energy consumption by 55.4% to 70.0%. On x86 CPUs, speedups range from 2.37x to 6.17x, with energy reductions between 71.9% and 82.2%. These results underscore the potential of bitnet.cpp for efficient LLM inference on local devices.
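Energy reductions are easier to compare with the speedup figures when expressed as ratios. Cutting energy by p% means the baseline uses 1 / (1 - p/100) times as much energy. The Python helper below applies that conversion to the reported ranges. It assumes each percentage refers to energy for the same workload. The helper name is illustrative.

```python
def energy_ratio(reduction_pct: float) -> float:
    """Baseline energy divided by new energy, for a reported % reduction."""
    if not 0 <= reduction_pct < 100:
        raise ValueError("reduction must be in [0, 100)")
    return 1 / (1 - reduction_pct / 100)


if __name__ == "__main__":
    reported = {"ARM": (55.4, 70.0), "x86": (71.9, 82.2)}
    for cpu, (lo, hi) in reported.items():
        print(f"{cpu}: {energy_ratio(lo):.2f}x to {energy_ratio(hi):.2f}x less energy")
```

Read this way, the reported ranges correspond to roughly 2.24x to 3.33x less energy on ARM and 3.56x to 5.62x less on x86.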

Optimized Kernels for Efficient Inference

The optimized kernels are central to bitnet.cpp's efficiency on CPUs. They are built around the defining property of 1-bit and 1.58-bit models: each weight is restricted to a tiny set of values ({-1, +1} or {-1, 0, +1}). As a result, weights can be stored compactly, and matrix multiplication reduces largely to additions and subtractions. Developing these kernels involved careful tuning of memory access patterns, data types, and CPU instructions.
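Part of the memory-access benefit comes from how densely ternary weights pack. The Python sketch below shows one possible layout, with four 2-bit codes per byte (-1 → 0b00, 0 → 0b01, +1 → 0b10). This encoding is a hypothetical illustration, not bitnet.cpp's actual weight format. The function names are likewise illustrative.

```python
def pack_ternary(weights):
    """Pack -1/0/+1 values into bytes, four 2-bit codes per byte.

    Code mapping (hypothetical): -1 -> 0b00, 0 -> 0b01, +1 -> 0b10.
    The first weight of each group goes in the lowest two bits.
    """
    out = bytearray()
    for i in range(0, len(weights), 4):
        byte = 0
        for j, w in enumerate(weights[i:i + 4]):
            if w not in (-1, 0, 1):
                raise ValueError(f"non-ternary weight: {w}")
            byte |= (int(w) + 1) << (2 * j)
        out.append(byte)
    return bytes(out)


def unpack_ternary(packed, count):
    """Inverse of pack_ternary; `count` drops padding codes in the last byte."""
    weights = []
    for byte in packed:
        for j in range(4):
            weights.append(((byte >> (2 * j)) & 0b11) - 1)
    return weights[:count]


if __name__ == "__main__":
    w = [1, 0, -1, 0, 0, -1]
    packed = pack_ternary(w)
    print(len(packed), list(packed))  # 2 [70, 1]
    assert unpack_ternary(packed, len(w)) == w
```

Six weights fit in 2 bytes here, versus 12 bytes at FP16. The fourth code (0b11) goes unused, which is the gap between 2 bits and the log2(3) floor.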

The kernels carry out the heavy computation LLMs require while maximizing performance and minimizing energy consumption. They achieve this through a combination of techniques, including parallelization, pipelining, and register blocking. These techniques are applied to the matrix multiplications and related operations that dominate LLM inference.
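Ternary kernels exploit one key property: a weight of -1, 0, or +1 turns each multiply-accumulate into a subtraction, a skip, or an addition. The deliberately unoptimized Python sketch below computes a ternary matrix-vector product with a single per-tensor scale, such as an absmean scale. It illustrates only the arithmetic. It does not reflect how bitnet.cpp's kernels are vectorized or laid out in memory, and the function name is illustrative.

```python
def ternary_matvec(rows, x, scale=1.0):
    """Compute y = scale * (W @ x) for W with entries in {-1, 0, +1}.

    No multiplications by weights: +1 adds x[j], -1 subtracts it,
    and 0 skips it. Only the final scale is a real multiply per row.
    """
    y = []
    for row in rows:
        if len(row) != len(x):
            raise ValueError("row length must match len(x)")
        acc = 0.0
        for w, xj in zip(row, x):
            if w == 1:
                acc += xj
            elif w == -1:
                acc -= xj
            elif w != 0:
                raise ValueError(f"non-ternary weight: {w}")
        y.append(scale * acc)
    return y


if __name__ == "__main__":
    W = [[1, 0, -1], [0, 1, 1]]
    print(ternary_matvec(W, [2.0, 3.0, 5.0], scale=0.5))  # [-1.5, 4.0]
```

Zero weights also give a natural form of sparsity: the corresponding input elements are never touched.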

Running Large Language Models on Local Devices

One of the main advantages of bitnet.cpp is that it can run large language models on local devices such as smartphones, laptops, and desktop computers. With models running locally, devices can handle complex language tasks without relying on cloud-based services or high-performance computing infrastructure.

Running a 100B BitNet b1.58 model on a single CPU shows what bitnet.cpp makes possible on local hardware. The model generates 5-7 tokens per second, comparable to human reading speed and fast enough for real-time processing of natural language input. Potential applications range from virtual assistants and chatbots to language translation and sentiment analysis.
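The Python conversion below relates 5-7 tokens per second to reading speed. It assumes roughly 0.75 English words per token, a common rule of thumb that varies with the tokenizer and the text. The function name and default value are illustrative.

```python
def words_per_minute(tokens_per_second: float, words_per_token: float = 0.75) -> float:
    """Convert generation speed to words per minute.

    words_per_token is an assumption (about 0.75 for typical English text).
    """
    return tokens_per_second * 60 * words_per_token


if __name__ == "__main__":
    for tps in (5, 7):
        ms_per_token = 1000 / tps
        print(f"{tps} tok/s -> {words_per_minute(tps):.0f} words/min, "
              f"{ms_per_token:.0f} ms per token")
```

Under that assumption, 5-7 tokens per second works out to roughly 225-315 words per minute, or about 143-200 ms per token.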

Future Directions and Extensions

The initial release of bitnet.cpp focuses on CPUs, but future versions will target NPUs and GPUs, extending efficient LLM inference to a broader range of hardware. Ongoing research is also exploring ways to optimize the kernels for specific use cases, such as edge computing and autonomous vehicles.

bitnet.cpp has significant implications for artificial intelligence because it makes complex models practical to run on local devices. As LLMs continue to grow in capability, efficient inference frameworks like bitnet.cpp will only become more important. With its suite of optimized kernels, bitnet.cpp is well positioned to shape future AI research and applications.
