The pursuit of artificial intelligence increasingly focuses on replicating the efficiency and adaptability of the human brain, and a new approach, termed neuromorphic intelligence, offers a promising path forward. Marcel van Gerven from Radboud University and colleagues argue that brain-inspired systems can be far more energy-efficient than conventional digital computers. The research proposes a unifying theoretical framework, rooted in dynamical systems theory, that integrates insights from diverse fields including neuroscience, physics, and artificial intelligence. By harnessing noise as a learning resource and employing differential genetic programming, the team advances the development of adaptive and sustainable artificial intelligence, paving the way for intelligence that emerges directly from physical substrates.

Brain-Inspired Computation and Evolutionary Machine Learning

The paper draws on a broad body of literature spanning interconnected fields centered on building intelligent systems inspired by the brain. A significant focus lies on neuromorphic computing, which aims to create hardware that mimics the brain’s structure and function, and on models suited to such hardware, including spiking neural networks and energy-based models. Researchers are also investigating machine learning techniques, often with an emphasis on making them more biologically plausible, efficient, and robust. A strong theme is the use of evolutionary computation, employing algorithms such as genetic programming to design circuits, learning rules, and entire AI systems.

This work extends to robotics, embodied AI, computational neuroscience, and the foundational principles of cybernetics and control theory. Scientists are actively developing neuromorphic computing systems, exemplified by self-organizing nanowire networks that function as stochastic dynamical systems. Early work on learning in analog neuromorphic hardware continues to inform modern approaches, while analysis of learning dynamics in spiking neural networks reveals key principles for efficient computation. Researchers are bridging the gap between energy-based models and backpropagation with novel learning rules, and demonstrating learning in physical, rather than simulated, neural networks.

Adaptable time constants in spiking neural networks are also being explored to enhance performance, and computational models are providing insights into brain oscillations. Alongside neuromorphic computing, advances in mainstream machine learning feature prominently. The Transformer architecture, a cornerstone of modern deep learning, continues to inspire new approaches, including a revival of Hopfield networks in a modern form whose update rule is closely related to Transformer attention. Researchers are also reexamining the role of recurrent neural networks and exploring alternative architectures for sequence processing.

Meta-learning, a technique for learning how to learn, is also receiving significant attention, and learning rules for spiking networks are being refined to improve efficiency and robustness. Evolutionary computation and genetic programming play a crucial role in this research landscape. Modern implementations of genetic programming, such as those built in JAX, are enabling the discovery of flexible and scalable solutions. Researchers are utilizing evolutionary algorithms to design circuits and solve general problems, and exploring the potential of evolutionary programming for adaptive systems. The development of intelligent agents extends to the realm of robotics and embodied AI.

Researchers are investigating swarm robotics, exploring how groups of robots can cooperate to achieve complex tasks. Intrinsic motivation, a driving force for exploration and learning, is being incorporated into robotic systems, and researchers are creating agents that can interact with the physical world and learn from their experiences. Computational neuroscience provides insights into brain function, informing the design of more intelligent and adaptive systems. Foundational works in cybernetics and dynamical systems theory provide a theoretical framework for understanding intelligence. Wiener’s seminal text on cybernetics remains relevant, and philosophical discussions of technology and its impact continue to shape the field.

Ornstein-Uhlenbeck Adaptation for Neural Networks

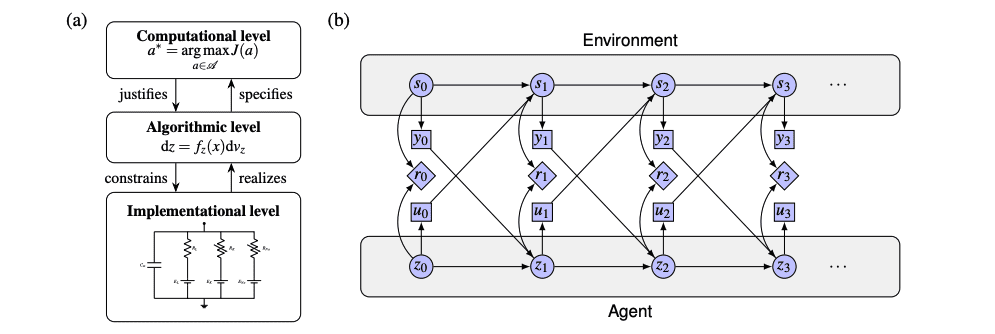

Scientists have developed a methodology for creating adaptive artificial systems inspired by the efficiency of the human brain. The work treats noise not as an impediment but as a fundamental resource for learning within artificial neural networks. The team engineered a system in which parameters adapt purely through the system’s equations of motion, with no separate gradient-based optimization step. The approach centers on Ornstein-Uhlenbeck adaptation (OUA), a mechanism that introduces state variables representing the parameters, the parameter means, and a reward estimate.

The core of the OUA method is to let each parameter evolve as an Ornstein-Uhlenbeck process: it is continually pulled back towards its mean at a set rate while being perturbed by Brownian noise of a set amplitude. Learning arises because the parameter means are updated by the reward prediction error, the difference between the reward received and its current estimate, so that perturbations that happened to increase reward are reinforced; this implements credit assignment. The reward estimate itself follows a differential equation, allowing the system to track and respond to its environment. To demonstrate the efficacy of this noise-based learning, the team applied OUA to a continuous-time recurrent neural network, extending the network’s state to include the OUA variables.
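The article describes OUA only in words. The sketch below is a minimal, single-parameter illustration that assumes one plausible form of the equations, with θ the parameter, μ its mean, r̄ the reward estimate and W a Wiener process: dθ = λ(μ − θ)dt + σ dW, dμ = η(r − r̄)(θ − μ)dt, dr̄ = ρ(r − r̄)dt. The quadratic reward, the target value 2.0 and all rate constants are hypothetical choices made for illustration, and the system is integrated with the Euler-Maruyama scheme.

```python
import math
import random


def reward(theta: float, target: float = 2.0) -> float:
    """Hypothetical task: reward is highest when theta equals `target`."""
    return -(theta - target) ** 2


def simulate_oua(
    lam: float = 1.0,    # rate at which theta is pulled back towards mu
    sigma: float = 0.5,  # amplitude of the Brownian noise driving theta
    eta: float = 1.0,    # learning rate of the parameter mean mu
    rho: float = 0.1,    # rate at which r_bar tracks the reward
    dt: float = 0.01,
    t_end: float = 300.0,
    seed: int = 0,
) -> list[float]:
    """Euler-Maruyama integration of the assumed OUA equations.

    Returns the trajectory of the parameter mean mu.
    """
    rng = random.Random(seed)
    theta, mu = 0.0, 0.0
    r_bar = reward(theta)  # start the reward estimate at the initial reward
    mus = []
    for _ in range(round(t_end / dt)):
        rpe = reward(theta) - r_bar          # reward prediction error
        dW = rng.gauss(0.0, math.sqrt(dt))   # Brownian increment over dt
        # The right-hand side uses the pre-step values of all three variables.
        theta, mu, r_bar = (
            theta + lam * (mu - theta) * dt + sigma * dW,
            mu + eta * rpe * (theta - mu) * dt,
            r_bar + rho * rpe * dt,
        )
        mus.append(mu)
    return mus


if __name__ == "__main__":
    mus = simulate_oua()
    late = mus[len(mus) // 2:]
    print(f"mean of mu over the second half: {sum(late) / len(late):.3f}")
```

Because μ moves only when a noisy excursion θ − μ coincides with a nonzero reward prediction error, the mean drifts up the reward gradient on average without any derivative ever being computed.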

Simulations revealed that the network parameters adjusted dynamically towards configurations yielding higher reward, solely through the system’s inherent equations of motion. Furthermore, the team explored dynamic updating of the noise variance, allowing the system to adaptively balance exploration and exploitation. Beyond adaptation during an agent’s lifetime, the research extends to a phylogenetic timescale through differential genetic programming, which evolves populations of agents to increase average fitness across generations. This evolutionary approach optimizes the system’s symbolic structure and slowly changing components, achieving improvements beyond those attainable through experience-dependent learning alone.
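The article does not detail how differential genetic programming works. As a generic illustration only, reading the term as genetic programming over the right-hand side of a differential equation, the sketch below evolves a symbolic expression f so that dx/dt = f(x) reproduces a hypothetical target trajectory. Expression trees, mutation-only variation, tournament selection and elitism are standard genetic-programming ingredients chosen for brevity; none of the names or settings come from the paper.

```python
import math
import random

# Expression trees: ("x",) is the state variable, ("c", value) a constant,
# and (op, left, right) a binary node with op drawn from OPS.
OPS = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}


def random_tree(rng: random.Random, depth: int = 2) -> tuple:
    """Grow a random expression with at most `depth` operator levels."""
    if depth == 0 or rng.random() < 0.3:
        return ("x",) if rng.random() < 0.5 else ("c", rng.uniform(-1.0, 1.0))
    op = rng.choice(list(OPS))
    return (op, random_tree(rng, depth - 1), random_tree(rng, depth - 1))


def evaluate(tree: tuple, x: float) -> float:
    if tree[0] == "x":
        return x
    if tree[0] == "c":
        return tree[1]
    return OPS[tree[0]](evaluate(tree[1], x), evaluate(tree[2], x))


def trajectory(f, x0: float = 1.0, dt: float = 0.05, steps: int = 40) -> list:
    """Forward-Euler integration of dx/dt = f(x)."""
    xs, x = [], x0
    for _ in range(steps):
        x = x + f(x) * dt
        xs.append(x)
    return xs


# Hypothetical target behaviour: exponential decay, dx/dt = -0.5 x.
TARGET = trajectory(lambda x: -0.5 * x)


def fitness(tree: tuple) -> float:
    """Negative mean squared error to TARGET; diverging equations score -inf."""
    xs = trajectory(lambda x: evaluate(tree, x))
    err = sum((a - b) * (a - b) for a, b in zip(xs, TARGET)) / len(TARGET)
    return -err if math.isfinite(err) else -math.inf


def mutate(tree: tuple, rng: random.Random) -> tuple:
    """Replace one randomly chosen subtree with a freshly grown one."""
    if len(tree) < 3 or rng.random() < 0.3:
        return random_tree(rng)
    op, left, right = tree
    if rng.random() < 0.5:
        return (op, mutate(left, rng), right)
    return (op, left, mutate(right, rng))


def evolve(pop_size: int = 40, generations: int = 25, seed: int = 1):
    rng = random.Random(seed)
    population = [random_tree(rng) for _ in range(pop_size)]
    history = []  # best fitness in each generation
    for _ in range(generations):
        scored = sorted(((fitness(t), t) for t in population),
                        key=lambda p: p[0], reverse=True)
        history.append(scored[0][0])
        children = [scored[0][1]]  # elitism: the best equation survives unchanged
        while len(children) < pop_size:
            contenders = rng.sample(scored, 3)  # tournament selection
            parent = max(contenders, key=lambda p: p[0])[1]
            children.append(mutate(parent, rng))
        population = children
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best, history = evolve()
    print("best fitness per generation:", [f"{h:.4g}" for h in history])
    print("best expression tree:", best)
```

Elitism guarantees that the best fitness never decreases from one generation to the next; a fuller implementation would add crossover and tuning of the constants.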

Brain-Inspired Computing Achieves Extreme Efficiency

Scientists are investigating brain-inspired computing as a pathway to more sustainable and efficient artificial intelligence. Current deep learning approaches consume vast resources, with projections indicating that the energy demands of computing could approach total global energy production by 2050. This work proposes a shift towards neuromorphic computing, which mimics the human brain’s structure and function to dramatically reduce energy consumption. The human brain is estimated to perform roughly 1 exaFLOPS (10^18 floating-point operations per second) while consuming only about 20 W, comparable to a single light bulb. Researchers aim to replicate this efficiency in artificial systems, recognizing that the resource intensity of current AI limits accessibility and raises environmental concerns.
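Taking the two brain figures above at face value (both are rough estimates), the implied efficiency follows directly, using 1 W = 1 J/s:

```latex
\frac{10^{18}\,\mathrm{FLOP\,s^{-1}}}{20\,\mathrm{W}}
  = 5\times 10^{16}\,\mathrm{FLOP\,J^{-1}},
\qquad
\frac{20\,\mathrm{J\,s^{-1}}}{10^{18}\,\mathrm{FLOP\,s^{-1}}}
  = 2\times 10^{-17}\,\mathrm{J\ per\ FLOP}
  = 20\,\mathrm{aJ\ per\ FLOP}.
```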

This study champions a theoretical framework rooted in dynamical systems theory, a mathematical approach using differential calculus to model intelligence in both natural and artificial systems. Dynamical systems theory provides a language for understanding how inference, learning, and control can emerge from the inherent dynamics of a system, removing the need for traditional software-hardware separation. The team proposes that by directly mapping these dynamical equations onto physical substrates, neuromorphic systems can be developed that more closely align with natural intelligence. This approach allows for the automatic discovery of intelligent systems through evolutionary algorithms, searching for optimal configurations within the physical constraints of the hardware. By embracing this common theoretical foundation, scientists can foster collaboration across diverse disciplines, including neuroscience, materials science, and artificial intelligence, to unlock the full potential of neuromorphic computing and create truly sustainable AI.
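To make the dynamical-systems view concrete, a continuous-time recurrent neural network (the model class the article applies OUA to) is a small system of differential equations whose behaviour is fully specified by its equations of motion. The sketch below assumes a common tanh form without bias terms, τ_i dy_i/dt = −y_i + Σ_j w_ij tanh(y_j) + I_i, integrated with forward Euler; the weights and inputs are illustrative, not taken from the paper.

```python
import math


def ctrnn_step(y, weights, inputs, tau, dt):
    """One forward-Euler step of tau_i dy_i/dt = -y_i + sum_j w_ij tanh(y_j) + I_i."""
    rates = [math.tanh(v) for v in y]
    return [
        y_i + dt / tau_i * (-y_i + sum(w * r for w, r in zip(row, rates)) + inp)
        for y_i, row, inp, tau_i in zip(y, weights, inputs, tau)
    ]


def simulate(y0, weights, inputs, tau, dt=0.01, steps=2000):
    """Integrate the network from y0 and return the full state trajectory."""
    y = list(y0)
    trajectory = [y]
    for _ in range(steps):
        y = ctrnn_step(y, weights, inputs, tau, dt)
        trajectory.append(y)
    return trajectory


if __name__ == "__main__":
    # Hypothetical two-neuron circuit with antisymmetric coupling.
    W = [[0.0, -2.0], [2.0, 0.0]]
    traj = simulate(y0=[0.5, 0.0], weights=W, inputs=[0.0, 0.0], tau=[1.0, 1.0])
    print(traj[-1])
```

With all weights set to zero, each unit simply relaxes to its input I_i with time constant τ_i, which makes a quick sanity check; nonzero coupling produces richer dynamics.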

Evolving Equations Drives Complex Adaptive Behaviour

This research demonstrates a pathway towards more efficient artificial intelligence by drawing inspiration from the principles governing the human brain, specifically focusing on dynamical systems theory. The team successfully evolved the equations defining the behaviour of neuromorphic agents, achieving improved control over simulated environments through both experience-dependent learning and a process of symbolic evolution. This work establishes that complex adaptive behaviour can emerge directly from the physical equations governing a system, suggesting a fundamental shift in how AI systems are designed and implemented. The study highlights the potential of harnessing noise as a resource for learning, utilizing an Ornstein-Uhlenbeck adaptation mechanism to drive the development of these agents.

👉 More information

🗞 Neuromorphic Intelligence

🧠 ArXiv: https://arxiv.org/abs/2509.11940