The increasing demand for robots operating seamlessly within complex, real-world environments presents a significant challenge for computing infrastructure. Jakub Fil of WAIYS GmbH, Yulia Sandamirskaya and Loïc Azzalin from Zurich University of Applied Sciences, alongside Hector Gonzalez et al., address this challenge by exploring a heterogeneous computing platform designed for real-time robotics. Their research details a system that combines neuromorphic computing, using the Loihi2 processor and event-based cameras, with the processing power of conventional GPU clusters. The architecture supports both rapid sensory perception and high-level cognitive functions, demonstrated through an interactive task involving a humanoid robot and a human musician. The authors present the work as a step towards the vision of Society 5.0, where robots and humans co-exist and collaborate within intelligent, responsive infrastructures.


Neuromorphic-GPU Synergy for Real-Time Cognition

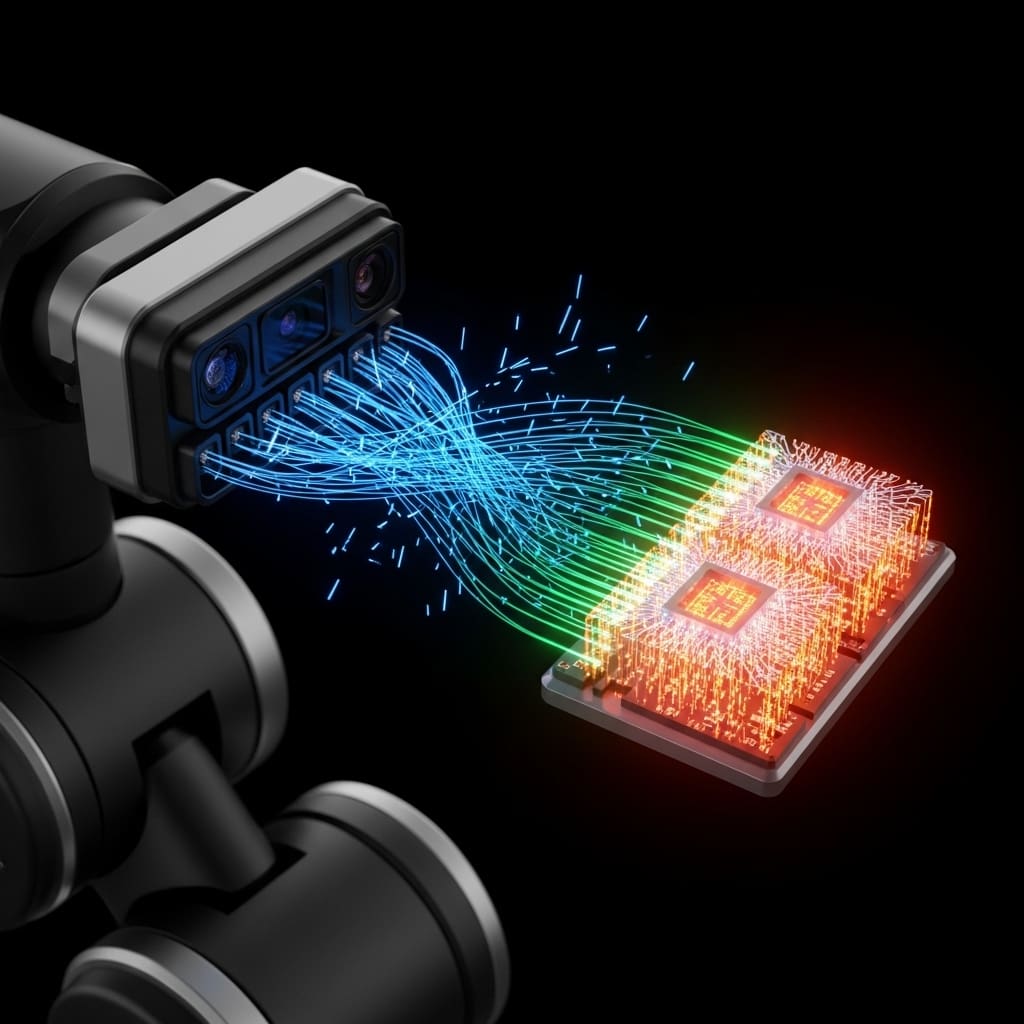

The researchers demonstrate a computing platform intended to underpin the next generation of smart cities and robotics, moving beyond the integrated systems of Industry 4.0 towards the vision of Society 5.0. The architecture combines neuromorphic computing, exemplified by the Intel Loihi2 processor, with conventional GPU clusters. The team achieved real-time perception and interaction by pairing Loihi2 with event-based cameras, which allows visual information to be processed rapidly at the network edge, while GPUs handle complex language processing, cognition, and task planning. This division of labour targets the need for energy-efficient, low-latency computing in cognitive cities co-inhabited by humans and robots.

The researchers integrated the Loihi2 processor with a Dynamic Vision Sensor (DVS) camera and trained an algorithm to track human hands in real time, which they report as a first for this processor and sensor pairing. This neuromorphic foundation is extended with NVIDIA DGX GPU systems, which run large language models and a specialized version of the Spaun cognitive architecture, a model that implements brain-inspired computation mechanisms for complex memory tasks. Experiments show the system can process sensor streams in real time, enabling a humanoid robot to perform intricate actions such as playing a musical instrument alongside a human partner. The team also designed a specialized, sustainable supercomputing Micro Data Center with a direct hot liquid cooling system to improve energy efficiency and thermal management.
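To make the event-camera input more concrete, the following minimal Python sketch shows the kind of data a DVS produces and one very simple way to summarise it. It is not the trained tracking algorithm that runs on Loihi2, whose details the article does not give. The `Event` record, the `event_centroid` helper, and the 1 ms window are all hypothetical choices made for illustration.

```python
from collections import namedtuple

# Hypothetical event record: pixel coordinates, timestamp in microseconds,
# and polarity (+1 for a brightness increase, -1 for a decrease).
Event = namedtuple("Event", "x y t polarity")


def event_centroid(events, t_start, window_us):
    """Return the (x, y) centroid of events inside a time window, or None.

    A crude stand-in for a position estimate: moving objects such as a hand
    generate dense clusters of events, so the mean event location follows them.
    """
    recent = [e for e in events if t_start <= e.t < t_start + window_us]
    if not recent:
        return None
    cx = sum(e.x for e in recent) / len(recent)
    cy = sum(e.y for e in recent) / len(recent)
    return cx, cy


# Illustrative data only; not taken from the study.
events = [
    Event(10, 20, 100, 1),
    Event(12, 22, 150, -1),
    Event(14, 24, 900, 1),
    Event(200, 50, 5000, 1),  # falls outside the first 1 ms window
]

centroid = event_centroid(events, t_start=0, window_us=1000)
print(centroid)
```

Because a DVS only reports pixels whose brightness changes, a static background produces almost no data, which is one reason event cameras suit low-latency processing at the edge.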

This combination supports real-time speech and action prediction, which is critical for controlling the humanoid robot during the interactive musical performance. The work also points to new ways of managing complex systems in future cognitive cities, including vehicles, drones, and robots, which demand advanced algorithms for adaptation, predictive maintenance, and resource allocation. The research supports the viability of a hybrid architecture that combines large-scale deep learning with symbolic reasoning and structured knowledge. The team illustrates this integration with a demonstration of real-time interaction between a robot and a human playing a theremin, showing the platform's potential for natural human-robot communication. By using high-density GPUs for long-running, compute-intensive tasks and energy-efficient neuromorphic hardware for real-time decision-making, the system aims to maintain performance while reducing energy consumption, a prerequisite for sustainable operation in smart city environments.

Loihi2, Ameca, and Multimodal Dataset for Interaction

The researchers engineered a hybrid system combining the neuromorphic Loihi2 processor with a local GPU cluster, enabling both real-time perception and high-level cognitive processing. The architecture was demonstrated through an interactive task in which the Ameca humanoid robot plays a theremin alongside a human musician. Central to the study was a training dataset comprising video recordings captured with a ZED2 Stereo camera, data from an iniVation Dynamic Vision Sensor (DVS), and accompanying audio. Images from the ZED2 camera were processed with the Body Tracking API from Stereolabs, which detects 18 keypoints on the human body and supports prediction of limb movements.

The Object Detection API further refined the recordings, providing additional data for training the robot to replicate the movements of a master theremin player, Carolina Eyck. The researchers used Ameca’s proprietary operating system, Tritium, to program sequences of movements based on these reference recordings. The system translates musical notes and volume levels into corresponding hand movements for the robot, allowing it to perform complex musical pieces. Beyond performance, the robot was equipped with speech and language models deployed on the GPU cluster, enabling conversational interaction with both individuals on stage and the audience.
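The translation from notes and volume levels to hand movements can be pictured with a short Python sketch. This is an illustration under stated assumptions, not the mapping programmed in Tritium: the function names, the playable range `low_note` to `high_note`, and the linear relation between semitones and hand distance are all hypothetical, and a real theremin's response to hand distance is nonlinear. The only fixed facts used are the standard equal-temperament formula (A4 = MIDI note 69 = 440 Hz) and the usual theremin convention that a hand nearer the pitch antenna raises the pitch while a hand nearer the volume loop lowers the volume.

```python
A4_MIDI = 69    # MIDI note number of A4
A4_HZ = 440.0   # reference tuning frequency


def midi_to_frequency(midi_note):
    """Equal-temperament frequency in Hz for a MIDI note number."""
    return A4_HZ * 2 ** ((midi_note - A4_MIDI) / 12)


def hand_targets(midi_note, volume, low_note=48, high_note=84):
    """Map a note and a volume in [0, 1] to normalised hand distances.

    Returns (pitch_hand, volume_hand), each in [0, 1], where 0 means the hand
    is at the antenna and 1 means it is at the far end of the assumed range.
    The linear-in-semitones mapping is an illustrative assumption.
    """
    clamped = min(max(midi_note, low_note), high_note)
    pitch_fraction = (clamped - low_note) / (high_note - low_note)
    pitch_hand = 1.0 - pitch_fraction          # higher pitch: hand moves closer
    volume_hand = min(max(volume, 0.0), 1.0)   # louder: hand moves away from the loop
    return pitch_hand, volume_hand


print(midi_to_frequency(69))   # A4
print(hand_targets(66, 0.5))   # mid-range note at half volume
```

In practice such targets would need calibration against the instrument, since the pitch field of a theremin shifts with the player's position and the surrounding environment.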

This capability allows users to request solos, activate teaching modes, or engage in duet performances via a graphical user interface displayed on a tablet. The work applies heterogeneous computing to real-time robotics, matching software algorithms to hardware architectures to minimize latency and maximize efficiency. The authors present the integration of neuromorphic hardware, such as Loihi2, with conventional GPU clusters as essential for meeting the diverse computational demands of social robotics, from immediate sensory feedback to complex memory tasks.

Robot Learns Theremin Through Neuromorphic-GPU Hybrid

The researchers advanced robotic autonomy by integrating neuromorphic computing with high-level cognitive processing, demonstrated through an interactive musical performance between a humanoid robot and a human. The team combined Intel’s Loihi2 processor with a cluster of NVIDIA GPUs to create a hybrid computing architecture capable of real-time perception and complex task planning. In experiments, the system enabled the Ameca humanoid robot to play the theremin, an electronic instrument requiring precise, contactless control, in response to a human musician. Central to this work is the deployment of the Spaun 2.0 brain model, comprising 6.5 million neurons, on an NVIDIA DGX A100 system, alongside the Loihi2 chip for rapid visual information processing.

The reported results indicate that the system can carry out cognitive memory tasks that influence the robot’s decision-making and state transitions during the musical interaction. According to the authors, the integration of these components is the first demonstration of a real-time workload on a neuromorphic platform coupled with a cognitive model running on a computing cluster, paving the way for advanced vision systems. The Ameca robot, whose facial mechanism has 32 motors, supports nuanced and expressive communication through nonverbal cues, which is crucial for natural human-robot interaction. Tests indicate that conventional note pre-processing techniques and Deep Neural Network models are effective for precise identification of the theremin's sound.
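One building block of note identification is converting a measured fundamental frequency into the nearest musical note. The Python sketch below shows that single step using the standard equal-temperament relation; it assumes the frequency has already been estimated from the audio. The article does not specify which pre-processing techniques or network models the authors used, so `frequency_to_note` is a hypothetical helper and not their pipeline.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def frequency_to_note(freq_hz):
    """Return (note_name, cents_offset) for the nearest equal-tempered note.

    cents_offset is positive when the input is sharp of the named note and
    negative when it is flat (100 cents = one semitone).
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    # Fractional MIDI note number, with A4 (MIDI 69) tuned to 440 Hz.
    midi_float = 69 + 12 * math.log2(freq_hz / 440.0)
    midi = round(midi_float)
    cents = 100 * (midi_float - midi)
    name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
    return name, cents


name, cents = frequency_to_note(445.0)
print(name, round(cents, 1))
```

The cents offset matters for a theremin in particular, because the instrument has no frets or keys and every note is a continuous pitch that can drift sharp or flat.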

The researchers recorded successful replication of musical notes produced by a human reference, demonstrating the robot’s ability to align its movements with a live performer. The system architecture underscores the potential of brain-inspired processing hierarchies, combining the capabilities of large-scale neural networks with the energy efficiency of neuromorphic hardware at the edge. The result is an energy-efficient platform for advanced robotics, with potential applications in cognitive cities and in reducing the energy consumption of robotics at scale. The work builds upon historical precedents, such as the musical automata constructed by Ismail al-Jazari in the 12th century, and advances contemporary efforts to create robots capable of dynamic, interactive musical performances.

Neuromorphic Robotics Enables Real-Time Human Interaction

This work presents a computing platform tailored to the demands of the increasingly sophisticated robotic systems envisioned for future smart cities. The researchers integrated neuromorphic hardware, specifically the Loihi2 processor and event-based cameras, with conventional GPU-based computing to enable real-time perception and advanced cognitive functions.

👉 More information

🗞 Heterogeneous computing platform for real-time robotics

🧠 ArXiv: https://arxiv.org/abs/2601.09755