The quest for energy-efficient artificial intelligence draws inspiration from the human brain, which performs complex tasks on a mere 20 watts. Jinxuan Ma and Wanlin Guo, both from Nanjing University of Aeronautics and Astronautics, and their colleagues investigate the brain’s fundamental information processing unit, the neural sphere, to explain its remarkable efficiency. Their research describes how these spheres, formed by clustered neurons, use chaotic dynamics and fractal structure to memorise and process information through varied electrical activity, and it leads to a novel brain-inspired computing architecture. This architecture yields estimates of the human brain’s storage capacity (in bytes) and processing power (in FLOPS), with an energy efficiency of up to 79%, reported as up to eight orders of magnitude above current chip technology, supporting the potential of this biologically inspired approach.

Neurons, Networks and Brain-Inspired Computation

This research analyses the human brain’s computational principles in order to develop more efficient and powerful computing systems, focusing on neuromorphic computing and on overcoming the limitations of traditional computer architecture. The team models neuronal behaviour with detailed biophysical properties, acknowledging the inherent complexity of these cells. Because brain connectivity is crucial, they also reconstruct neuronal networks at various scales, using techniques such as detailed anatomical tracing and advanced imaging.

Comprehensive cell type atlases, which detail the characteristics of different neuron types, are also essential for understanding the functional diversity of neuronal circuits. The research highlights the role of neuronal oscillations in information processing and communication within the brain, connecting brain function to information theory. The authors treat reaction time as a fundamental limit on processing speed and explore the relationship between information and energy consumption, acknowledging the thermodynamic cost of computation and referencing Landauer’s principle. The work advocates neuromorphic computing architectures that mimic the brain’s parallel, distributed, and energy-efficient processing, and it explores novel materials for artificial synapses and neurons with brain-like properties. Overall, it treats the brain as an information processing system whose principles could inform computing technologies that are more efficient, powerful, and adaptable.
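To give a sense of scale for the thermodynamic cost mentioned above, Landauer’s principle puts the minimum energy needed to erase one bit at k_B · T · ln 2. The short sketch below evaluates that bound at body temperature and divides the brain’s 20 watt budget by it. The 310 K temperature and the function names are assumptions made for illustration; the result is only an upper bound on irreversible bit operations, not an estimate taken from the paper.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)
BODY_TEMPERATURE_K = 310.0        # assumption: roughly 37 degrees Celsius
BRAIN_POWER_W = 20.0              # power budget quoted in the article


def landauer_limit_joules(temperature_kelvin: float) -> float:
    """Minimum energy to erase one bit at the given temperature."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


def max_bit_erasures_per_second(power_watts: float, temperature_kelvin: float) -> float:
    """Upper bound on irreversible bit erasures per second for a power budget."""
    return power_watts / landauer_limit_joules(temperature_kelvin)


energy_per_bit = landauer_limit_joules(BODY_TEMPERATURE_K)
erasure_ceiling = max_bit_erasures_per_second(BRAIN_POWER_W, BODY_TEMPERATURE_K)

print(f"Landauer limit at 310 K: {energy_per_bit:.3e} J per bit")
print(f"Ceiling at 20 W: {erasure_ceiling:.3e} bit erasures per second")
```

The bound works out to roughly 3 × 10⁻²¹ joules per bit, so a 20 watt budget allows on the order of 10²¹ bit erasures per second at most. Any real system, biological or electronic, operates far from this limit.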

Digital Neurons Model Local Brain Networks

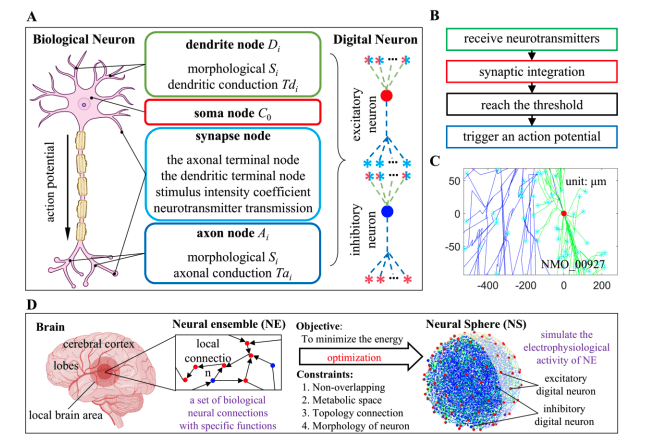

To understand brain efficiency, the researchers developed a computational model centred on the ‘neural sphere’, a digitally constructed network that mimics a local network of neurons. The approach considers both the physical structure and the electrophysiological activity of these networks, aiming to decode the principles behind the brain’s remarkable energy efficiency. The premise is that understanding how neurons are organised and interact locally is crucial to explaining whole-brain intelligence. The core of the methodology is the ‘digital neuron’, a node that represents the key components of a biological neuron: dendrites, soma, axon, and synapses.

By connecting these digital neurons, the researchers recreated networks that topologically resemble those found in the brain, allowing them to simulate information flow and observe emergent activity patterns. A key innovation was the use of mathematical optimisation to find the most energy-efficient arrangement of digital neurons within the neural sphere, with constraints that keep the arrangements biologically plausible while minimising energy consumption. The optimisation showed that a spherical distribution of neuron positions consistently yielded the lowest energy expenditure, suggesting this arrangement may be fundamental to brain efficiency. The resulting neural sphere serves as a platform for simulating brain activity: researchers can systematically vary parameters and observe the resulting electrophysiological activity, gaining insight into the computational principles underlying brain function.
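One way to build intuition for why a spherical arrangement might be economical is to compare average point-to-point distances in different shapes of equal volume. The sketch below is a toy Monte Carlo comparison, not the paper’s optimisation: it assumes that mean pairwise distance is a rough stand-in for wiring or signalling cost, and the sampler names, the elongated box, and its aspect ratio are all hypothetical choices made for illustration.

```python
import numpy as np

BALL_RADIUS = (3.0 / (4.0 * np.pi)) ** (1.0 / 3.0)  # radius of a unit-volume ball
BOX_ASPECT = 4.0                                     # hypothetical elongated box


def sample_ball(n, rng):
    """Uniform points inside a ball of unit volume."""
    directions = rng.normal(size=(n, 3))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    radii = BALL_RADIUS * rng.random(n) ** (1.0 / 3.0)
    return directions * radii[:, None]


def sample_cube(n, rng):
    """Uniform points inside a unit cube."""
    return rng.random((n, 3))


def sample_box(n, rng):
    """Uniform points inside a unit-volume box stretched along one axis."""
    side = BOX_ASPECT ** -0.5  # BOX_ASPECT * side * side == 1
    return rng.random((n, 3)) * np.array([BOX_ASPECT, side, side])


def mean_distance(sampler, n_pairs, rng):
    """Estimate the mean distance between two independent uniform points."""
    first = sampler(n_pairs, rng)
    second = sampler(n_pairs, rng)
    return float(np.linalg.norm(first - second, axis=1).mean())


rng = np.random.default_rng(0)
ball_mean = mean_distance(sample_ball, 200_000, rng)
cube_mean = mean_distance(sample_cube, 200_000, rng)
box_mean = mean_distance(sample_box, 200_000, rng)

print(f"ball: {ball_mean:.3f}  cube: {cube_mean:.3f}  box: {box_mean:.3f}")
```

Under these assumptions the ball gives the smallest mean distance of the three shapes, which matches the known closed form 36R/35 for a ball of radius R. The paper’s actual energy objective and biological constraints are richer than this proxy.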

Brain Capacity via Chaotic Neural Spheres

Researchers have developed a new account of how the human brain achieves remarkable computational power with surprisingly low energy consumption, built around the ‘neural sphere’. This structure organises neurons into spherical arrangements, enabling efficient information processing through complex electrical activity. The team proposes that the brain uses chaotic dynamics and fractal geometry to both store and process information, relying on ‘strange attractors’ to manage these complex signals. Based on this understanding, they constructed a theoretical brain architecture whose predicted storage capacity, expressed in bytes, significantly exceeds previous estimates.

The High-performance Neuromorphic Computing Architecture (HNCA) differs from traditional computer designs by employing a non-von Neumann approach, offering advantages in energy efficiency, storage density, and processing speed. The HNCA consists of processing and memory neural spheres, which work together to receive, process, and store information through intricate patterns of electrical activity. Its fractal structure, in which smaller neural spheres are integrated into larger, multi-layered arrangements, enhances information processing, allowing the brain to reprocess information and extract additional meaning. Importantly, the theoretical calculations indicate that the HNCA achieves an energy efficiency up to eight orders of magnitude greater than the latest computer chips, suggesting that the brain’s architecture, which operates at approximately 20 watts despite its immense complexity, is fundamentally more efficient.
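For readers unfamiliar with strange attractors, the sketch below uses the Lorenz system, a standard textbook example of chaos, to show the two properties that matter here: trajectories that start almost identically drift far apart, yet both remain confined to a bounded region. The Lorenz equations are a stand-in chosen for illustration and are not the neural dynamics studied in the paper. The parameter values are the classic ones, and the step size and run length are arbitrary choices.

```python
import math


def lorenz_derivative(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations with the classic parameters."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))

    k1 = lorenz_derivative(state)
    k2 = lorenz_derivative(shifted(state, k1, dt / 2))
    k3 = lorenz_derivative(shifted(state, k2, dt / 2))
    k4 = lorenz_derivative(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def simulate(initial_state, steps=4000, dt=0.01):
    """Return the list of states visited, including the initial one."""
    trajectory = [initial_state]
    for _ in range(steps):
        trajectory.append(rk4_step(trajectory[-1], dt))
    return trajectory


reference = simulate((1.0, 1.0, 1.0))
perturbed = simulate((1.0 + 1e-8, 1.0, 1.0))  # tiny change in the starting point

separations = [math.dist(a, b) for a, b in zip(reference, perturbed)]
largest_separation = max(separations)
largest_coordinate = max(abs(c) for point in reference for c in point)

print(f"initial separation: {separations[0]:.1e}")
print(f"largest separation: {largest_separation:.2f}")
print(f"largest coordinate magnitude: {largest_coordinate:.2f}")
```

The combination of sensitivity and boundedness is what makes a strange attractor a candidate for encoding many distinguishable states in a compact dynamical system, which is the role the article attributes to chaotic activity in neural spheres.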

Neural Spheres Boost Brain-Like Efficiency

This research presents a computational model of brain architecture based on ‘neural spheres’, agglomerations of neurons that optimise energy efficiency and information processing. The team shows that arranging neurons in spherical formations, combined with specific connection probabilities, can significantly reduce energy consumption while maintaining complex electrophysiological activity. Simulations using this model predict a storage capacity and computational power comparable to the human brain, with an energy efficiency several orders of magnitude greater than current computer chips. The findings support the idea that the brain’s efficiency stems from its fundamental architectural principles, specifically the arrangement and connectivity of neurons. The model reproduces realistic membrane potential activity and action potentials, suggesting it captures key aspects of neural computation. Although the simulations were conducted on relatively small-scale neural spheres, the results provide a foundation for exploring larger, more complex brain models.
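As a rough illustration of what simulating membrane potential and action potentials involves, the sketch below implements a leaky integrate-and-fire neuron, a common textbook simplification. It is not the paper’s digital neuron, which models dendrites, soma, axon, and synapses. All parameter values and names here are assumptions chosen only to produce plausible-looking behaviour.

```python
def simulate_lif(
    input_current_na,
    duration_ms=200.0,
    dt_ms=0.1,
    tau_ms=10.0,            # membrane time constant (assumed)
    resistance_mohm=10.0,   # membrane resistance (assumed)
    v_rest_mv=-65.0,
    v_threshold_mv=-50.0,
    v_reset_mv=-65.0,
):
    """Forward-Euler simulation of a leaky integrate-and-fire neuron.

    Returns the membrane potential trace (mV) and the spike times (ms).
    """
    steps = int(round(duration_ms / dt_ms))
    v = v_rest_mv
    trace, spike_times = [], []
    for step in range(steps):
        # Leak pulls v towards rest; input current pushes it upwards (MOhm * nA = mV).
        dv = (-(v - v_rest_mv) + resistance_mohm * input_current_na) * dt_ms / tau_ms
        v += dv
        if v >= v_threshold_mv:
            spike_times.append(step * dt_ms)  # record the action potential
            v = v_reset_mv                    # reset after firing
        trace.append(v)
    return trace, spike_times


weak_trace, weak_spikes = simulate_lif(input_current_na=1.0)
strong_trace, strong_spikes = simulate_lif(input_current_na=2.0)

print(f"weak input: {len(weak_spikes)} spikes")
print(f"strong input: {len(strong_spikes)} spikes")
```

With these assumed parameters the weaker current settles below threshold and never fires, while the stronger one fires repeatedly. A network-level model such as the neural sphere would couple many such units through synapses.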

👉 More information

🗞 High-performance neuromorphic computing architecture of brain

🧠 ArXiv: https://arxiv.org/abs/2508.03191