The challenge of creating realistic virtual and augmented reality experiences hinges on resolving the vergence-accommodation conflict: stereoscopic headsets drive the eyes to converge at the depth of a virtual object, while the display optics force them to focus at one fixed distance. Ugur Akpinar, Erdem Sahin, Tina M. Hayward, Apratim Majumder, Rajesh Menon and Atanas Gotchev, working across Tampere University and the University of Utah, now present a near-eye display approach that aims to overcome this limitation through accommodation invariance. Their system combines specially designed optics with a neural-network image pre-processor, and represents a significant step towards more comfortable and immersive visual experiences. By jointly optimising the optical elements and the displayed image, the researchers demonstrate a system that keeps images in focus across a range of simulated depths, which could reduce the strain and discomfort often associated with current virtual and augmented reality headsets.

The work builds on several research threads. Holographic displays reconstruct the optical wavefront of a scene to create genuinely three-dimensional images, while near-eye displays form a broader category of techniques for presenting images directly to the viewer's eyes. Researchers are exploring ways to extend the depth of field so that images stay sharp at different distances, and are using perceptual metrics to capture how the eye perceives contrast and sharpness. Deep learning is being applied to optimise image processing and depth estimation, and software tools such as Blender and Python support the design and testing of new display systems. Accurate measurement of display resolution and analysis of visual stimuli are essential for evaluating performance and viewing comfort, alongside factors such as the eyebox, the field of view and the interplay between accommodation and vergence.

Jointly Optimised Optics and Neural Image Processing

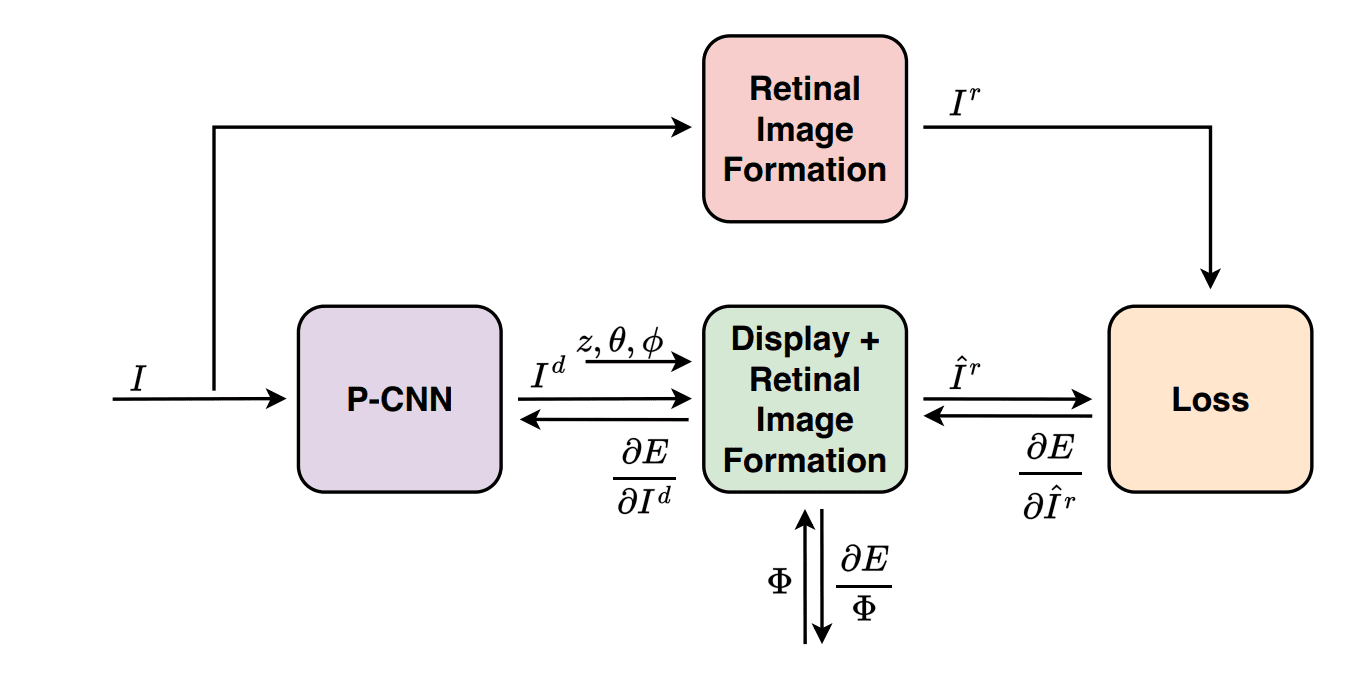

Scientists have developed a novel near-eye display system that addresses the vergence-accommodation conflict, a major obstacle for virtual and augmented reality. The approach is based on accommodation invariance: removing the retinal defocus blur that normally drives focusing, so that accommodation can once again follow eye convergence naturally. The system pairs a refractive lens eyepiece with a newly designed diffractive optical element and a pre-processing convolutional neural network. Its core innovation is end-to-end learning, in which the optical element and the image pre-processing are optimized jointly to deliver clear images across a range of depths.
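The end-to-end idea can be illustrated with a deliberately tiny 1D toy in plain Python. Everything in it is a hypothetical stand-in rather than the authors' model: a 3-tap filter `h` plays the role of the pre-processing CNN, and a single weight `w` (kept in (0, 1) by a sigmoid) plays the role of the diffractive element by blending a depth-dependent Gaussian defocus blur with a depth-independent one. Both are updated together, using finite-difference gradients with a backtracking step, to minimise the retinal error averaged over several depths.

```python
import math

N = 32                      # samples in a toy 1D "image"
DEPTHS = [0.0, 1.0, 2.0]    # hypothetical defocus levels (arbitrary units)
TARGET = [1.0 if (n // 4) % 2 == 0 else 0.0 for n in range(N)]  # bar pattern


def gaussian_psf(sigma, radius=4):
    """Normalised, centred Gaussian kernel of length 2 * radius + 1."""
    k = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


DEFOCUS = [gaussian_psf(0.5 + d) for d in DEPTHS]  # blur grows with defocus
SPREAD = gaussian_psf(1.2)                          # depth-independent blur


def circ_conv(signal, kernel):
    """Circular convolution with a centred, odd-length kernel."""
    r = len(kernel) // 2
    n = len(signal)
    return [sum(kernel[j] * signal[(i - (j - r)) % n] for j in range(len(kernel)))
            for i in range(n)]


def sigmoid(z):
    """Numerically safe logistic function; keeps the optical weight in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss(params):
    """Mean squared retinal error over all depths for params = [h0, h1, h2, z]."""
    h, w = params[:3], sigmoid(params[3])
    pre = circ_conv(TARGET, h)          # hypothetical stand-in for the pre-processing CNN
    total = 0.0
    for psf_d in DEFOCUS:
        # hypothetical stand-in for the coded optics: blend depth-dependent and invariant blur
        psf = [(1 - w) * a + w * b for a, b in zip(psf_d, SPREAD)]
        retina = circ_conv(pre, psf)
        total += sum((r - t) ** 2 for r, t in zip(retina, TARGET))
    return total / (len(DEPTHS) * N)


def numerical_grad(f, p, eps=1e-5):
    """Central finite-difference gradient (autodiff would be used in practice)."""
    g = []
    for i in range(len(p)):
        up, down = p[:], p[:]
        up[i] += eps
        down[i] -= eps
        g.append((f(up) - f(down)) / (2 * eps))
    return g


def optimise(steps=40, lr=0.5):
    """Jointly update pre-filter taps and optical weight with backtracking descent."""
    p = [0.0, 1.0, 0.0, 0.0]    # identity pre-filter, optical weight sigmoid(0) = 0.5
    history = [loss(p)]
    for _ in range(steps):
        g = numerical_grad(loss, p)
        step = lr
        while step > 1e-8:
            cand = [pi - step * gi for pi, gi in zip(p, g)]
            if loss(cand) < history[-1]:
                p = cand            # accept only steps that lower the loss
                break
            step *= 0.5
        history.append(loss(p))
    return p, history


params, history = optimise()
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}, optical weight {sigmoid(params[3]):.2f}")
```

In the real system the parameters would be CNN weights and a diffractive element design, and gradients would come from automatic differentiation through a differentiable optics model; the backtracking rule here simply guarantees that every accepted step lowers the loss.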

To achieve this, the team developed a fully differentiable model of retinal image formation that simulates how light is focused onto the retina and accounts for the optical limitations of the human eye. Crucially, the researchers built perceptual modeling into the optimization, incorporating the neural transfer function and the contrast sensitivity function into the loss function, so that the optimization favours images that are not only sharp on the retina but also perceived as sharp. This research represents a substantial advance in near-eye display technology, paving the way for more immersive and comfortable virtual and augmented reality experiences.
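The role of the contrast sensitivity function in the loss can be sketched with a classic analytic CSF. The example below uses the Mannos and Sakrison (1974) formula as a stand-in, which is an assumption: the paper's own neural transfer function and CSF models are not reproduced here. It weights the spectrum of the error between a simulated retinal image and its target, so that errors at spatial frequencies the eye barely resolves cost less. The sampling of 60 samples per degree is likewise a hypothetical choice.

```python
import cmath
import math


def csf_mannos_sakrison(f_cpd):
    """Mannos-Sakrison (1974) contrast sensitivity at f cycles per degree."""
    return 2.6 * (0.0192 + 0.114 * f_cpd) * math.exp(-((0.114 * f_cpd) ** 1.1))


def dft(x):
    """Plain O(N^2) discrete Fourier transform (no NumPy needed)."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]


def perceptual_loss(retina, target, samples_per_degree=60.0):
    """CSF-weighted squared error between a simulated retinal image and a target."""
    n = len(target)
    err = dft([r - t for r, t in zip(retina, target)])
    total = 0.0
    for k, e in enumerate(err):
        f_cpd = min(k, n - k) * samples_per_degree / n   # bins k and n-k share a frequency
        total += (csf_mannos_sakrison(f_cpd) * abs(e)) ** 2
    return total / n ** 2


# Hypothetical 1-degree, 60-sample target with a sinusoidal error added
N = 60
target = [0.5] * N


def with_error(cycles_per_degree, amplitude=0.1):
    return [t + amplitude * math.sin(2 * math.pi * cycles_per_degree * i / N)
            for i, t in enumerate(target)]


mid = perceptual_loss(with_error(8), target)    # near the CSF peak
high = perceptual_loss(with_error(28), target)  # close to the 30 cpd Nyquist limit
print(f"8 cpd error: {mid:.5f}, 28 cpd error: {high:.5f}")
```

Because this CSF peaks at roughly 8 cycles per degree and falls off steeply above it, the same-amplitude error is penalised far less at 28 cycles per degree. Only the CSF term is sketched; the neural transfer function mentioned above is not modelled.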

Accommodation Invariance Achieved with Wavefront Coding

The method targets the longstanding vergence-accommodation conflict of stereoscopic displays by achieving accommodation invariance: within the design range, the retinal image stays sharp whatever distance the eye focuses at, so the display no longer forces the eye to focus at one specific distance. It integrates a refractive lens eyepiece with a novel diffractive element that applies wavefront coding, working in tandem with a pre-processing convolutional neural network. The team used end-to-end learning to optimize the wavefront coding and the image pre-processing module simultaneously, producing static optics that work without tracking or adapting to the viewer's gaze.
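Wavefront coding is easiest to see with the classic cubic phase mask of Dowski and Cathey, used here only as a textbook stand-in: the paper's diffractive element is learned end to end, not a cubic plate. The sketch models a 1D pupil, adds a defocus phase W·x² and an optional cubic phase α·x³ (both in waves), and computes the modulation transfer function (MTF) as the magnitude of the pupil autocorrelation. The values of α, the defocus and the sampling are illustrative assumptions.

```python
import cmath
import math


def pupil(n_samples, defocus_waves, cubic_waves):
    """1D pupil on x in [-1, 1) with defocus W*x^2 and cubic alpha*x^3 phase (waves)."""
    xs = [-1.0 + 2.0 * i / n_samples for i in range(n_samples)]
    return [cmath.exp(2j * math.pi * (cubic_waves * x ** 3 + defocus_waves * x ** 2))
            for x in xs]


def mtf(p):
    """MTF as the magnitude of the pupil autocorrelation, equal to 1 at zero shift."""
    n = len(p)
    return [abs(sum(p[j + s] * p[j].conjugate() for j in range(n - s))) / n
            for s in range(n)]


def mtf_change(defocus_waves, cubic_waves, n_samples=256):
    """Mean absolute MTF difference between an in-focus and a defocused eye."""
    m_focus = mtf(pupil(n_samples, 0.0, cubic_waves))
    m_defocus = mtf(pupil(n_samples, defocus_waves, cubic_waves))
    return sum(abs(a - b) for a, b in zip(m_focus, m_defocus)) / n_samples


plain = mtf_change(1.0, 0.0)   # conventional optics: MTF collapses with defocus
coded = mtf_change(1.0, 5.0)   # cubic phase: MTF lower but far less depth-dependent
print(f"MTF change with 1 wave of defocus: plain {plain:.3f}, coded {coded:.3f}")
```

With these illustrative numbers the coded pupil's MTF is lower overall but changes much less between focus states; restoring the lost contrast is the kind of job a pre-processing stage can take on.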

Simulation analysis shows that the system maintains accommodation invariance across depth ranges of up to four diopters. By extending the depth of field, the system minimizes defocus blur and so decouples accommodation from blur cues, which otherwise pull focus towards the display plane and contribute to eye strain. The team also fabricated the designed diffractive element and built a benchtop setup, which confirmed that accommodation invariance can be achieved in hardware. The approach exploits the relationship between defocus blur and binocular disparity: once blur no longer drives focusing, accommodation is expected to be dictated by disparity instead.

The end-to-end learning framework jointly optimizes the pre-processing convolutional neural network and the display optics using a physics-based, differentiable simulation that propagates images through the display and viewer optics to form an image on the retina. Comparing this simulated retinal image with a ground-truth image, with neural contrast sensitivity included in the comparison, steers the optimization towards perceptually meaningful improvements.
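Depth ranges in this field are quoted in diopters, the reciprocal of distance in metres, so a range of four diopters could, for example, span everything from optical infinity down to 25 cm. The short helpers below convert between the two units and compute the vergence-accommodation mismatch for a conventional display whose single focal plane sits at a fixed distance. The specific distances (a focal plane at 2 m, objects from infinity to 25 cm) are illustrative assumptions, not figures from the paper.

```python
import math


def to_diopters(distance_m):
    """Optical power in diopters for a distance in metres (infinity -> 0 D)."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def to_metres(diopters):
    """Distance in metres for a power in diopters (0 D -> optical infinity)."""
    return math.inf if diopters == 0 else 1.0 / diopters


def vac_mismatch(object_distance_m, focal_plane_m):
    """Vergence-accommodation mismatch in diopters for a fixed-focus display."""
    return abs(to_diopters(object_distance_m) - to_diopters(focal_plane_m))


# Hypothetical conventional headset with its focal plane at 2 m (0.5 D)
for obj in (math.inf, 2.0, 1.0, 0.5, 0.25):
    print(f"object at {obj} m -> mismatch {vac_mismatch(obj, 2.0):.2f} D")
```

Under this assumption a conventional display leaves a 3.5 D mismatch for an object at 25 cm; accommodation invariance is meant to remove the blur cue that pins focus to the display plane, so that accommodation can follow vergence instead.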

Accommodation Invariance with Neural Display Optimisation

This research presents a new near-eye display method designed to overcome the vergence-accommodation conflict, a common problem in stereoscopic displays that causes visual discomfort and fatigue. The system integrates a refractive lens with a novel diffractive element and a pre-processing convolutional neural network, with the optics and the network optimized together through end-to-end learning. A key innovation is a differentiable model of retinal image formation that accounts for the limitations and aberrations of the human eye and incorporates perceptual effects such as contrast sensitivity. Through detailed simulations and a functional benchtop setup, the researchers demonstrate accommodation invariance across a depth range of up to four diopters, a condition intended to let accommodation couple with vergence and thereby reduce strain and improve visual comfort. The method relies on static optics, eliminating the need for complex adaptive optics or gaze tracking, and extends the depth of field achievable with conventional displays. Further work is needed to evaluate the system with human subjects: future research will focus on controlled experiments measuring accommodation responses and on subjective assessments of visual comfort, as well as on widening the field of view and enlarging the usable eyebox.

👉 More information

🗞 Wavefront Coding for Accommodation-Invariant Near-Eye Displays

🧠 ArXiv: https://arxiv.org/abs/2510.12778