Scientists are increasingly focused on understanding why neural networks are so remarkably compressible. Pedram Bakhtiarifard, Tong Chen, Jonathan Wenshøj, Erik B Dam, and Raghavendra Selvan, all from the Department of Computer Science at the University of Copenhagen, Denmark, present a novel approach to this problem through the lens of algorithmic complexity. Their research investigates the hypothesis that trained neural network parameters possess inherent structure, and therefore lower algorithmic complexity than randomly initialised weights, and that compression methods succeed by exploiting this structure. By formalising a constrained parameterisation termed ‘Mosaic-of-Motifs’ (MoMos), the team builds algorithmically simpler models that perform comparably to unconstrained networks while exhibiting reduced complexity, a finding with significant implications for efficient model design and deployment.

The work helps explain why artificial intelligence systems can often be dramatically slimmed down without losing accuracy. The ability to compress these ‘neural networks’ could unlock AI on a far wider range of devices, from smartphones to satellites. Empirical results indicate that MoMos not only compresses models without significant performance loss but also produces parameterizations that are demonstrably simpler from an algorithmic standpoint.

The study challenges conventional views of model complexity, which typically count parameters or measure computational cost. Instead, this research formalizes complexity as the length of the shortest program that generates the model’s weights (their Kolmogorov complexity), suggesting that effective training yields solutions with inherent, repeatable patterns.

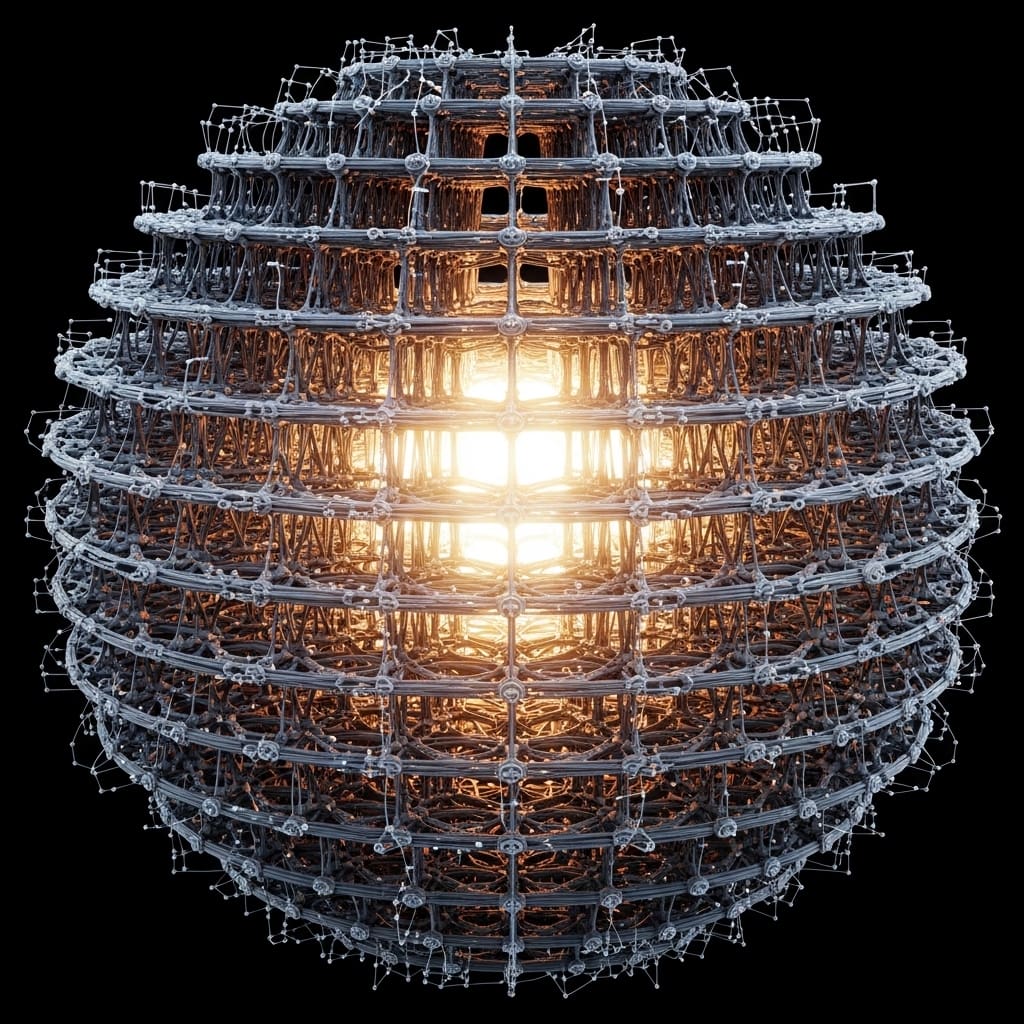

MoMos achieves this by constraining the parameter space, forcing the network to build its weights from a limited set of “motifs” arranged in a defined “mosaic” pattern. This constrained parameterization, which partitions parameters into blocks of size s and fills each block with one of k reusable motifs, demonstrably lowers algorithmic complexity compared to unrestricted models.
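As a minimal sketch of this parameterization, the NumPy snippet below assembles a weight matrix from a library of k motifs and a mosaic of motif indices. The block layout (consecutive runs of s entries in the row-major flattened matrix) and the names `assemble_weights`, `motifs` and `mosaic` are hypothetical choices for illustration; the paper may tile blocks differently.

```python
import numpy as np

def assemble_weights(motifs: np.ndarray, mosaic: np.ndarray, shape: tuple[int, int]) -> np.ndarray:
    """Build a weight matrix whose flattened entries are consecutive blocks of size s,
    each block a copy of one motif (hypothetical layout: row-major 1-D blocks)."""
    k, s = motifs.shape
    n = shape[0] * shape[1]
    if n % s != 0:
        raise ValueError("number of weights must be divisible by block size s")
    if mosaic.shape != (n // s,):
        raise ValueError("mosaic needs exactly one motif index per block")
    # Fancy indexing copies motif mosaic[j] into block j, then we restore the matrix shape.
    return motifs[mosaic].reshape(shape)

rng = np.random.default_rng(0)
k, s = 4, 8
shape = (16, 32)                                   # 512 weights -> 64 blocks of size 8
motifs = rng.standard_normal((k, s))               # the reusable motif library
mosaic = rng.integers(0, k, size=(shape[0] * shape[1]) // s)  # which motif fills each block
W = assemble_weights(motifs, mosaic, shape)
print(W.shape)                                     # (16, 32)
print(len(np.unique(W.reshape(-1, s), axis=0)))    # prints a value <= k
```

However large the matrix, it contains at most k distinct blocks, which is the repetition that the complexity argument relies on.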

By approximating Kolmogorov complexity, a theoretical measure of algorithmic information content, the team has shown that training actively reduces the complexity of neural networks. This simplification translates into models that maintain performance levels comparable to their unconstrained counterparts while requiring fewer resources for storage and computation.
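Kolmogorov complexity itself cannot be computed, but the output of any lossless compressor gives an upper bound on it (up to a constant for the decompressor), so compressed size is a common practical proxy. The sketch below applies that idea with Python's `zlib`. It is an assumption for illustration, not necessarily the estimator the authors used, and the uniform 8-bit quantization inside the hypothetical `compressed_bits` helper is an extra assumption needed to turn real-valued weights into a finite byte string.

```python
import zlib
import numpy as np

def compressed_bits(weights: np.ndarray, levels: int = 256) -> int:
    """Upper-bound proxy for the complexity of the quantized weights:
    size in bits of their zlib-compressed byte representation."""
    if not 2 <= levels <= 256:
        raise ValueError("levels must fit in one byte")
    w = weights.ravel()
    lo, hi = w.min(), w.max()
    scale = (hi - lo) if hi > lo else 1.0
    # Uniformly quantize to `levels` values so each weight becomes one byte (an assumption).
    q = np.round((w - lo) / scale * (levels - 1)).astype(np.uint8)
    return 8 * len(zlib.compress(q.tobytes(), level=9))

rng = np.random.default_rng(0)
n, k, s = 4096, 8, 16
unstructured = rng.standard_normal(n)              # i.i.d. weights, no reuse
motifs = rng.standard_normal((k, s))
structured = motifs[rng.integers(0, k, size=n // s)].ravel()  # motif-reusing weights
print(compressed_bits(unstructured), compressed_bits(structured))
```

On the structured weights, repeated blocks become cheap back-references for the compressor, so their compressed size should come out far smaller than that of i.i.d. Gaussian weights of the same length.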

The implications extend beyond mere efficiency gains, suggesting a fundamental principle governing the scalability of deep learning: that the true power of large models lies not just in their size, but in the underlying algorithmic simplicity of their learned representations. This discovery opens avenues for designing intrinsically compressible architectures and developing more efficient training strategies.

Constrained weight matrices leverage reusable motifs to reduce network complexity

Mosaic-of-Motifs (MoMos), a novel method for algorithmically simplifying neural networks, centres on a constrained parameterization technique. The study partitions weight matrices into blocks of size s and restricts each block to one of k reusable motifs; the reuse pattern that assigns motifs to blocks is the ‘mosaic’. This contrasts with traditional unconstrained parameterization, in which every weight can take an arbitrary value.

To implement MoMos, the team developed a system that generates these motif libraries and applies them during model training. Weight matrices were randomly initialised and then updated iteratively with standard backpropagation. Rather than adjusting individual weights freely, the optimisation selected entire motifs to populate the parameter blocks, so that each block is a copy of a motif from the library.
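The article does not detail how backpropagation interacts with discrete motif selection. One piece any motif-sharing scheme needs is the chain rule for tied parameters: if the mosaic is held fixed during a step (an assumption made here, not a description of the authors' procedure), the gradient for each motif is the sum of the gradients of all blocks that copy it. The sketch below shows that rule on a hypothetical squared-error toy loss; `motif_gradients`, `sgd_step` and `toy_loss` are illustrative names.

```python
import numpy as np

def motif_gradients(grad_w: np.ndarray, mosaic: np.ndarray, k: int, s: int) -> np.ndarray:
    """Chain rule for tied parameters: each motif's gradient is the sum of the
    gradients of every block that copies it."""
    grad_blocks = grad_w.reshape(-1, s)            # one row per block
    grad_motifs = np.zeros((k, s), dtype=grad_w.dtype)
    np.add.at(grad_motifs, mosaic, grad_blocks)    # unbuffered scatter-add over repeated indices
    return grad_motifs

def toy_loss(motifs, mosaic, target):
    """Hypothetical loss 0.5 * ||W - target||^2 for the assembled weights W."""
    w = motifs[mosaic].reshape(target.shape)
    return 0.5 * np.sum((w - target) ** 2)

def sgd_step(motifs, mosaic, target, lr=0.01):
    """One gradient step on the motifs with the mosaic held fixed."""
    k, s = motifs.shape
    w = motifs[mosaic].reshape(target.shape)
    grad_w = w - target                            # dL/dW for the squared-error toy loss
    return motifs - lr * motif_gradients(grad_w, mosaic, k, s)

rng = np.random.default_rng(0)
k, s, shape = 4, 8, (16, 32)
motifs = rng.standard_normal((k, s))
mosaic = rng.integers(0, k, size=(shape[0] * shape[1]) // s)
target = rng.standard_normal(shape)
before = toy_loss(motifs, mosaic, target)
motifs = sgd_step(motifs, mosaic, target)
after = toy_loss(motifs, mosaic, target)
print(before, after)                               # after < before for this small learning rate
```

Using `np.add.at` rather than `grad_motifs[mosaic] += grad_blocks` matters: ordinary fancy-index assignment keeps only one update per repeated index, whereas `np.add.at` accumulates all of them.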

A key innovation lies in approximating Kolmogorov complexity, the length of the shortest program that generates an object. Kolmogorov complexity is uncomputable in general, so it cannot be calculated directly; the study instead leverages the size of the motif library as a proxy. Smaller libraries imply greater algorithmic simplicity, as fewer motifs are needed to construct the weight matrix.
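A standard two-part-code argument suggests why a small library means a short description; it is offered here as an illustrative assumption, not as the paper's exact bound. A program only has to store the k motifs (s numbers each, at an assumed precision of b bits) and the mosaic (one index of $\lceil \log_2 k \rceil$ bits per block). For a weight matrix $W$ with $n$ entries, with $s$ dividing $n$:

$$
K(W) \;\le\; \underbrace{k\,s\,b}_{\text{motif library}} \;+\; \underbrace{\frac{n}{s}\,\lceil \log_2 k \rceil}_{\text{mosaic indices}} \;+\; O(\log n),
\qquad\text{versus}\qquad
K(W) \;\le\; n\,b + O(\log n)
$$

for arbitrary $b$-bit weights, where the $O(\log n)$ term covers the decoder and the header recording $n$, $s$, $k$ and $b$. The constrained bound is the smaller one whenever $k\,s \ll n$ and $\lceil \log_2 k \rceil \ll s\,b$.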

This approach allows a quantifiable assessment of the structural complexity of trained models. The work deliberately focuses on the parameterization itself, that is, the structure of the weight matrix, rather than on the numerical values, which distinguishes it from techniques such as weight sharing and quantization that primarily constrain values.

Algorithmic complexity reduction via constrained motif-based neural network parameterisation

Mosaic-of-Motifs (MoMos) demonstrably reduces the algorithmic complexity of neural networks, achieving parameterizations that are both compressible and perform comparably to unconstrained models. The core of this reduction lies in partitioning parameters into blocks of size s, with each block restricted to one of k reusable motifs according to a mosaic pattern.

Empirical results reveal that this constrained parameterization yields algorithmically simpler models than those with unrestricted weights. Specifically, the research team measured algorithmic complexity using approximations to Kolmogorov complexity, observing a quantifiable decrease during the training process. This work establishes that MoMos guarantees lower worst-case algorithmic complexity, with potential for further improvement through strategic choices of block size s and the number of motifs k.
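The effect of choosing s and k on the worst-case description length can be explored numerically. The sketch below counts bits in a simple two-part code (motif library plus mosaic indices) and compares it with storing every weight; the b = 32-bit precision, the omission of the small header recording n, s and k, and the function names are assumptions for illustration rather than the paper's exact accounting.

```python
import math

def momos_description_bits(n: int, s: int, k: int, b: int = 32) -> int:
    """Bits to store k motifs of size s (b bits per entry) plus one motif index per block.
    Ignores the small header; assumes s divides n."""
    if n % s or k < 1:
        raise ValueError("need s dividing n and k >= 1")
    index_bits = math.ceil(math.log2(k))           # bits per mosaic entry (0 when k == 1)
    return k * s * b + (n // s) * index_bits

def dense_description_bits(n: int, b: int = 32) -> int:
    """Bits to store every weight independently at b bits each."""
    return n * b

n = 2**20
print(momos_description_bits(n, 16, 256))          # → 655360
for s in (4, 16, 64):
    for k in (16, 256, 4096):
        ratio = dense_description_bits(n) / momos_description_bits(n, s, k)
        print(f"s={s:>2} k={k:>4} bound is {ratio:.1f}x smaller than dense storage")
```

Larger blocks shrink the mosaic (fewer indices) but enlarge the library, and more motifs cost more index bits, so the best (s, k) pair depends on n; whether a given pair also preserves accuracy is an empirical question that a bit count cannot answer.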

The study demonstrates that learned weights, when subjected to MoMos constraints, exhibit increased algorithmic regularity and compressibility compared to baseline unconstrained models. By imposing repeatability and reuse within parameter space, MoMos effectively induces algorithmic simplicity, resulting in models with provably shorter descriptions in the worst case and enhanced compressibility in practice.

The research highlights a fundamental shift in understanding model complexity, moving beyond simple parameter counts to consider the structure and reuse of learned parameters. This approach differs from prior work on weight-sharing and quantization, which primarily focus on constraining parameter values rather than the underlying parameterization itself. The team describes this as, to their knowledge, the first work to provide guarantees on the algorithmic complexity of learned parameters through explicit constraints on the model class.

Revealing inherent simplicity explains efficient compression in neural networks

Scientists have long known that large neural networks are surprisingly amenable to compression, yet the underlying reasons have remained elusive. This work offers a compelling shift in perspective, framing the issue not as one of clever engineering, but of inherent algorithmic simplicity. The observation that trained networks exhibit a structured, repeatable quality, a lower algorithmic complexity, is not merely a technical detail, but a fundamental insight into how these models learn.

For years, the field has focused on how to compress models, largely through trial and error. This research begins to explain why compression works so well, suggesting that we are, in effect, uncovering a pre-existing simplicity within the learned parameters. The implications extend beyond simply shrinking model size. Understanding algorithmic complexity opens the door to designing networks that are inherently more efficient from the outset, potentially reducing the computational burden of both training and inference.

Real-world applications, from edge devices with limited power to large-scale data centres, stand to benefit significantly. However, Kolmogorov complexity is uncomputable in general, so the study must rely on approximations of it, and these represent a clear limitation. While the empirical results are promising, establishing a truly rigorous link between algorithmic complexity and model performance remains a challenge.

Furthermore, the “mosaic” method introduced here is just one way to harness this underlying simplicity. Future work might explore alternative constrained parameterizations, or even algorithms that directly optimise for algorithmic complexity during training. The broader effort will likely see this algorithmic lens applied to other areas of machine learning, potentially revealing similar principles at play in different model architectures and learning paradigms. This isn’t just about smaller models; it’s about a deeper understanding of intelligence itself, and how it can be efficiently represented.

👉 More information

🗞 Algorithmic Simplification of Neural Networks with Mosaic-of-Motifs

🧠 ArXiv: https://arxiv.org/abs/2602.14896