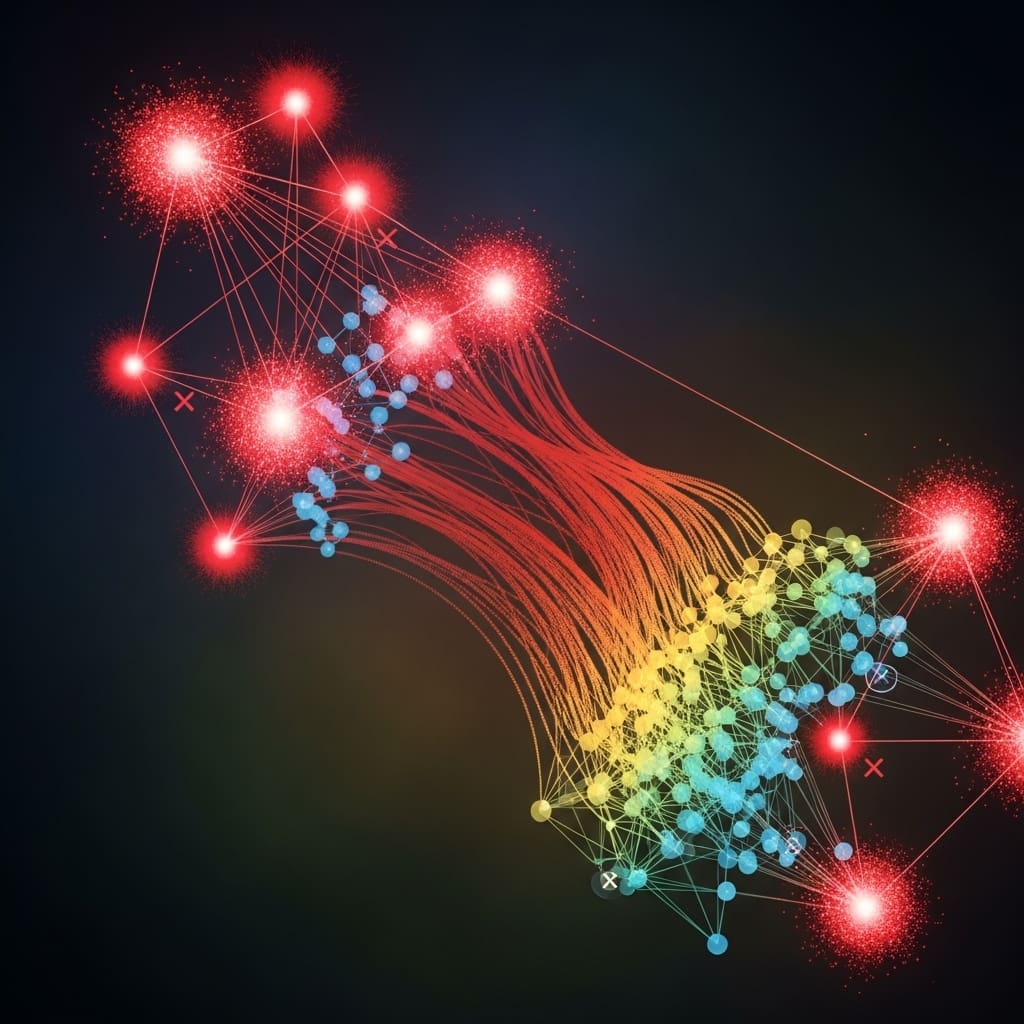

Graph neural networks (GNNs) are increasingly used to tackle complex problems, but remain vulnerable to malicious manipulation. Researchers Md Nabi Newaz Khan, Abdullah Arafat Miah, and Yu Bi, all from the Department of Electrical, Computer and Biomedical Engineering at the University of Rhode Island, have identified a significant new threat: a multi-targeted backdoor attack capable of redirecting GNN predictions to multiple different labels simultaneously. Unlike previous work focusing on single-target attacks, this study introduces a novel ‘subgraph injection’ technique, which preserves the original graph structure while subtly poisoning the data, and demonstrates remarkably high attack success rates across several datasets and GNN architectures. This research is crucial because it reveals a previously unknown level of GNN vulnerability, even against existing defence mechanisms, and underscores the urgent need for more robust security measures in graph-based machine learning applications.

Multi-target graph backdoors via subgraph injection

The researchers identify limitations in current backdoor attacks on graph classification, which are typically single-target attacks built on subgraph replacement. In place of subgraph replacement they propose subgraph injection, a method that preserves the structure of the original graphs while introducing malicious modifications. Experimental results on five datasets show that their attack framework outperforms conventional subgraph replacement-based attacks. Analysis on four GNN models supports the generalizability of the attack, indicating that it remains effective across GNN model architectures and training parameter settings.
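The difference between replacement and injection can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that injection means appending the trigger as new nodes and linking it to the host graph with a few edges, and that replacement means rewiring a random set of existing nodes to match the trigger. The function names, the edge-set graph representation, and the `num_links` parameter are all hypothetical.

```python
import random

def norm(u, v):
    """Store undirected edges with the smaller node id first."""
    return (u, v) if u <= v else (v, u)

def inject_trigger(num_nodes, edges, trigger_size, trigger_edges, num_links=1, seed=0):
    """Assumed form of injection: append the trigger as NEW nodes and link it
    to the host graph; every original node and edge is kept."""
    rng = random.Random(seed)
    new_edges = set(edges)
    # Trigger nodes receive fresh ids starting at num_nodes.
    for u, v in trigger_edges:
        new_edges.add(norm(num_nodes + u, num_nodes + v))
    # Connect the trigger to randomly chosen host nodes.
    for _ in range(num_links):
        host = rng.randrange(num_nodes)
        trig = num_nodes + rng.randrange(trigger_size)
        new_edges.add((host, trig))  # host id is always smaller than trig id
    return num_nodes + trigger_size, new_edges

def replace_with_trigger(num_nodes, edges, trigger_size, trigger_edges, seed=0):
    """Assumed form of replacement: pick existing nodes and overwrite the
    edges among them with the trigger pattern, which can destroy structure."""
    rng = random.Random(seed)
    chosen = rng.sample(range(num_nodes), trigger_size)
    chosen_set = set(chosen)
    # Drop every original edge that lies entirely inside the chosen node set.
    kept = {e for e in edges if not (e[0] in chosen_set and e[1] in chosen_set)}
    for u, v in trigger_edges:
        kept.add(norm(chosen[u], chosen[v]))
    return num_nodes, kept

# Toy host graph: a path on 6 nodes. Toy trigger: a triangle.
host_edges = {(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)}
triangle = [(0, 1), (1, 2), (0, 2)]

n_inj, e_inj = inject_trigger(6, host_edges, 3, triangle)
n_rep, e_rep = replace_with_trigger(6, host_edges, 3, triangle)
```

In this toy setting, injection grows the graph and leaves every host edge in place, whereas replacement keeps the node count fixed and may delete host edges among the chosen nodes.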

The authors state that the source code will be available at https://github.com/SiSL-URI/Multi-Targeted-Graph-Backdoor-Attack. Unlike prior work limited to single-target attacks, the team engineered a method capable of simultaneously redirecting predictions to multiple, distinct target labels, a significantly more complex and potent form of attack. Rather than replacing part of each graph, they implemented subgraph injection, inserting triggers designed to influence the global graph-level representation used for classification. This approach lets the attacker steer the GNN’s predictions, directing graphs that contain a specific trigger to that trigger’s predetermined class.
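As a rough illustration of the multi-target idea, the sketch below poisons a fraction of a training set so that each target class has its own trigger. It is a simplification under stated assumptions, not the paper's procedure: the `(num_nodes, edge_set)` graph encoding, the `poison_rate` parameter, the round-robin assignment of targets, and the function names are all hypothetical.

```python
import random

def inject(graph, trigger, rng):
    """Append the trigger as new nodes and attach it with one random edge
    (an assumed, simplified form of subgraph injection)."""
    n, edges = graph
    t_n, t_edges = trigger
    new_edges = set(edges) | {(n + u, n + v) for u, v in t_edges}
    new_edges.add((rng.randrange(n), n + rng.randrange(t_n)))
    return (n + t_n, new_edges)

def poison_multi_target(dataset, triggers, poison_rate=0.1, seed=0):
    """dataset: list of (graph, label); triggers: {target_label: trigger}.
    Returns the poisoned dataset and a map from poisoned index to target."""
    rng = random.Random(seed)
    poisoned = list(dataset)
    targets = sorted(triggers)
    budget = int(poison_rate * len(dataset))
    chosen = rng.sample(range(len(dataset)), budget)
    assignment = {}
    for k, idx in enumerate(chosen):
        graph, label = dataset[idx]
        target = targets[k % len(targets)]
        # Prefer a target that differs from the graph's true label.
        if target == label and len(targets) > 1:
            target = targets[(k + 1) % len(targets)]
        # Each target class is paired with its own trigger pattern.
        poisoned[idx] = (inject(graph, triggers[target], rng), target)
        assignment[idx] = target
    return poisoned, assignment

# Toy data: 20 path graphs on 4 nodes with alternating labels 0 and 1.
path = (4, {(0, 1), (1, 2), (2, 3)})
dataset = [(path, i % 2) for i in range(20)]
triggers = {
    0: (3, [(0, 1), (1, 2), (0, 2)]),          # triangle -> class 0
    1: (4, [(0, 1), (1, 2), (2, 3), (0, 3)]),  # 4-cycle  -> class 1
}
poisoned, assignment = poison_multi_target(dataset, triggers, poison_rate=0.2)
```

A model trained on `poisoned` would, if the backdoor takes hold, learn to associate each trigger pattern with its own target class while the untouched graphs keep their original labels.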

The team further validated the generalizability of their attack by testing it on four different GNN models, including Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs), and across various training parameter settings. Crucially, the work demonstrates that the attack’s success isn’t tied to specific GNN architectures or training configurations. The result exposes a fundamental vulnerability in GNNs, showing how malicious actors can compromise graph classification models with minimal disruption to normal operation. The planned release of the source code is intended to facilitate further investigation and the development of robust defence strategies against this emerging threat.

Multi-targeted Backdoor Attacks via Subgraph Injection

Across five datasets, the proposed attack consistently outperformed conventional subgraph replacement-based attacks, manipulating GNN predictions without drastically reducing the model’s accuracy on uncompromised graphs. Experiments on four distinct GNN models indicate that the attack generalizes across GNN architectures and training parameters. The injected triggers also remained effective against existing defence mechanisms, which highlights a critical security concern.
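Backdoor attacks of this kind are commonly scored with two quantities: the attack success rate on triggered inputs and the drop in accuracy on clean inputs. The sketch below uses common definitions of both, which are an assumption here since the exact formulas used in the paper are not given above. The function names are hypothetical.

```python
def attack_success_rate(preds, true_labels, target):
    """Fraction of triggered graphs, whose true class is not the target,
    that the backdoored model assigns to the target class."""
    eligible = [p for p, y in zip(preds, true_labels) if y != target]
    if not eligible:
        return 0.0
    return sum(p == target for p in eligible) / len(eligible)

def accuracy(preds, labels):
    """Plain classification accuracy."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

def clean_accuracy_drop(clean_model_preds, backdoored_model_preds, labels):
    """Accuracy lost on untriggered graphs after the backdoor is implanted."""
    return accuracy(clean_model_preds, labels) - accuracy(backdoored_model_preds, labels)

# Toy numbers: four triggered graphs aimed at target class 1.
asr = attack_success_rate(preds=[1, 1, 0, 1], true_labels=[0, 0, 0, 1], target=1)

# Toy numbers: predictions on four clean graphs before and after poisoning.
cad = clean_accuracy_drop([0, 0, 0, 1], [0, 0, 1, 1], labels=[0, 0, 0, 1])
```

For a multi-targeted attack, the success rate would be computed once per trigger and target pair, so that a weak trigger is not hidden behind a strong average.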

The researchers report that the subgraph injection method implants multiple triggers without the interference issues that affect the subgraph replacement approach. This is crucial for achieving a truly multi-targeted attack, where each trigger directs the GNN towards a different, predetermined target class. The work also highlights the vulnerability of GNNs to this type of sophisticated attack in graph classification tasks. The study provides a foundation for future work on securing GNNs in critical applications such as drug discovery, protein interaction prediction, and fraud detection.

Multi-Target GNN Backdoor via Subgraph Injection

This work highlights a significant vulnerability in GNNs, demonstrating that multiple, distinct triggers can be embedded within a model to redirect predictions to various target classes simultaneously. The attack’s effectiveness was confirmed across four different GNN model architectures and various training parameter settings, indicating its generalizability. The authors acknowledge that their analysis focuses on classification tasks and may not directly translate to other GNN applications. Future research could explore extending this attack to different graph-based learning paradigms, such as graph regression or clustering. Additionally, investigating more sophisticated defence mechanisms specifically designed to detect and mitigate multi-targeted backdoor attacks remains an important direction. This research establishes a clear need for enhanced security measures in GNNs to protect against increasingly complex adversarial threats.

👉 More information

🗞 Multi-Targeted Graph Backdoor Attack

🧠 ArXiv: https://arxiv.org/abs/2601.15474