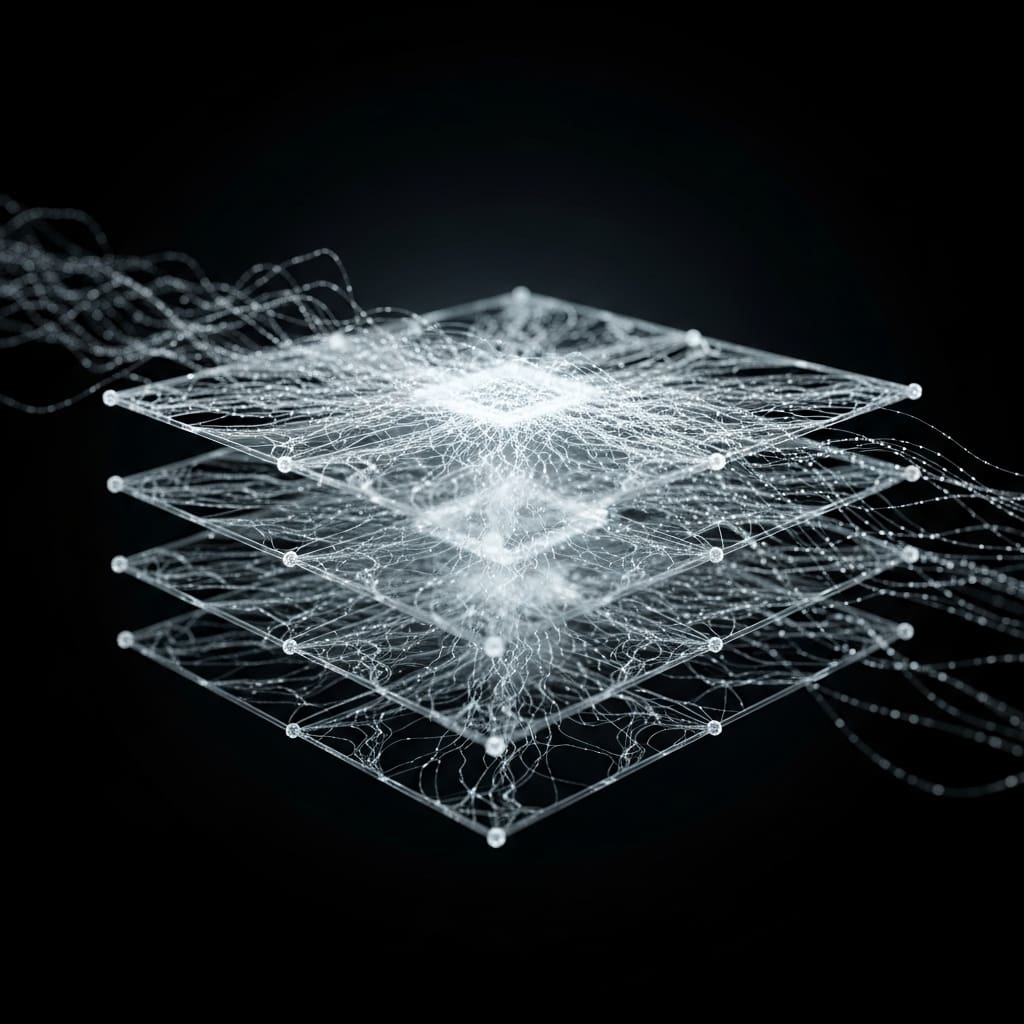

Photonic neural networks offer a potentially revolutionary pathway to faster and more energy-efficient machine learning, but simulating and training these networks is currently difficult, particularly when scaling up to complex architectures. Tzamn Melendez Carmona from Politecnico di Torino, Federico Marchesin from Ghent University and imec, and Marco P. Abrate from University College London, together with their colleagues, address this challenge with LuxIA, a new framework for photonic neural network training. LuxIA uses a technique called the Slicing method, which substantially reduces the computational cost of simulating how light propagates through these networks and so allows researchers to train larger and more intricate systems than was previously possible. The framework shows significant speed and scalability improvements on benchmark datasets, including image-recognition tasks, a step towards realising the potential of photonic AI hardware and accelerating innovation in the field.

Photonic Neural Networks Tackle AI Challenges

Photonic neural networks (PNNs) are a promising way to overcome the limitations of traditional electronic computing in artificial intelligence, particularly deep learning. Because they compute with light instead of electrons, PNNs can potentially be faster and more energy-efficient, but simulating and training them is challenging. Researchers are exploring a range of PNN architectures, comparing their strengths and weaknesses to optimize performance. Simulating large-scale PNNs is constrained by memory, and existing work relies on techniques such as Docker containerization, simulation tools such as Photontorch, and the transfer-matrix method.
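The transfer-matrix method can be illustrated on a single Mach-Zehnder interferometer (MZI): each optical component is a small complex matrix, and the response of a circuit is the product of its components' matrices in the order light meets them. The NumPy sketch below is a generic textbook example, not code from LuxIA or Photontorch; the 50:50 coupler convention and the names `BS`, `phase_shifter` and `mzi` are our own assumptions.

```python
import numpy as np

# Assumed convention: a lossless 50:50 directional coupler.
BS = np.array([[1, 1j], [1j, 1]], dtype=complex) / np.sqrt(2)


def phase_shifter(phi: float) -> np.ndarray:
    """Phase shift of phi radians on the upper arm; the lower arm is the reference."""
    return np.array([[np.exp(1j * phi), 0], [0, 1]], dtype=complex)


def mzi(theta: float, phi: float) -> np.ndarray:
    """2x2 transfer matrix of a Mach-Zehnder interferometer.

    Light meets the external phase phi first, then a coupler, the internal
    phase theta and a second coupler, so the matrices are multiplied
    right-to-left in that order.
    """
    return BS @ phase_shifter(theta) @ BS @ phase_shifter(phi)


# Usage: inject light into the upper port only and read the output powers.
x = np.array([1.0, 0.0], dtype=complex)
T = mzi(theta=np.pi / 2, phi=0.0)
y = T @ x
powers = np.abs(y) ** 2
print(powers)
print(np.allclose(T.conj().T @ T, np.eye(2)))  # lossless device => unitary matrix
```

In this convention, with light entering the upper port, the upper output carries a fraction sin²(θ/2) of the power: θ = 0 sends all light to the lower port (cross state), θ = π keeps it in the upper port (bar state), and θ = π/2 splits it evenly. A PNN chains many such 2×2 blocks across many waveguides, which is where the memory cost of transfer-matrix simulation grows.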

Slicing Optimizes Photonic Neural Network Simulation

Researchers have developed Slicing, a new transfer-matrix approach, to overcome the limitations of existing tools in simulating and training large-scale photonic neural networks (PNNs). Current simulation tools struggle with the computational demands of transfer-matrix calculations, which leads to high memory usage and long processing times. Slicing optimizes these computations by breaking large transfer matrices into smaller, more manageable slices. The team integrated it into a unified simulation and training framework named LuxIA, significantly reducing both memory requirements and execution time.
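The article does not describe the Slicing algorithm in detail. One plausible reading, and it is only an assumption on our part, is that a mesh is treated as a sequence of thin slices (columns of MZIs acting on disjoint waveguide pairs) that are applied to the optical fields one at a time, instead of being multiplied into a full N×N transfer matrix. The sketch below contrasts the two routes; the rectangular mesh layout and the names `random_mesh`, `dense_unitary` and `apply_sliced` are hypothetical, and this is not LuxIA's code.

```python
import numpy as np

# Assumed MZI convention: coupler, internal phase theta, coupler, external phase phi.
BS = np.array([[1, 1j], [1j, 1]], dtype=complex) / np.sqrt(2)


def _phase(a: float) -> np.ndarray:
    """Phase shift on the upper arm of a waveguide pair."""
    return np.diag([np.exp(1j * a), 1.0])


def mzi(theta: float, phi: float) -> np.ndarray:
    return BS @ _phase(theta) @ BS @ _phase(phi)


def random_mesh(n: int, rng: np.random.Generator):
    """Rectangular mesh of n columns ("slices"); each slice holds MZIs on
    disjoint neighbouring pairs (m, m+1), with alternating offsets."""
    return [
        [(m, rng.uniform(0, 2 * np.pi), rng.uniform(0, 2 * np.pi))
         for m in range(col % 2, n - 1, 2)]
        for col in range(n)
    ]


def dense_unitary(slices, n: int) -> np.ndarray:
    """Baseline: embed every slice in an n x n matrix and multiply them all."""
    U = np.eye(n, dtype=complex)
    for sl in slices:
        S = np.eye(n, dtype=complex)
        for m, theta, phi in sl:
            S[m:m + 2, m:m + 2] = mzi(theta, phi)
        U = S @ U  # later slices act after earlier ones
    return U


def apply_sliced(slices, X: np.ndarray) -> np.ndarray:
    """Slice-by-slice propagation: each MZI updates only its two rows of X."""
    Y = np.array(X, dtype=complex)  # copy so the input is left untouched
    for sl in slices:
        for m, theta, phi in sl:
            Y[m:m + 2] = mzi(theta, phi) @ Y[m:m + 2]
    return Y


rng = np.random.default_rng(0)
n, batch = 8, 5
slices = random_mesh(n, rng)
X = rng.normal(size=(n, batch)) + 1j * rng.normal(size=(n, batch))
print(np.allclose(dense_unitary(slices, n) @ X, apply_sliced(slices, X)))
```

Both routes give the same output fields, but the dense baseline stores N×N matrices and performs an N×N matrix product for every slice, whereas the sliced update only touches two rows of the field array per MZI. That is the general kind of saving a slice-wise transfer-matrix scheme can offer; LuxIA's reported memory and runtime gains come from its own implementation, which this sketch does not reproduce.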

The Slicing approach enables efficient back-propagation, the key algorithm for training neural networks, for photonic networks. The team models unitary transfer matrices with a photonic unit mesh, a circuit of programmable optical elements such as Mach-Zehnder interferometers (MZIs), and uses singular value decomposition (SVD) to factorize each weight matrix into two unitary matrices and a diagonal matrix. Extensive experiments on standard datasets, including MNIST, Digits, and Olivetti Faces, show that LuxIA outperforms existing tools in both speed and scalability. This makes it feasible to explore and optimize larger, more intricate PNN designs, which could facilitate broader adoption of photonic technologies and accelerate innovation in AI hardware.
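The SVD step can be illustrated with NumPy: any weight matrix W factors as W = U Σ V†, where U and V† are unitary (the part a mesh of MZIs can implement) and Σ is diagonal. This is a generic illustration, not LuxIA's implementation; the function names are hypothetical, and rescaling Σ by its largest singular value so that the diagonal stage only attenuates is an assumed convention that the article does not specify.

```python
import numpy as np


def svd_photonic_factors(W: np.ndarray):
    """Factor W (m x n) as U @ Sigma @ Vh for an SVD-based photonic layer.

    U (m x m) and Vh (n x n) are unitary and could each be realised by an
    MZI mesh; Sigma (m x n) is diagonal and holds the singular values.
    """
    U, s, Vh = np.linalg.svd(W)  # full_matrices=True: U is m x m, Vh is n x n
    Sigma = np.zeros(W.shape)
    Sigma[: len(s), : len(s)] = np.diag(s)
    return U, Sigma, Vh


def passive_scaling(Sigma: np.ndarray):
    """Assumed convention: divide by the largest singular value so every
    diagonal entry is <= 1 (attenuation only); return it as an external gain."""
    gain = float(Sigma.max())
    if gain == 0.0:  # all-zero weights: nothing to rescale
        return Sigma, 1.0
    return Sigma / gain, gain


def photonic_layer(U, Sigma, Vh, x, gain=1.0):
    """Fields pass through Vh first, then the diagonal stage, then U."""
    return gain * (U @ (Sigma @ (Vh @ x)))


rng = np.random.default_rng(1)
W = rng.normal(size=(3, 4))  # hypothetical weights: 4 inputs, 3 outputs
x = rng.normal(size=4)

U, Sigma, Vh = svd_photonic_factors(W)
Sigma_p, gain = passive_scaling(Sigma)
print(np.allclose(photonic_layer(U, Sigma_p, Vh, x, gain), W @ x))
```

Splitting the layer this way turns an arbitrary (even non-square) weight matrix into two unitary meshes and one column of per-channel attenuators, which is why unitary transfer matrices are the building block worth simulating efficiently.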

Slicing Boosts Photonic Neural Network Simulation

Scientists have developed Slicing, a new method to significantly improve the simulation and training of large-scale photonic neural networks (PNNs). Recognizing the computational demands of transfer matrix calculations in complex PNNs, the team integrated Slicing into a unified framework called LuxIA, designed for scalable PNN research. This method addresses limitations in memory usage and execution time, enabling the exploration and optimization of larger and more intricate PNN architectures. Experiments conducted across various photonic architectures and standard datasets, including MNIST, Digits, and Olivetti Faces, demonstrate that LuxIA consistently outperforms existing tools in both speed and scalability.

These results make it feasible to investigate and refine increasingly complex designs that were previously limited by computational resources. Measurements confirm that the Slicing method substantially reduces both memory use and processing time during PNN training. The framework proves effective across a range of visual recognition tasks and offers improved flexibility and usability for researchers, which should facilitate broader adoption of photonic technologies in artificial intelligence hardware. Future work will focus on refining the framework to accommodate even larger datasets and architectures.

👉 More information

🗞 LuxIA: A Lightweight Unitary matriX-based Framework Built on an Iterative Algorithm for Photonic Neural Network Training

🧠 arXiv: https://arxiv.org/abs/2512.22264