Accurately predicting outcomes, and quantifying the uncertainty around those predictions, remains a central challenge in machine learning. M. M. Hammad and colleagues now present an approach inspired by quantum mechanics: the Schrödinger Neural Network, an architecture that maps inputs to probability distributions using the mathematics of wave mechanics and the Born rule. The design guarantees that predicted densities are non-negative and normalised, represents multiple possible outcomes naturally, and allows key statistical quantities to be computed efficiently. By framing probabilistic prediction in the language of quantum physics, the work offers a coherent and tractable framework for moving beyond point estimates towards physically inspired, amplitude-based predictive distributions.

Schrödinger Neural Networks for Density Estimation

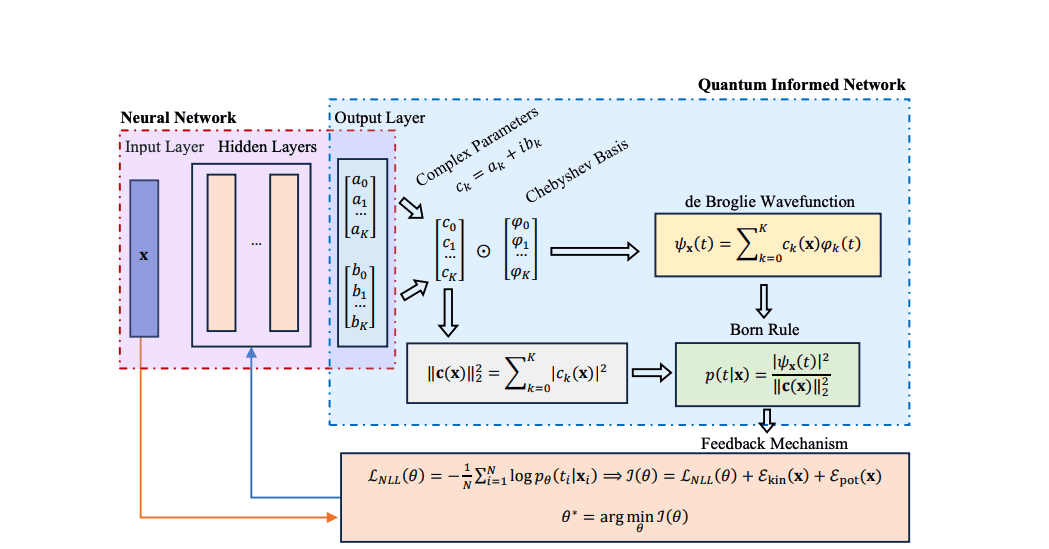

Researchers developed the Schrödinger Neural Network (SNN), a new approach to conditional density estimation. The SNN represents predictive uncertainty as a normalized wave function over the output domain and obtains probabilities from it with Born’s rule, which takes the density to be the squared magnitude of the wave function. A neural network computes the complex coefficients of a spectral expansion of this wave function in a basis of Chebyshev polynomials, suitably normalized to be orthonormal. Chebyshev polynomials are a standard choice in spectral methods because they can be evaluated stably through a three-term recurrence and approximate smooth functions efficiently, which makes them a numerically robust and inexpensive way to represent the conditional density.
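The article does not spell out how the coefficients become a density, so the following is a minimal sketch under stated assumptions: the output y is rescaled to (-1, 1), the wave function is ψ(y) = √w(y) · Σₖ cₖ pₖ(y) with the Chebyshev weight w(y) = 1/(π√(1 − y²)), and pₖ are the Chebyshev polynomials Tₖ rescaled to be orthonormal under that weight (p₀ = T₀, pₖ = √2·Tₖ for k ≥ 1). Born’s rule then gives p(y) = |ψ(y)|². The function names are hypothetical, and the raw complex vector stands in for the network’s output layer.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

# Assumed construction (illustrative, not taken from the paper): y in (-1, 1),
# psi(y) = sqrt(w(y)) * sum_k c_k p_k(y), with w(y) = 1 / (pi * sqrt(1 - y^2)).

def orthonormal_chebyshev(y, K):
    """Evaluate p_0..p_{K-1}: Chebyshev polynomials orthonormal under w(y)."""
    V = C.chebvander(np.asarray(y, dtype=float), K - 1)  # columns T_0(y) .. T_{K-1}(y)
    V[..., 1:] *= np.sqrt(2.0)                            # p_0 = T_0, p_k = sqrt(2) * T_k
    return V

def normalize(raw):
    """Map raw complex outputs (hypothetical network head) to unit-norm coefficients."""
    raw = np.asarray(raw, dtype=complex)
    return raw / np.linalg.norm(raw)

def born_density(c, y):
    """Born's rule: p(y) = |psi(y)|^2, for points strictly inside (-1, 1)."""
    y = np.asarray(y, dtype=float)
    w = 1.0 / (np.pi * np.sqrt(1.0 - y ** 2))
    amp = orthonormal_chebyshev(y, len(c)) @ c            # sum_k c_k p_k(y), complex-valued
    return w * np.abs(amp) ** 2

# Usage: three complex coefficients give a non-negative density on a grid.
c = normalize([1.0, 0.8j, -0.5 + 0.3j])
grid = np.linspace(-0.99, 0.99, 5)
print(born_density(c, grid))
```

Scaling the raw vector to unit length is what makes ∫p(y) dy = Σₖ|cₖ|² = 1 hold exactly under this construction: the weight factor cancels against the orthogonality integral, so no separate normalization step is needed.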

In the SNN, positivity and exact normalization of the predictive density are structural properties rather than constraints enforced numerically. The density can therefore be evaluated directly, without normalization procedures or approximations, which simplifies computation and avoids approximation error. The SNN also captures multimodality and asymmetry through interference among the basis modes, producing rich, irregular distributions without explicit mixture components or symmetry assumptions. Training maximizes the exact log-likelihood under Born’s rule, a notable advantage because it avoids computationally intensive partition-function estimation, Jacobian determinants, and sampling-based surrogates.
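Under the same assumed construction (a weighted orthonormal Chebyshev basis on (-1, 1) with unit-norm complex coefficients), both claims in this paragraph can be checked numerically in a minimal sketch. Substituting y = cos θ cancels the Chebyshev weight, so the total probability is (1/π)∫₀^π |Σₖ cₖ pₖ(cos θ)|² dθ, which equals Σₖ|cₖ|² = 1 for any normalized coefficient vector. The training loss is simply the average negative log of the Born density at the observed targets, with no partition function. The helper names are hypothetical, and a real implementation would compute this loss inside an autodiff framework rather than plain NumPy.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def orthonormal_chebyshev(y, K):
    """p_0..p_{K-1}: Chebyshev polynomials orthonormal under w(y) = 1/(pi*sqrt(1-y^2))."""
    V = C.chebvander(np.asarray(y, dtype=float), K - 1)
    V[..., 1:] *= np.sqrt(2.0)
    return V

def total_probability(c, n_theta=2001):
    """Integral of p(y) over (-1, 1) via y = cos(theta), which removes the weight."""
    theta = np.linspace(0.0, np.pi, n_theta)
    f = np.abs(orthonormal_chebyshev(np.cos(theta), len(c)) @ c) ** 2
    h = theta[1] - theta[0]
    integral = h * (f.sum() - 0.5 * (f[0] + f[-1]))       # trapezoid rule
    return integral / np.pi

def negative_log_likelihood(c, y_obs):
    """Mean of -log p(y_i) under Born's rule; no normalizing constant is estimated."""
    y_obs = np.asarray(y_obs, dtype=float)
    w = 1.0 / (np.pi * np.sqrt(1.0 - y_obs ** 2))
    amp = orthonormal_chebyshev(y_obs, len(c)) @ c
    return -np.mean(np.log(w * np.abs(amp) ** 2 + 1e-300))  # tiny floor guards log(0)

rng = np.random.default_rng(0)
raw = rng.normal(size=6) + 1j * rng.normal(size=6)        # stand-in for a network output
c = raw / np.linalg.norm(raw)
print(total_probability(c))                                # 1 up to rounding
print(negative_log_likelihood(c, [-0.4, 0.1, 0.7]))
```

Because the integrand in θ is a short cosine series, the trapezoid rule on this grid is exact up to floating-point rounding, so the check is a genuine test of the normalization identity rather than a loose approximation.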

The network’s output coefficients define the amplitude of the wave function, so the probability density follows in closed form. This makes it possible to estimate intricate conditional distributions efficiently and accurately, which matters in applications that need reliable uncertainty quantification and risk assessment. The method yields a compact yet expressive family of conditional densities from which precise probabilistic summaries, such as quantiles at arbitrary levels and analytic Bayes decisions, can be computed.
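After the substitution y = cos θ, the density becomes a finite cosine sum, so its cumulative distribution can be integrated term by term and quantiles follow by bisection. The sketch below assumes the same hypothetical construction as before (orthonormal Chebyshev basis under the weight 1/(π√(1 − y²)) on (-1, 1)); it illustrates how closed-form quantiles can arise and is not the paper’s own procedure. Writing Σₖ cₖ pₖ(cos θ) = Σₖ bₖ cos(kθ) with b₀ = c₀ and bₖ = √2·cₖ, the CDF is F(y) = (1/π)∫ from arccos(y) to π of |Σₖ bₖ cos(kθ)|² dθ.

```python
import numpy as np

def _int_cos(m, t0):
    """Integral of cos(m t) from t0 to pi, for integer m."""
    if m == 0:
        return np.pi - t0
    return -np.sin(m * t0) / m

def cdf(c, y):
    """Closed-form F(y) = P(Y <= y) under the assumed Chebyshev/Born construction."""
    b = np.asarray(c, dtype=complex).copy()
    b[1:] *= np.sqrt(2.0)                       # amplitude = sum_k b_k cos(k theta)
    t0 = np.arccos(np.clip(np.asarray(y, dtype=float), -1.0, 1.0))
    G = np.real(np.outer(b, b.conj()))          # imaginary parts cancel in the double sum
    total = 0.0
    for j in range(len(b)):
        for k in range(len(b)):
            # cos(j t) cos(k t) = [cos((j - k) t) + cos((j + k) t)] / 2
            total = total + G[j, k] * 0.5 * (_int_cos(j - k, t0) + _int_cos(j + k, t0))
    return total / np.pi

def quantile(c, q, tol=1e-12):
    """Approximate y with F(y) = q, found by bisection on the monotone CDF."""
    lo, hi = -1.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(c, mid) < q:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

c = np.array([0.6, 0.0, 0.8j])                  # unit-norm example coefficients
print(cdf(c, 1.0))                              # total probability, 1 up to rounding
print([quantile(c, q) for q in (0.05, 0.5, 0.95)])
```

Bisection is used here only because it is simple and robust for a monotone function; any root finder on the closed-form CDF would serve.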

Important statistical properties, such as moments, can also be computed directly from the network’s internal representation. The researchers developed several techniques for training and evaluating the network, including maximum-likelihood training and physics-inspired regularizers that improve performance. They also developed methods for extending the network to multi-dimensional outputs and for incorporating external information, such as constraints or weak labels, into prediction. Finally, they built evaluation tools for multimodal predictions that measure how accurately the network allocates probability mass across possible outcomes and how well its overall uncertainty estimates match the true underlying distribution.
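One way moments can be read off the coefficients, sketched here under the same assumed weighted-Chebyshev construction, uses the three-term recurrence y·Tₖ = (Tₖ₊₁ + Tₖ₋₁)/2. In the orthonormal basis, multiplication by y becomes a tridiagonal (Jacobi) matrix X, so E[Yⁿ] = cᴴ Xⁿ c, provided c is padded with n zeros so that truncating X does not drop any terms. This illustrates the idea rather than the paper’s stated algorithm, and the function names are hypothetical.

```python
import numpy as np

def jacobi_matrix(N):
    """Multiplication by y in the orthonormal Chebyshev basis p_0..p_{N-1}."""
    X = np.zeros((N, N))
    if N > 1:
        X[0, 1] = X[1, 0] = 1.0 / np.sqrt(2.0)   # y * p_0 = p_1 / sqrt(2)
    for k in range(1, N - 1):
        X[k, k + 1] = X[k + 1, k] = 0.5          # off-diagonal 1/2 couples p_k, p_{k+1} for k >= 1
    return X

def moment(c, n):
    """E[Y^n] = c^H X^n c, padding c with n zeros so the truncation is exact."""
    c = np.asarray(c, dtype=complex)
    N = len(c) + n
    c_pad = np.concatenate([c, np.zeros(n, dtype=complex)])
    Xn = np.linalg.matrix_power(jacobi_matrix(N), n)
    return float(np.real(np.vdot(c_pad, Xn @ c_pad)))  # vdot conjugates its first argument

# Usage: mean and variance straight from the coefficients, with no sampling.
c = np.array([0.6, 0.8 * np.exp(0.3j), 0.0])
mean = moment(c, 1)
var = moment(c, 2) - mean ** 2
print(mean, var)
```

For the arcsine-shaped density obtained with the single coefficient c = (1), this recovers the known moments E[Y] = 0 and E[Y²] = 1/2. The relative phase between coefficients enters the mean through the cross term, which is the interference effect the text describes.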

The researchers acknowledge that, as with any machine learning model, the network’s performance depends on the quality and quantity of its training data. They also note that the evaluation metrics, while comprehensive, may not capture every aspect of predictive accuracy and calibration. Future work includes applying the network to a wider range of problems, improving its scalability and robustness, and exploring combinations of this approach with other machine learning techniques.

👉 More information

🗞 Schrodinger Neural Network and Uncertainty Quantification: Quantum Machine

🧠 ArXiv: https://arxiv.org/abs/2510.23449