Researchers have long observed that neural networks trained on equivariant data often develop layerwise equivariant structures, but the underlying mathematical reasons remained unclear. Vahid Shahverdi, Giovanni Luca Marchetti, and Georg Bökman, alongside Kathlén Kohn from KTH Royal Institute of Technology and the University of Amsterdam, now demonstrate a crucial link between end-to-end equivariance and layerwise equivariance. Their work proves that, given an identifiable network with an end-to-end equivariant function, a parameter choice exists that also renders each layer equivariant. This finding is significant because it provides a theoretical explanation for this commonly observed behaviour, grounding it in the concept of parameter identifiability and offering an architecture-agnostic framework for understanding equivariant neural networks.

Layerwise Equivariance from End-to-End Symmetry enables robust representation

The team obtained this result by abstracting deep models as sequential compositions of parametric maps (the layers) and symmetries as arbitrary groups acting on the latent spaces, which keeps the theory broadly applicable. The theory shows that, for identifiable networks, designing equivariant layers is not merely common practice but the only way to construct an end-to-end equivariant network. Furthermore, if a deep network converges to an equivariant solution, whether because of inherent data symmetries or symmetry-based data augmentation, equivariance automatically arises within the active components of its layers. The result has implications for understanding how symmetries are encoded in neural networks and for building models that respect them.
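In symbols, the setting can be sketched as follows. The notation here (the layer maps, the parameters, the latent spaces $Z_i$ and the group $G$) is chosen for illustration and may differ from the paper's.

```latex
% A deep model as a sequential composition of parametric layers
f_\theta = f^{(L)}_{\theta_L} \circ \cdots \circ f^{(1)}_{\theta_1},
\qquad f^{(i)}_{\theta_i} : Z_{i-1} \to Z_i .

% End-to-end equivariance: G acts on the input space Z_0 and the output space Z_L
f_\theta(g \cdot x) = g \cdot f_\theta(x)
\qquad \text{for all } g \in G,\ x \in Z_0 .

% Layerwise equivariance: G also acts on every latent space Z_i
f^{(i)}_{\theta_i}(g \cdot z) = g \cdot f^{(i)}_{\theta_i}(z)
\qquad \text{for all } g \in G,\ z \in Z_{i-1},\ i = 1, \dots, L .
```

Layerwise equivariance implies end-to-end equivariance simply by composing the layers. The result summarised here is the converse direction, which requires the identifiability assumption.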

This work builds upon and generalises previous results on equivariant structures in shallow ReLU MLPs and in the first layer of models with neuron-wise scaling symmetries, offering a more unified picture. The team's contributions are a formalisation of identifiability, equivariance, and sub-models in a unified abstract language; a proof that equivariant networks are layerwise equivariant under an identifiability assumption; and a discussion of how the theory applies to both MLPs and deep attention networks. An empirical investigation on image data supports the theoretical findings. The research may help in designing more robust and interpretable neural networks that exploit the symmetries present in data, with potential applications in computer vision, graph neural networks, and physical modelling.

Layerwise Equivariance in Trained Multilayer Perceptrons emerges naturally

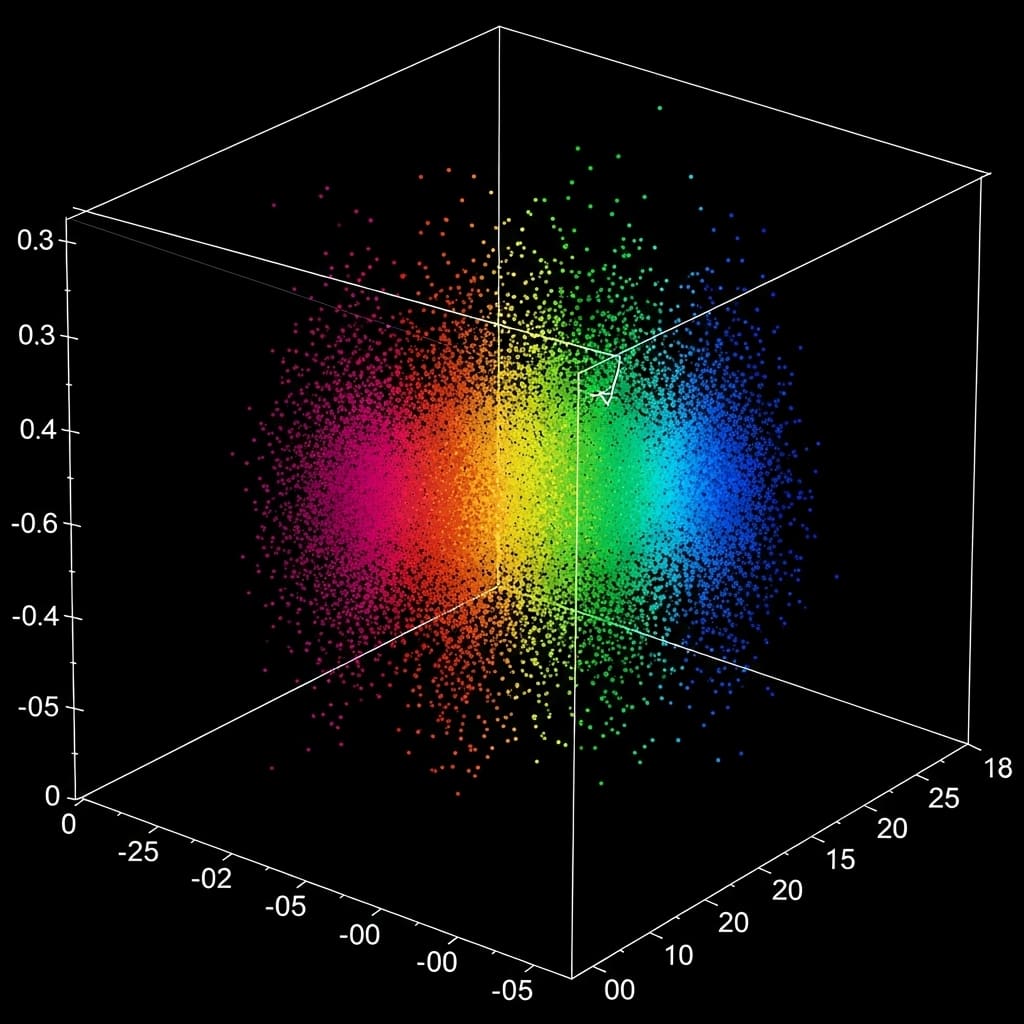

To test the theory empirically, the researchers trained multilayer perceptrons (MLPs) with a mean-squared loss for autoencoding and a cross-entropy loss for classification. During the second half of training, an equivariance loss was added: for autoencoding, it measured the mean-squared difference between f(x) and mirror[f(mirror[x])], while for classification it measured the mean-squared difference between f(x) and f(mirror[x]), where ‘f’ is the neural network and ‘mirror’ is left-to-right image mirroring. The authors then visualised the 64 filters learned in the first layer to see how the symmetry is encoded.
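The two equivariance losses can be sketched in a few lines of dependency-free Python. This is a minimal illustration, not the authors' training code: images are plain lists of rows, and the toy networks (`identity_autoencoder`, `shift_autoencoder`, `total_brightness`, `left_column`) are hypothetical stand-ins for a trained ‘f’.

```python
def mirror(image):
    """Left-to-right mirroring of an image stored as a list of rows."""
    return [row[::-1] for row in image]

def flatten(x):
    """Flatten a nested list of numbers into a flat list of floats."""
    if isinstance(x, (int, float)):
        return [float(x)]
    return [v for item in x for v in flatten(item)]

def mse(a, b):
    """Mean-squared difference between two equally shaped nested lists."""
    fa, fb = flatten(a), flatten(b)
    return sum((p - q) ** 2 for p, q in zip(fa, fb)) / len(fa)

def autoencoder_equivariance_loss(f, x):
    """MSE between f(x) and mirror[f(mirror[x])]."""
    return mse(f(x), mirror(f(mirror(x))))

def classifier_invariance_loss(f, x):
    """MSE between f(x) and f(mirror[x])."""
    return mse(f(x), f(mirror(x)))

# Hypothetical toy "networks", used only to exercise the losses.
def identity_autoencoder(image):
    return [row[:] for row in image]            # commutes with mirroring

def shift_autoencoder(image):
    return [row[1:] + row[:1] for row in image]  # cyclic shift, not mirror-equivariant

def total_brightness(image):
    return [sum(flatten(image))]                 # unchanged by mirroring

def left_column(image):
    return [row[0] for row in image]             # changes under mirroring

x = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]

print(autoencoder_equivariance_loss(identity_autoencoder, x))
print(autoencoder_equivariance_loss(shift_autoencoder, x))
print(classifier_invariance_loss(total_brightness, x))
print(classifier_invariance_loss(left_column, x))
```

The losses vanish exactly when the toy network commutes with mirroring (autoencoding) or ignores it (classification), which is the property the added training term encourages.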

The experiments used the CIFAR10 dataset. Networks trained with a Tanh nonlinearity encoded left-to-right mirroring symmetry through symmetric filters together with filters that appear as mirrored, or mirrored-and-negated, copies of one another. This corresponds to a signed permutation matrix acting on the latent space, matching the intertwiner group of Tanh and illustrating how identifiability leads to layerwise equivariance. By contrast, GELU led to degenerate parameters, particularly in the autoencoder, as the network bypassed the nonlinearity to achieve equivariance without a permutation action. The team also trained an autoencoder with a multi-head attention layer on CIFAR10, replacing the initial linear layer with a patch embedding followed by an attention layer.
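The signed-permutation picture for Tanh can be checked on a toy first layer. The sketch below is an illustration under stated assumptions, not the paper's experiment: inputs are one-dimensional "image rows", the layer has no bias, and the five filters are hand-built (one symmetric filter, one mirrored pair, one mirrored-and-negated pair).

```python
import math

def mirror(x):
    """Left-to-right mirroring of a 1-D signal."""
    return x[::-1]

def layer(W, x):
    """Bias-free first layer: tanh of each filter's response to x."""
    return [math.tanh(sum(w_i * x_i for w_i, x_i in zip(w, x))) for w in W]

def apply_signed_permutation(P, h):
    """P lists (source_index, sign) pairs: out[i] = sign * h[source_index]."""
    return [sign * h[src] for src, sign in P]

# Hand-built filters (hypothetical values).
w_sym = [0.5, -1.0, 0.5]             # equal to its own mirror image
w_a = [1.0, 0.2, -0.3]
w_b = mirror(w_a)                    # mirrored copy of w_a
w_c = [0.7, 0.1, -0.4]
w_d = [-v for v in mirror(w_c)]      # mirrored and negated copy of w_c
W = [w_sym, w_a, w_b, w_c, w_d]

# Action on the latent space: keep neuron 0, swap 1 and 2,
# swap 3 and 4 with a sign flip.
P = [(0, 1), (2, 1), (1, 1), (4, -1), (3, -1)]

x = [0.3, -1.2, 0.8]
lhs = layer(W, mirror(x))                        # mirror the input, then apply the layer
rhs = apply_signed_permutation(P, layer(W, x))   # apply the layer, then act on the latents
max_gap = max(abs(p - q) for p, q in zip(lhs, rhs))
print(max_gap)  # zero up to floating-point rounding
```

The sign flips rely on Tanh being an odd function, so that negating a filter negates the neuron's output. GELU is not odd, so a mirrored-and-negated pair would not give this identity for it.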

A stride-2 convolution patchified the image, creating ‘image tokens’ with learnable positional encodings, and a single learnable query token served as a ‘class token’. In each head, attention weights are computed with a softmax and the weighted value tokens are summed; the outputs of the eight heads are then concatenated to give the layer's output. Visualising the attention matrices showed that, for most heads, the attention pattern is mirrored when the input image is mirrored, while the first and fifth heads exhibited a permutation, a qualitative example of mirror equivariance encoded as a permutation over the heads.
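The computation inside one head can be sketched as single-query attention. The version below is deliberately stripped down and makes several assumptions not taken from the article: tokens form a 1-D sequence, key and value projections and positional encodings are omitted, and the scores use the standard scaling by the square root of the query dimension.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def single_query_attention(query, keys, values):
    """One head: softmax(q.k / sqrt(d)) weights, then a weighted sum of value tokens."""
    d = len(query)
    weights = softmax([dot(query, k) / math.sqrt(d) for k in keys])
    out = [sum(w * v[j] for w, v in zip(weights, values))
           for j in range(len(values[0]))]
    return weights, out

# Hypothetical query token and four image tokens.
query = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]
values = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5], [-2.0, 1.0]]

weights, out = single_query_attention(query, keys, values)

# Mirroring the token sequence reverses keys and values together.
weights_m, out_m = single_query_attention(query, keys[::-1], values[::-1])

print(weights)
print(weights_m)  # the same weights in reversed order, up to rounding
```

In this simplified setting the attention pattern of a head is mirrored along with the tokens while the head's output stays the same. With learnable positional encodings, as in the experiment described above, the network has to learn such behaviour, and the article reports that some heads instead exchange roles.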

Layerwise Equivariance from End-to-End Network Properties emerges naturally

The central theorem states that, for an identifiable network whose end-to-end function is equivariant, the parameters of each layer must be equivariant, up to ‘inactive’ neurons that do not contribute to the forward pass. Under this assumption, designing equivariant layers is therefore the only way to construct an end-to-end equivariant network. Consequently, if a network converges to an equivariant solution, equivariance automatically manifests within the active components of its layers.

The identifiability assumption concerns parameters that do not originate from smaller, embedded architectures (sub-models); parameters that do may exhibit additional symmetries because of inactive neurons. The authors argue that identifiability is a natural assumption: it has already been established for multilayer perceptrons with activation functions such as Tanh and Sigmoid, while the more complex case of ReLU activations is the subject of ongoing research. The framework is highly abstract, representing deep models as sequential compositions of parametric maps and symmetries as arbitrary group actions on latent spaces. The proof strategy builds upon and generalises previous research establishing equivariant structures in specific invariant models, and reduces the problem to parameter identifiability. This provides a mathematical foundation for understanding and designing equivariant neural networks.
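Why inactive neurons have to be set aside can be seen in a toy two-layer MLP. The sketch assumes one simple notion of "inactive", a hidden neuron whose outgoing weights are all zero; the paper's definition may be more general, and the weights below are hypothetical.

```python
import math

def mlp(W1, W2, x):
    """Two-layer bias-free MLP with a Tanh hidden layer."""
    hidden = [math.tanh(sum(w * v for w, v in zip(row, x))) for row in W1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in W2]

W1 = [[0.4, -0.7],
      [1.1, 0.3],
      [0.9, 0.9]]            # incoming weights of three hidden neurons

W2 = [[0.5, -1.2, 0.0],
      [0.8, 0.1, 0.0]]       # third column is zero: hidden neuron 2 is inactive

# Change only the incoming weights of the inactive neuron.
W1_changed = [W1[0], W1[1], [-5.0, 2.0]]

x = [0.6, -0.2]
same_output = mlp(W1, W2, x) == mlp(W1_changed, W2, x)
print(same_output)
```

Because the incoming weights of such a neuron can be changed freely without affecting the network function, they cannot be forced to be equivariant, which is why the statement is restricted to the active components of each layer.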

End-to-end implies layerwise equivariance mathematically proven

Because of the abstract formalism used, the result holds regardless of the specific network architecture. The mirrored attention patterns observed in multi-head attention layers when the input image is mirrored further support the idea that equivariance is encoded layer by layer. However, the authors acknowledge limitations concerning the precise conditions for identifiability, particularly in networks with ReLU-style nonlinearities, and the effect of skip connections, which introduce inter-layer dependencies and complicate identifiability. Future research should establish these conditions and extend the theory to networks with skip connections.

👉 More information

🗞 Identifiable Equivariant Networks are Layerwise Equivariant

🧠 ArXiv: https://arxiv.org/abs/2601.21645