Modern networking increasingly demands efficient data processing, yet current Remote Direct Memory Access (RDMA) techniques are difficult to program and offer limited functionality. To address this, Md Ashfaqur Rahaman from the University of Utah, Alireza Sanaee from the University of Cambridge, and Todd Thornley from the University of Utah, alongside colleagues including Sebastiano Miano and Gianni Antichi from Politecnico di Milano and Queen Mary University of London, and Brent E. Stephens from Google and the University of Utah, present network-accelerated active messages, or NAAM. The system lets applications define small, portable functions that execute alongside messages as they travel through the network, and it dynamically steers processing to the most suitable location, whether on client machines, server CPUs, or SmartNICs. NAAM offloads substantial work from server CPUs, up to 1.8 million operations per second for certain applications, and scales to hundreds of application offloads, a considerable advance over existing SmartNIC frameworks.

RDMA, Smart NICs, and Network Acceleration

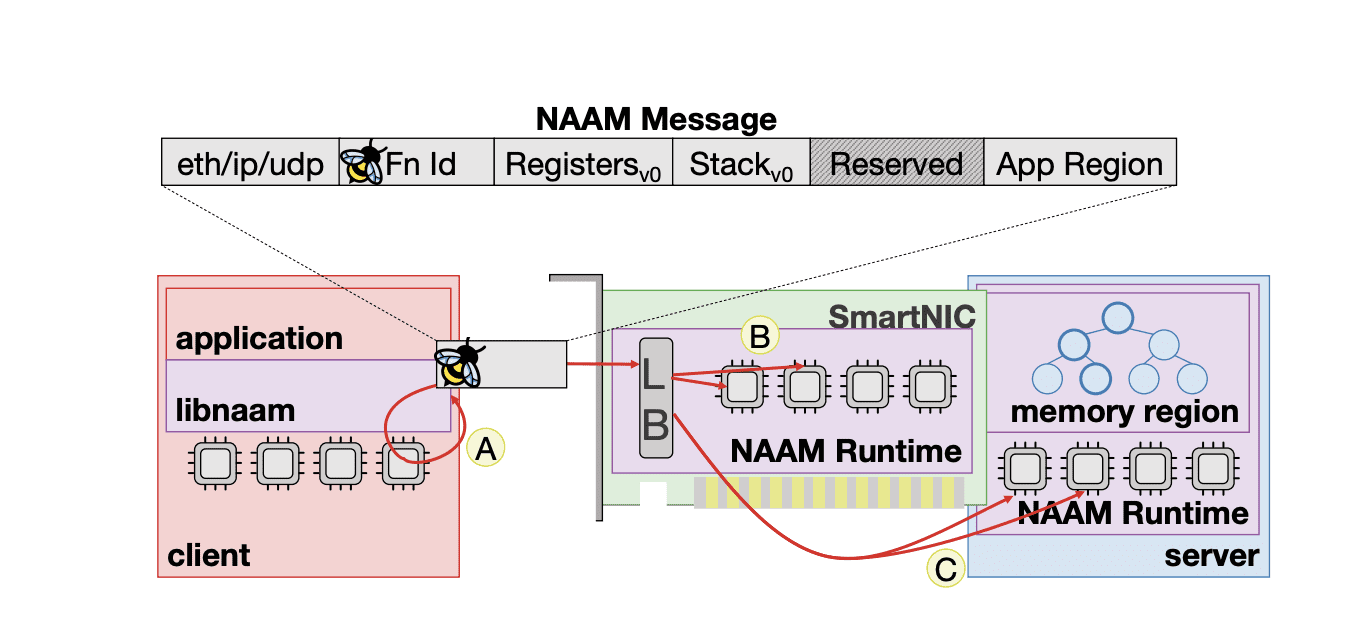

The work builds on a broad body of research in high-performance networking and distributed systems aimed at faster, more scalable, and more efficient computing. One line of that research uses Smart Network Interface Cards (SmartNICs) and Field-Programmable Gate Arrays (FPGAs) to offload network processing from CPUs, freeing those cores for other tasks. Other efforts build distributed systems, including databases and machine learning frameworks, that handle large datasets and complex computations, develop congestion control algorithms for RDMA-based systems, and use far memory and persistent memory to improve scalability.

NAAM allows applications to define small, portable functions written in eBPF. Each function is associated with a message and defines the logic to execute on data accessed through an RDMA-like interface. This design enables dynamic steering of message processing: computation can run on clients, on server-attached SmartNICs, or on server host CPU cores, depending on system load and resource availability. When server resources become congested, the system relocates computation and shifts load in as little as tens of milliseconds.
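To make the idea of a function that travels with a message more concrete, here is a minimal Python sketch. It is not NAAM's API: real NAAM functions are written in eBPF, and the names `Region`, `ActiveMessage`, `HANDLERS`, `kv_put`, `kv_get`, and `execute`, as well as the slot layout, are all assumptions made for illustration. The point it tries to show is that the handler touches data only through `read` and `write` calls, so the same handler could in principle run wherever the region is reachable.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


class Region:
    """Byte-addressable memory reached only via read/write (a stand-in
    for an RDMA-like interface; hypothetical, not NAAM's real API)."""

    def __init__(self, size: int) -> None:
        self._buf = bytearray(size)

    def read(self, offset: int, length: int) -> bytes:
        return bytes(self._buf[offset:offset + length])

    def write(self, offset: int, data: bytes) -> None:
        self._buf[offset:offset + len(data)] = data


@dataclass
class ActiveMessage:
    fn_id: int              # which registered function to run
    args: Tuple[int, ...]   # arguments carried in the message


SLOT = 16        # assumed layout: 8-byte key followed by 8-byte value
NUM_SLOTS = 64   # assumed table size; key 0 marks an empty slot


def _slot(region: Region, i: int) -> Tuple[int, int]:
    raw = region.read(i * SLOT, SLOT)
    return int.from_bytes(raw[:8], "little"), int.from_bytes(raw[8:], "little")


def kv_put(region: Region, key: int, value: int) -> bool:
    """Insert or update a nonzero key using linear probing."""
    for probe in range(NUM_SLOTS):
        i = (key + probe) % NUM_SLOTS
        k, _ = _slot(region, i)
        if k in (0, key):
            region.write(i * SLOT,
                         key.to_bytes(8, "little") + value.to_bytes(8, "little"))
            return True
    return False  # table full


def kv_get(region: Region, key: int) -> Optional[int]:
    """Look up a key; stop at the first empty slot."""
    for probe in range(NUM_SLOTS):
        k, v = _slot(region, (key + probe) % NUM_SLOTS)
        if k == key:
            return v
        if k == 0:
            return None
    return None


# Registry mapping function ids to handlers (ids are arbitrary here).
HANDLERS: Dict[int, Callable] = {1: kv_put, 2: kv_get}


def execute(msg: ActiveMessage, region: Region):
    """Run the message's function against the region at this location."""
    return HANDLERS[msg.fn_id](region, *msg.args)
```

For example, after `region = Region(SLOT * NUM_SLOTS)`, calling `execute(ActiveMessage(1, (42, 7)), region)` stores the pair and `execute(ActiveMessage(2, (42,)), region)` reads it back. Because `execute` depends only on the message and the region, a runtime is free to choose where to call it.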

Experiments on a BlueField-2 SmartNIC show that NAAM can offload up to 1.8 million operations per second for certain workloads and 750,000 lookups per second from server CPUs, significantly reducing their computational burden. The runtime achieves this by distributing work across the available resources to maximize overall throughput and responsiveness. NAAM also scales better than existing SmartNIC offload frameworks: it supports hundreds of application offloads with minimal impact on tail latency, a direct result of eBPF's low overhead.

Dynamic Workload Offloading with Network Acceleration

The research team presents NAAM, network-accelerated active messages, a system designed to improve network performance by distributing workload between clients, servers, and SmartNICs. NAAM applications define small functions associated with messages, allowing the runtime to dynamically steer processing to the most suitable location. Because the functions are written in eBPF, the same code is portable and executes efficiently across these different network components.

A key achievement of this work is NAAM’s scalability, surpassing existing SmartNIC offload frameworks. While other systems scale to only eight application offloads on a BlueField-2 SmartNIC, NAAM achieves hundreds of offloads with minimal impact on tail latency, a result of eBPF’s low overhead and efficient resource management. The team measured that NAAM can run a mix of 128 different functions at the same request rate with only a modest increase in response latency. Furthermore, NAAM dynamically reconfigures hardware flow steering rules based on CPU load, shifting processing between the SmartNIC and host CPU cores in as little as 50 milliseconds.
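The load-based steering described above can be pictured with a small Python sketch. It is an illustration under stated assumptions, not the authors' algorithm: the bucket table `rules`, the function name `rebalance`, the thresholds `high` and `low`, and the `step` size are all hypothetical, and a real system would program NIC hardware rules instead of editing a list.

```python
from typing import Dict, List

NIC, HOST = "smartnic", "host"


def rebalance(rules: List[str], load: Dict[str, float],
              high: float = 0.9, low: float = 0.6, step: int = 1) -> List[str]:
    """Return a new bucket-to-target table.

    If one target's load exceeds `high` while the other is below `low`,
    move up to `step` flow buckets from the busy target to the idle one.
    The gap between `high` and `low` is a simple guard against flapping.
    """
    new_rules = list(rules)
    for src, dst in ((NIC, HOST), (HOST, NIC)):
        if load[src] > high and load[dst] < low:
            moved = 0
            for i, target in enumerate(new_rules):
                if target == src and moved < step:
                    new_rules[i] = dst
                    moved += 1
            break  # at most one direction per invocation
    return new_rules
```

A control loop might call `rebalance` once per measurement interval with fresh utilization figures. Moving only a few buckets per call trades reaction speed for stability; the source reports shifts in as little as 50 milliseconds but does not specify the policy used to pick which flows move.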

Experiments show that NAAM can detect and respond to SmartNIC overload, shifting load to the host to maintain performance and prevent packet loss. The system successfully scaled beyond the SmartNIC’s maximum throughput by intelligently distributing workload. This research demonstrates NAAM’s potential to significantly enhance network performance and resource utilization in demanding environments.

NAAM Accelerates Workloads with Remote Functions

NAAM, or network-accelerated active messages, presents a novel approach to network acceleration by enabling applications to define portable functions that operate on remote data. The system executes these functions as active messages, efficiently distributed and run across various locations within the network, including clients, servers, and SmartNICs. Remote memory accesses do not impede the runtime, and hardware flow steering dynamically rebalances load, allowing applications to scale across network components in a matter of milliseconds and circumvent bottlenecks caused by CPU contention. Demonstrations with hash table and B-tree applications show NAAM successfully offloads significant processing from server CPUs, achieving substantial performance gains for certain workloads.
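One way to picture a runtime that is not held up by remote memory accesses is cooperative scheduling, sketched below with Python generators. This is an assumption about the general technique, not a description of NAAM's internals: `chain_lookup`, `run_all`, and the dictionary standing in for remote memory are hypothetical names. Each lookup suspends when it issues a read, and the scheduler advances other lookups in the meantime.

```python
from collections import deque
from typing import Deque, Dict, Generator, Optional, Tuple

# "Remote" memory: node address -> (key, value, next address or None).
Node = Tuple[int, int, Optional[int]]
Memory = Dict[int, Node]
Lookup = Generator[int, Node, Optional[int]]


def chain_lookup(head: Optional[int], key: int) -> Lookup:
    """Walk a linked chain, yielding the address of each node to read."""
    addr = head
    while addr is not None:
        k, v, nxt = yield addr   # issue a read, suspend until data arrives
        if k == key:
            return v
        addr = nxt
    return None


def run_all(memory: Memory, tasks: Dict[str, Lookup]) -> Dict[str, Optional[int]]:
    """Round-robin scheduler: each task advances by one read per turn."""
    results: Dict[str, Optional[int]] = {}
    pending: Deque[Tuple[str, Lookup, int]] = deque()
    for name, gen in tasks.items():
        try:
            pending.append((name, gen, next(gen)))   # first read request
        except StopIteration as done:
            results[name] = done.value
    while pending:
        name, gen, addr = pending.popleft()
        try:
            # Deliver the data for the outstanding read, get the next request.
            pending.append((name, gen, gen.send(memory[addr])))
        except StopIteration as done:
            results[name] = done.value
    return results
```

In this toy version the read completes immediately when the task is next scheduled; in a real system the completion would arrive asynchronously, but the structure (suspend on access, resume on completion) is the part the sketch is meant to convey.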

Notably, NAAM scales to hundreds of application offloads, surpassing the limitations of existing SmartNIC offload frameworks, while maintaining low tail latency due to the efficiency of eBPF. The system’s flexibility allows operations to run optimally on host CPUs, leverage SmartNIC caching for read-intensive tasks, or balance work across all available resources. The authors acknowledge that performance gains are workload-dependent and that the effectiveness of dynamic load balancing relies on accurate congestion detection. Future work may focus on refining congestion control mechanisms and exploring broader application domains to further optimize performance and scalability. This research demonstrates a promising path towards more flexible and efficient network acceleration, enabling applications to adapt to changing conditions and maximize resource utilization.

👉 More information

🗞 Network-accelerated Active Messages

🧠 ArXiv: https://arxiv.org/abs/2509.07431