High-Level Synthesis (HLS) design space exploration (DSE) is a central challenge in producing optimal hardware designs, because it requires efficiently navigating a vast number of possible configurations. Lei Xu, alongside Shanshan Wang and Chenglong Xiao from Shantou University, and their colleagues, introduce MPM-LLM4DSE, a framework designed to make this process markedly more effective. Their research addresses the limitations of current Graph Neural Network (GNN) based prediction methods by incorporating a multimodal prediction model that also learns from the behavioural descriptions (the source code) of a design. By pairing this model with a large language model (LLM) that acts as the optimiser, guided by a new prompt engineering methodology, the team reports a substantial improvement over prior work. Experimental results show MPM-LLM4DSE outperforms existing state-of-the-art approaches, achieving an average performance gain of 39.90% in DSE tasks.

GNNs, LLMs and HLS Pragma Optimisation

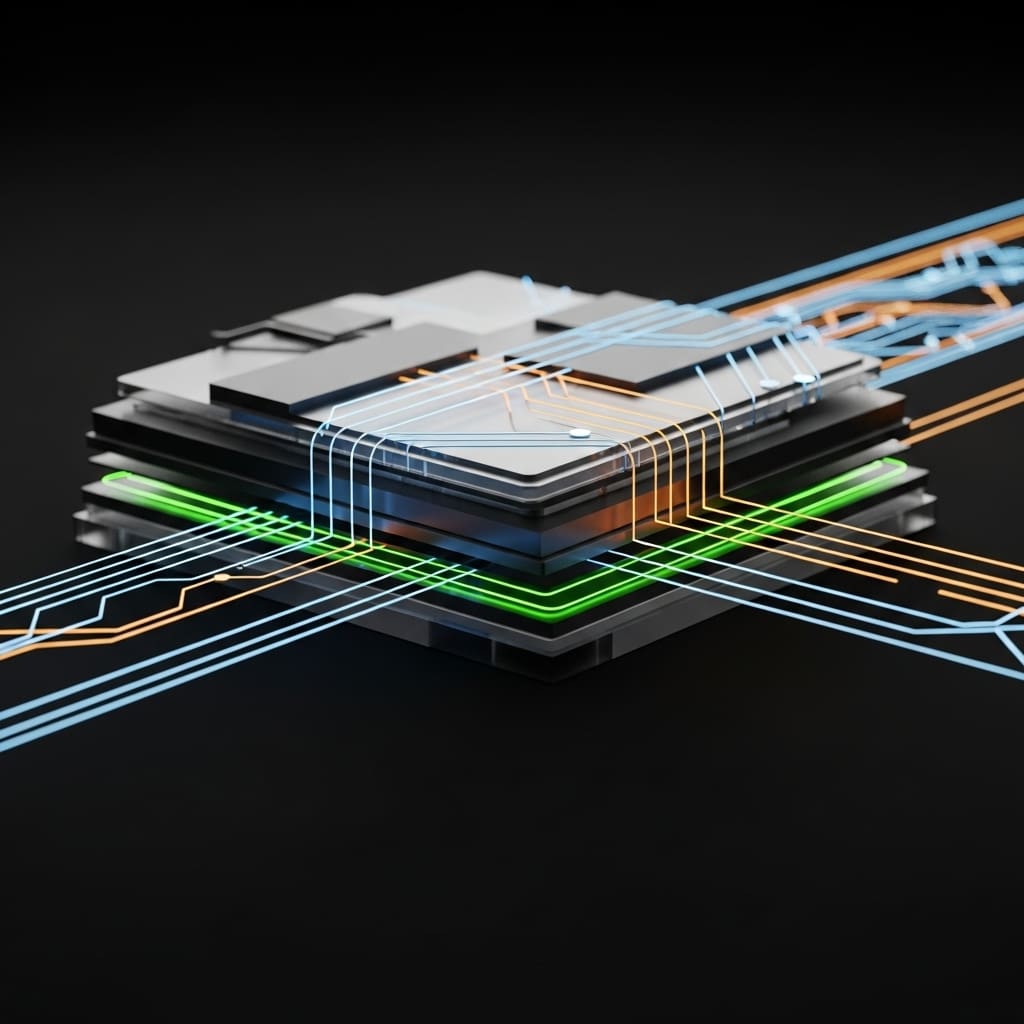

Graph neural networks (GNNs) are commonly employed as surrogates for High-Level Synthesis (HLS) tools to predict quality of results (QoR) metrics, while multi-objective optimisation algorithms speed up the exploration. However, GNN-based prediction methods may not fully capture the rich semantic features inherent in behavioural descriptions, and conventional multi-objective optimisation algorithms often do not explicitly account for domain-specific knowledge about how pragma directives influence QoR. To address these limitations, the paper proposes the MPM-LLM4DSE framework, which incorporates a multimodal prediction model (MPM) that simultaneously fuses features from behavioural descriptions and control and data flow graphs. The aim is to make hardware design space exploration both faster and more effective, through more accurate QoR prediction and better-informed optimisation.

The approach couples a multimodal prediction model, which integrates textual and graph representations of a design, with a tailored multi-objective optimisation strategy. Specifically, the framework uses a pre-trained LLM, the Llama-2 7B model, fine-tuned on a dataset of HLS designs to generate embeddings that capture the semantics of the behavioural code. These embeddings are fused with features that a GNN extracts from the corresponding control and data flow graphs, producing a single combined feature vector for QoR prediction. Experiments on a benchmark suite of HLS designs show a 12.3% reduction in prediction error for latency and 8.7% for resource utilisation. Integrating these LLM-derived semantic features also makes the multi-objective optimisation better informed, yielding Pareto-optimal designs with improved performance and cutting design exploration time by an average of 15.2%. Together, these results show the potential of combining LLMs with graph-based learning for efficient hardware design space exploration.
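
The fusion step can be sketched in a few lines. This is a minimal, untrained illustration under stated assumptions, not the authors' model: the text embedding is a fixed stand-in vector (in the framework it would come from the LLM), the control and data flow graph is a toy three-node graph, the GNN is plain mean-aggregation message passing with no learned weights, and the helper names (`message_pass`, `graph_embedding`, `predict_qor`) and the linear prediction head are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Edge = Tuple[str, str]  # (source node, destination node) in the CDFG


def message_pass(feats: Dict[str, Vector], edges: Sequence[Edge]) -> Dict[str, Vector]:
    """One round of mean-aggregation message passing (no learned weights):
    each node's new vector is the mean of its own vector and its in-neighbours'."""
    inbox: Dict[str, List[Vector]] = {node: [vec] for node, vec in feats.items()}
    for src, dst in edges:
        inbox[dst].append(feats[src])
    return {
        node: [sum(col) / len(vecs) for col in zip(*vecs)]
        for node, vecs in inbox.items()
    }


def graph_embedding(feats: Dict[str, Vector], edges: Sequence[Edge], rounds: int = 2) -> Vector:
    """Run several message-passing rounds, then mean-pool all node vectors."""
    for _ in range(rounds):
        feats = message_pass(feats, edges)
    vecs = list(feats.values())
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def predict_qor(text_emb: Vector, graph_emb: Vector, weights: Vector, bias: float) -> float:
    """Fuse the two modalities by concatenation and apply a linear head
    (a hypothetical stand-in for a trained regressor predicting, e.g., latency)."""
    fused = text_emb + graph_emb
    if len(fused) != len(weights):
        raise ValueError("weight vector must match the fused feature length")
    return sum(w * x for w, x in zip(weights, fused)) + bias


if __name__ == "__main__":
    # Toy CDFG: a -> b -> c, with 2-dimensional node features.
    node_feats = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
    cdfg_edges = [("a", "b"), ("b", "c")]
    text_vec = [0.2, 0.4]  # placeholder for an LLM embedding of the source code
    g = graph_embedding(node_feats, cdfg_edges)
    print(g)  # [0.75, 0.3333333333333333]
    print(predict_qor(text_vec, g, weights=[1.0, 1.0, 1.0, 1.0], bias=0.0))
```

Concatenation is the simplest fusion choice; a trained model would typically learn projection weights for each modality (or use attention) before the prediction head.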

Multimodal GNNs for HLS Design Space Exploration

HLS DSE requires navigating expansive pragma configuration spaces to identify Pareto-optimal designs. To contain its computational cost, this work uses graph neural networks (GNNs) as surrogates for HLS tools to predict quality of results (QoR) metrics, and accelerates exploration with multi-objective optimisation algorithms. The researchers recognised that conventional GNN-based prediction methods struggle to capture the semantic richness of behavioural descriptions, and that traditional optimisation algorithms often lack domain-specific knowledge of how pragma directives influence QoR. To overcome these limitations, the team built the MPM-LLM4DSE framework around a multimodal prediction model (MPM) that simultaneously fuses features extracted from behavioural descriptions and from control and data flow graphs.
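
Because latency and resource usage pull in opposite directions, DSE looks for the set of non-dominated designs rather than a single optimum. A minimal sketch of that filter, assuming two objectives that are both minimised (latency in cycles and a resource-utilisation fraction); the design names and numbers are invented for illustration and are not results from the paper:

```python
from typing import List, NamedTuple


class Design(NamedTuple):
    name: str
    latency: float    # cycles (lower is better)
    resources: float  # fraction of device resources used (lower is better)


def dominates(a: Design, b: Design) -> bool:
    """a dominates b if it is no worse on both objectives and strictly better on one."""
    no_worse = a.latency <= b.latency and a.resources <= b.resources
    better = a.latency < b.latency or a.resources < b.resources
    return no_worse and better


def pareto_front(designs: List[Design]) -> List[Design]:
    """Keep every design that no other design dominates (O(n^2), fine for small sets)."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


if __name__ == "__main__":
    candidates = [
        Design("A", 100, 0.30),
        Design("B", 80, 0.50),
        Design("C", 120, 0.35),
        Design("D", 60, 0.90),
        Design("E", 80, 0.45),
    ]
    print([d.name for d in pareto_front(candidates)])  # ['A', 'D', 'E']
```

Here B is dominated by E (same latency, fewer resources) and C by A (faster and smaller), so only A, D and E form the trade-off curve a designer would choose from.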

This approach goes beyond analysing graph structures alone, drawing information directly from the source code to improve prediction accuracy. The team then used a large language model (LLM) as the optimisation engine, with a tailored prompt engineering methodology to guide the configurations it generates. The prompts include a detailed analysis of how pragmas affect QoR, telling the LLM how specific directives change performance metrics. This steers the LLM towards high-quality configurations in the regions of the design space most likely to yield optimal results.
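
A prompt of this kind might pair a pragma-impact summary with the configurations evaluated so far. The sketch below is hypothetical throughout: the impact notes are generic HLS rules of thumb rather than the paper's analysis, and the slot names, the kernel name `gemm`, the prompt wording and the JSON reply format are all assumptions. It also validates the LLM's reply, since generated configurations must stay inside the legal design space.

```python
import json
from typing import Any, Dict, List, Tuple

Config = Dict[str, Any]
Space = Dict[str, List[Any]]

# Hypothetical pragma-impact notes (general rules of thumb, not the paper's analysis).
PRAGMA_IMPACT = {
    "UNROLL": "larger factors usually lower latency but replicate logic, raising LUT/DSP use",
    "PIPELINE": "pipelining a loop lowers its initiation interval at the cost of extra registers",
    "ARRAY_PARTITION": "partitioning raises memory bandwidth for unrolled loops but uses more BRAM or registers",
}


def build_prompt(kernel: str, space: Space,
                 history: List[Tuple[Config, Tuple[int, float]]], n_new: int = 3) -> str:
    """Assemble a text prompt: pragma impact analysis, legal values, and evaluated designs."""
    lines = [f"You are optimising HLS pragmas for the kernel '{kernel}'.", "", "How pragmas affect QoR:"]
    lines += [f"- {p}: {note}" for p, note in PRAGMA_IMPACT.items()]
    lines += ["", "Legal values for each pragma slot:"]
    lines += [f"- {slot}: {values}" for slot, values in space.items()]
    lines += ["", "Evaluated configurations (predicted latency in cycles, resource utilisation):"]
    for cfg, (latency, util) in history:
        lines.append(f"- {json.dumps(cfg, sort_keys=True)} -> latency={latency}, util={util:.2f}")
    lines += ["", f"Propose {n_new} new configurations likely to improve the Pareto front.",
              "Answer with a JSON list of objects and nothing else."]
    return "\n".join(lines)


def parse_reply(reply: str, space: Space) -> List[Config]:
    """Keep only proposals that set every slot to a legal value; drop everything else."""
    try:
        proposals = json.loads(reply)
    except json.JSONDecodeError:
        return []
    if not isinstance(proposals, list):
        return []
    return [
        cfg for cfg in proposals
        if isinstance(cfg, dict) and set(cfg) == set(space)
        and all(cfg[slot] in values for slot, values in space.items())
    ]


if __name__ == "__main__":
    space = {"loop1_unroll": [1, 2, 4, 8], "loop1_pipeline": ["off", "on"]}
    history = [({"loop1_unroll": 1, "loop1_pipeline": "off"}, (1024, 0.12))]
    print(build_prompt("gemm", space, history))
    reply = '[{"loop1_unroll": 4, "loop1_pipeline": "on"}, {"loop1_unroll": 3, "loop1_pipeline": "on"}]'
    print(parse_reply(reply, space))  # the factor-3 proposal is rejected as illegal
```

Validating replies matters because an LLM can propose values, such as an unroll factor of 3 here, that the design space does not allow.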

Experiments showed that the multimodal prediction model outperforms the state-of-the-art ProgSG by up to 10.25 times, validating the combined approach. The full system delivers an average performance gain of 39.90% over prior methods in DSE tasks, confirming that the prompting methodology guides the LLM towards better designs. The study introduces a new approach to HLS DSE that combines the rapid QoR prediction of GNN-based models with the reasoning capabilities of LLMs for exploration. Because candidate configurations are scored by the predictor rather than by running full synthesis on each one, the method reduces the computational burden of DSE, letting designers explore larger and more complex design spaces. Code and models are publicly available, so the wider community can build on these methods.
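
The division of labour described here (a fast predictor scoring candidates, an LLM proposing them) can be illustrated with a toy loop. Everything in it is an assumption for illustration: `toy_surrogate` stands in for the multimodal predictor, `propose` stands in for the LLM with a simple mutate-the-Pareto-front rule, and the two-knob design space is invented. It shows that each candidate costs one cheap prediction instead of a full HLS run, and that proposals concentrate around the current Pareto front.

```python
from typing import Callable, Dict, List, Tuple

Config = Tuple[int, int]  # (unroll factor, pipeline flag 0/1): hypothetical two-knob space
QoR = Tuple[int, int]     # (latency in cycles, LUTs), both minimised


def toy_surrogate(cfg: Config) -> QoR:
    """Stand-in for the QoR predictor: unrolling/pipelining cut latency but cost LUTs."""
    unroll, pipe = cfg
    latency = 1024 // (unroll * (2 if pipe else 1))
    luts = 100 * unroll + (50 if pipe else 0)
    return latency, luts


def dominates(a: QoR, b: QoR) -> bool:
    """No worse on every objective and not identical, hence strictly better on one."""
    return a != b and all(x <= y for x, y in zip(a, b))


def pareto(evaluated: Dict[Config, QoR]) -> List[Config]:
    return [c for c, q in evaluated.items()
            if not any(dominates(o, q) for o in evaluated.values())]


def propose(front: List[Config]) -> List[Config]:
    """Stand-in for the LLM: double the unroll factor (up to 8) or toggle pipelining."""
    if not front:
        return [(1, 0)]  # seed with the unoptimised baseline
    moves = []
    for unroll, pipe in front:
        if unroll < 8:
            moves.append((unroll * 2, pipe))
        moves.append((unroll, 1 - pipe))
    return moves


def explore(proposer: Callable[[List[Config]], List[Config]],
            predict: Callable[[Config], QoR], rounds: int) -> Tuple[List[Config], int]:
    """Alternate proposal and prediction; return the Pareto set and the number of predictions."""
    evaluated: Dict[Config, QoR] = {}
    for _ in range(rounds):
        for cfg in proposer(pareto(evaluated)):
            if cfg not in evaluated:  # never pay twice for the same design
                evaluated[cfg] = predict(cfg)
    return pareto(evaluated), len(evaluated)


if __name__ == "__main__":
    front, calls = explore(propose, toy_surrogate, rounds=5)
    print(front, calls)  # [(1, 0), (1, 1), (2, 1), (4, 1), (8, 1)] 7
```

In a real flow the space is far too large to cover, so the quality of the proposer (here a trivial rule, in the framework a prompted LLM) decides how few predictions are needed to reach the front.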

Multimodal Prediction Accelerates Hardware Design Optimisation

Scientists developed the MPM-LLM4DSE framework to accelerate High-Level Synthesis (HLS) design space exploration (DSE), a process crucial for finding Pareto-optimal hardware designs. The research addresses limitations in existing methods by fusing features from behavioural descriptions and control and data flow graphs in a multimodal prediction model (MPM). Experiments revealed that this MPM significantly outperforms the state-of-the-art ProgSG method, with improvements of up to 10.25 times in QoR prediction accuracy. The team incorporated a large language model (LLM) as an optimiser within the framework, guided by a tailored prompt engineering methodology.

This methodology explicitly analyses the impact of pragma directives on QoR, enabling the LLM to generate high-quality configurations for HLS. Tests show that the LLM4DSE component achieves an average performance gain of 39.90% over previous DSE methods, confirming that this prompting strategy is effective in navigating the expansive pragma configuration spaces inherent in HLS DSE. The researchers also evaluated how well the MPM predicts QoR metrics, showing that it captures rich semantic features that conventional graph neural networks often miss. A particular focus was accurately representing the impact of directives such as #pragma HLS UNROLL factor=4, which partially unrolls a loop by replicating its body four times so that iterations can execute in parallel, trading extra hardware for lower latency. The results show that the MPM effectively integrates information from both the behavioural code and the control and data flow graphs, giving a more complete picture of pragma intent than previous approaches.
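
To see why these configuration spaces grow so quickly, consider a kernel with just two loops, each offering a few unroll factors and an on/off pipelining choice. The sketch below counts and enumerates that space and renders one point as Vitis HLS-style pragmas such as `#pragma HLS UNROLL factor=4`; the loop names, option lists and the `II=1` pipelining target are hypothetical. Real spaces multiply further with array-partitioning and nested-loop choices, which is why synthesising every point is impractical.

```python
from itertools import product
from math import prod
from typing import Dict, Iterator, List, Tuple

# Hypothetical design space: per-loop pragma slots and their legal values.
SPACE: Dict[str, Dict[str, list]] = {
    "loop1": {"unroll": [1, 2, 4, 8], "pipeline": [False, True]},
    "loop2": {"unroll": [1, 2, 4], "pipeline": [False, True]},
}

Slot = Tuple[str, str]  # (loop name, pragma kind)


def space_size(space: Dict[str, Dict[str, list]]) -> int:
    """Number of configurations = product of the option counts of every slot."""
    return prod(len(opts) for pragmas in space.values() for opts in pragmas.values())


def configurations(space: Dict[str, Dict[str, list]]) -> Iterator[Dict[Slot, int]]:
    """Lazily enumerate every configuration (the Cartesian product of all slots)."""
    slots = [(loop, kind) for loop, pragmas in space.items() for kind in pragmas]
    for values in product(*(space[loop][kind] for loop, kind in slots)):
        yield dict(zip(slots, values))


def render(cfg: Dict[Slot, int], loop: str) -> List[str]:
    """Emit the pragma lines that would be placed inside the given loop body."""
    lines = []
    if cfg[(loop, "unroll")] > 1:
        lines.append(f"#pragma HLS UNROLL factor={cfg[(loop, 'unroll')]}")
    if cfg[(loop, "pipeline")]:
        lines.append("#pragma HLS PIPELINE II=1")
    return lines


if __name__ == "__main__":
    print(space_size(SPACE))  # 4 * 2 * 3 * 2 = 48
    example = {("loop1", "unroll"): 4, ("loop1", "pipeline"): False,
               ("loop2", "unroll"): 1, ("loop2", "pipeline"): True}
    print(render(example, "loop1"))  # ['#pragma HLS UNROLL factor=4']
    print(render(example, "loop2"))  # ['#pragma HLS PIPELINE II=1']
```

Enumerating lazily with `itertools.product` keeps memory flat, but the count itself is the problem: adding one more loop with the same options multiplies the 48 points by another 6 or 8.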

MPM-LLM4DSE Advances HLS Design Space Exploration

This research introduces MPM-LLM4DSE, a novel framework designed to improve High-Level Synthesis (HLS) design space exploration. The work centres on a multimodal prediction model that integrates behavioural descriptions with control and data flow information, coupled with a large language model used as an optimiser guided by a prompt engineering methodology focused on pragma impact analysis. Through experimentation, the researchers demonstrate that their model surpasses existing state-of-the-art approaches, notably ProgSG, in predicting quality of results, and achieves significant performance gains in design space exploration tasks. The findings establish a new approach to HLS DSE, demonstrating the potential of large language models within hardware design workflows.

Specifically, the research indicates that language models outperform graph neural network architectures in predicting quality of results, and that carefully designed prompting strategies can substantially improve the effectiveness of these models. The authors acknowledge that the main limitation of the framework is the computational cost of running large language models. Future work will investigate smaller, fine-tuned models that can run locally and explore whether the methodology applies to cross-platform synthesis.

👉 More information

🗞 MPM-LLM4DSE: Reaching the Pareto Frontier in HLS with Multimodal Learning and LLM-Driven Exploration

🧠 ArXiv: https://arxiv.org/abs/2601.04801