Estimating motion from blurred images remains a significant challenge in computer vision, with consequences for fields ranging from image restoration to advanced photography. Wontae Choi, Jaelin Lee, and colleagues at Sungkyunkwan University, alongside Hyung Sup Yun from ALLforLAND Co., Ltd. and Byeungwoo Jeon and Il Yong Chun from the Institute for Basic Science, now present a new approach to this problem. Their framework, MoTDiff, uses diffusion models to estimate high-resolution motion trajectories from a single blurred image. Existing methods produce coarse or inaccurate motion representations. MoTDiff instead reconstructs fine-grained motion paths on a pixel grid and outperforms previous methods in both image deblurring and coded exposure photography.

Diffusion Models Restore Images From Blur

Researchers are applying denoising diffusion probabilistic models (DDPMs) to image deblurring, where they restore sharp images from blurred originals with strong results. The same approach extends to applications such as reconstructing 3D medical images and improving weather forecasts. A DDPM learns to reverse a process that gradually adds noise to an image, so that it can reconstruct a clean image step by step from a noisy one. The team explores network designs and training strategies that adapt these models to the specific challenges of deblurring. The method combines transformer-based architectures, which capture long-range relationships within an image, with U-Net-like structures that process and refine the image at several scales.
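The noising-and-reversal idea can be made concrete with the standard DDPM formulation of Ho et al. (2020). The sketch below is a minimal NumPy illustration under that assumption and does not reproduce the deblurring networks discussed here. It uses a linear variance schedule, samples a noisy image in closed form, and takes one reverse step. A trained network would normally predict the added noise. Here the true noise is passed in as a hypothetical oracle stand-in.

```python
import numpy as np

# Linear variance schedule over T steps (the values used by Ho et al., 2020).
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)  # abar_t = product of alpha_s for s <= t


def q_sample(x0, t, rng):
    """Forward process: draw x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I) in one shot."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps


def predict_x0(xt, t, eps_pred):
    """Invert the forward equation, given an estimate of the added noise."""
    return (xt - np.sqrt(1.0 - alpha_bars[t]) * eps_pred) / np.sqrt(alpha_bars[t])


def p_step(xt, t, eps_pred, rng):
    """One reverse (denoising) step x_t -> x_{t-1}, using sigma_t^2 = beta_t."""
    mean = (xt - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_pred) / np.sqrt(alphas[t])
    if t == 0:
        return mean
    return mean + np.sqrt(betas[t]) * rng.standard_normal(xt.shape)


rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # toy "image" scaled to [-1, 1]
t = 500
xt, eps = q_sample(x0, t, rng)

# A trained network eps_theta(x_t, t) would supply eps_pred; the true noise stands in here.
x0_hat = predict_x0(xt, t, eps)
x_prev = p_step(xt, t, eps, rng)
print(np.allclose(x0_hat, x0))  # True
```

In training, the network is fitted by minimizing the mean squared error between the sampled noise `eps` and its prediction. Sampling then applies `p_step` repeatedly from pure noise down to `t = 0`.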

Self-attention further improves image quality by letting the network weight the most relevant features. To accelerate reconstruction, the team uses progressive distillation. This technique repeatedly trains a student model to match two sampling steps of its teacher in a single step, which reduces the number of steps DDPM sampling requires. They also investigate self-supervised learning to make the model more robust. The approach is validated on large-scale image databases such as ImageNet and on standard deblurring datasets. It is also applied to 3D CT reconstruction and precipitation nowcasting, which demonstrates its versatility. Performance is measured with peak signal-to-noise ratio (PSNR), the structural similarity index (SSIM), and learned perceptual image patch similarity (LPIPS), alongside subjective evaluations by human observers. Building on recent advances in deep learning, this line of work reports state-of-the-art results in image deblurring and related fields.
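Of the metrics listed, PSNR is simple enough to write out directly. It compares the mean squared error against the squared peak value and expresses the ratio in decibels. The minimal NumPy sketch below assumes images scaled to [0, 1]. The `data_range` parameter is an assumption and would be 255 for 8-bit images. SSIM depends on windowed local statistics and LPIPS on a pretrained network, so both are normally taken from existing libraries rather than reimplemented.

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


sharp = np.zeros((4, 4))
restored = np.full((4, 4), 0.1)  # uniform error of 0.1 -> MSE = 0.01
print(round(psnr(sharp, restored), 6))  # 20.0
```

Because PSNR is logarithmic, every tenfold reduction in MSE adds 10 dB.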

Motion Trajectory Estimation with Diffusion Models

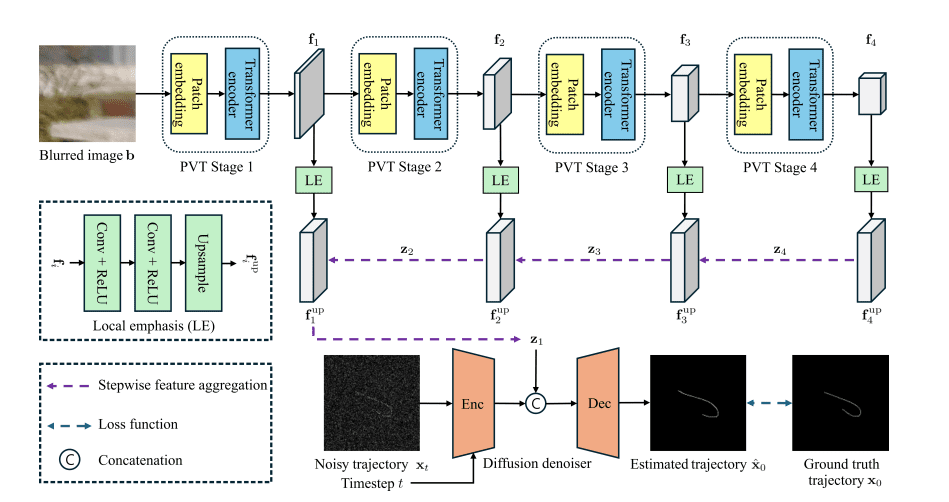

MoTDiff is a new framework for estimating high-resolution motion trajectories from a single motion-blurred image, designed to overcome the limitations of existing motion representations. At its core is a conditional diffusion model that uses multi-scale feature maps extracted from the blurred image to reconstruct fine-grained motion details. This allows it to recover the complex motion paths that matter for applications such as image deblurring and coded exposure photography. To identify the trajectory precisely, the team developed a new training method with three goals: capturing fine-grained motion accurately, keeping the overall shape of the path consistent, and preserving pixel connectivity along the trajectory. Together, these goals push the estimate to reflect local movements while following a coherent, realistic global path.
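A simple diagnostic can illustrate the connectivity goal. A trajectory drawn on a pixel grid should form a single 8-connected stroke, so the number of connected components measures how fragmented an estimate is. `count_components` is a hypothetical helper written for illustration and does not come from the paper. It is also not the authors' training loss, whose exact form is not described here.

```python
from collections import deque

import numpy as np


def count_components(mask: np.ndarray) -> int:
    """Count 8-connected components of nonzero pixels in a 2-D mask (breadth-first search)."""
    mask = mask.astype(bool)
    seen = np.zeros_like(mask)
    h, w = mask.shape
    count = 0
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            count += 1  # found an unvisited trajectory pixel: start a new component
            seen[r, c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
    return count


traj = np.eye(16, dtype=bool)  # diagonal stroke: connected under 8-connectivity
broken = traj.copy()
broken[8, 8] = False           # remove one pixel -> two fragments
print(count_components(traj), count_components(broken))  # 1 2
```

8-connectivity is used because a diagonal step between neighbouring pixels is a legitimate part of a continuous path. With 4-connectivity, every diagonal stroke would count as fragmented.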

Experiments show that MoTDiff outperforms state-of-the-art methods in reconstructing both sharp images and accurate motion information for blind image deblurring and coded exposure photography. Methods based on optical flow or parametric representations are limited in the paths they can express. MoTDiff's diffusion-based framework can instead reconstruct arbitrary, non-linear motion paths with greater fidelity. Its training strategy targets both local accuracy and global consistency in the estimated trajectory, and this combination underlies the performance gains in both applications. High-resolution motion estimates of this kind could support further advances in computational imaging and computer vision.

High Resolution Motion Reconstruction From Single Images

MoTDiff aims to reconstruct the path of motion accurately instead of relying on coarser or simplified representations. In experiments, it outperforms existing state-of-the-art methods in both blind image deblurring and coded exposure photography. To overcome the limitations of previous approaches, the team introduced a new representation of motion trajectories: a set of points mapped to a pixel grid whose resolution matches that of the input image.
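The sketch below shows what mapping a trajectory onto a pixel grid involves. It rasterizes a densely sampled, curved path by rounding each point to its nearest pixel and counting how many samples land there. The curve, the sampling density, and the helper name `rasterize_trajectory` are illustrative assumptions, and the paper's exact discretization may differ. Rasterizing the same path on a grid eight times coarser shows how much spatial detail a low-resolution grid discards.

```python
import numpy as np


def rasterize_trajectory(points: np.ndarray, size: int) -> np.ndarray:
    """Accumulate (x, y) points, given in pixel units, onto a size x size grid."""
    grid = np.zeros((size, size))
    cols = np.clip(np.rint(points[:, 0]).astype(int), 0, size - 1)
    rows = np.clip(np.rint(points[:, 1]).astype(int), 0, size - 1)
    np.add.at(grid, (rows, cols), 1.0)  # unbuffered add counts repeated pixels correctly
    return grid


# A curved, non-linear path sampled densely in time (illustrative only).
s = np.linspace(0.0, 1.0, 2000)
path = np.stack([128 + 40 * s, 128 + 20 * np.sin(2 * np.pi * s)], axis=1)

fine = rasterize_trajectory(path, 256)         # grid matches a 256x256 input
coarse = rasterize_trajectory(path / 8.0, 32)  # same path on a 32x32 grid
print(np.count_nonzero(fine), np.count_nonzero(coarse))
```

`np.add.at` is used here because `grid[rows, cols] += 1` with fancy indexing increments a repeated pixel only once, which would lose information about how long the motion lingered at that pixel.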

With a spatial resolution of 256×256 pixels, this trajectory representation can capture fine-grained motion details and complex patterns. Earlier methods worked in continuous space or on coarser pixel grids. Traditional representations, such as point sets in continuous coordinates or parametric curves, struggle to represent complex motion accurately. The proposed representation is designed to recover the underlying motion characteristics in full. The core of MoTDiff is a conditional diffusion model that extracts multi-scale features with a Pyramid Vision Transformer (PVT). The PVT processes the blurred image and produces feature maps at several scales, capturing both local detail and global context. These multi-scale features are fed into the diffusion model as a condition, guiding it to generate a fine-grained, accurate trajectory. The team showed that this approach can identify latent motion information in a blurred image and use it to steer the diffusion process toward high-resolution results.
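The multi-scale conditioning idea can be sketched without a real transformer. A PVT produces feature maps at 1/4, 1/8, 1/16 and 1/32 of the input resolution. In the sketch below, plain average pooling stands in for its learned stages, and the coarse maps are upsampled and stacked into a single conditioning tensor. Both the pooling stand-in and this upsample-and-concatenate fusion are assumptions made for illustration. They are not MoTDiff's actual conditioning mechanism.

```python
import numpy as np

STRIDES = (4, 8, 16, 32)  # downsampling factors of the four PVT stages


def avg_pool(x: np.ndarray, k: int) -> np.ndarray:
    """Non-overlapping k x k average pooling of a (C, H, W) array."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).mean(axis=(2, 4))


def feature_pyramid(image: np.ndarray) -> list:
    """Stand-in for the PVT stages: one pooled map per stride (no learned weights)."""
    return [avg_pool(image, s) for s in STRIDES]


def fuse(pyramid: list) -> np.ndarray:
    """Hypothetical fusion: nearest-neighbour upsample every level to the finest
    stride, then concatenate along the channel axis."""
    finest = STRIDES[0]
    levels = []
    for feat, s in zip(pyramid, STRIDES):
        f = s // finest
        levels.append(feat.repeat(f, axis=1).repeat(f, axis=2))
    return np.concatenate(levels, axis=0)


rng = np.random.default_rng(0)
blurred = rng.random((3, 256, 256))  # toy RGB blurred image
pyramid = feature_pyramid(blurred)
condition = fuse(pyramid)
print([p.shape for p in pyramid], condition.shape)
# [(3, 64, 64), (3, 32, 32), (3, 16, 16), (3, 8, 8)] (12, 64, 64)
```

The fine levels keep local detail, while the coarse levels summarize larger regions. Stacking them gives each spatial location access to both kinds of context.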

Detailed Motion Trajectories from Blurred Images

In summary, MoTDiff estimates high-resolution motion trajectories from a single motion-blurred image, addressing the limited accuracy of existing motion representation techniques. Previous methods produce coarse or imprecise motion data. MoTDiff reconstructs detailed motion paths that capture characteristics such as direction and curvature. It achieves this by combining a new conditional diffusion model with a new training strategy that keeps the estimated trajectories spatially coherent and dense. In experiments, MoTDiff outperforms current state-of-the-art methods in both blind image deblurring and coded exposure photography.

The more accurate trajectories directly benefit both tasks, particularly coded exposure photography, where precise motion data is crucial for effective image reconstruction. In deblurring, even fragmented trajectories can still give acceptable results because of resampling, but the team highlights the overall improvement in trajectory quality. As future work, the team plans to integrate trajectory estimation directly into an end-to-end framework, streamlining the whole image reconstruction pipeline.
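The link between a trajectory and these two applications can be sketched under the common assumption of spatially uniform blur. The time-ordered trajectory, accumulated onto a small grid and normalized, acts as a blur kernel. In coded exposure photography, a binary shutter code switches samples on or off over time. The shutter code, kernel size, and helper names below are hypothetical, and the FFT-based convolution is circular for simplicity. The sketch illustrates the image-formation model, not MoTDiff's reconstruction pipeline.

```python
import numpy as np


def trajectory_to_kernel(offsets: np.ndarray, size: int, code=None) -> np.ndarray:
    """Accumulate time-ordered (dx, dy) offsets into a normalized size x size kernel.
    `code` is an optional 0/1 shutter sequence with one entry per time sample."""
    weights = np.ones(len(offsets)) if code is None else np.asarray(code, dtype=float)
    kernel = np.zeros((size, size))
    centre = size // 2
    cols = np.clip(np.rint(offsets[:, 0]).astype(int) + centre, 0, size - 1)
    rows = np.clip(np.rint(offsets[:, 1]).astype(int) + centre, 0, size - 1)
    np.add.at(kernel, (rows, cols), weights)  # closed-shutter samples add 0
    return kernel / kernel.sum()


def blur_circular(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Circular 2-D convolution via FFT, with the kernel centred at the origin."""
    kh, kw = kernel.shape
    padded = np.zeros_like(image, dtype=float)
    padded[:kh, :kw] = kernel
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(padded)))


# Horizontal motion of 16 pixels, sampled at 32 instants (illustrative).
offsets = np.stack([np.linspace(-8, 8, 32), np.zeros(32)], axis=1)
code = np.array([1, 0, 1, 1, 0, 0, 1, 0] * 4)  # hypothetical shutter code

box_kernel = trajectory_to_kernel(offsets, 17)          # conventional exposure
coded_kernel = trajectory_to_kernel(offsets, 17, code)  # coded exposure

rng = np.random.default_rng(0)
sharp = rng.random((64, 64))
blurred_box = blur_circular(sharp, box_kernel)
blurred_coded = blur_circular(sharp, coded_kernel)
```

Normalizing the kernel to sum to one keeps overall image brightness unchanged. In practice, a shutter code is chosen so that the resulting blur keeps a broad frequency response and is easier to invert. The code above is arbitrary and is not optimized for that.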

👉 More information

🗞 MoTDiff: High-resolution Motion Trajectory estimation from a single blurred image using Diffusion models

🧠 ArXiv: https://arxiv.org/abs/2510.26173