Estimating both the motion of a camera and the apparent motion of the scene (optical flow) is a core challenge in three-dimensional vision, yet the two tasks are often tackled separately. Wenpu Li and Bangyan Liao from Westlake University and Zhejiang University, Yi Zhou, Qi Xu, and Pian Wan from Georgia Institute of Technology, and Peidong Liu from Westlake University present E-MoFlow. This new framework jointly optimizes both estimates using data from event cameras, whose sparse, asynchronous output makes reliable data association difficult. Existing methods can introduce bias or converge to suboptimal solutions. E-MoFlow avoids these failure modes through implicit spatial, temporal, and geometric regularization, which embeds coherence directly into the estimation process. The team demonstrates that E-MoFlow achieves state-of-the-art performance among unsupervised methods and is competitive with supervised approaches. This makes it a versatile solution for general six-degree-of-freedom motion scenarios and advances the field of neuromorphic vision.

Continuous Motion via Splines and Implicit Neural Flow

This research details a new approach to jointly estimating six-degree-of-freedom (6-DoF) camera motion and dense optical flow, particularly suited to event-based cameras. Optical flow is represented implicitly by a neural network, while camera motion is parameterized by cubic B-splines, which guarantee smoothness and continuity. Because the spline is defined by a sparse set of control knots, the parameterization stays compact. This avoids over-parameterization and naturally enforces realistic motion constraints. A key component is a geometric loss derived from the motion field equation. It eliminates the unknown depth, so optical flow and 6-DoF motion can be learned jointly without supervision. Qualitative results show accurate 6-DoF motion estimates and smoother, more continuous optical flow than competing methods.
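The spline parameterization described above can be sketched as a uniform cubic B-spline evaluated from a sparse set of control knots. This is a minimal sketch under several assumptions. It assumes uniformly spaced knots and treats each control point as a plain 6-vector, such as a hypothetical stack of linear and angular velocity. A full pose trajectory would usually be formulated on SE(3) instead of a vector space. The function name `eval_cubic_bspline` is illustrative and not taken from E-MoFlow's code.

```python
import numpy as np

# Uniform cubic B-spline basis matrix: p(u) = [1, u, u^2, u^3] @ M @ [P_i, ..., P_{i+3}]
M = np.array([
    [1.0, 4.0, 1.0, 0.0],
    [-3.0, 0.0, 3.0, 0.0],
    [3.0, -6.0, 3.0, 0.0],
    [-1.0, 3.0, -3.0, 1.0],
]) / 6.0


def eval_cubic_bspline(ctrl, t0, dt, t):
    """Evaluate a uniform cubic B-spline at one or more query times.

    ctrl: (K, D) control knots; D=6 could hold a hypothetical [v, omega] vector.
    t0:   start time of the first segment; dt: spacing between knots.
    Valid query times lie in [t0, t0 + (K - 3) * dt].
    """
    ctrl = np.asarray(ctrl, dtype=float)
    t = np.atleast_1d(np.asarray(t, dtype=float))
    n_seg = ctrl.shape[0] - 3
    if n_seg < 1:
        raise ValueError("a cubic B-spline needs at least 4 control knots")
    s = (t - t0) / dt
    # Segment index, clamped so that the final valid time uses the last segment.
    i = np.clip(np.floor(s).astype(int), 0, n_seg - 1)
    u = s - i  # local parameter, in [0, 1] for valid query times
    U = np.stack([np.ones_like(u), u, u**2, u**3], axis=1)  # (N, 4)
    W = U @ M  # (N, 4) blending weights; each row sums to 1
    idx = i[:, None] + np.arange(4)[None, :]  # the 4 knots that influence each query
    return np.einsum("nk,nkd->nd", W, ctrl[idx])


# Usage: 8 sparse knots with 6 values each, spaced 0.1 s apart (5 segments).
knots = np.random.default_rng(0).normal(size=(8, 6))
times = np.linspace(0.0, 0.5, 101)
traj = eval_cubic_bspline(knots, t0=0.0, dt=0.1, t=times)
print(traj.shape)  # (101, 6)
```

Each query depends on only four neighboring knots, so a handful of knots describes an entire trajectory. The cubic basis also keeps the curve twice continuously differentiable.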

Ego-Motion and Optical Flow from Event Data

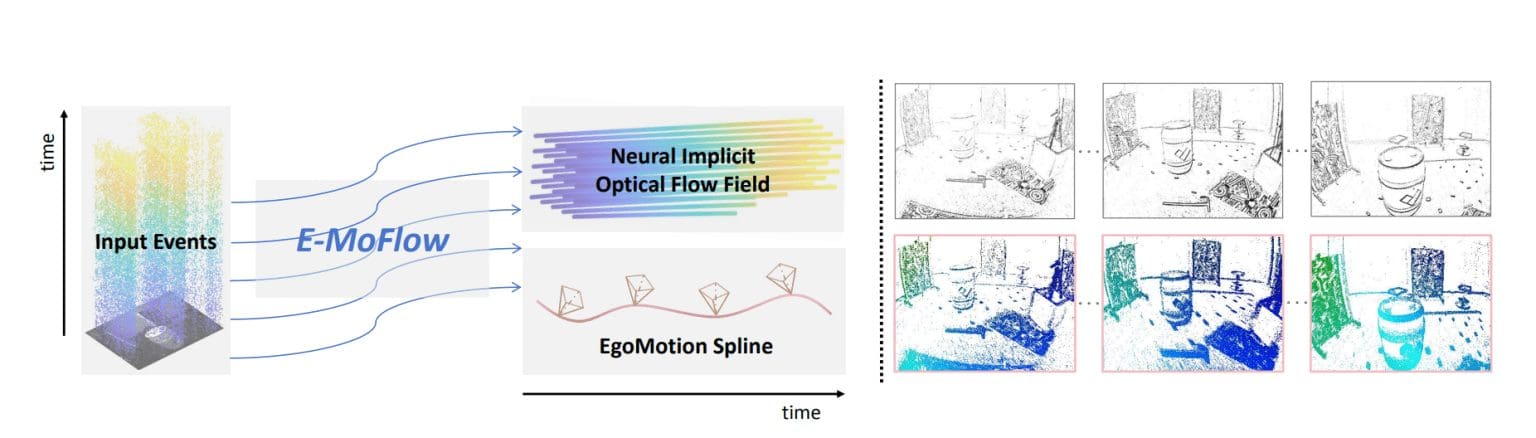

The research team developed E-MoFlow, a framework that simultaneously estimates optical flow and six-degree-of-freedom (6-DoF) ego-motion from neuromorphic event camera data. Estimating flow and motion independently is ill-posed without ground-truth supervision. The authors therefore propose an unsupervised approach built on implicit spatial-temporal and geometric regularization. Camera ego-motion is modeled as a continuous spline and optical flow as an implicit neural representation, which builds temporal coherence into the learned model itself. Differential geometric constraints link motion and flow directly, so no explicit depth estimation is needed. Given event data as input, the method produces dense, continuous optical flow fields together with the corresponding warped event images for visualization and analysis. It achieves state-of-the-art performance across a variety of 6-DoF motion scenarios, with robustness comparable to supervised approaches.
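The warped event images mentioned above come from moving each event along the estimated flow to a common reference time and accumulating the result. This sketch makes three assumptions. A single constant flow vector covers a short time window. Warped events are rounded to the nearest pixel, with polarity ignored. Image variance serves as a sharpness score, which is the contrast-maximization idea widely used in event-based vision. The function names are hypothetical, and E-MoFlow's own warping and loss may differ.

```python
import numpy as np


def warp_events(xs, ys, ts, flow, t_ref):
    """Transport events to time t_ref under a constant flow (pixels per second)."""
    dt = ts - t_ref
    return xs - dt * flow[0], ys - dt * flow[1]


def image_of_warped_events(xs, ys, shape):
    """Count warped events per pixel (nearest-pixel rounding, polarity ignored)."""
    img = np.zeros(shape)
    cols = np.rint(xs).astype(int)
    rows = np.rint(ys).astype(int)
    inside = (rows >= 0) & (rows < shape[0]) & (cols >= 0) & (cols < shape[1])
    # np.add.at accumulates correctly even when several events land on one pixel.
    np.add.at(img, (rows[inside], cols[inside]), 1.0)
    return img


def contrast(img):
    """Variance of the image: better-aligned (sharper) warps score higher."""
    return float(np.var(img))


# Usage: synthetic events from one point moving right at 20 px/s for 0.5 s.
ts = np.arange(21) * 0.025
xs = 5.0 + 20.0 * ts
ys = np.full_like(ts, 10.0)
for flow in (np.array([0.0, 0.0]), np.array([20.0, 0.0])):
    xw, yw = warp_events(xs, ys, ts, flow, t_ref=0.0)
    print(flow, contrast(image_of_warped_events(xw, yw, (32, 32))))
```

With zero flow, the events smear along a line about ten pixels long. With the true flow, they collapse onto a single pixel, so the variance is higher. Maximizing such a score over flow parameters is one way to fit flow without ground truth.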

Ego-Motion and Optical Flow via Implicit Representations

Scientists developed E-MoFlow, a framework that simultaneously estimates optical flow and 6-DoF ego-motion from event camera data and achieves state-of-the-art performance among unsupervised methods. The work addresses a fundamental challenge in neuromorphic vision: without robust data association, estimating flow and motion independently is ill-posed. The researchers formulated camera ego-motion as a continuous spline and optical flow as an implicit neural representation, which inherently embeds spatial-temporal coherence. They incorporated structure-and-motion priors through differential geometric constraints that bypass explicit depth estimation while maintaining rigorous geometric consistency. These constraints stabilize the joint solution, so both motion and flow are estimated accurately. Experiments show that E-MoFlow recovers dense optical flow fields and the corresponding warped event images, providing motion and structural information for downstream applications.
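The depth-free geometric constraint can be made concrete with the classical instantaneous motion field. The paper's exact formulation and sign convention may differ, so the following is an illustrative assumption. Take a calibrated camera translating with linear velocity $\mathbf{v}$ and rotating with angular velocity $\boldsymbol{\omega}$ through a static scene. A point at depth $Z$ with normalized image coordinates $\mathbf{x} = (x, y)$ then has image velocity

$$
\mathbf{u}(\mathbf{x}) = \frac{1}{Z}\,A(\mathbf{x})\,\mathbf{v} + B(\mathbf{x})\,\boldsymbol{\omega},
\quad
A(\mathbf{x}) = \begin{bmatrix} -1 & 0 & x \\ 0 & -1 & y \end{bmatrix},
\quad
B(\mathbf{x}) = \begin{bmatrix} xy & -(1 + x^2) & y \\ 1 + y^2 & -xy & -x \end{bmatrix}.
$$

The factor $1/Z$ only rescales the translational term, so $\mathbf{u} - B\boldsymbol{\omega}$ must be parallel to $A\mathbf{v}$. Their 2D cross product therefore vanishes at every pixel, regardless of depth:

$$
r(\mathbf{x}) = \big(\mathbf{u} - B\boldsymbol{\omega}\big)^{\top}
\begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}
A\mathbf{v} = 0.
$$

Penalizing $r$ across the image ties the flow field to the ego-motion without ever estimating $Z$. Because $r$ is linear in $\mathbf{v}$, translation is determined only up to scale.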

Joint Optimization of Motion and Flow Estimation

This research presents E-MoFlow, a framework for simultaneously estimating optical flow and camera motion from event-based vision systems. It addresses a long-standing challenge in the field: current methods often struggle to establish reliable data associations without supervisory signals. E-MoFlow overcomes this by modeling camera motion as a continuous spline and optical flow as an implicit neural representation, embedding spatial-temporal coherence directly into the system's design. The key contribution is the joint optimization of these two elements through differential geometric constraints. This bypasses explicit depth estimation while maintaining rigorous geometric consistency. Experiments show that E-MoFlow achieves state-of-the-art performance among unsupervised methods for both flow and 6-DoF ego-motion estimation and is competitive with supervised approaches.
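The depth-free coupling between flow and motion can be checked numerically. This sketch assumes normalized pixel coordinates and the motion-field model u = A v / Z + B omega, using the sign convention written in the code. It synthesizes flow for random points at random depths, then evaluates the cross-product residual (u - B omega) x (A v) for the true and a perturbed rotation. The helper names are hypothetical. In a joint optimization of the kind described above, a residual like this would be minimized over the flow and motion parameters together, but E-MoFlow's actual loss terms may differ.

```python
import numpy as np


def motion_field_matrices(x, y):
    """Per-pixel A(x) (translation) and B(x) (rotation) matrices, each (N, 2, 3)."""
    zeros, ones = np.zeros_like(x), np.ones_like(x)
    A = np.stack([
        np.stack([-ones, zeros, x], axis=-1),
        np.stack([zeros, -ones, y], axis=-1),
    ], axis=1)
    B = np.stack([
        np.stack([x * y, -(1.0 + x**2), y], axis=-1),
        np.stack([1.0 + y**2, -x * y, -x], axis=-1),
    ], axis=1)
    return A, B


def synth_flow(x, y, depth, v, omega):
    """Motion field u = A v / Z + B omega for static points at the given depths."""
    A, B = motion_field_matrices(x, y)
    return (A @ v) / depth[:, None] + B @ omega


def depth_free_residual(flow, x, y, v, omega):
    """2D cross product of (u - B omega) with A v; depth never appears."""
    A, B = motion_field_matrices(x, y)
    a = flow - B @ omega  # translational flow component (A v / Z if motion is right)
    b = A @ v             # direction the translational flow must follow
    return a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0]


# Usage: synthesize flow from a known motion, then score true vs. perturbed rotation.
rng = np.random.default_rng(0)
x, y = rng.uniform(-0.5, 0.5, size=(2, 200))
depth = rng.uniform(1.0, 10.0, size=200)
v_true = np.array([0.3, -0.1, 1.0])
w_true = np.array([0.02, -0.05, 0.01])
flow = synth_flow(x, y, depth, v_true, w_true)

r_true = depth_free_residual(flow, x, y, v_true, w_true)
r_bad = depth_free_residual(flow, x, y, v_true, w_true + 0.05)
print(np.abs(r_true).max(), np.abs(r_bad).mean())
```

For the true motion, the residual stays at rounding-error level, while the perturbed rotation gives a clearly nonzero residual. Scaling `v` by a nonzero factor scales `r` but does not change where it vanishes. Translation is therefore recovered only up to scale, and a practical loss would also need to exclude the trivial solution `v = 0`.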

👉 More information

🗞 E-MoFlow: Learning Egomotion and Optical Flow from Event Data via Implicit Regularization

🧠 ArXiv: https://arxiv.org/abs/2510.12753