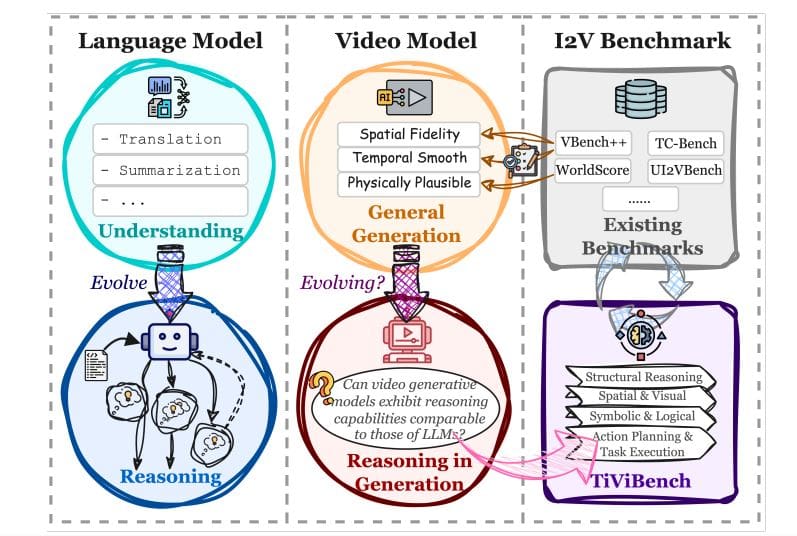

Intelligent video generation now has to go beyond visual realism and demonstrate complex reasoning, and a new benchmark, TiViBench, targets exactly this challenge. Harold Haodong Chen, Disen Lan, Wen-Jie Shu, and colleagues systematically evaluate how well current image-to-video models apply logic, plan actions, and interpret spatial relationships within video content. TiViBench assesses reasoning across four key dimensions, using 24 diverse tasks at three difficulty levels, and reveals that while commercial models like Sora 2 and Veo 3.1 exhibit stronger reasoning capabilities, open-source alternatives possess untapped potential. To unlock this potential, the team also introduces VideoTPO, a test-time strategy that uses large language model self-analysis of generated videos to refine the prompts behind them. The approach improves reasoning performance without further training or data.

Prompt Refinement For Video Generation Quality

This analysis walks through the process of refining prompts for video generation, detailing each attempt and the video it produced. It identifies weaknesses in the initial prompts and iteratively refines them, anticipating further problems along the way. The refined prompts are considerably more detailed and specific: they request defined settings, actions, visual styles, and even animated elements, covering both technical and conceptual aspects of prompt engineering. Clearly labeling each prompt version makes it easier to compare versions and see the impact of each change, while explicitly stating what the video should not include can prevent unwanted elements. Adding example keywords or phrases focuses the model on specific concepts, and tailoring prompts to the strengths and weaknesses of the particular video generation model in use can further improve results. For complex scenes, breaking the prompt down into a shot list helps ensure a logical narrative.
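The refinement practices described above (labeled versions, explicit exclusions, and a shot list) can be sketched as a small prompt builder. This is only an illustration: the `PromptVersion` class, its field names, and the rendered wording are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptVersion:
    """One labeled prompt revision (hypothetical structure, for illustration)."""
    label: str                                         # e.g. "v1", "v2", to compare revisions
    setting: str                                       # where the scene takes place
    action: str                                        # what should happen in the video
    style: str = ""                                    # optional visual style
    shots: List[str] = field(default_factory=list)     # ordered shot list for complex scenes
    exclude: List[str] = field(default_factory=list)   # elements the video should not include

    def render(self) -> str:
        """Assemble the fields into a single prompt string."""
        parts = [f"Setting: {self.setting}.", f"Action: {self.action}."]
        if self.style:
            parts.append(f"Style: {self.style}.")
        for i, shot in enumerate(self.shots, start=1):
            parts.append(f"Shot {i}: {shot}.")
        if self.exclude:
            parts.append("Do not include: " + ", ".join(self.exclude) + ".")
        return " ".join(parts)


v1 = PromptVersion(label="v1", setting="a kitchen", action="a hand pours water")
v2 = PromptVersion(
    label="v2",
    setting="a kitchen",
    action="a hand pours water into a glass",
    shots=["wide view of the counter", "close-up of the glass filling"],
    exclude=["text overlays"],
)
for version in (v1, v2):
    print(version.label, "->", version.render())
```

Keeping each revision as a separate labeled object makes it straightforward to see which change produced which difference in the output video.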

TiViBench Assesses Reasoning in Image-to-Video Generation

Scientists developed TiViBench, a hierarchical benchmark to rigorously evaluate reasoning capabilities in image-to-video generation. The benchmark systematically assesses performance across four key dimensions: Structural Reasoning and Search, Spatial and Visual Pattern Reasoning, Symbolic and Logical Reasoning, and Action Planning and Task Execution. It comprises 24 diverse task scenarios, each categorized into three difficulty levels, providing a comprehensive evaluation of zero-shot reasoning abilities. Extensive experiments using both commercial and open-source models revealed that commercial models currently demonstrate stronger reasoning capabilities, while open-source models possess untapped potential limited by training scale and data diversity.

To unlock this potential, scientists introduced VideoTPO, a test-time strategy inspired by preference optimization techniques used in large language models. VideoTPO generates multiple candidate videos and uses a large language model to analyze their strengths and weaknesses, identifying areas for improvement without requiring additional training data or reward models. This combination of multi-pass generation and preference alignment enables more fine-grained and accurate prompt optimization, improving reasoning performance on the fly without the costs of supervised fine-tuning or reinforcement learning.
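The general shape of such a test-time loop can be sketched as follows. This is a minimal sketch of the idea as summarized here, not the authors' implementation: the function name, the `generate`, `critique`, and `revise` callables, the numeric score, and the stub behavior are all assumptions made for illustration. In practice `generate` would call a video model and `critique` and `revise` would call a large language model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Candidate:
    prompt: str
    video: str    # placeholder handle for a generated video, e.g. a file path
    score: float  # critic's numeric rating (an assumption of this sketch)
    notes: str    # critic's textual analysis of strengths and weaknesses


def optimize_prompt_at_test_time(
    prompt: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Tuple[float, str]],
    revise: Callable[[str, str, str], str],
    num_candidates: int = 2,
    num_rounds: int = 2,
) -> Candidate:
    """Generate several candidates per round, compare them, and revise the prompt."""
    if num_candidates < 1 or num_rounds < 1:
        raise ValueError("num_candidates and num_rounds must be at least 1")
    best: Optional[Candidate] = None
    for _ in range(num_rounds):
        candidates: List[Candidate] = []
        for _ in range(num_candidates):
            video = generate(prompt)
            score, notes = critique(prompt, video)
            candidates.append(Candidate(prompt, video, score, notes))
        # Rank candidates so the critic's preferred and rejected outputs can be contrasted.
        candidates.sort(key=lambda c: c.score, reverse=True)
        preferred, rejected = candidates[0], candidates[-1]
        if best is None or preferred.score > best.score:
            best = preferred
        # No model weights change: only the prompt is updated between rounds.
        prompt = revise(prompt, preferred.notes, rejected.notes)
    assert best is not None
    return best


# Toy stand-ins so the sketch runs without any model (purely hypothetical behavior).
def stub_generate(prompt: str) -> str:
    return f"video({prompt})"


def stub_critique(prompt: str, video: str) -> Tuple[float, str]:
    score = float(video.count("step"))
    return score, "explicit steps shown" if score else "no explicit steps shown"


def stub_revise(prompt: str, preferred_notes: str, rejected_notes: str) -> str:
    return prompt + " Show each step explicitly."


result = optimize_prompt_at_test_time(
    "Solve the maze.", stub_generate, stub_critique, stub_revise
)
print(result.prompt, result.score)
```

The point of the design is that all adaptation happens in the prompt at inference time, which is why no fine-tuning or separately trained reward model is needed; the cost is extra generation passes per input.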

TiViBench Evaluates Reasoning in Video Generation

The research team developed TiViBench, a comprehensive benchmark to rigorously evaluate the reasoning capabilities of image-to-video generation models. This work addresses a gap in existing benchmarks, which primarily assess visual fidelity and temporal coherence, failing to probe higher-order reasoning abilities. TiViBench systematically assesses reasoning across four key dimensions: Structural Reasoning and Search, Spatial and Visual Pattern Reasoning, Symbolic and Logical Reasoning, and Action Planning and Task Execution, encompassing 24 diverse task scenarios and three difficulty levels. The benchmark comprises a total of 595 image-prompt samples, providing a robust foundation for evaluating video generative reasoning.

Detailed analysis reveals characteristics of the benchmark's data, with "Visual Analogy," "Structural Reasoning," and "Tool Use" accounting for significant portions of the dataset. Distributing samples across the 24 tasks and three difficulty levels allows a nuanced assessment of model performance. Researchers used Gemini-2.5-Pro to generate effective prompts that are grounded in visual information and designed to drive reasoning, emphasizing task subjectivity and narrative descriptiveness. The team prioritized data quality and diversity, collecting samples from internet data, existing datasets, and synthetic data to support a comprehensive and reliable evaluation of video generative reasoning capabilities.
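One way to picture the benchmark's hierarchy (dimension, task, difficulty, image-prompt sample) is as a simple record type with a grouped score. The sketch below is illustrative only: the four dimension names come from the text above, but the difficulty labels, the `Sample` fields, the placeholder task name, and the use of a pass rate as the metric are assumptions rather than details confirmed by the paper.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

DIMENSIONS = (
    "Structural Reasoning and Search",
    "Spatial and Visual Pattern Reasoning",
    "Symbolic and Logical Reasoning",
    "Action Planning and Task Execution",
)
DIFFICULTIES = ("easy", "medium", "hard")  # assumed labels for the three levels


@dataclass(frozen=True)
class Sample:
    dimension: str   # one of DIMENSIONS
    task: str        # one of the 24 task scenarios (placeholder names used below)
    difficulty: str  # one of DIFFICULTIES
    image_path: str  # input image for image-to-video generation
    prompt: str      # text prompt paired with the image


def pass_rate_by_group(
    results: Iterable[Tuple[Sample, bool]],
) -> Dict[Tuple[str, str], float]:
    """Fraction of samples judged correct, grouped by (dimension, difficulty)."""
    totals: Dict[Tuple[str, str], int] = defaultdict(int)
    passed: Dict[Tuple[str, str], int] = defaultdict(int)
    for sample, ok in results:
        key = (sample.dimension, sample.difficulty)
        totals[key] += 1
        passed[key] += int(ok)
    return {key: passed[key] / totals[key] for key in totals}


# Hypothetical results for two samples of a placeholder task.
a = Sample(DIMENSIONS[0], "placeholder_task", "easy", "a.png", "example prompt")
b = Sample(DIMENSIONS[0], "placeholder_task", "easy", "b.png", "example prompt")
print(pass_rate_by_group([(a, True), (b, False)]))
```

Grouping by both dimension and difficulty mirrors how the benchmark is described: it lets a model's strengths in one reasoning dimension be separated from weaknesses in another, and from how performance changes as tasks get harder.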

TiViBench Evaluates Image-to-Video Reasoning Capabilities

This work introduces TiViBench, a comprehensive hierarchical benchmark designed to rigorously evaluate reasoning capabilities in image-to-video generation models. The benchmark assesses performance across four key dimensions: Structural Reasoning and Search, Spatial and Visual Pattern Reasoning, Symbolic and Logical Reasoning, and Action Planning and Task Execution, using a diverse set of 24 task scenarios at three difficulty levels. Evaluations using TiViBench reveal that commercially available models currently demonstrate stronger and more consistent reasoning abilities than open-source alternatives, although the latter show promising potential. To unlock this potential in open-source models, researchers developed VideoTPO, a lightweight test-time strategy that improves performance without requiring additional training, training data, or reward models.

VideoTPO combines multi-pass generation with preference alignment, refining prompts based on a large language model's analysis of candidate videos. Results demonstrate that VideoTPO significantly enhances reasoning performance, and that different models respond differently to prompt optimization techniques. This work establishes a foundation for continued advancement in reasoning capabilities within the field of video generation.

👉 More information

🗞 TiViBench: Benchmarking Think-in-Video Reasoning for Video Generative Models

🧠 ArXiv: https://arxiv.org/abs/2511.13704