Enhancing the reasoning capabilities of large language models receives a significant boost from new research into test-time scaling, a technique that dynamically allocates computational resources during inference. Aradhye Agarwal from Microsoft Research, along with Ayan Sengupta and Tanmoy Chakraborty from the Indian Institute of Technology Delhi, and their colleagues, present the first large-scale study that systematically compares test-time scaling strategies. Their investigation, spanning over thirty billion tokens generated by eight open-source language models, reveals that no single approach consistently outperforms the others, and that performance depends strongly on both the difficulty of the problem and the length of the reasoning trace. The work establishes clear guidelines for selecting the most effective scaling strategy based on these factors, offering a practical pathway to optimise inference and unlock the full potential of large language models.

Decoding Strategies, Cost and Accuracy Tradeoffs

This research investigates the balance between accuracy and computational cost when using different decoding strategies for large language models (LLMs) on mathematical and reasoning tasks. The team compared beam search, a standard but relatively expensive method, with majority voting, which samples multiple responses and selects the most frequent final answer, delivering high accuracy at a significant computational cost. They also explored first finish search and last finish search, cost-saving strategies that stop decoding once a sufficient number of responses have been generated. The study assessed these strategies on datasets focused on mathematical and symbolic reasoning, measuring accuracy alongside the total number of generated tokens, which serves as a proxy for computational cost.
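A minimal sketch of majority voting together with the token-count cost proxy described above, assuming each sampled trace has already been reduced to a final-answer string and a token count. The `Trace` class, the `majority_vote` helper, and the sample values are hypothetical illustrations, not the study's code or data.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Trace:
    """One sampled reasoning trace: its extracted final answer and its length in tokens."""
    answer: str
    num_tokens: int


def majority_vote(traces: list[Trace]) -> tuple[str, int]:
    """Return the most frequent final answer and the total tokens spent.

    Every trace is decoded to completion, so cost is the sum of all trace lengths.
    Ties go to the answer encountered first (Counter.most_common preserves that order).
    """
    if not traces:
        raise ValueError("need at least one trace")
    counts = Counter(t.answer for t in traces)
    answer, _ = counts.most_common(1)[0]
    total_tokens = sum(t.num_tokens for t in traces)
    return answer, total_tokens


# Hypothetical samples for one math problem (answers and lengths are made up).
samples = [Trace("42", 900), Trace("41", 1500), Trace("42", 1100), Trace("40", 2000), Trace("42", 800)]
print(majority_vote(samples))  # ('42', 6300)
```

Because every sample must finish before the vote, the cost grows linearly with the number of samples, which is why majority voting is accurate but expensive.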

The results show that no single decoding strategy is universally optimal; the best choice depends on the specific model and task. Majority voting consistently delivers high accuracy but requires substantial computational resources. First finish search and last finish search reduce cost, with varying impacts on accuracy, and last finish search often performs well on mathematical reasoning tasks. Together, these findings help practitioners choose the most efficient decoding strategy for their LLM applications, balancing the need for accuracy against the available compute.
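To make the cost side of this trade-off concrete, here is a sketch of first finish search under one stated assumption: all n traces are decoded in parallel at the same rate, decoding stops as soon as the first trace completes, and that trace's answer is returned. Under this assumption every trace has produced exactly as many tokens as the shortest one when decoding halts. The names `Trace`, `first_finish`, and `full_sampling_cost` and the sample values are hypothetical; last finish search is not sketched here.

```python
from dataclasses import dataclass


@dataclass
class Trace:
    answer: str
    num_tokens: int  # length the trace reaches if decoded to completion


def first_finish(traces: list[Trace]) -> tuple[str, int]:
    """First finish search, assuming lock-step parallel decoding of all traces.

    Returns the answer of the first trace to finish (the shortest) and the total
    tokens generated when decoding halts: n traces x shortest trace length.
    """
    if not traces:
        raise ValueError("need at least one trace")
    shortest = min(traces, key=lambda t: t.num_tokens)
    return shortest.answer, len(traces) * shortest.num_tokens


def full_sampling_cost(traces: list[Trace]) -> int:
    """Cost when every trace is decoded to completion, as in majority voting."""
    return sum(t.num_tokens for t in traces)


# Hypothetical samples (made-up answers and lengths).
samples = [Trace("42", 900), Trace("41", 1500), Trace("42", 1100), Trace("40", 2000), Trace("42", 800)]
print(first_finish(samples))        # ('42', 4000)
print(full_sampling_cost(samples))  # 6300
```

The saving depends on how much shorter the fastest trace is than the rest; whether that trace's answer is correct is the accuracy side of the trade-off.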

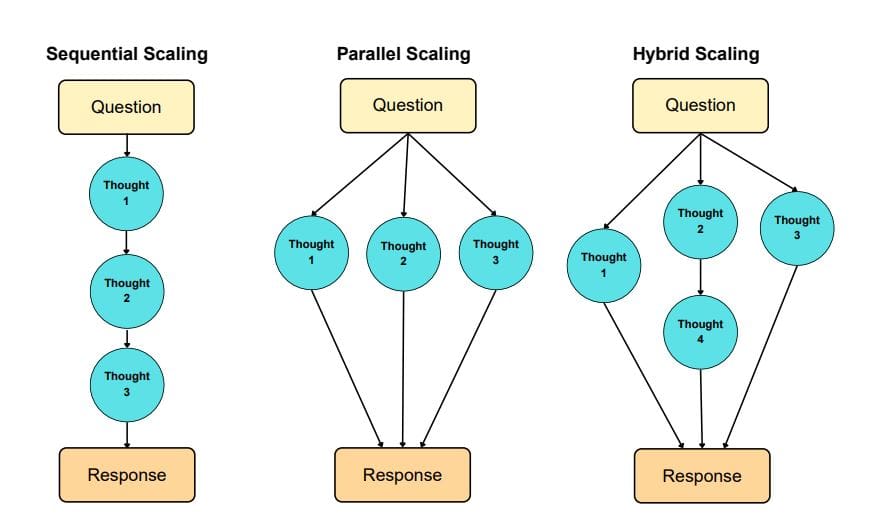

Large Language Model Test-Time Scaling Strategies

The study's design allowed precise control over reasoning depth and revealed distinct trace-quality patterns across problem difficulty and trace length, which the authors group into short-horizon and long-horizon behaviours. No single test-time scaling (TTS) strategy universally dominates: the best approach depends on both the specific LLM and the difficulty of the reasoning task. The authors attribute these performance differences to variations in post-training algorithms, with some algorithms promoting concise reasoning and others enabling sustained, deeper reasoning on harder tasks. This insight led to a practical recipe for selecting the optimal TTS strategy based on problem difficulty, model type, and available compute.

Reasoning Scales with Model and Trace Length

This research delivers a comprehensive analysis of test-time scaling (TTS) strategies for large language models (LLMs), spanning over thirty billion tokens generated by eight open-source models ranging from 7 to 235 billion parameters. The study systematically evaluates performance across four reasoning datasets, showing how the allocation of compute at inference time affects reasoning ability. No single TTS strategy universally dominates, so approaches must be tailored to the setting. The team also identified distinct trace-quality patterns based on problem difficulty and trace length, categorizing reasoning into short-horizon and long-horizon behaviours.

This categorization reveals how different models approach problem-solving: some excel at concise reasoning, while others benefit from deeper exploration. Crucially, the study shows that for a given TTS type, performance scales monotonically with compute budget, meaning that additional computational resources consistently yield improvements. Further investigation revealed a strong correlation between a model's post-training method and its reasoning horizon, confirming that optimal TTS is highly contextual and that strategy selection must account for model training, task type, and available compute.
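Whether a strategy scales monotonically can be checked mechanically on one's own measurements. The sketch below sorts (budget, accuracy) pairs by budget and verifies that accuracy never drops as the budget grows. The function name `scales_monotonically` and all numbers are hypothetical illustrations, not results from the study.

```python
def scales_monotonically(budgets: list[int], accuracies: list[float]) -> bool:
    """Return True if accuracy never decreases as the compute budget grows."""
    if len(budgets) != len(accuracies):
        raise ValueError("budgets and accuracies must have the same length")
    pairs = sorted(zip(budgets, accuracies))  # order by budget
    return all(prev_acc <= next_acc for (_, prev_acc), (_, next_acc) in zip(pairs, pairs[1:]))


# Hypothetical measurements for one TTS strategy (budget = samples per problem).
budgets = [1, 2, 4, 8, 16]
accuracies = [0.52, 0.58, 0.63, 0.66, 0.68]
print(scales_monotonically(budgets, accuracies))            # True
print(scales_monotonically([1, 2, 4], [0.50, 0.60, 0.55]))  # False
```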

Reasoning Strategy Depends On Model And Task

This large-scale study demonstrates that no single test-time scaling (TTS) strategy universally enhances reasoning in large language models. Instead, the most effective approach depends on the interplay between a model's training, the difficulty of the problem being addressed, and the available computational resources. Researchers observed distinct behaviours across model families, with some consistently favouring shorter reasoning traces while others benefit from more extended deliberation, particularly on harder problems. Reasoning patterns fall into two categories, short-horizon and long-horizon, and a model's category influences which TTS strategy is optimal.
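The article does not say exactly how a model is assigned to a category. As a hypothetical heuristic in the spirit of the description, one could compare the accuracy of a model's shorter and longer traces at a fixed difficulty level: if shorter traces are at least as accurate, the model behaves as short-horizon. The function `horizon_category`, the half-split rule, and the sample data are all assumptions for illustration.

```python
def horizon_category(traces: list[tuple[int, bool]]) -> str:
    """Hypothetical heuristic for one model at one difficulty level.

    Each trace is (length_in_tokens, is_correct). Traces are sorted by length and
    split into a shorter and a longer half; if the shorter half is at least as
    accurate as the longer half, the model is labelled short-horizon.
    """
    if len(traces) < 2:
        raise ValueError("need at least two traces")
    ordered = sorted(traces, key=lambda t: t[0])
    half = len(ordered) // 2
    shorter, longer = ordered[:half], ordered[half:]
    short_acc = sum(correct for _, correct in shorter) / len(shorter)
    long_acc = sum(correct for _, correct in longer) / len(longer)
    return "short-horizon" if short_acc >= long_acc else "long-horizon"


# Made-up traces: (length in tokens, answer correct?)
model_a = [(500, True), (700, True), (900, True), (2500, False), (3000, True), (4000, False)]
model_b = [(600, False), (800, False), (3000, True), (3500, True)]
print(horizon_category(model_a))  # short-horizon
print(horizon_category(model_b))  # long-horizon
```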

Importantly, beam search consistently proved suboptimal for complex reasoning tasks. This work provides a practical framework for practitioners, highlighting the need for a nuanced, model-aware approach to inference rather than reliance on a single, universal strategy. The researchers emphasize that selecting the best TTS strategy requires careful consideration of these factors to maximize performance.

👉 More information

🗞 The Art of Scaling Test-Time Compute for Large Language Models

🧠 ArXiv: https://arxiv.org/abs/2512.02008