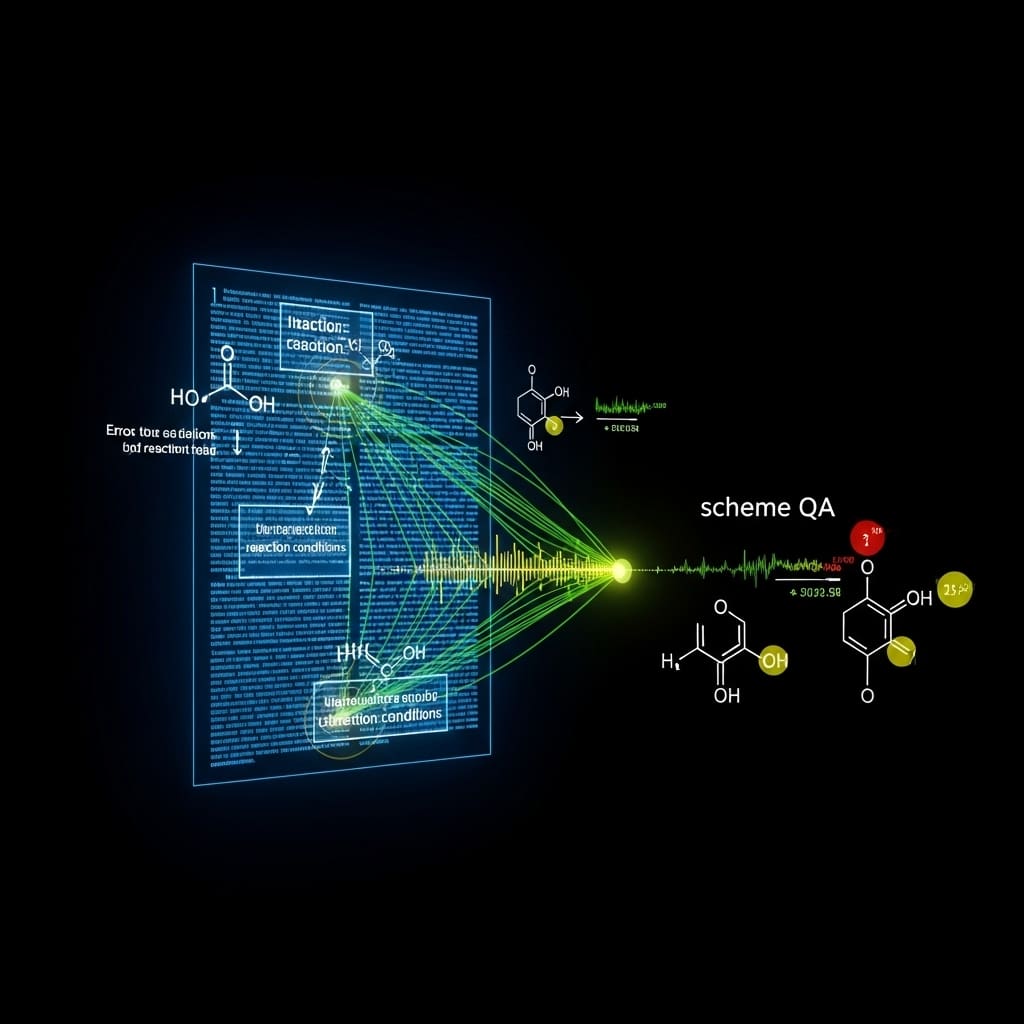

The ability of artificial intelligence to understand and interpret chemical reactions from scientific literature could accelerate chemical discovery, but current systems often struggle with the complex interplay of text and images in research papers. Hanzheng Li, Xi Fang, and Yixuan Li lead a team that addresses this challenge with RxnBench, a new benchmark designed to assess how well multimodal large language models comprehend chemical reactions as presented in authentic scientific PDFs. The benchmark uses two tasks: one tests fine-grained visual understanding of individual figures, and the other tests the ability to synthesise information across entire research articles, including text, diagrams and tables. The results reveal a considerable gap in current model performance. Models readily extract explicit textual information but struggle with the nuanced chemical reasoning and precise structural recognition that true comprehension requires, which points to the need for stronger visual processing and reasoning in future systems.

Proprietary MLLMs Excel at Scientific Question Answering

Evaluations of multimodal large language models (MLLMs) show that proprietary models generally outperform open-weight alternatives on scientific question-answering tasks, including questions about individual scientific figures and about full documents. Models designated “Think”, which perform reasoning at inference time, consistently score higher, suggesting that reasoning ability is crucial for these challenges. The tasks nonetheless remain difficult: even the best-performing models achieve only moderate accuracy. Performance also varies across sub-categories of questions, and no single model excels at every aspect of scientific understanding.
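Results like these are usually reported as accuracy per sub-category plus an overall mean. The sketch below shows one way to compute both for multiple-choice answers. The record format, the placeholder category names and the choice of an unweighted mean over categories are illustrative assumptions, not RxnBench's documented scoring procedure.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical record format: (sub_category, predicted_letter, gold_letter).
# predicted_letter is None when no answer could be parsed from the model output.
def score_by_category(records):
    """Return per-category accuracy and the unweighted mean across categories."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, predicted, gold in records:
        total[category] += 1
        # Compare option letters case-insensitively; an unparsed answer counts as wrong.
        if predicted is not None and predicted.upper() == gold.upper():
            correct[category] += 1
    per_category = {c: correct[c] / total[c] for c in total}
    # Assumption: every sub-category carries equal weight, regardless of its size.
    overall = mean(per_category.values()) if per_category else 0.0
    return per_category, overall


# Toy usage with made-up records (not benchmark data):
toy = [("extraction", "A", "A"), ("extraction", "B", "B"),
       ("mechanism", "C", "D"), ("mechanism", "A", "A")]
per_cat, overall = score_by_category(toy)
print(per_cat, overall)
```

An unweighted mean keeps a large, easy sub-category from masking weakness in a small, hard one; averaging over all questions at once (a micro-average) is the other common choice and can rank models differently.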

Among open-weight models, the Qwen series leads, demonstrating the potential of open development. Gemini-3-Flash-preview achieves the highest scores overall, while Qwen3-235B-A22B-Think is the best-performing open-weight model. The prompt used to generate the benchmark questions emphasizes high-difficulty, reasoning-based multiple-choice questions about chemical reactions, with both Chinese and English versions for broader accessibility.

Overall, MLLMs are progressing in scientific question answering, but significant improvements are still needed. Reasoning ability is a critical factor, and performance depends on the specific scientific skill being tested. Proprietary models currently lead, but open-weight models are showing promise.

RxnBench: Assessing Chemical Reasoning in Scientific Literature

Researchers developed RxnBench to rigorously assess the chemical reasoning of multimodal large language models (MLLMs) when they interpret scientific literature. It addresses a gap in evaluation: existing benchmarks often fail to capture the complex visual and logical demands of authentic chemistry research. The dataset was carefully curated from leading journals, including Nature Chemistry and Angewandte Chemie International Edition, with a focus on articles rich in graphical information and chemical diversity. Construction combined automated figure extraction with expert review and refinement of the question-answer pairs. Questions fall into two categories: descriptive questions, which assess information extraction, and reasoning questions, which require combining visual information with chemical knowledge. This design allows a fine-grained assessment of MLLM performance, revealing limitations in deep chemical logic and precise structural recognition while highlighting the benefit of inference-time reasoning.

MLLMs Struggle with Chemical Reaction Logic

The research team built RxnBench to evaluate how well multimodal large language models (MLLMs) understand chemical reactions presented in scientific literature. The benchmark consists of two tasks. Single-Figure QA tests visual perception and mechanistic reasoning, with questions ranging from basic fact extraction to advanced mechanistic reasoning; Full-Document QA requires integrating information from entire articles. Experiments show that current MLLMs excel at extracting explicit text but struggle with deeper chemical logic and precise structural recognition. Across evaluations of 41 MLLMs, Gemini-3-Flash-preview achieved the highest mean score, indicating the strongest chemical visual understanding among the models tested. Models that use inference-time reasoning, denoted “Think” models, significantly outperformed standard architectures, highlighting the benefit of generating intermediate reasoning steps before answering.
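Because “Think” models emit a reasoning trace before their answer, an evaluation harness has to pull the final choice out of free text. The sketch below is a minimal illustration, assuming the model is instructed to end with a line such as `Answer: B` and that options are lettered A to E. Both the answer format and the "take the last declaration" rule are hypothetical conventions, not the parsing method described in the paper.

```python
import re

# Hypothetical convention: the response declares its choice as "Answer: <letter>".
# Only the word "answer" is case-insensitive; the option letter must be uppercase A-E,
# so phrases like "the answer: a mixture" are not misread as option A.
# The full-width colon is accepted for responses written in Chinese.
ANSWER_PATTERN = re.compile(r"(?i:answer)\s*[:：]\s*\(?([A-E])(?![A-Za-z])")


def extract_choice(response):
    """Return the last declared option letter in a model response, or None."""
    matches = ANSWER_PATTERN.findall(response)
    if not matches:
        return None
    # Use the last declaration, since a reasoning trace may revise an earlier answer.
    return matches[-1]


print(extract_choice("Option A is tempting, but the stereochemistry rules it out. Answer: C"))
```

Returning `None` rather than guessing lets the scorer count unparseable responses as wrong, which avoids rewarding models that never commit to an answer.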

RxnBench Measures Chemical Reasoning in Language Models

RxnBench assesses how well multimodal large language models understand chemical reactions as presented in scientific literature. Its two tasks, Single-Figure Question Answering and Full-Document Question Answering, pose progressively greater challenges. Current models are proficient at extracting explicit textual information but struggle with the nuanced chemical logic and precise structural recognition needed for deeper understanding. Models that reason at inference time outperform standard architectures, yet none achieves satisfactory performance on the more demanding Full-Document QA task. The authors suggest that future work focus on domain-specific visual encoders and more robust reasoning engines, which could improve performance and move the field toward autonomous chemical research.

👉 More information

🗞 RxnBench: A Multimodal Benchmark for Evaluating Large Language Models on Chemical Reaction Understanding from Scientific Literature

🧠 ArXiv: https://arxiv.org/abs/2512.23565