Reward models, crucial for aligning large language models with human values, are demonstrably shaped by the foundations upon which they are built. Brian Christian, Jessica A.F. Thompson, and Elle Michelle Yang from the University of Oxford, together with Vincent Adam from Universitat Pompeu Fabra and colleagues, show that these models inherit significant value biases from their pre-trained counterparts. Their analysis of ten leading open-weight reward models, using validated psycholinguistic data, reveals a clear preference for ‘agency’ in Llama-based models and for ‘communion’ in Gemma-based models, even when the preference data and finetuning are identical. The research is significant because it shows that choosing a base language model is not only a question of performance but also of embedded values, which calls for greater attention to safety and alignment during pre-training.

By formulating log-probability differences between the base models as an implicit reward model, the researchers derived usable implicit reward scores that showed the same consistent agency/communion divergence. This suggests that the values embedded in the pre-trained LLM substantially shape the RM’s subsequent behaviour, even after extensive preference-based training. The work therefore establishes that RMs, although designed to represent human preferences, are measurably influenced by the pre-trained LLMs on which they are built.

The team’s findings underscore the importance of addressing safety and alignment during the pretraining stage of LLM development. To quantify these inherited value biases, the researchers developed a new RM interpretability method that draws on tools from psycholinguistics, mapping specific words to broader psychological constructs; this also offers a way to assess potential misalignment in AI systems. Applied to 10 RMs on RewardBench, the method revealed significant and replicable differences between Llama- and Gemma-based models across several dimensions of human value. Systematic experiments that trained RMs on different base models with controlled data and hyperparameters confirmed that these inherited biases are robust and persist even after extensive finetuning. The work thus identifies a critical vulnerability in current alignment pipelines and points towards more value-aligned AI systems.

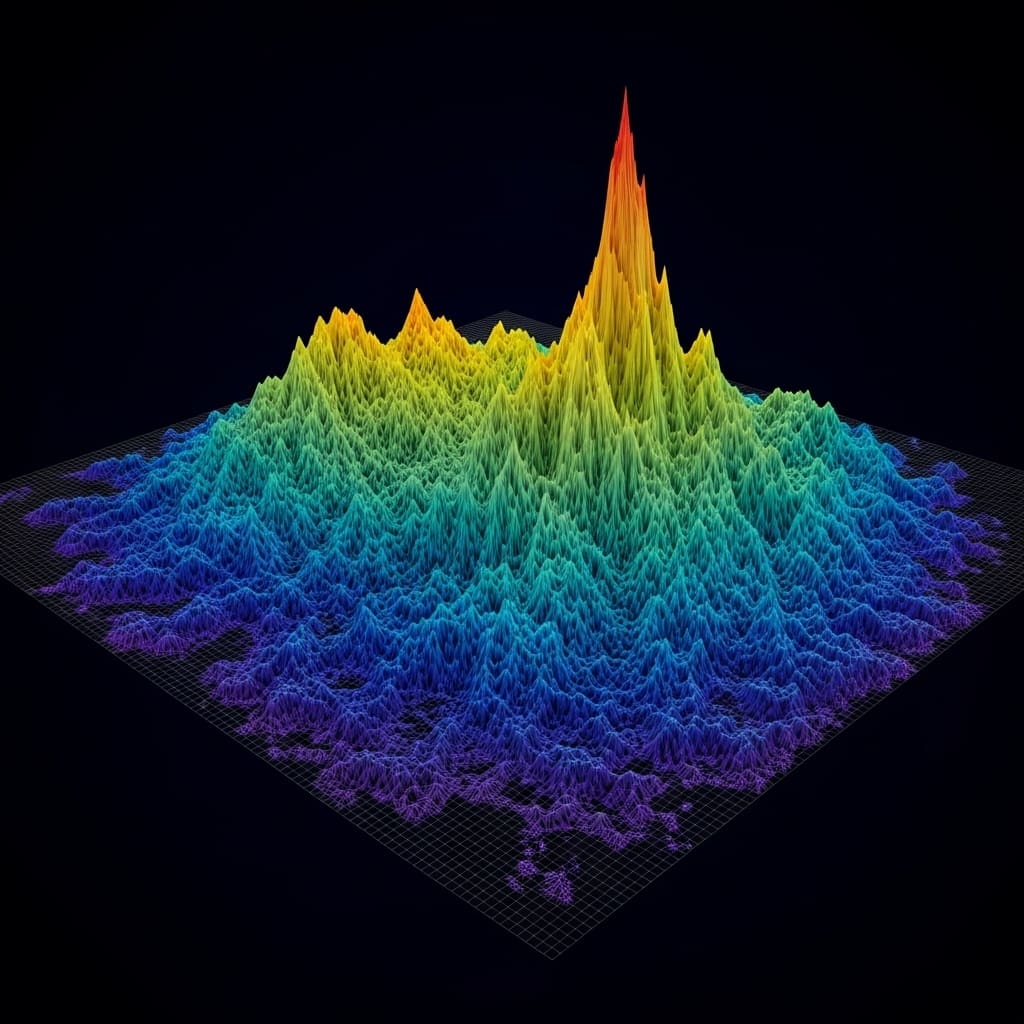

Token search reveals reward model value biases

Scientists investigated whether reward models (RMs) inherit value biases from their pre-trained large language model (LLM) foundations. The study employed an exhaustive token search method, initially introduced by Christian et al., to evaluate each token in an RM’s vocabulary against value-laden prompts. This technique reveals the highest and lowest scoring responses, allowing researchers to uncover and quantify value biases within RMs as a function of the base model used for development. Data was collected from 10 leading RMs available on RewardBench, enabling robust and replicable comparisons between Llama- and Gemma-based models across various dimensions of human value.
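The core loop of exhaustive token search is simple enough to sketch. The Python below is a minimal illustration, not the authors’ code: `exhaustive_token_search`, `toy_reward`, and the score table are hypothetical, and `reward_fn` is assumed to wrap whatever RM call returns a scalar score for a prompt paired with a one-token response.

```python
from typing import Callable, Sequence

Scored = list[tuple[str, float]]


def exhaustive_token_search(
    prompt: str,
    vocab: Sequence[str],
    reward_fn: Callable[[str, str], float],
    k: int = 5,
) -> tuple[Scored, Scored]:
    """Score every vocabulary item as a one-token response; return the k best and k worst."""
    # Evaluate the reward model once per token in the vocabulary.
    scored = [(token, reward_fn(prompt, token)) for token in vocab]
    # Highest reward first; Python's sort is stable, so ties keep vocabulary order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    optimal = scored[:k]
    pessimal = scored[::-1][:k]  # lowest reward first
    return optimal, pessimal


# Hypothetical toy scores standing in for a real RM (not values from the paper).
TOY_SCORES = {"Love": 2.1, "Freedom": 1.7, "table": 0.1, "Hate": -3.0}


def toy_reward(prompt: str, response: str) -> float:
    return TOY_SCORES.get(response, 0.0)


best, worst = exhaustive_token_search(
    "What, in one word, is the greatest thing ever?", list(TOY_SCORES), toy_reward, k=1
)
print(best, worst)  # [('Love', 2.1)] [('Hate', -3.0)]
```

With a real RM the vocabulary runs to tens of thousands of tokens, so the loop would normally be batched; the ranking logic stays the same.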

Researchers focused on the “Big Two” psychological dimensions, agency and communion, using psychologically validated corpora to demonstrate a consistent preference for agency in Llama-based RMs and for communion in Gemma-based RMs. The team combined exhaustive token search with tools from psycholinguistics, specifically the Big Two corpus and the Moral Foundations Dictionary (MFD2), to map words to psychological constructs and quantify value biases. These corpora, coded by human experts, provided a framework for assessing how RMs respond to prompts related to different value dimensions. To trace the origins of these biases, the study examined the log probabilities assigned by the instruction-tuned and pre-trained base models.
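Once every token has a score, a psycholinguistic lexicon turns the ranking into a per-construct summary. The sketch below assumes a ranking ordered best-first and a tiny hypothetical lexicon standing in for the Big Two corpus; the function name and the mean-normalised-rank statistic are illustrative choices, not necessarily the paper’s. A lower mean rank means the RM prefers that construct.

```python
from collections import defaultdict
from statistics import mean


def category_mean_ranks(ranked_tokens: list[str], lexicon: dict[str, str]) -> dict[str, float]:
    """Mean normalised rank per lexicon category (0.0 = top-ranked, 1.0 = bottom-ranked).

    Lexicon keys are assumed to be lowercase words.
    """
    if len(ranked_tokens) < 2:
        raise ValueError("need at least two ranked tokens")
    last = len(ranked_tokens) - 1
    normalised: dict[str, float] = {}
    for position, token in enumerate(ranked_tokens):
        # Fold surface variants such as " Love" and "love"; keep the best position.
        normalised.setdefault(token.strip().lower(), position / last)
    ranks_by_category: dict[str, list[float]] = defaultdict(list)
    for word, category in lexicon.items():
        if word in normalised:  # lexicon words absent from the ranking are skipped
            ranks_by_category[category].append(normalised[word])
    return {category: mean(ranks) for category, ranks in ranks_by_category.items()}


# Hypothetical mini-lexicon; the real Big Two corpus is far larger.
LEXICON = {"freedom": "agency", "power": "agency", "love": "communion", "family": "communion"}
ranking = ["Love", "family", "Freedom", "table", "power"]  # toy RM ranking, best first
summary = category_mean_ranks(ranking, LEXICON)
print(summary)  # {'agency': 0.75, 'communion': 0.125}
```

In this toy ranking communion words sit near the top, the pattern the study reports for Gemma-based RMs.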

Differences in these log-probabilities were formulated as implicit reward models, generating usable scores that mirrored the observed agency/communion preference. The team then trained new RMs on different base models with identical data and hyperparameters, while varying the data sources and quantities. This allowed the scientists to chart how the bias evolves during preference finetuning and to assess its durability, determining how far it could be mitigated with more finetuning data. Qualitatively, when given the prompt “What, in one word, is the greatest thing ever?”, Gemma-based RMs favoured variations of “Love”, while Llama-based RMs preferred “Freedom”, even though both were trained on the same data by the same developer. This work highlights the importance of considering pretraining choices in alignment pipelines and shows that the selection of a base model matters as much for values as it does for performance.
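One minimal way to write such an implicit reward, assuming it is the plain difference of the two models’ log-probabilities for a response $y$ to a prompt $x$ (the exact sign convention, normalisation, or scaling used in the paper is not given in this summary), is:

$$
r(x, y) = \log p_{\text{Llama}}(y \mid x) - \log p_{\text{Gemma}}(y \mid x),
\qquad
\log p(y \mid x) = \sum_{t=1}^{|y|} \log p\left(y_t \mid x, y_{<t}\right)
$$

Under this convention a positive $r$ marks a response that Llama finds relatively more likely than Gemma does. Because the two models use different tokenisers, each log-probability is summed over that model’s own tokenisation of $y$.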

Llama and Gemma bases impart value biases

Experiments showed that these log-probability differences could be formulated as an implicit RM, yielding usable implicit reward scores with the same agency/communion difference. The analyses suggest that these biases surface in meaningful ways in RM reward scores and, consequently, in the downstream LLMs optimised against them. Using the Moral Foundations Dictionary with positively framed prompts, Llama RMs ranked authority- and fairness-related words higher than Gemma RMs, while Gemma RMs ranked care-, loyalty-, and sanctity-related words higher than Llama RMs, as confirmed by permutation-based t-tests (p … Gemma, p < 0.0001), though with negatively framed prompts authority, loyalty, and sanctity maintained the same pattern as with positive prompts (all p < 0.0001). A three-way ANOVA revealed a significant interaction between Big-Two category, prompt valence, and model (F(1, 208) = 88.8, p < 0.0001), mirroring the findings in the pre-trained versions of Gemma 2 2B and Llama 3.2 3B (F(1, 208) = 42.3).
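A permutation-based t-test compares, for one moral foundation, the ranks that two groups of RMs give to that foundation’s words, and derives a p-value by reshuffling the group labels rather than relying on the t distribution. The sketch below is a generic version under stated assumptions: it uses Welch’s t as the statistic and unpaired samples (the summary does not say which variant the paper used), and the example ranks are invented toy values, not data from the paper.

```python
import math
import random
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for two independent samples with unequal variances."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    if se == 0:
        # Degenerate case: both samples constant.
        diff = mean(a) - mean(b)
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return (mean(a) - mean(b)) / se


def permutation_t_test(a: list[float], b: list[float], n_perm: int = 5000, seed: int = 0) -> float:
    """Two-sided p-value: how often shuffled group labels give |t| at least as large."""
    rng = random.Random(seed)
    observed = abs(welch_t(a, b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if abs(welch_t(pooled[: len(a)], pooled[len(a):])) >= observed:
            hits += 1
    # Add-one correction: the observed labelling counts as one permutation.
    return (hits + 1) / (n_perm + 1)


# Toy normalised ranks of one foundation's words under two RM families (invented values).
llama_ranks = [0.10, 0.15, 0.12, 0.08, 0.20, 0.11]
gemma_ranks = [0.40, 0.55, 0.35, 0.60, 0.45, 0.50]
p = permutation_t_test(llama_ranks, gemma_ranks)
print(f"p = {p:.4f}")
```

The fixed seed makes the p-value reproducible; with more permutations the estimate converges on the exact permutation p-value.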

Researchers calculated the log probabilities that instruction-tuned Gemma 2 2B and Llama 3.2 3B assign to Big-Two nouns, finding that with positively framed prompts Llama ranked agency words higher and Gemma ranked communion words higher, a pattern that reversed for negatively framed prompts. The team then defined implicit reward scores as the log-probability difference between Llama and Gemma, effectively treating this difference as a reward model. Applying the same “optimal and pessimal token” methodology to these scores showed that the agency/communion split is indeed rooted in the base models themselves, with Welch’s t-tests yielding FDR-corrected p < 0.01 across the relevant comparisons.
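Running many Welch’s t-tests at once inflates false positives, which is what the FDR correction guards against. The summary does not name the FDR procedure; the sketch below assumes the widely used Benjamini-Hochberg method, with toy p-values as input. In practice each raw p-value could come from `scipy.stats.ttest_ind(a, b, equal_var=False)`, and recent SciPy versions also offer `scipy.stats.false_discovery_control`.

```python
def benjamini_hochberg(p_values: list[float]) -> list[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values never decrease with rank.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Toy raw p-values for four hypothetical comparisons (not results from the paper).
raw = [0.001, 0.04, 0.03, 0.2]
q = benjamini_hochberg(raw)
print([round(x, 4) for x in q])  # [0.004, 0.0533, 0.0533, 0.2]
print([x < 0.05 for x in q])     # [True, False, False, False]
```

Here the comparisons at p = 0.03 and 0.04 would pass an uncorrected 0.05 threshold but not the corrected one.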

Llama and Gemma influence reward model values

The authors acknowledge limitations including the use of short responses and token-level analysis, though they demonstrate generalisation across prompt variations and to multi-token responses. Future research should focus on establishing formal scaling laws for model size and data quantity, expanding the analysis to a wider range of base models, and investigating other dimensions of value beyond agency and communion. These findings underscore the importance of considering pre-training choices as a critical aspect of safety and alignment, as much as the RLHF stage itself. The study highlights that the selection of a base model carries inherent value considerations, and that alignment is not solely achieved through preference-based finetuning. Mechanistic interpretability tools are needed to fully understand how values are inherited during pretraining, but this work establishes a clear link between base model characteristics and the values expressed by subsequent reward models.

👉 More information

🗞 Reward Models Inherit Value Biases from Pretraining

🧠 ArXiv: https://arxiv.org/abs/2601.20838