Researchers are increasingly concerned with political bias embedded within Large Language Models (LLMs), given their growing influence on global communication. Afrozah Nadeem from Macquarie University, Agrima from Microsoft, and Mehwish Nasim from the University of Western Australia, together with Usman Naseem and colleagues, have undertaken a large-scale investigation into this issue, evaluating political bias across 33 languages and 50 countries. The work is significant because it moves beyond studies focused on Western languages and addresses the need for cross-lingual consistency in bias detection and mitigation. The team developed Cross-Lingual Alignment Steering (CLAS), a framework that dynamically regulates the strength of ideological interventions, reducing bias while maintaining response quality and offering a scalable approach to fairer multilingual LLM governance.

The team conducted a large-scale multilingual evaluation of political bias, covering 50 countries and 33 languages, to understand how these models reflect ideological leanings globally.

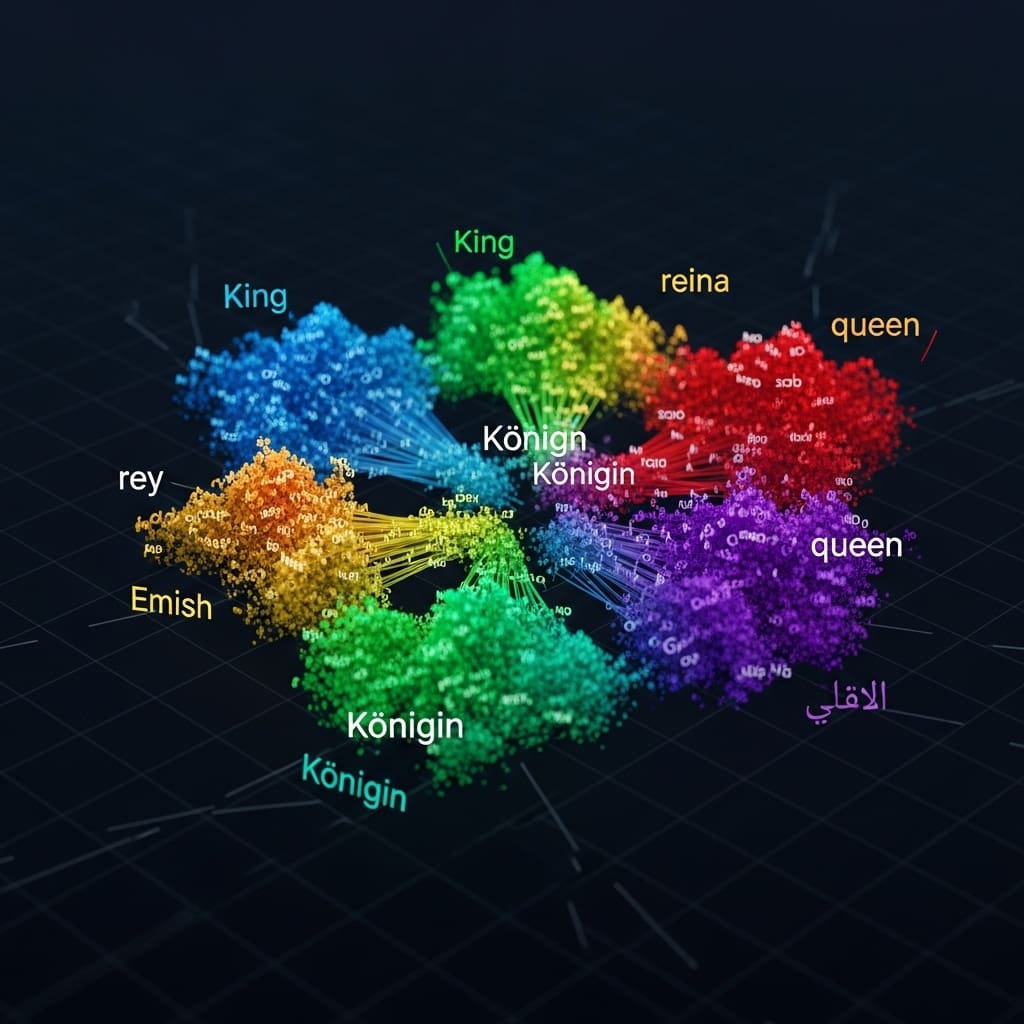

This research introduces Cross-Lingual Alignment Steering (CLAS), a post-hoc mitigation framework designed to improve fairness and neutrality in LLMs. CLAS augments existing steering methods by aligning ideological representations across languages and dynamically regulating intervention strength. The method projects the latent ideological representations induced by political prompts into a shared ideological subspace, so that steering acts consistently across languages.

An adaptive mechanism within CLAS prevents over-correction, thereby preserving the coherence and quality of generated text. Experiments show substantial reductions in bias along both economic and social axes, with minimal impact on response quality. The study presents a scalable and interpretable paradigm for governing multilingual LLMs, balancing ideological neutrality with linguistic and cultural diversity.

Researchers systematically evaluated political bias in 13 state-of-the-art LLMs across five Pakistani languages (Urdu, Punjabi, Sindhi, Pashto, and Balochi), integrating a culturally adapted Political Compass Test with multi-level framing analysis. The results indicate that while the LLMs generally reflect liberal-left orientations consistent with Western training data, they exhibit more authoritarian framing in regional languages, highlighting language-conditioned ideological modulation.

This work establishes that multilingual political bias is both axis-specific and representation-misaligned across languages. By aligning stance representations before steering, the team enabled consistent bias reduction while preserving linguistic coherence and cultural semantics. The proposed framework offers a robust post-hoc mitigation strategy, addressing a key limitation of prior steering approaches that implicitly assume ideological directions are comparable across languages. This research opens new avenues for fair and reliable multilingual LLM governance, crucial for responsible AI deployment in a global context.

Quantifying and categorising ideological stance from Likert scale responses presents methodological challenges

The researchers built a large-scale multilingual evaluation of political bias, spanning 50 countries and 33 languages, to address gaps in existing research. The study used the Political Compass Test as a standardised instrument for measuring ideological bias, with a four-level Likert response set for each statement: Strongly Agree, Agree, Disagree, and Strongly Disagree.

To quantify political stance, the researchers first used a classifier to compute a confidence score for each Likert category, denoted As, A, D, and Ds (Strongly Agree, Agree, Disagree, and Strongly Disagree). A continuous stance value S, ranging from -10 to 10, was then derived by multiplying the dominant label’s confidence by a fixed polarity weight: ±10 for strong responses and ±5 for moderate responses.
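The scoring rule just described can be sketched in a few lines of Python. This is an illustrative reading of the description, not the authors' code: the dictionary keys, the function name `stance_score`, and the choice that agreement carries the positive sign are assumptions made for the example.

```python
from typing import Dict

# Polarity weights from the description: +/-10 for strong, +/-5 for moderate.
# Assumption: agreement is positive and disagreement is negative.
POLARITY: Dict[str, float] = {
    "strongly_agree": 10.0,
    "agree": 5.0,
    "disagree": -5.0,
    "strongly_disagree": -10.0,
}


def stance_score(confidences: Dict[str, float]) -> float:
    """Return S: the dominant label's confidence times its polarity weight."""
    # The dominant label is the Likert category with the highest confidence.
    dominant = max(confidences, key=confidences.get)
    return confidences[dominant] * POLARITY[dominant]


# Hypothetical classifier output for one statement.
example = {
    "strongly_agree": 0.1,
    "agree": 0.6,
    "disagree": 0.2,
    "strongly_disagree": 0.1,
}
print(stance_score(example))  # a moderately positive score, about 3
```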

This representation captured both the direction and intensity of the model’s position. Subsequently, the team discretised S into ordinal labels using a symmetric thresholding function g(·), mapping continuous scores to categorical values of 0, 1, 2, and 3, corresponding to the four Likert responses. Stance values across all statements were aggregated and projected into a two-dimensional ideological space, defined by economic and social axes, yielding a structured representation of political alignment.
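A minimal sketch of the discretisation and aggregation steps follows. The article does not give the cut points of g(·), the label ordering, or the aggregation rule, so the thresholds at -5, 0, and +5, the ordering 0 = Strongly Disagree through 3 = Strongly Agree, and the per-axis mean are all assumptions chosen for illustration. The Political Compass Test's own scoring may differ.

```python
from statistics import mean
from typing import Dict, List, Tuple


def g(score: float, threshold: float = 5.0) -> int:
    """Map a stance score S in [-10, 10] to an ordinal label 0..3.

    Assumed ordering: 0 = Strongly Disagree, 1 = Disagree,
    2 = Agree, 3 = Strongly Agree. Cut points are assumed to sit
    at -threshold, 0, and +threshold; a score of exactly 0 is
    assigned to label 2 as an arbitrary tie-break.
    """
    if score <= -threshold:
        return 0
    if score < 0:
        return 1
    if score < threshold:
        return 2
    return 3


def compass_position(scores: List[Tuple[str, float]]) -> Dict[str, float]:
    """Average stance scores per axis to get an (economic, social) point.

    Each item is (axis_name, S). Assumes every statement is tagged with
    one of the two axes and that both axes have at least one statement.
    """
    by_axis: Dict[str, List[float]] = {"economic": [], "social": []}
    for axis, score in scores:
        by_axis[axis].append(score)
    return {axis: mean(values) for axis, values in by_axis.items()}


# Hypothetical scores for three statements.
statements = [("economic", -4.0), ("economic", -2.0), ("social", 6.0)]
print([g(s) for _, s in statements])
print(compass_position(statements))
```

Comparing the resulting points for the same model across languages is one way to make the cross-lingual drift discussed in the article visible.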

This enabled robust cross-lingual and cross-model comparisons, testing whether a model's ideology stays consistent across languages and nations or shifts with linguistic and cultural framing. The researchers then introduced Cross-Lingual Alignment Steering (CLAS), a framework designed to harmonise and stabilise ideological responses across multilingual contexts.

CLAS explicitly aligns ideological axes across languages prior to steering, combining this with uncertainty-adaptive scaling to prevent over-correction and preserve semantic faithfulness. The team systematically compared CLAS against Individual Steering Vectors (ISV) and Steering Vector Ensembles (SVE) across the 50 countries, establishing a framework for cross-lingual political bias mitigation. The method projects the latent ideological representations induced by political prompts into a shared ideological subspace, ensuring cross-lingual consistency, while the adaptive mechanism prevents over-correction and preserves coherence.
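To make the idea of aligning before steering concrete, the sketch below uses a deliberately simple stand-in: it averages unit-normalised per-language steering directions into one shared direction and then projects each language's direction onto it. The article does not specify how CLAS builds its shared subspace, so this averaging-and-projection scheme, the toy two-dimensional vectors, and all function names are assumptions for illustration only.

```python
import math
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalise(v: Vector) -> Vector:
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def shared_direction(directions: Dict[str, Vector]) -> Vector:
    """Assumed alignment step: mean of unit-normalised language directions."""
    units = [normalise(v) for v in directions.values()]
    dim = len(units[0])
    mean_vec = [sum(u[i] for u in units) / len(units) for i in range(dim)]
    return normalise(mean_vec)


def align(direction: Vector, shared: Vector) -> Vector:
    """Keep only the component of a language direction along the shared one."""
    coeff = dot(direction, shared)
    return [coeff * s for s in shared]


# Toy per-language ideological directions (hypothetical values).
directions = {"en": [1.0, 0.0], "ur": [0.0, 1.0]}
shared = shared_direction(directions)
aligned = {lang: align(v, shared) for lang, v in directions.items()}
print(shared)
print(aligned)
```

After this step every language is steered along the same axis, which is the property that per-language steering vectors do not guarantee.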

Political Bias Reduction via Shared Ideological Representation requires nuanced algorithmic approaches

The researchers report substantial reductions in political bias across 33 languages, spanning 50 countries, using a new framework called Cross-Lingual Alignment Steering (CLAS). The research demonstrates a scalable and interpretable paradigm for fairness-aware multilingual Large Language Model (LLM) governance, balancing ideological neutrality with linguistic and cultural diversity.

Experiments revealed two problems: bias that differs by axis, and stance representations that are misaligned across languages. The team measured ideological tendencies using the Political Compass Test (PCT), analysing outcomes along economic and social dimensions. The results show that CLAS aligns language-specific stance representations into a shared ideological subspace before applying any intervention, enabling consistent bias reduction.

Combined with adaptive scaling, this alignment limits over-correction and preserves linguistic coherence and cultural semantics, addressing a key limitation of prior steering approaches. The reported measurements indicate that the framework mitigates bias along both economic and social axes with minimal degradation in response quality. The study also established a large-scale 50-country PCT benchmark, revealing substantial cross-lingual and cross-national variation in political leanings.

CLAS incorporates uncertainty-adaptive scaling, which dynamically regulates intervention strength based on model confidence. The researchers recorded consistent reductions in ideological skew across languages, supporting fair and reliable multilingual LLM governance. As a post-hoc mitigation, the framework targets a specific weakness of earlier methods: they assume ideological directions are comparable across languages even though models encode politics inconsistently from one language to the next.

The experiments indicate that aligning stance representations prior to steering enables consistent bias reduction while preserving linguistic coherence and cultural semantics. The work identifies representational misalignment as a critical factor, which is why language-specific ideological directions need to be aligned into a shared subspace before intervention.
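One way to picture uncertainty-adaptive scaling is to tie the steering strength to the entropy of the model's Likert distribution. The sketch below is an assumption-laden illustration, not the paper's formula: it assumes that a confident stance (low entropy) receives a stronger correction and an already uncertain, near-neutral response receives almost none, and the names `adaptive_strength` and `steer` are hypothetical.

```python
import math
from typing import List


def normalised_entropy(probs: List[float]) -> float:
    """Shannon entropy of a probability distribution, scaled to [0, 1]."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(len(probs))


def adaptive_strength(probs: List[float], base_strength: float = 1.0) -> float:
    """Assumed rule: steer more when the model is confident, less when unsure."""
    return base_strength * (1.0 - normalised_entropy(probs))


def steer(hidden: List[float], direction: List[float], strength: float) -> List[float]:
    """Shift a hidden state against the ideological direction."""
    return [h - strength * d for h, d in zip(hidden, direction)]


# Hypothetical Likert confidences for a confident and an uncertain response.
confident = [0.85, 0.05, 0.05, 0.05]
uncertain = [0.25, 0.25, 0.25, 0.25]
print(adaptive_strength(confident))
print(adaptive_strength(uncertain))
print(steer([1.0, 2.0], [1.0, 0.0], 0.5))
```

Capping the correction in this way is one plausible route to the over-correction safeguard the article describes, since responses that are already balanced are left close to untouched.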

Cross-lingual ideological alignment through post-hoc intervention reduces bias in large language models significantly

The researchers conducted a large-scale multilingual evaluation of political bias in large language models, spanning 50 countries and 33 languages. Their results show that these models exhibit noticeable political drift across languages, even when presented with semantically identical prompts, which suggests that they internalise language-specific ideological cues during training.

This inconsistency poses a challenge for responsible deployment in multilingual contexts, where users expect uniform and unbiased behaviour. To address this, the researchers introduced Cross-Lingual Alignment Steering (CLAS), a post-hoc mitigation framework that augments existing steering methods by aligning ideological representations across languages and dynamically regulating intervention strength.

Experiments show that CLAS substantially reduces bias along both economic and social axes while maintaining response quality. Specifically, the framework projects latent ideological representations into a shared ideological subspace to ensure cross-lingual consistency, and its adaptive scaling prevents over-correction. The authors note that the evaluation does not offer normative judgements about the correctness or desirability of any particular political stance.

The significance of these findings lies in establishing a scalable and interpretable paradigm for fairness-aware multilingual LLM governance. By balancing ideological neutrality with linguistic and cultural diversity, this work contributes to more stable, fair, and predictable model behaviour across diverse linguistic inputs. Future research could explore the application of CLAS to a wider range of languages and domains, as well as investigate the underlying mechanisms driving cross-lingual bias in LLMs.

👉 More information

🗞 Bias Beyond Borders: Political Ideology Evaluation and Steering in Multilingual LLMs

🧠 ArXiv: https://arxiv.org/abs/2601.23001