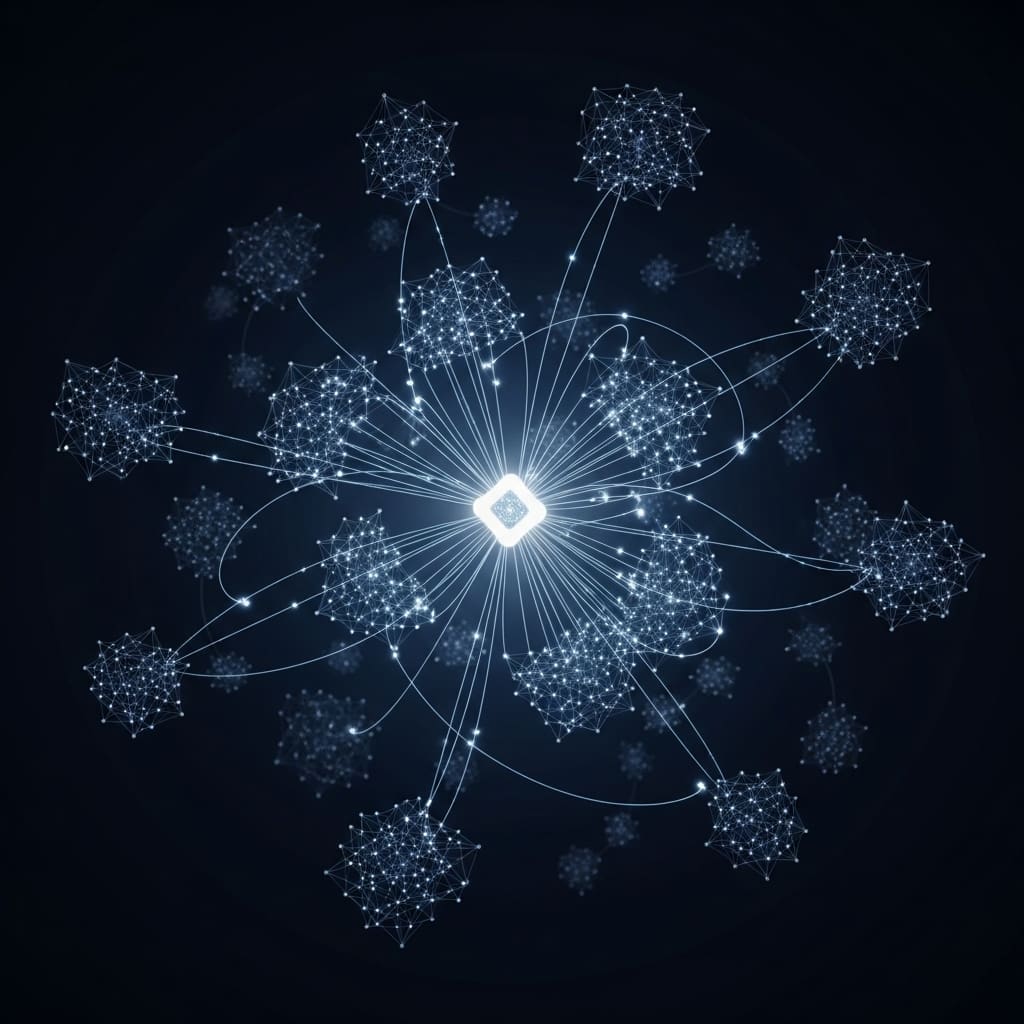

Graph Foundation Models (GFMs) promise transferable representations for diverse graph-based tasks, but their potential is currently limited by significant in-memory bottlenecks. Haonan Yuan, Qingyun Sun, and Jiacheng Tao from Beihang University, alongside Xingcheng Fu and Jianxin Li, address this challenge by introducing RAG-GFM, a retrieval-augmented approach that moves knowledge storage out of the model parameters and into an external, more scalable store. The method uses a dual-modal unified module that combines text with structural motifs, preserving richer information and enabling efficient adaptation to new tasks. Across five benchmark datasets, it consistently outperforms 13 state-of-the-art baselines. The work is a step towards scalable and interpretable GFMs.

RAG-GFM tackles graph knowledge scalability with external retrieval

This approach moves beyond the traditional "pretrain-then-finetune" paradigm, which compresses knowledge into model parameters; that compression is lossy and hinders efficient adaptation. RAG-GFM instead keeps knowledge in two external stores, one semantic (text) and one structural (motifs), and uses a cross-view alignment objective to keep them consistent. This objective ensures complementary representation learning across the two stores, improving the model's ability to understand and use the combined knowledge. According to the authors' experiments, retrieving from these stores makes adaptation more targeted, reducing the need for extensive retraining and improving performance on new tasks. The work opens possibilities for real-world applications that need robust, adaptable graph learning, such as modelling complex relationships in the World Wide Web, social networks, and biological systems. By decoupling knowledge storage from model parameters, RAG-GFM offers a path towards more scalable, transparent, and efficient graph representation learning.
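The article does not give the exact form of the alignment objective. A common way to enforce consistency between two views of the same nodes is a symmetric InfoNCE (contrastive) loss, so the sketch below assumes that form; the names `text_emb`, `motif_emb` and the temperature `tau` are illustrative, not taken from the paper.

```python
import numpy as np


def _normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalise each row so that dot products become cosine similarities."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, 1e-12)


def _log_softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable row-wise log-softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def cross_view_infonce(text_emb: np.ndarray, motif_emb: np.ndarray, tau: float = 0.2) -> float:
    """Symmetric InfoNCE between a semantic view and a structural view (assumed form).

    Row i of `text_emb` and row i of `motif_emb` describe the same node and form
    the positive pair; every other row in the batch serves as a negative.
    """
    s, t = _normalize(text_emb), _normalize(motif_emb)
    logits = s @ t.T / tau                                # (N, N) cross-view similarities
    idx = np.arange(len(s))
    loss_s2t = -_log_softmax(logits)[idx, idx].mean()     # text -> structure direction
    loss_t2s = -_log_softmax(logits.T)[idx, idx].mean()   # structure -> text direction
    return float((loss_s2t + loss_t2s) / 2)
```

Views that agree row by row give a low loss, while unrelated views give a loss close to log(N), which is the pressure that pushes the two stores towards consistent representations.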

Dual-Modal Retrieval for Scalable Graph Learning

Storing knowledge externally allows a much larger capacity for graph knowledge than traditional parameter-based models offer. The study introduces a dual-view alignment objective to preserve heterogeneous information within the dual-modal module, so that the model integrates textual attributes with the underlying graph structure and produces better representations. The authors evaluated this alignment strategy on five benchmark graph datasets. At inference time, a retrieval mechanism identifies relevant texts and motifs, giving the model additional information to draw on.
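The summary does not say how structural motifs are encoded. As a hypothetical illustration only, a node's local structure can be summarised by small motif counts (degree, triangles, and open wedges centred on the node); a signature like this could act as the key of a structural store, next to a text embedding in the semantic store. The function names here are invented for the example.

```python
from itertools import combinations


def motif_signature(adj: dict[int, set[int]], node: int) -> tuple[int, int, int]:
    """Return (degree, triangles, open wedges) centred on `node`.

    `adj` is an undirected adjacency map: node -> set of neighbours.
    A pair of neighbours that are connected closes a triangle;
    a pair that is not connected forms an open wedge.
    """
    nbrs = adj.get(node, set())
    triangles = open_wedges = 0
    for u, v in combinations(sorted(nbrs), 2):
        if v in adj.get(u, set()):
            triangles += 1
        else:
            open_wedges += 1
    return len(nbrs), triangles, open_wedges


def build_structural_store(adj: dict[int, set[int]]) -> dict[int, tuple[int, int, int]]:
    """Hypothetical structural store: one motif signature per node."""
    return {n: motif_signature(adj, n) for n in adj}


# Triangle 0-1-2 with a pendant node 3 attached to node 0.
graph = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1}, 3: {0}}
print(build_structural_store(graph))  # {0: (3, 1, 2), 1: (2, 1, 0), 2: (2, 1, 0), 3: (1, 0, 0)}
```

Real motif encoders are typically richer (larger subgraph patterns, learned embeddings); the point here is only that structure can be turned into a retrievable key independent of text.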

Retrieval lets RAG-GFM adapt efficiently to new tasks and domains without extensive retraining: retrieved supporting instances act as contextual evidence, augmenting the model's knowledge on demand. The retrieval process is designed to weigh both semantic similarity and structural relevance, so that retrieved items are informative and contextually appropriate. In performance comparisons, access to this external knowledge improved RAG-GFM's generalisation, allowing learned representations to transfer across diverse graph datasets and tasks.
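The article states that retrieval balances semantic similarity against structural relevance but not the scoring rule. A minimal sketch, assuming a simple convex combination `lam * cos(text) + (1 - lam) * cos(motif)` followed by top-k selection; `lam`, `k`, and the function names are illustrative assumptions.

```python
import numpy as np


def cosine_to_rows(query: np.ndarray, keys: np.ndarray) -> np.ndarray:
    """Cosine similarity between one query vector and each row of `keys`."""
    q = query / max(np.linalg.norm(query), 1e-12)
    k = keys / np.maximum(np.linalg.norm(keys, axis=1, keepdims=True), 1e-12)
    return k @ q


def dual_modal_retrieve(q_text, q_motif, text_keys, motif_keys, k=3, lam=0.5):
    """Return (indices, scores) of the top-k store entries under a fused score.

    `lam` weights the semantic (text) view and `1 - lam` the structural (motif)
    view. The fusion rule and default values are assumptions for illustration.
    """
    score = lam * cosine_to_rows(q_text, text_keys) + (1 - lam) * cosine_to_rows(q_motif, motif_keys)
    top = np.argsort(-score)[:k]  # highest fused score first
    return top.tolist(), score[top].tolist()
```

With `lam=1.0` the lookup is purely textual and with `lam=0.0` purely structural; intermediate values favour entries that are reasonably close in both views, even when another entry wins on a single view.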

RAG-GFM excels at few-shot node classification

RAG-GFM's retrieval database serves two kinds of query: semantic queries that provide textual signals and structural queries that deliver transferable motifs, enabling adaptation with minimal supervision. On the Wiki-CS dataset, RAG-GFM achieves a relative improvement of over 5.3% compared to UniGraph. The team observed an average relative gain of approximately 3.0% in the LODO (dataset) setting and 4.0% in the more stringent LODO (domain) setting, highlighting the benefits of retrieval-enhanced transfer learning. The results show that cross-view alignment, which enforces consistency between the semantic and structural views, significantly strengthens cross-domain robustness. Domain-gated prompting keeps the framework universal, with consistent gains across both node and graph classification: on average 4.5% higher accuracy in node classification and 3.8% in graph classification relative to baseline models. Together, these findings indicate that RAG-GFM's ability to integrate diverse knowledge sources and adapt to unseen domains is a significant advance in graph learning.
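The summary credits domain-gated prompting without describing its form. One hypothetical reading, sketched below: keep one prompt vector and one prototype embedding per source domain, compute softmax gates from how similar the target graph's mean embedding is to each prototype, and add the gated mix of prompts to the node features. Every name and the gating rule itself are assumptions, not the paper's design.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


def domain_gated_prompt(node_feats, domain_protos, domain_prompts, tau=1.0):
    """Mix per-domain prompts according to how close the target graph is to each domain.

    node_feats:     (N, d) features of the target graph's nodes
    domain_protos:  (D, d) one prototype embedding per source domain (assumed)
    domain_prompts: (D, d) one learnable prompt vector per source domain (assumed)
    Returns the prompted features (N, d) and the gate weights (D,).
    """
    graph_summary = node_feats.mean(axis=0)               # crude graph-level summary
    gates = softmax(domain_protos @ graph_summary / tau)  # weights over domains, sum to 1
    prompt = gates @ domain_prompts                       # (d,) gated prompt mixture
    return node_feats + prompt, gates                     # broadcast prompt to every node
```

Because the gates are soft, a graph from an unseen domain still receives a blend of the closest source-domain prompts rather than a single hard assignment.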

RAG-GFM decouples knowledge for graph learning

Scientists have developed a new Retrieval-Augmented Generation-aided Graph Foundation Model (RAG-GFM) designed to overcome limitations of existing graph learning models. These models often struggle with in-memory bottlenecks because they try to encode all necessary knowledge within their parameters, which restricts their capacity and adaptability. RAG-GFM addresses this by externalising graph knowledge into a unified semantic-structural retrieval database, decoupling learned parameters from retrievable information. The significance of this work lies in its potential to make graph foundation models more scalable and interpretable.

By offloading knowledge, RAG-GFM avoids the heavily lossy compression inherent in parameter-based approaches, enabling better generalisation across domains and datasets. The authors acknowledge that performance is sensitive to the number of retrieved query-answer pairs and to the relative weighting of textual and structural signals, with moderate values yielding the best results. Future research could optimise these parameters further or apply RAG-GFM to more complex graph-based problems.
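To show where the number of retrieved query-answer pairs enters a prediction, here is a hypothetical sketch: the k stored pairs most similar to a query vote for their answer labels, weighted by a softmax over cosine similarity. The voting rule, `tau`, and all names are assumptions for illustration; the article does not describe how RAG-GFM consumes retrieved pairs.

```python
import numpy as np


def retrieval_vote(query, store_keys, store_labels, k, num_classes, tau=0.1):
    """Class probabilities from the k most similar stored query-answer pairs (assumed rule).

    store_keys:   (M, d) embeddings of stored queries
    store_labels: (M,) integer answer labels for those queries
    """
    q = query / max(np.linalg.norm(query), 1e-12)
    keys = store_keys / np.maximum(np.linalg.norm(store_keys, axis=1, keepdims=True), 1e-12)
    sims = keys @ q
    top = np.argsort(-sims)[:k]                       # indices of the k nearest pairs
    w = np.exp((sims[top] - sims[top].max()) / tau)   # stable softmax over their similarities
    w /= w.sum()
    probs = np.zeros(num_classes)
    np.add.at(probs, store_labels[top], w)            # accumulate weight per answer label
    return probs
```

A very small k leaves the prediction at the mercy of a single neighbour, while a very large k admits increasingly irrelevant pairs; that trade-off is one intuition for why moderate settings can work best.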

👉 More information

🗞 Overcoming In-Memory Bottlenecks in Graph Foundation Models via Retrieval-Augmented Generation

🧠 ArXiv: https://arxiv.org/abs/2601.15124