Mixture-of-Experts (MoE) models represent a promising approach to building increasingly powerful artificial intelligence systems, but applying this technique to image generation has proven challenging. Yujie Wei, Shiwei Zhang, and Hangjie Yuan, along with their colleagues, address this problem by introducing ProMoE, a new MoE framework designed specifically for Diffusion Transformers. The team demonstrates that existing MoE methods struggle with visual data because images contain inherent redundancy, hindering the development of specialised ‘expert’ networks. ProMoE overcomes this limitation with a two-step routing system that intelligently directs image components to the most appropriate experts, significantly improving performance on image generation tasks and surpassing current state-of-the-art methods on the ImageNet benchmark. This advancement paves the way for more efficient and effective image generation models with increased capacity and improved results.

ProMoE Architecture, Experiments and Implementation Details

This document is a supplement to the research paper describing ProMoE, an approach to building large-scale diffusion models. It reports additional experimental results, ablation studies, and implementation details that support the claims made in the main paper. The document also adheres to guidelines on the use of Large Language Models in manuscript preparation. ProMoE uses a Mixture of Experts (MoE) approach, in which multiple expert neural networks process different parts of the input data. Because only a subset of the experts runs for each token, model capacity can grow without a proportional increase in computational cost.
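
To make the capacity-versus-compute point concrete, here is a minimal sketch of a generic sparse MoE layer with a learned top-k gate. It is not ProMoE's router; the function name `moe_forward`, the linear experts, and all shapes are assumptions chosen for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def moe_forward(tokens, gate_w, expert_ws, k=1):
    """Generic top-k MoE layer (illustrative, not the paper's router).

    tokens:    (n, d) token features
    gate_w:    (d, E) gating weights
    expert_ws: list of E matrices of shape (d, d), one linear "expert" each
    """
    probs = softmax(tokens @ gate_w)            # (n, E) gate probabilities
    topk = np.argsort(-probs, axis=1)[:, :k]    # (n, k) experts chosen per token
    out = np.zeros_like(tokens)
    for e, w in enumerate(expert_ws):
        # Rows of the tokens that selected expert e among their top-k.
        rows = np.nonzero((topk == e).any(axis=1))[0]
        if rows.size:
            # Only these tokens pay the compute cost of expert e.
            out[rows] += probs[rows, e][:, None] * (tokens[rows] @ w)
    return out, topk
```

Each token touches only `k` of the `E` expert matrices, so adding experts raises the parameter count while the per-token compute stays roughly fixed.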

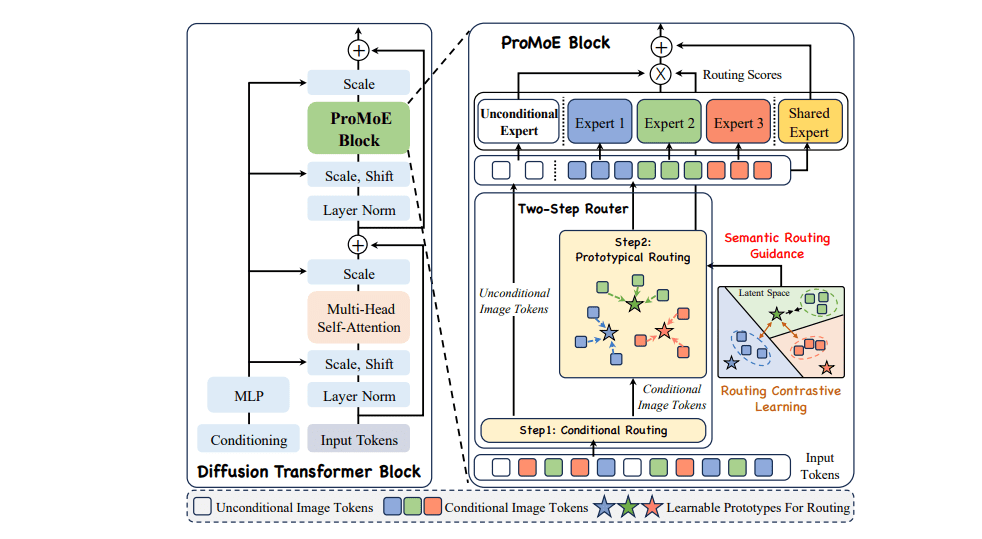

A key innovation is prototype-based routing, where the system learns a set of prototypes and assigns tokens to experts based on their similarity to these prototypes, improving routing stability and interpretability. The architecture also incorporates a Routing Contrastive Learning (RCL) loss function to encourage diverse expert assignments and improve routing quality, and includes both unconditional and shared experts for efficient processing. The document presents a wealth of experimental results demonstrating that ProMoE outperforms dense models as model size increases, and that performance improves with a greater number of experts, confirming the scalability of the approach. Ablation studies validate the importance of different components, showing that RCL is more effective than traditional load-balancing losses. The team showcases high-quality image generation results using ProMoE, and uses t-SNE visualizations to demonstrate that tokens are assigned to different experts based on their semantic content.
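
Similarity-based assignment of this kind can be sketched as follows. The use of cosine similarity, the hard `argmax` assignment, and the name `prototype_route` are assumptions for illustration; the paper's exact similarity measure and top-k rule may differ.

```python
import numpy as np

def prototype_route(tokens, prototypes, eps=1e-8):
    """Assign each token to the expert whose prototype is most similar.

    tokens:     (n, d) token features
    prototypes: (E, d) one learnable prototype per expert (assumed layout)
    Returns (assignments of shape (n,), similarity matrix of shape (n, E)).
    """
    # L2-normalise both sides so the dot product is a cosine similarity.
    t = tokens / (np.linalg.norm(tokens, axis=1, keepdims=True) + eps)
    p = prototypes / (np.linalg.norm(prototypes, axis=1, keepdims=True) + eps)
    sim = t @ p.T
    return sim.argmax(axis=1), sim

# Toy usage: two prototypes along the coordinate axes.
tokens = np.array([[1.0, 0.0], [0.0, 2.0], [3.0, 0.1]])
prototypes = np.array([[1.0, 0.0], [0.0, 1.0]])
assign, sim = prototype_route(tokens, prototypes)
```

Because the assignment depends only on distance to a small set of prototypes, it is easy to inspect which kinds of tokens each expert receives.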

ProMoE Improves DiT Expert Specialisation

This study addresses the limited success of Mixture-of-Experts (MoE) when applied to Diffusion Transformers (DiTs), despite MoE’s effectiveness in large language models. Researchers identified high spatial redundancy among image patches and functional heterogeneity arising from conditional and unconditional inputs as key challenges hindering MoE performance in vision tasks. To overcome these limitations, the team developed ProMoE, a novel MoE framework designed to promote expert specialization within DiTs. ProMoE employs a two-step router with explicit guidance, beginning with conditional routing that partitions image tokens into conditional and unconditional sets based on their functional roles.
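
The first routing step can be sketched as a simple partition. This sketch assumes that the functional role of a token is known per sample, for example from a flag marking which samples had their class label dropped for classifier-free guidance; that flag, the function name `conditional_partition`, and the tensor layout are all assumptions rather than details given here.

```python
import numpy as np

def conditional_partition(tokens, sample_is_uncond):
    """Split tokens into conditional and unconditional sets.

    tokens:           (B, N, d) batch of B images with N tokens each
    sample_is_uncond: (B,) booleans, True where the sample is unconditional
                      (assumed to be available from the training pipeline)
    Returns (cond_tokens, uncond_tokens, cond_idx, uncond_idx), where the
    index arrays refer to rows of the flattened (B*N, d) token matrix.
    """
    B, N, d = tokens.shape
    flags = np.asarray(sample_is_uncond, dtype=bool)
    token_is_uncond = np.repeat(flags, N)       # every token inherits its sample's flag
    flat = tokens.reshape(B * N, d)
    uncond_idx = np.nonzero(token_is_uncond)[0]
    cond_idx = np.nonzero(~token_is_uncond)[0]
    return flat[cond_idx], flat[uncond_idx], cond_idx, uncond_idx
```

Keeping the index arrays makes it straightforward to scatter expert outputs back into their original token positions after each set has been processed separately.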

This initial step addresses the functional heterogeneity of visual inputs, ensuring experts process distinct types of information. Subsequently, prototypical routing refines assignments of conditional tokens using learnable prototypes based on semantic content, effectively leveraging the underlying semantic structure of images. This process utilizes similarity-based expert allocation in latent space, incorporating explicit semantic guidance crucial for vision MoE. To further enhance the prototypical routing process, the team proposed a routing contrastive loss, explicitly promoting both intra-expert coherence and inter-expert diversity.
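
One common way to write a contrastive objective with these two properties is an InfoNCE-style loss over token-to-prototype similarities. The sketch below is an assumption about the general shape of such a loss, not the paper's exact formulation; the temperature `tau` and the function name are illustrative.

```python
import numpy as np

def routing_contrastive_loss(tokens, prototypes, assign, tau=0.1, eps=1e-8):
    """InfoNCE-style sketch of a routing contrastive loss (assumed form).

    tokens:     (n, d) token features
    prototypes: (E, d) expert prototypes
    assign:     (n,) index of the expert each token was routed to
    """
    t = tokens / (np.linalg.norm(tokens, axis=1, keepdims=True) + eps)
    p = prototypes / (np.linalg.norm(prototypes, axis=1, keepdims=True) + eps)
    logits = (t @ p.T) / tau                          # (n, E) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    assign = np.asarray(assign)
    # Positive pair: token and its own expert's prototype (coherence).
    # Negatives: all other prototypes in the denominator (diversity).
    return float(-log_prob[np.arange(len(assign)), assign].mean())
```

The loss is small when every token lies close to its own expert's prototype and far from the others, and grows when tokens are routed to experts whose prototypes do not match them.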

Intra-expert coherence ensures each expert consistently processes similar patterns, while inter-expert diversity encourages specialization in distinct tasks. Extensive experiments on the ImageNet benchmark demonstrate that ProMoE surpasses state-of-the-art methods under both Rectified Flow and DDPM training objectives. Researchers measured inter-expert diversity using singular value decomposition on expert weight matrices, confirming that the routing guidance enhances specialization.
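
The text does not say which SVD-based statistic was used, so the following is only one plausible proxy: compare the dominant left singular subspaces of two expert weight matrices. The function name `subspace_overlap` and the rank `r` are hypothetical.

```python
import numpy as np

def subspace_overlap(w_a, w_b, r=2):
    """Overlap in [0, 1] between the top-r left singular subspaces.

    1.0 means the two experts share the same dominant subspace;
    0.0 means the subspaces are orthogonal (maximally diverse experts).
    """
    u_a = np.linalg.svd(w_a, full_matrices=False)[0][:, :r]
    u_b = np.linalg.svd(w_b, full_matrices=False)[0][:, :r]
    # Squared Frobenius norm of U_a^T U_b sums the squared cosines of the
    # principal angles between the two subspaces; divide by r to normalise.
    return float(np.linalg.norm(u_a.T @ u_b, "fro") ** 2 / r)

# Toy usage: experts acting on disjoint coordinates do not overlap.
w_a = np.diag([3.0, 2.0, 0.0, 0.0])
w_b = np.diag([0.0, 0.0, 3.0, 2.0])
same = subspace_overlap(w_a, w_a)
different = subspace_overlap(w_a, w_b)
```

Averaging this overlap across all expert pairs gives a single number that should fall as experts specialize.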

ProMoE Boosts DiT Performance with Token Routing

The research team presents ProMoE, a novel Mixture-of-Experts (MoE) framework designed to significantly enhance Diffusion Transformer (DiT) models. Recognizing that existing MoE applications to DiTs have yielded limited gains, scientists analyzed the fundamental differences between language and visual tokens, discovering that visual tokens exhibit spatial redundancy and functional heterogeneity, hindering expert specialization. ProMoE addresses this challenge with a two-step router that incorporates explicit routing guidance, promoting more effective expert assignment. The first step partitions image tokens into conditional and unconditional sets based on their functional roles.

Subsequently, prototypical routing refines assignments of conditional tokens using learnable prototypes grounded in semantic content. This similarity-based expert allocation in latent space provides a natural mechanism for incorporating explicit semantic guidance, which the team validated as crucial for vision MoE. To further enhance this process, scientists developed a routing contrastive loss that explicitly promotes intra-expert coherence and inter-expert diversity. Experiments conducted on the ImageNet benchmark demonstrate ProMoE’s superior performance under both Rectified Flow and DDPM training objectives.

Notably, ProMoE surpasses state-of-the-art methods, even those with 1.7 times more total parameters. The team achieved significant gains over dense models while utilizing fewer activated parameters, demonstrating the efficiency of the approach. These results confirm that ProMoE effectively scales DiT models, delivering substantial improvements in generative quality and computational efficiency. The framework’s success stems from its ability to address the unique characteristics of visual tokens and leverage explicit semantic guidance for optimized expert assignment.
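
The distinction between total and activated parameters can be illustrated with a rough count for a single MoE feed-forward layer. The layer sizes below are hypothetical and are not taken from the paper; biases and router parameters are ignored.

```python
def moe_ffn_params(d_model, d_hidden, num_experts, top_k, num_shared=0):
    """Rough parameter count for one MoE feed-forward layer.

    Each expert is assumed to be a two-matrix MLP (d_model -> d_hidden -> d_model).
    Shared experts, if any, are assumed to run for every token.
    """
    per_expert = 2 * d_model * d_hidden
    total = (num_experts + num_shared) * per_expert      # stored in memory
    activated = (top_k + num_shared) * per_expert        # used per token
    return total, activated

# Hypothetical configuration: 8 routed experts, top-1 routing, 1 shared expert.
total, activated = moe_ffn_params(d_model=1024, d_hidden=4096,
                                  num_experts=8, top_k=1, num_shared=1)
ratio = total / activated
```

Total parameters determine memory footprint, while activated parameters determine per-token compute, which is why the two figures are reported separately when comparing MoE and dense models.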

Functional Routing Improves Vision Transformer Scaling

ProMoE represents a significant advance in diffusion transformer architecture, successfully addressing limitations encountered when applying mixture-of-experts techniques to vision tasks. Researchers developed a novel framework featuring a two-step router that guides expert specialization by partitioning image tokens based on their functional roles and refining assignments using semantic content. This approach, incorporating a routing contrastive loss to enhance coherence and diversity, enables more effective scaling of capacity while maintaining computational efficiency. Extensive evaluation on the ImageNet benchmark demonstrates that ProMoE consistently surpasses both dense transformers and existing mixture-of-experts models across various settings and training objectives.

Specifically, the team achieved substantial reductions in Fréchet Inception Distance and increases in Inception Score, indicating improved image quality and diversity. Importantly, ProMoE achieves these gains with fewer activated parameters than comparable models, highlighting its parameter efficiency and scalability. The authors acknowledge that performance is dependent on careful tuning of the routing process and the contrastive loss. Future work could explore adaptive routing strategies and investigate the potential for applying ProMoE to other vision tasks beyond image generation. The team has made code publicly available to facilitate further research and development in this area.
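
For reference, the two metrics have standard definitions, reproduced here from their general usage rather than from the paper. With $(\mu_r, \Sigma_r)$ and $(\mu_g, \Sigma_g)$ the mean and covariance of Inception features for real and generated images, and $p(y \mid x)$ the Inception classifier's label distribution for image $x$:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

$$
\mathrm{IS} = \exp\!\left( \mathbb{E}_{x \sim p_g} \left[ D_{\mathrm{KL}}\!\left( p(y \mid x) \,\Vert\, p(y) \right) \right] \right)
$$

Lower FID indicates that generated images are statistically closer to real ones, while higher IS indicates confident and varied class predictions.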

👉 More information

🗞 Routing Matters in MoE: Scaling Diffusion Transformers with Explicit Routing Guidance

🧠 ArXiv: https://arxiv.org/abs/2510.24711