Scientists are increasingly scrutinising the long-horizon planning abilities of large language models, a facet of artificial intelligence not yet fully understood. Sebastiano Monti, Carlo Nicolini, and Gianni Pellegrini from Ipazia SpA, alongside Jacopo Staiano of the University of Trento and Bruno Lepri from both Fondazione Bruno Kessler and Ipazia SpA, present a systematic evaluation using a new benchmark called SokoBench, built around simplified Sokoban puzzles. This research is significant because it reveals a clear performance drop in these models when tasks require planning beyond 25 moves, indicating a potential fundamental limit to their forward-thinking capacity and suggesting that simply increasing model size may not be enough to overcome this challenge.

Researchers intentionally minimised structural complexity, building puzzles with minimal branching factors so that the evaluation isolates one ability of large reasoning models (LRMs): generating coherent action sequences over long horizons. The models must produce complete solution sequences without external memory or feedback, relying solely on internal state representations to track the evolving puzzle state. The findings suggest that inherent architectural limitations within the models may not be fully addressed simply by scaling computation at test time.
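To make "tracking the evolving puzzle state" concrete, here is a minimal Python sketch of the bookkeeping a model has to perform implicitly: apply each move, reject illegal ones, and check whether every box ends on a goal. It assumes the common XSB text notation for Sokoban (`#` wall, `@` player, `$` box, `.` goal, `*` box on goal, `+` player on goal) and single-letter moves `U`, `D`, `L`, `R`. The function names are hypothetical, and this is not SokoBench's own code.

```python
# Hypothetical helpers for illustration; not SokoBench's code.
MOVES = {"U": (-1, 0), "D": (1, 0), "L": (0, -1), "R": (0, 1)}


def parse(grid):
    """Split an XSB-style grid into walls, goals, player position and boxes."""
    walls, goals, boxes, player = set(), set(), set(), None
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch == "#":
                walls.add((r, c))
            if ch in ".*+":  # goal, box on goal, player on goal
                goals.add((r, c))
            if ch in "$*":   # box, box on goal
                boxes.add((r, c))
            if ch in "@+":   # player, player on goal
                player = (r, c)
    return walls, goals, player, frozenset(boxes)


def step(walls, player, boxes, move):
    """Apply one move; return (player, boxes) or None if the move is illegal."""
    if move not in MOVES:
        return None                      # unknown action symbol
    dr, dc = MOVES[move]
    target = (player[0] + dr, player[1] + dc)
    if target in walls:
        return None                      # walking into a wall
    if target in boxes:
        beyond = (target[0] + dr, target[1] + dc)
        if beyond in walls or beyond in boxes:
            return None                  # box cannot be pushed
        boxes = (boxes - {target}) | {beyond}
    return target, boxes


def validate(grid, plan):
    """True if every move is legal and all boxes finish on goals."""
    walls, goals, player, boxes = parse(grid)
    for move in plan:
        result = step(walls, player, boxes, move)
        if result is None:
            return False
        player, boxes = result
    return boxes <= goals


corridor = ["#######", "#@$  .#", "#######"]
print(validate(corridor, "RRR"))  # True: three pushes deliver the box
print(validate(corridor, "RR"))   # False: the box stops one cell short
```

Because it checks legality and the final state rather than matching one reference string, a checker of this kind would also accept alternative valid solutions.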

This approach allows for a focused investigation into the ability of LRMs to sustain coherent planning over long, albeit simple, action sequences. The research establishes that even minimal reasoning branching in trivial Sokoban instances is sufficient to induce planning failures, highlighting a systemic deficiency in long-term action representation and sequential logic. Researchers posit that this limitation extends to spatial reasoning, representing a crucial gap in the capabilities of current LRMs. Concretely, the study examined whether current LRMs could reliably solve linear-corridor Sokoban puzzles with minimal branching and identified the point at which increasing horizon length leads to catastrophic breakdowns in action validity.

These minimal sub-problems, which humans solve easily, still pose a significant challenge for large reasoning models, in line with preliminary studies of spatial intelligence. The results indicate that while LRMs excel at natural language understanding and knowledge retrieval, their capacity for robust long-horizon planning remains limited. The work points to future research on new architectures and training methods that strengthen long-term action representation and sequential logic. By isolating the core challenges of long-horizon planning, the study provides a benchmark for evaluating and improving the planning abilities of future LRMs, with potential applications in robotics, game playing, and complex problem solving.

Sokoban Corridor Maps for Long-Horizon Planning

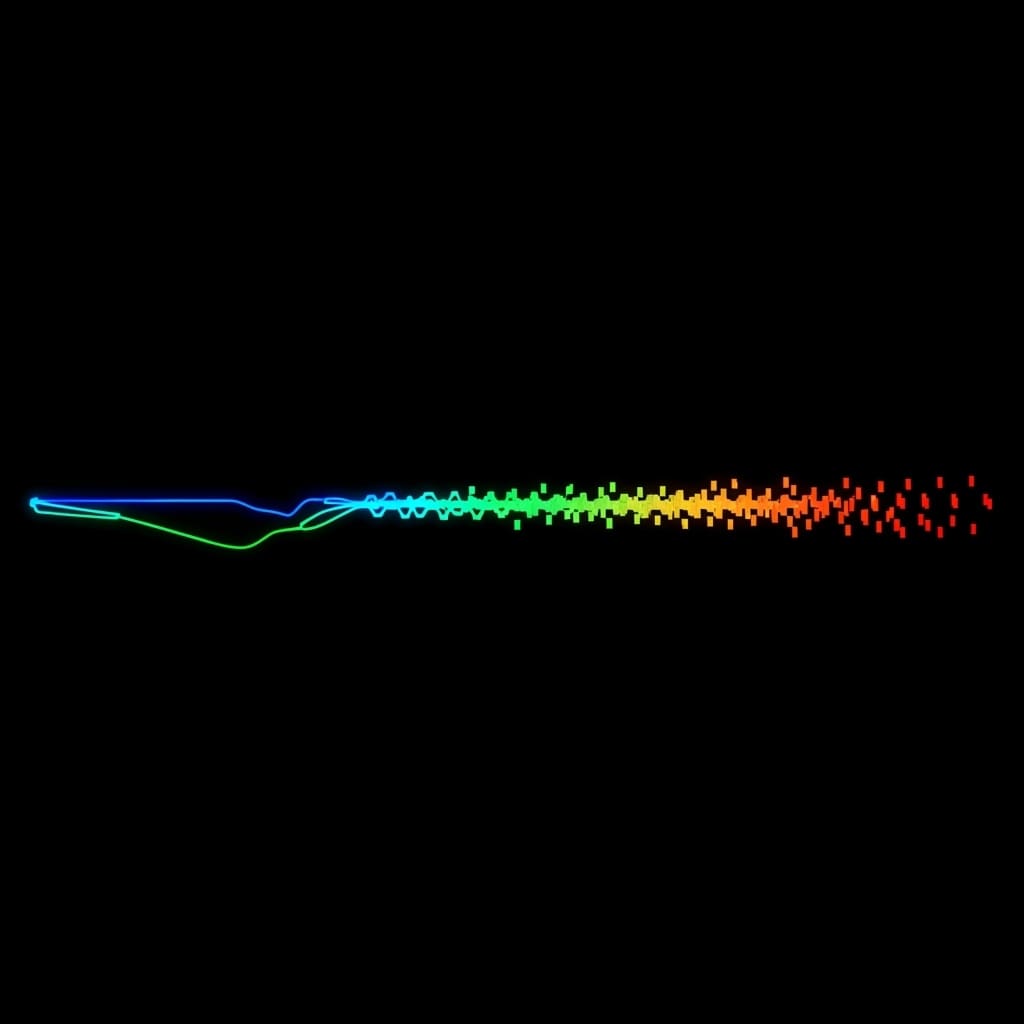

Researchers generated a dataset of narrow, corridor-like maps only one cell wide, each containing one player, one box, and one goal, with corridor lengths ranging from 5 to 100 in increments of 5. Because corridor length is the map's only degree of freedom, it serves as a direct proxy for task difficulty and simplifies the measurement of solution complexity. To augment the dataset and mitigate potential pretraining biases, the team rotated each map by 90°, 180°, and 270°, giving four orientations including the original. The result is an evaluation set of 80 maps (20 corridor lengths in each of four orientations), providing a basis for analysing orientation-dependent performance.
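The map family described above is simple enough to generate in a few lines of Python. This sketch assumes XSB notation and a hypothetical layout (player in the first cell, box in the second, goal in the last); the study's exact placement of the three objects is not stated here, so treat the layout as an illustration only.

```python
def corridor_map(length: int) -> list[str]:
    """One-row corridor with `length` interior cells, walled on all sides.

    Hypothetical layout: player in the first cell, box in the second,
    goal in the last cell.
    """
    if length < 3:
        raise ValueError("need at least 3 cells for player, box and goal")
    interior = "@$" + " " * (length - 3) + "."
    row = "#" + interior + "#"
    wall = "#" * len(row)
    return [wall, row, wall]


def rotate90(grid: list[str]) -> list[str]:
    """Rotate a rectangular character grid 90 degrees clockwise."""
    # Reverse the row order, then read columns top to bottom.
    return ["".join(col) for col in zip(*grid[::-1])]


def build_dataset() -> list[dict]:
    """Corridor lengths 5, 10, ..., 100, each in four orientations."""
    dataset = []
    for length in range(5, 101, 5):
        grid = corridor_map(length)
        for angle in (0, 90, 180, 270):
            dataset.append({"length": length, "rotation": angle, "grid": grid})
            grid = rotate90(grid)  # next orientation
    return dataset


print(len(build_dataset()))  # 80
```

Under this assumed layout the shortest solution is `length - 2` consecutive pushes toward the goal, so plan length grows linearly with corridor length, which is what makes length a usable difficulty knob.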

Experiments used DeepSeek R1, GPT-5, and GPT-oss 120B, all reasoning models configured to produce explicit reasoning traces before their final answers. All inference calls were routed through OpenRouter with DeepInfra as the inference provider, ensuring a standardised serving environment. The maximum number of completion tokens, covering both reasoning and the final answer, was capped at 32,768 to manage computational resources. In a tool-augmented setting, the models were also given a pipeline consisting of a domain parser, a problem parser, and a problem solver, which let them translate Sokoban maps into PDDL, validate the problem definition, and obtain a plan from a solver. This setup allowed the researchers to test whether external planning tools could lift performance beyond the models' inherent architectural limitations; the results also showed that intrinsic properties such as word length strongly influence performance.
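For readers reproducing the setup, the inference configuration above can be sketched as an OpenRouter chat-completions payload in Python. The model slugs, the lowercase provider slug `deepinfra`, and whether every model is actually served by that provider are assumptions to check against OpenRouter's model list and provider-routing documentation; only the 32,768-token cap comes from the study.

```python
from typing import Optional

# Model slugs are assumptions based on OpenRouter's naming scheme; verify before use.
MODELS = ["deepseek/deepseek-r1", "openai/gpt-5", "openai/gpt-oss-120b"]

# Cap on completion tokens (reasoning trace + final answer), as reported in the study.
MAX_COMPLETION_TOKENS = 32_768


def build_request(model: str, prompt: str, provider: Optional[str] = "deepinfra") -> dict:
    """Return a chat-completions payload, optionally pinned to one provider."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": MAX_COMPLETION_TOKENS,
    }
    if provider is not None:
        # OpenRouter provider routing: use only this provider, never fall back.
        payload["provider"] = {"order": [provider], "allow_fallbacks": False}
    return payload
```

The resulting dictionary would be sent as JSON in a POST to OpenRouter's `https://openrouter.ai/api/v1/chat/completions` endpoint with an `Authorization: Bearer <key>` header. Disabling fallbacks keeps every request on the pinned provider, at the cost of failing outright if that provider cannot serve the model.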

LRMs falter on Sokoban puzzles beyond twenty-five moves

Model performance drops sharply once puzzles require more than about 25 moves, suggesting that current LRMs struggle with tasks demanding long sequences of reasoning steps. Equipping the models with external planning tools helped, but did not fully overcome their inherent architectural limitations. The data indicate that test-time scaling alone may be insufficient to unlock substantially better long-horizon planning, pointing to the need for architectural innovations that address the core limitations in forward planning. The models were evaluated on Sokoban, a spatially constrained environment that requires strategic box manipulation.

Solvable Sokoban maps can be generated efficiently and evaluated rigorously with exact solvers, using metrics such as search depth and solution time. Sokoban is regarded as a good planning benchmark because it offers no shortcuts and each map requires its own solution. Applied directly to the puzzles, OpenAI's o1-preview model achieved success rates of only 10 to 12%. In an LLM-Modulo setting, where plans produced by the model are handed to external tools, o1-preview solved approximately 43% of instances.
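As an illustration of exact solving, here is a plain breadth-first search over (player position, box positions) states in Python. BFS is a swapped-in technique chosen for brevity, not necessarily the solver the researchers used. On maps this small it returns a shortest plan, whose length equals the search depth, together with the number of states expanded. It assumes XSB notation for the map.

```python
from collections import deque

MOVES = {"U": (-1, 0), "D": (1, 0), "L": (0, -1), "R": (0, 1)}


def solve_bfs(grid: list[str]):
    """Breadth-first search over (player, boxes) states.

    Returns (shortest plan as a string, states expanded),
    or (None, states expanded) if the map is unsolvable.
    """
    walls, goals, boxes, player = set(), set(), set(), None
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch == "#":
                walls.add((r, c))
            if ch in ".*+":
                goals.add((r, c))
            if ch in "$*":
                boxes.add((r, c))
            if ch in "@+":
                player = (r, c)

    start = (player, frozenset(boxes))
    queue = deque([(start, "")])
    seen = {start}
    expanded = 0
    while queue:
        (pos, bxs), plan = queue.popleft()
        expanded += 1
        if bxs <= goals:  # every box sits on a goal
            return plan, expanded
        for move, (dr, dc) in MOVES.items():
            nxt = (pos[0] + dr, pos[1] + dc)
            if nxt in walls:
                continue
            new_boxes = bxs
            if nxt in bxs:  # moving into a box pushes it
                beyond = (nxt[0] + dr, nxt[1] + dc)
                if beyond in walls or beyond in bxs:
                    continue
                new_boxes = (bxs - {nxt}) | {beyond}
            state = (nxt, new_boxes)
            if state not in seen:
                seen.add(state)
                queue.append((state, plan + move))
    return None, expanded


print(solve_bfs(["#######", "#@$  .#", "#######"]))  # ('RRR', 6)
```

Exhaustive BFS grows expensive quickly on larger, branching maps, which is why practical Sokoban solvers add deadlock detection and heuristics; on one-box corridors it stays trivial.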

The LLM-Modulo setting substantially increased success rates, but at a much higher computational cost. Analysis of the failures showed that errors often arise in basic reasoning steps rather than from the complexity of the planning problem itself. This echoes observations from character-counting tasks, where LLMs can perform simple symbolic operations but need explicit prompting to do so reliably.

Sokoban puzzles reveal limited planning horizons

Scientists have systematically assessed the planning and long-horizon reasoning capabilities of state-of-the-art language models, showing that such an assessment is both necessary and feasible. The study acknowledges its limitations: it focuses on one-box linear corridors, which provide a lower bound on planning ability, and it validates answers against an exact plan. Future research will explore solver-based verification to accommodate maps with multiple valid solutions. The findings suggest that the limitations of language models go beyond problem complexity and may stem from missing basic abilities such as counting. The observations align with recent characterisations of reasoning models as “wanderers”, in which small errors in state tracking accumulate exponentially over long planning horizons. Simply scaling these models up is therefore unlikely to overcome such structural limitations without fundamental architectural changes or the incorporation of explicit symbolic grounding.
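A back-of-the-envelope model shows why compounding errors bite on long horizons. Assume, purely for illustration and not as the paper's own model, that each of n steps is tracked correctly and independently with probability p. A flawless plan then requires every step to succeed:

```latex
P(\text{correct plan}) = p^{n}, \qquad 0.97^{25} \approx 0.47, \qquad 0.97^{100} \approx 0.048
```

With a hypothetical per-step accuracy of 97%, the chance of a flawless plan is already below one half at 25 steps and below 5% at 100 steps, so even rare slips dominate once plans get long.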

👉 More information

🗞 SokoBench: Evaluating Long-Horizon Planning and Reasoning in Large Language Models

🧠 ArXiv: https://arxiv.org/abs/2601.20856