Translating sign language into text receives a significant boost from a new framework developed by Sen Zhang, Xiaoxiao He, and colleagues at Rutgers University, alongside Chaowei Tan from Qualcomm. Their work introduces a method for directly translating three-dimensional American Sign Language, moving beyond the limitations of traditional two-dimensional video analysis. The approach captures more of the spatial and gestural complexity of signing, promising more accurate and robust digital communication for deaf and hard-of-hearing individuals. The team demonstrates both direct translation from sign data to text and a flexible instruction-guided system, a step towards building inclusive intelligent systems capable of understanding all forms of human communication.

Sign Language Translation with Large Language Models

Researchers have developed a new approach to Sign Language Recognition (SLR) that utilizes a Large Language Model (LLM) alongside motion data to translate sign language gestures into English text, guided by specific instructions. The team employs a Vector Quantized Variational Autoencoder (VQ-VAE) to compress motion data into a discrete token sequence, suitable for input into LLMs like LLaMA or Qwen. This approach utilizes the large-scale SignAvatars dataset, encompassing multiple sign languages, including American Sign Language and German Sign Language. Once the VQ-VAE tokenizer is in place, the LLM is trained in two stages: initial training to map motion data to text, followed by instruction tuning to refine the model’s ability to follow prompts and generate accurate translations. The results indicate that LLMs can effectively recognize and translate sign language.
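To make the tokenization step concrete, here is a minimal sketch of the quantization at the heart of a VQ-VAE: each continuous feature vector is replaced by the index of its nearest codebook entry. The function name `quantize`, the toy codebook, and the sample frames are illustrative assumptions, not the authors' code; a real codebook is learned during training and is much larger.

```python
import math

def quantize(frames, codebook):
    """Map each continuous feature vector to the index of its nearest codebook entry."""
    tokens = []
    for frame in frames:
        # Euclidean distance from this frame to every codebook vector.
        distances = [math.dist(frame, code) for code in codebook]
        tokens.append(distances.index(min(distances)))
    return tokens

# Toy codebook: 4 entries of dimension 2 (hypothetical values).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
# Hypothetical encoder outputs for three time steps of a sign motion.
frames = [(0.1, -0.1), (0.9, 0.2), (0.2, 0.8)]

motion_tokens = quantize(frames, codebook)
print(motion_tokens)  # [0, 1, 2]
```

The resulting integer sequence plays the same role for motion that word-piece IDs play for text, which is what makes it usable as LLM input.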

3D Motion Encoding for Sign Language Translation

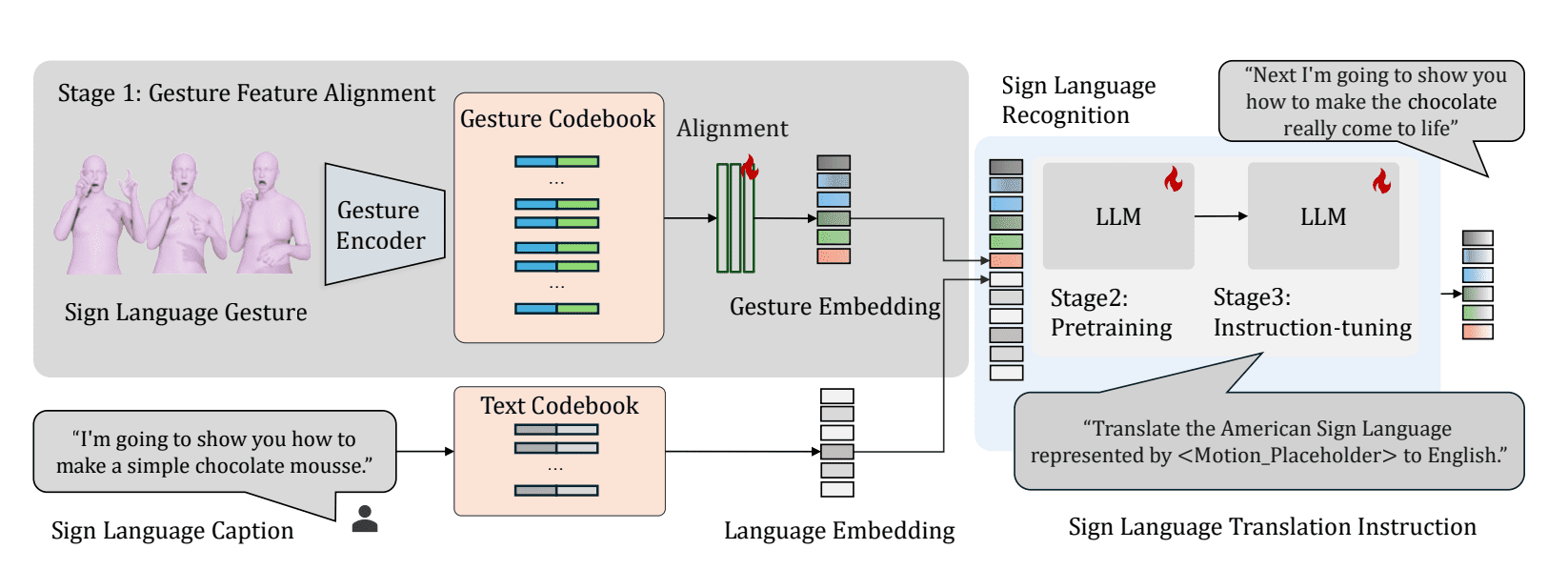

Scientists have created the Large Sign Language Model (LSLM), a novel framework for translating three-dimensional American Sign Language that moves beyond traditional two-dimensional video analysis. Instead of relying on video, the system directly utilizes detailed 3D gesture features captured from body and hand motion, leveraging recent 3D sign language datasets and SMPL-X representations. The team employed a motion vector-quantized variational autoencoder (VQ-VAE) to encode these 3D motions into a discrete representation of gesture features. A projection layer then aligns this representation with textual data so it can be integrated with a large language model (LLM). The training pipeline starts from a pretrained text-based LLM. The VQ-VAE is trained first, and the gesture features are then aligned with text, establishing a connection between gestural and linguistic information. These aligned features serve as input to pretrain the LLM, enabling it to develop an understanding of sign language. Finally, the model undergoes instruction fine-tuning, learning to translate 3D ASL representations into English based on specific prompts.
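As an illustration of what an instruction fine-tuning input might look like, the sketch below wraps a sequence of motion tokens and a natural-language instruction into a single prompt string. The template, the `<motion_N>` placeholder naming, and the `build_prompt` helper are all hypothetical; the paper may instead feed projected motion embeddings directly into the model rather than textual placeholders.

```python
def build_prompt(instruction, motion_tokens):
    """Combine an instruction and discrete motion tokens into one prompt string.

    The layout and special-token names here are assumptions for illustration.
    """
    motion_text = "".join(f"<motion_{t}>" for t in motion_tokens)
    return (
        f"### Instruction:\n{instruction}\n\n"
        f"### Sign motion:\n<motion_start>{motion_text}<motion_end>\n\n"
        f"### Response:\n"
    )

# Hypothetical training example: the model learns to continue the prompt with the target.
example = {
    "prompt": build_prompt(
        "Translate the following ASL motion into English.",
        [3, 7, 7, 12],
    ),
    "target": "Nice to meet you.",
}
print(example["prompt"] + example["target"])
```

Varying the instruction while keeping the motion tokens fixed is one way such a system could offer control over the style or form of the output.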

3D Sign Language Translation with Large Models

Researchers have developed the Large Sign Language Model (LSLM), leveraging large language models to improve virtual communication for deaf and hard-of-hearing individuals. This work moves beyond traditional two-dimensional sign recognition by directly utilizing 3D skeletal data, capturing richer spatial and gestural information. A core contribution is a sign motion encoder based on a vector quantized variational autoencoder (VQ-VAE), which transforms continuous sign language motion sequences into discrete tokens. The encoder uses the SMPL-X human body model, which represents poses with 52 joints, and downsamples the original motion data. Multilayer perceptrons align the quantized representations with the input embeddings of the large language model. Training is divided into three stages: training the sign language tokenizer, modality-alignment pretraining, and instruction fine-tuning. According to the team, this pipeline yields significant performance improvements.
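The alignment step can be pictured as a small multilayer perceptron that maps each quantized code vector into the LLM's embedding space. The following is a minimal pure-Python sketch under assumed toy dimensions; the layer count, the ReLU activation, the random initialization, and all names (`project`, `make_layer`, `CODE_DIM`, and so on) are illustrative assumptions rather than details taken from the paper.

```python
import random

def linear(x, weights, bias):
    """Apply y = W x + b for one input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def make_layer(in_dim, out_dim, rng):
    """Create a randomly initialized (weights, bias) pair; untrained, for shape checking only."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim

def project(code_vector, layers):
    """Run a code vector through the MLP, with ReLU between layers but not after the last."""
    h = code_vector
    for i, (weights, bias) in enumerate(layers):
        h = linear(h, weights, bias)
        if i < len(layers) - 1:
            h = relu(h)
    return h

rng = random.Random(0)
CODE_DIM, HIDDEN_DIM, LLM_DIM = 4, 8, 16  # toy sizes; real models are far larger
layers = [make_layer(CODE_DIM, HIDDEN_DIM, rng), make_layer(HIDDEN_DIM, LLM_DIM, rng)]

# Hypothetical codebook of 5 entries and a short motion token sequence.
codebook = [[rng.uniform(-1, 1) for _ in range(CODE_DIM)] for _ in range(5)]
motion_tokens = [2, 0, 4]

# Look up each token's code vector, then project it to the LLM embedding width.
llm_inputs = [project(codebook[t], layers) for t in motion_tokens]
print(len(llm_inputs), len(llm_inputs[0]))  # 3 16
```

Each projected vector has the same width as a text token embedding, so motion and text can sit in the same input sequence.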

3D Sign Language Translation with Large Models

This research presents the Large Sign Language Model (LSLM), which advances the translation of 3D American Sign Language for improved virtual communication. Unlike existing methods relying on 2D video analysis, LSLM directly utilizes rich 3D data capturing spatial information, gestures, and depth, which the authors report leads to more accurate and robust translation. The team integrated this 3D gesture data with a large language model, enabling end-to-end translation and exploring instruction-guided translation for greater control over the output. Results indicate that combining the language model with direct alignment and fusion of 3D gesture features improves translation accuracy. Future research will focus on expanding available 3D sign language datasets and developing linguistically aware learning strategies to further enhance model performance and accessibility for Deaf and hard-of-hearing communities.

👉 More information

🗞 Large Sign Language Models: Toward 3D American Sign Language Translation

🧠 ArXiv: https://arxiv.org/abs/2511.08535