Extending large language models to understand both text and images remains a significant challenge, one that often requires enormous resources to build new, fully integrated vision-language models. James Y. Huang from the University of Southern California, alongside Sheng Zhang, Qianchu Liu, Guanghui Qin, Tinghui Zhu from the University of California, Davis, and Tristan Naumann from Microsoft Research, present a framework called BeMyEyes that sidesteps this cost. Instead of retraining, their approach orchestrates carefully designed conversations between small, adaptable vision-language models acting as ‘perceivers’ and powerful language models acting as ‘reasoners’. The team shows that this multi-agent system unlocks multimodal reasoning: a text-only, open-source language model paired with a small vision-language perceiver outperforms large proprietary vision-language models on complex, knowledge-intensive tasks, paving the way for more flexible and scalable multimodal AI systems.

Agent Roles in Visual Question Answering

The research details the prompts that set up the multi-agent system to answer multiple-choice questions about images. The system improves performance on visual question answering by breaking complex problems into smaller, manageable steps, and its prompts define three roles. The Perceiver Agent describes the image. The Reasoner Agent coordinates the process and questions the Perceiver Agent for details. The Expert, a role embodied within the Reasoner Agent, synthesizes the information and makes the final decision. The conversation begins with an initial prompt containing the question and the image descriptions, and the Reasoner Agent must give its final answer in a fixed format, `Answer: $LETTER`, where `$LETTER` is the chosen option.
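Because the reasoner must finish with a line of the form `Answer: $LETTER`, its final choice can be recovered with a simple pattern match. The following is a minimal sketch, not the paper's evaluation code; the function name `extract_answer`, the restriction to single uppercase option letters, and the choice to keep the last match (a reasoning model may write "Answer: X" while still thinking aloud) are all assumptions.

```python
import re
from typing import Optional

# Matches "Answer: B" (an optional literal "$" is tolerated). Assumption: options
# are single uppercase letters; \b rejects words such as "Answer: Because".
ANSWER_PATTERN = re.compile(r"Answer:\s*\$?([A-Z])\b")


def extract_answer(reply: str) -> Optional[str]:
    """Return the last option letter the reasoner committed to, or None."""
    matches = ANSWER_PATTERN.findall(reply)
    return matches[-1] if matches else None


print(extract_answer("The chart peaks in March.\nAnswer: C"))
```

Taking the last match rather than the first is a deliberate design choice for reasoning models, whose replies can contain tentative answers before the final one.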

Multimodal Reasoning via Collaborative Language Agents

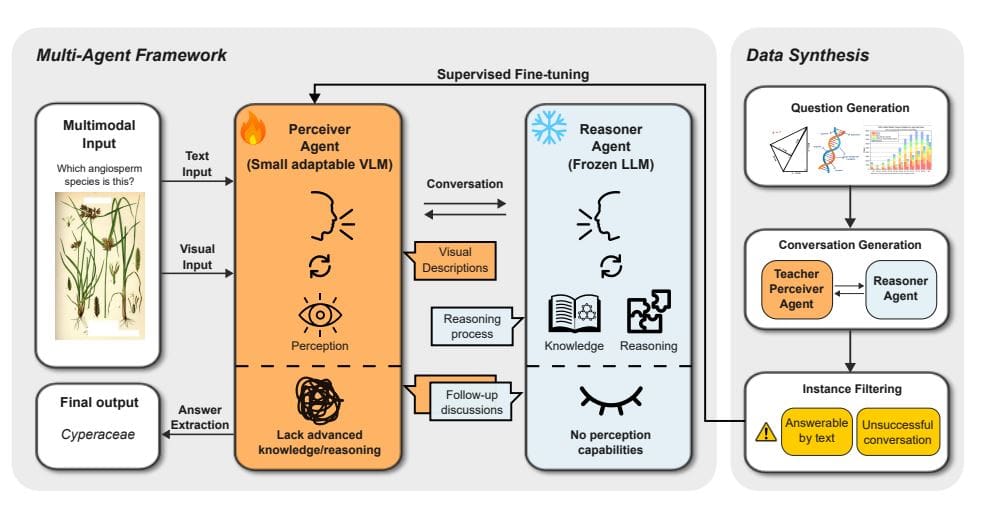

Researchers developed BeMyEyes, a multi-agent framework that extends large language models (LLMs) to multimodal reasoning through collaboration between adaptable vision-language models (VLMs) and powerful LLMs. The system decouples perception from reasoning, so a text-only LLM can work with visual information it never sees directly, without extensive retraining. The framework centers on two agents: a perceiver agent, implemented with a small, computationally efficient VLM, and a reasoner agent, built on a frozen LLM with strong knowledge and reasoning skills. This modular design makes it straightforward to swap in new perceiver or reasoner models.
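The decoupling can be pictured as two narrow interfaces: only the perceiver touches the image, and the reasoner receives nothing but text. The sketch below is a hypothetical single-round illustration under that assumption; the `Perceiver` and `Reasoner` protocols, the `solve` function, and the toy stand-ins (which treat an "image" as a plain string so the code runs without model weights) are invented for illustration and are not the paper's API.

```python
from typing import Protocol


class Perceiver(Protocol):
    # Hypothetical interface for a small VLM: the only component that sees the image.
    def describe(self, image: str, request: str) -> str: ...


class Reasoner(Protocol):
    # Hypothetical interface for a frozen, text-only LLM: it never receives the image.
    def answer(self, question: str, visual_notes: str) -> str: ...


def solve(perceiver: Perceiver, reasoner: Reasoner, image: str, question: str) -> str:
    """Single round: the perceiver turns the image into text, the reasoner answers from text."""
    notes = perceiver.describe(image, f"Describe everything relevant to: {question}")
    return reasoner.answer(question, notes)


# Toy stand-ins; an "image" is just a string here.
class CaptionPerceiver:
    def describe(self, image: str, request: str) -> str:
        return f"The image shows {image}."


class KeywordReasoner:
    def answer(self, question: str, visual_notes: str) -> str:
        return "Answer: A" if "bar chart" in visual_notes else "Answer: B"


print(solve(CaptionPerceiver(), KeywordReasoner(), "a bar chart of sales", "Which bar is tallest?"))
```

Swapping in a different perceiver or reasoner only means passing a different object to `solve`; nothing else in the pipeline changes, which mirrors the modularity described above.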

To make the agents collaborate effectively, the researchers engineered a conversational flow in which the perceiver agent interprets the visual input and conveys relevant details, while the reasoner actively asks for specific information. The perceiver's prompt tells it that the reasoner cannot see the image, which encourages detailed descriptions. The team also designed a data synthesis pipeline and a supervised fine-tuning strategy to train the perceiver agent, improving both how accurately it perceives visual information and how well it follows the reasoner's instructions. Experiments across diverse tasks, models, and domains demonstrate the effectiveness of this approach, establishing BeMyEyes as a scalable and flexible alternative to large-scale multimodal models. Equipping a text-only DeepSeek-R1 LLM with a Qwen2.5-VL-7B perceiver agent allows the system to outperform large-scale proprietary VLMs, such as GPT-4o, on knowledge-intensive multimodal tasks.
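The conversational flow described above can be sketched as a loop in which the reasoner either asks the perceiver a follow-up question or commits to an answer. This is a hypothetical illustration under stated assumptions: the system-prompt wording paraphrases the idea in the text rather than quoting the paper, the reasoner is assumed to signal completion with a reply that starts with "Answer:", and `run_dialogue`, `toy_perceive`, and `toy_reason` are invented names, with the toy "image" being a dict of canned answers.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical perceiver system prompt, paraphrasing the idea in the text.
PERCEIVER_SYSTEM = (
    "You can see the image but your partner cannot. "
    "Describe relevant visual details precisely and answer its questions."
)


@dataclass
class Conversation:
    question: str
    turns: list = field(default_factory=list)  # (speaker, text) pairs

    def transcript(self) -> str:
        return "\n".join(f"{who}: {text}" for who, text in self.turns)


def run_dialogue(
    perceive: Callable[[str, dict, str], str],  # (system, image, transcript) -> reply
    reason: Callable[[str, str], str],          # (question, transcript) -> reply
    image: dict,
    question: str,
    max_turns: int = 4,
) -> tuple[Optional[str], Conversation]:
    """Alternate reasoner and perceiver until the reasoner commits to an answer."""
    convo = Conversation(question)
    # The perceiver opens with an unprompted description of the image.
    convo.turns.append(("perceiver", perceive(PERCEIVER_SYSTEM, image, "")))
    for _ in range(max_turns):
        reply = reason(question, convo.transcript())
        convo.turns.append(("reasoner", reply))
        if reply.startswith("Answer:"):
            return reply, convo
        # Otherwise the reply is a follow-up question for the perceiver.
        convo.turns.append(("perceiver", perceive(PERCEIVER_SYSTEM, image, convo.transcript())))
    return None, convo  # no answer within the turn budget


# Toy stand-ins: the "image" is a dict of canned answers keyed by question.
def toy_perceive(system: str, image: dict, transcript: str) -> str:
    if not transcript:
        return image["caption"]
    last_question = transcript.rsplit("reasoner: ", 1)[-1]
    return image.get(last_question, "I cannot tell from the image.")


def toy_reason(question: str, transcript: str) -> str:
    return "Answer: C" if "red" in transcript else "What color is the tallest bar?"


image = {"caption": "A bar chart with three bars.", "What color is the tallest bar?": "It is red."}
answer, convo = run_dialogue(toy_perceive, toy_reason, image, "Which bar shows the highest value?")
print(answer)
print(convo.transcript())
```

The turn budget (`max_turns`) is an assumed safeguard so that a reasoner that never commits cannot loop forever.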

BeMyEyes Framework Enables Multimodal Reasoning

Researchers present BeMyEyes, a multi-agent framework that extends large language models (LLMs) to multimodal reasoning by integrating visual information without retraining the LLM itself. The framework orchestrates conversations between efficient, adaptable vision language models (VLMs) acting as “perceiver” agents and powerful LLMs acting as “reasoner” agents. The perceiver processes the visual input and conveys relevant information, while the reasoner applies its existing knowledge and reasoning skills to solve complex tasks. Experiments show that equipping a text-only DeepSeek-R1 LLM with a Qwen2.5-VL-7B perceiver agent unlocks substantial multimodal reasoning capabilities, exceeding large-scale proprietary VLMs such as GPT-4o on challenging benchmarks. On the MathVista benchmark, the pairing scores 72.7 against GPT-4o’s 68.3, and on the MMMU-Pro benchmark it scores 57.2, outperforming GPT-4o’s 49.

To make this collaboration effective, the researchers developed a data synthesis pipeline for training the perceiver agent, distilling perceptual and instruction-following capabilities from larger VLMs. This lets the perceiver communicate visual information to the reasoner effectively, giving the reasoner a more accurate picture of the task. The results confirm that BeMyEyes significantly improves the performance of text-only LLMs on multimodal reasoning tasks, establishing a modular, scalable, and flexible alternative to training large-scale multimodal models, one that reduces computational cost and preserves the LLM's generalization capabilities.
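One plausible way to turn distillation into perceiver training data is to let a larger teacher VLM play the perceiver in full dialogues, then train the small perceiver to reproduce each teacher turn given the conversation so far. The sketch below assumes that setup; the correctness filter (keeping only dialogues whose final answer matches the gold label) is a common heuristic and is not confirmed as the paper's criterion, and `SFTExample` and `build_sft_examples` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SFTExample:
    image_id: str
    context: str  # transcript the perceiver saw before it spoke
    target: str   # the teacher perceiver's reply, used as the training label


def build_sft_examples(
    image_id: str,
    turns: list[tuple[str, str]],
    final_answer: str,
    gold_answer: str,
) -> list[SFTExample]:
    """Convert one teacher-perceiver dialogue into perceiver SFT examples.

    turns holds (speaker, text) pairs with speaker in {"perceiver", "reasoner"}.
    Assumed filter: discard dialogues whose final answer is wrong.
    """
    if final_answer != gold_answer:
        return []
    examples = []
    for i, (speaker, text) in enumerate(turns):
        if speaker == "perceiver":
            # Context is every earlier turn, in the same "speaker: text" format.
            context = "\n".join(f"{s}: {t}" for s, t in turns[:i])
            examples.append(SFTExample(image_id, context, text))
    return examples


turns = [
    ("perceiver", "A bar chart."),
    ("reasoner", "Which bar is red?"),
    ("perceiver", "The second bar."),
    ("reasoner", "Answer: B"),
]
for ex in build_sft_examples("img-1", turns, "B", "B"):
    print(repr(ex.context), "->", ex.target)
```

Each perceiver turn becomes one training pair, so a dialogue with two perceiver turns yields two examples, and a dialogue that ends in a wrong answer yields none.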

Multimodal Reasoning via Collaborative Agents

This research presents BeMyEyes, a framework that extends large language models to multimodal reasoning through collaboration with smaller, efficient vision-language models. By orchestrating a multi-agent system, the team avoids training extremely large multimodal models while preserving the reasoning strengths of existing language models. Experiments across multiple benchmarks show that BeMyEyes substantially improves multimodal reasoning performance, matching and in some cases exceeding large-scale proprietary models. The key contribution is combining the perceptual strengths of vision-language models with the reasoning capabilities of large language models. This modular approach offers a scalable and flexible alternative to training monolithic multimodal models and can be adapted to new modalities without extensive retraining. The work points to a promising direction for building more efficient and adaptable multimodal reasoning systems.

👉 More information

🗞 Be My Eyes: Extending Large Language Models to New Modalities Through Multi-Agent Collaboration

🧠 ArXiv: https://arxiv.org/abs/2511.19417