Researchers at MIT have developed a tool called SymGen that makes it easier to verify the responses of large language models, a type of artificial intelligence. These models can sometimes “hallucinate” and generate incorrect or unsupported information in response to a query. To address this issue, human fact-checkers typically read through long documents cited by the model, but this process is time-consuming and error-prone.

SymGen generates responses with citations that point directly to the place in a source document, such as a given cell in a database. Users can hover over highlighted portions of the text response to see the data used to generate that specific word or phrase. The system was developed by a team whose members include Shannon Shen, Lucas Torroba Hennigen, and David Sontag.

In a user study, participants using SymGen verified responses about 20 percent faster than with manual procedures. The technology could help people catch errors made by large language models deployed in a range of real-world settings, from generating clinical notes to summarizing financial market reports.

Verifying AI Model Responses with SymGen

Large language models (LLMs) have revolutionized the field of artificial intelligence, but they are not without their limitations. One major issue is that these models can “hallucinate” or generate incorrect or unsupported information in response to a query. To address this problem, human fact-checkers are often employed to verify an LLM’s responses, especially in high-stakes settings like healthcare or finance. However, the validation process can be time-consuming and error-prone, which may prevent some users from deploying generative AI models in the first place.

Streamlining Validation with SymGen

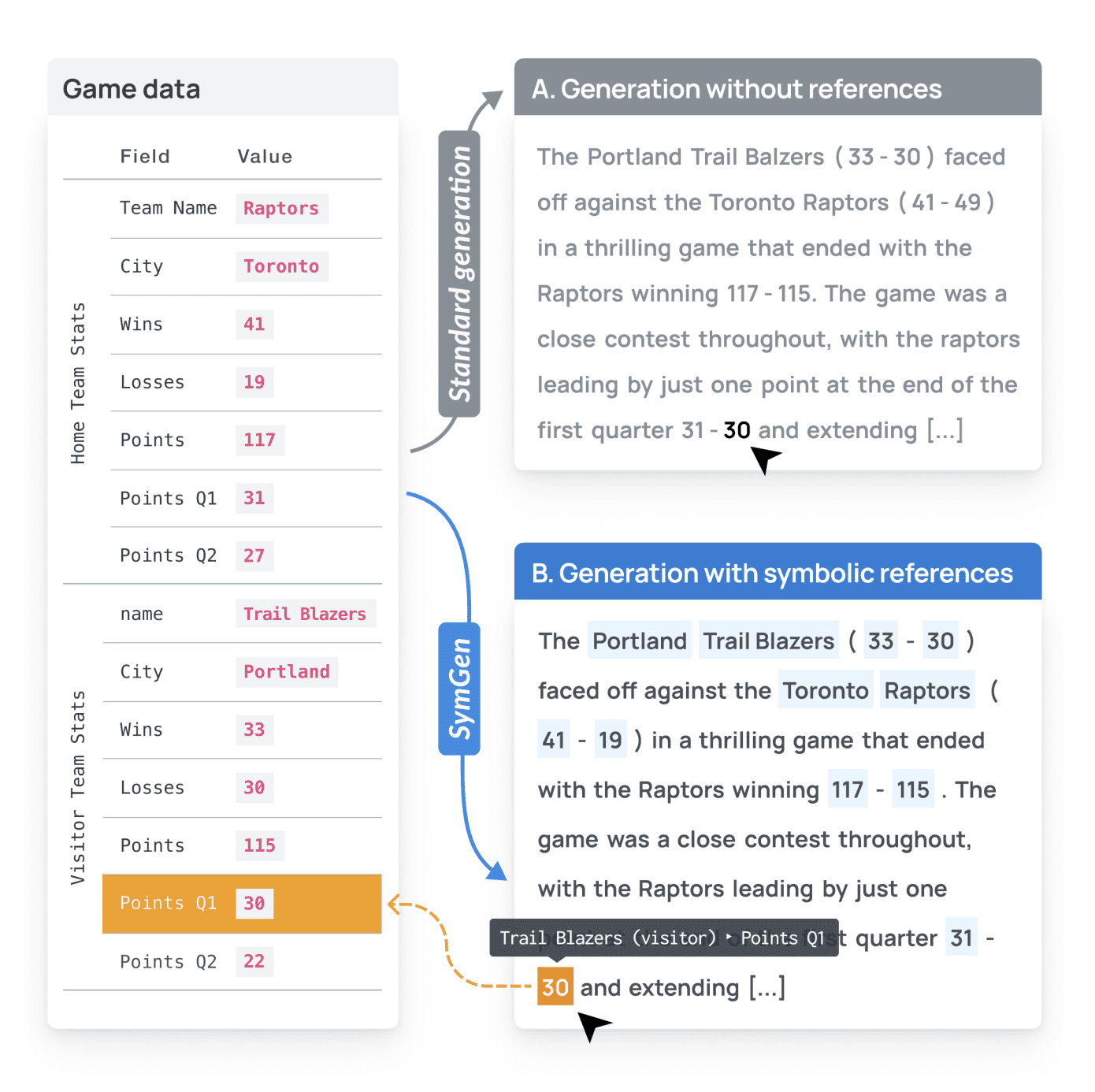

Researchers at MIT have developed a system called SymGen that aims to speed up verification by making it easier for humans to validate model outputs. SymGen prompts an LLM to generate its response in a symbolic form: whenever the model wants to cite information in its response, it must write a reference to the specific cell in the data table that contains that information. This intermediate step allows fine-grained references, so users can see exactly which part of the data each span of text in the output corresponds to.
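To make the idea of a symbolic response concrete, here is a minimal Python sketch. It assumes a hypothetical `{{row.column}}` placeholder syntax in which each placeholder names one cell of a source table; the article does not describe SymGen's actual syntax, and the function name `extract_references` is illustrative only. The sketch simply lists which cells a symbolic response cites, in order.

```python
import re

# Hypothetical placeholder syntax (an assumption, not SymGen's documented format):
# {{row.column}} names one cell of the source table.
REF_PATTERN = re.compile(r"\{\{\s*(\w+)\.(\w+)\s*\}\}")


def extract_references(symbolic_text):
    """Return the (row, column) pairs cited by a symbolic response, in order."""
    return [(m.group(1), m.group(2)) for m in REF_PATTERN.finditer(symbolic_text)]


symbolic = (
    "The {{game.home_team}} beat the {{game.away_team}} "
    "{{game.home_score}}-{{game.away_score}}."
)
print(extract_references(symbolic))
```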

SymGen then resolves each reference using a rule-based tool that copies the corresponding text from the data table into the model’s response. Because these values are copied verbatim, the parts of the text that correspond to data variables cannot misstate the cells they reference. In a user study, participants found that SymGen made it easier to verify LLM-generated text and validated the model’s responses about 20 percent faster than with standard methods.
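A rule-based resolver of the kind described above can be sketched in a few lines. This version, again assuming the hypothetical `{{row.column}}` syntax and an invented sample table, replaces each placeholder with the cell's text and records the character span it occupies. That span list is the sort of information an interface would need to highlight a phrase and show its source cell on hover. It is an illustration, not SymGen's code.

```python
import re

# Hypothetical {{row.column}} placeholder syntax (assumed for illustration).
REF_PATTERN = re.compile(r"\{\{\s*(\w+)\.(\w+)\s*\}\}")


def resolve(symbolic_text, table):
    """Copy each referenced cell into the text and record where it lands.

    Returns (text, spans), where each span is (start, end, row, column) in the
    final text. A reference to a missing cell raises KeyError.
    """
    parts, spans = [], []
    pos = 0      # read position in symbolic_text
    out_len = 0  # length of the output assembled so far
    for m in REF_PATTERN.finditer(symbolic_text):
        literal = symbolic_text[pos:m.start()]
        parts.append(literal)
        out_len += len(literal)
        row, col = m.group(1), m.group(2)
        value = str(table[row][col])  # copied verbatim from the table
        parts.append(value)
        spans.append((out_len, out_len + len(value), row, col))
        out_len += len(value)
        pos = m.end()
    parts.append(symbolic_text[pos:])  # trailing literal text
    return "".join(parts), spans


table = {"game": {"home_team": "Celtics", "away_team": "Knicks",
                  "home_score": 112, "away_score": 104}}
symbolic = ("The {{game.home_team}} beat the {{game.away_team}} "
            "{{game.home_score}}-{{game.away_score}}.")
text, spans = resolve(symbolic, table)
print(text)   # The Celtics beat the Knicks 112-104.
print(spans)
```

Because the values are copied rather than generated, each highlighted span matches its cell character for character, and a reference to a cell that does not exist fails loudly instead of being filled in.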

Symbolic References for Validation

Many LLMs are designed to generate citations, which point to external documents, along with their language-based responses so users can check them. However, these verification systems are usually designed as an afterthought, without considering the effort it takes for people to sift through numerous citations. SymGen approaches the validation problem from the perspective of the humans who will do the work.

The researchers’ approach is to design the prompt in a specific way to draw on the LLM’s existing capabilities. LLMs are trained on reams of data from the internet, and some of that data is recorded in “placeholder format,” where codes replace actual values. When SymGen prompts the model to generate a symbolic response, it uses a similar structure, so the model can produce symbolic responses that are easy to verify.
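The prompt design can be illustrated with a hypothetical prompt builder that lists every cell next to its placeholder and asks the model to write placeholders in place of values. The wording, the `{{row.column}}` syntax, and the name `build_symbolic_prompt` are assumptions for illustration; the article does not publish SymGen's actual prompt.

```python
def build_symbolic_prompt(table, request):
    """Assemble a prompt asking the model to cite cells via placeholders.

    Hypothetical wording and {{row.column}} syntax, for illustration only.
    """
    lines = ["Data (each value can be cited as {{row.column}}):"]
    for row, cells in table.items():
        for col, value in cells.items():
            placeholder = "{{" + row + "." + col + "}}"
            lines.append(f"  {placeholder} = {value}")
    lines.append("")
    lines.append(
        "Write the response, but wherever you state a value from the data, "
        "write its placeholder instead of the value itself."
    )
    lines.append(f"Request: {request}")
    return "\n".join(lines)


table = {"game": {"home_team": "Celtics", "away_team": "Knicks",
                  "home_score": 112, "away_score": 104}}
prompt = build_symbolic_prompt(table, "Summarize the game.")
print(prompt)
```

Showing each placeholder beside its value gives the model the same "code stands in for a value" pattern it has seen in templated text, which is the intuition the researchers describe.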

Limitations and Future Directions

While SymGen shows promise in streamlining the validation process, it is limited by the quality of the source data. The LLM could cite an incorrect variable, and a human verifier may be none the wiser. Additionally, the user must have source data in a structured format, like a table, to feed into SymGen.
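The limitation about incorrect variables shows up clearly in a small check. The sketch below, using the same hypothetical `{{row.column}}` syntax, flags references to cells that do not exist, but a reference to the wrong existing cell passes unnoticed. The function `unknown_references` and the sample data are invented for this example.

```python
import re

# Hypothetical {{row.column}} placeholder syntax (assumed for illustration).
REF_PATTERN = re.compile(r"\{\{\s*(\w+)\.(\w+)\s*\}\}")


def unknown_references(symbolic_text, table):
    """Return references that do not name any cell in the table."""
    return [
        (row, col)
        for row, col in REF_PATTERN.findall(symbolic_text)
        if col not in table.get(row, {})
    ]


table = {"game": {"home_team": "Celtics", "away_team": "Knicks",
                  "home_score": 112, "away_score": 104}}

# A made-up column is caught mechanically.
bad = "The {{game.home_team}} scored {{game.home_points}}."
print(unknown_references(bad, table))      # [('game', 'home_points')]

# Wrong team credited with the win, but every cell exists, so nothing is flagged.
swapped = "The {{game.away_team}} won {{game.home_score}}-{{game.away_score}}."
print(unknown_references(swapped, table))  # []
```

The second response credits the visiting team with the home team's win, yet every reference is valid, so only a person reading the highlighted spans against the table would catch it.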

Moving forward, the researchers are enhancing SymGen so it can handle arbitrary text and other forms of data. With that capability, it could help validate portions of AI-generated legal document summaries, for instance. They also plan to test SymGen with physicians to study how it could identify errors in AI-generated clinical summaries.
