MIT engineers have developed a handheld tool that lets robots learn tasks through teleoperation, kinesthetic training, or observation, combining three existing training methods in a single interface. The tool attaches to standard collaborative robotic arms and records both the movements made and the force applied during a task. The team tested it with volunteers who have manufacturing expertise, who used it to perform press-fitting and molding tasks. The aim is to broaden the range of people who can train robots and the range of skills robots can acquire.

Expanding Robotic Skillsets

The three-in-one training interface lets a robot learn a task through teleoperation, through kinesthetic training, or by observing a person perform the task. It attaches to collaborative robotic arms and lets users choose whichever training style best suits a given application.

The MIT team tested the versatile demonstration interface on a standard collaborative robotic arm. Volunteers with manufacturing expertise used it to perform press-fitting and molding tasks.

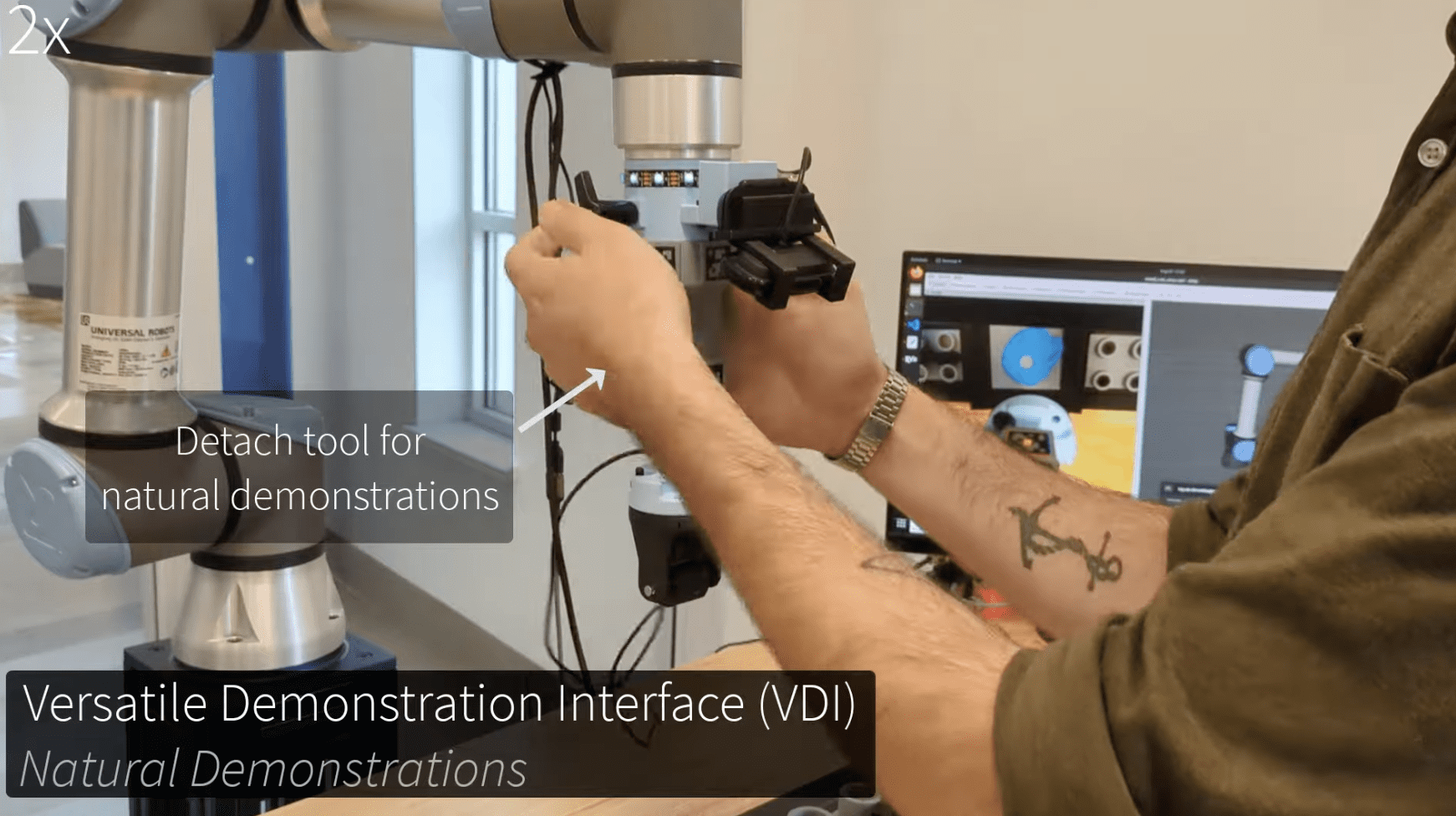

The tool builds on an emerging strategy in robot learning called learning from demonstration, or LfD, which aims to design robots that can be trained in more natural and intuitive ways. The team engineered the versatile demonstration interface to combine three distinct methods of robot instruction, so that robots can learn from a wider range of people and for a greater variety of tasks.

Because it makes training more flexible, the interface could broaden both the range of people who can teach robots and the set of skills robots can learn. For example, a person could remotely train a robot to handle hazardous materials, physically guide a robot through packaging, or demonstrate a task such as applying a logo for the robot to observe and replicate.

Methods of Robotic Instruction

The interface is designed to fit onto a typical collaborative robotic arm. It is equipped with a camera and markers that track the tool's position and movement over time, along with force sensors that measure how much pressure is applied during a task. With the interface attached, the entire robot can be controlled remotely (teleoperation), and the interface's camera records the robot's movements, which the robot can then use to learn the task.

Alternatively, a person can physically move the robot with the interface attached (kinesthetic training), and the interface records these movements so that the robot can replicate them. The interface can also be detached and used by a person to perform the task directly (natural teaching): the camera records the human demonstration, and the robot learns by observing and mimicking it.

Existing LfD methods generally fall into three categories: teleoperation, kinesthetic training, and natural teaching. Each may excel in different scenarios. The team designed the versatile demonstration interface to bring together these three different ways someone might want to teach a robot, rather than forcing a choice of one.
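The article does not describe the interface's software or data format. As a purely hypothetical sketch, a demonstration captured in any of the three modes could be stored as a stream of time-stamped samples that pair the tool position (from the camera and markers) with the measured force. The class names, fields, and units below (`DemoMode`, `Sample`, `Demonstration`, metres, newtons) are illustrative assumptions, not the MIT team's implementation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field
from enum import Enum


class DemoMode(Enum):
    """The three LfD categories the interface combines (names are illustrative)."""
    TELEOPERATION = "teleoperation"        # robot controlled remotely
    KINESTHETIC = "kinesthetic training"   # person physically guides the arm
    NATURAL = "natural teaching"           # person uses the detached tool


@dataclass
class Sample:
    """One hypothetical time-stamped reading from the interface."""
    t: float                                # time since start, seconds (assumed)
    position: tuple[float, float, float]    # tool position from camera/markers, metres (assumed)
    force: float                            # force from the force sensors, newtons (assumed)


@dataclass
class Demonstration:
    """A single recorded demonstration; the same format is used for every mode."""
    mode: DemoMode
    samples: list[Sample] = field(default_factory=list)

    def record(self, t: float, position: tuple[float, float, float], force: float) -> None:
        # Append one synchronised position-and-force reading.
        self.samples.append(Sample(t, position, force))

    def peak_force(self) -> float:
        # Largest force seen during the demonstration (0.0 if nothing recorded).
        return max((s.force for s in self.samples), default=0.0)

    def path_length(self) -> float:
        # Sum of straight-line distances between consecutive tool positions.
        return sum(
            math.dist(a.position, b.position)
            for a, b in zip(self.samples, self.samples[1:])
        )


# Usage: a short, made-up kinesthetic demonstration.
demo = Demonstration(DemoMode.KINESTHETIC)
demo.record(0.0, (0.0, 0.0, 0.0), 0.5)
demo.record(0.1, (0.0, 0.0, 0.03), 2.0)
demo.record(0.2, (0.0, 0.04, 0.03), 12.5)
print(demo.peak_force())              # 12.5
print(round(demo.path_length(), 3))   # 0.07
```

Keeping the mode as a field on one record type reflects the idea behind the interface: the same position-and-force data is captured whichever way the person chooses to teach, so downstream learning code need not care how the demonstration was given.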

Versatility and Future Implications

Because the interface attaches to standard collaborative robotic arms, it can be used with existing robotic systems rather than requiring extensive modifications or specialised equipment, which could ease its adoption in manufacturing and other sectors.

The system combines visual and force measurements to build a detailed record of how a task is performed: the camera and markers track the tool's position and movement over time, while the force sensors measure the pressure applied. Capturing force as well as motion is intended to help the robot learn complex tasks more precisely.

A key feature of the interface is that the training approach can be matched to the specific task and to the user's expertise, whether that means remote teleoperation, physical guidance, or a hands-on demonstration. This adaptability could make training more efficient and widen the skills a robot acquires.

By combining teleoperation, kinesthetic training, and natural teaching in one device, the team aims to overcome the limitations of any single LfD method and offer a more robust, versatile way to train robots.
