Mistral AI has introduced Codestral, a generative AI model designed for code generation tasks. Trained on more than 80 programming languages, Codestral assists developers across many coding environments and projects, saving time and reducing the risk of errors. Mistral AI reports that it sets a new standard for the trade-off between performance and latency in code generation. Codestral is available for research and testing under the new Mistral AI Non-Production License and can be downloaded from Hugging Face. It is integrated into LlamaIndex and LangChain, and can be used in VSCode and JetBrains IDEs through Continue.dev and Tabnine. Early feedback from developers and from companies such as JetBrains, Tabnine, and Sourcegraph has been positive.

Introduction to Codestral: A New AI Model for Code Generation

Mistral AI has introduced Codestral, a generative AI model designed specifically for code generation tasks. It is the company's first code model and is aimed at helping developers write and interact with code. Because it handles both English and code, Codestral can be used to build advanced AI applications for software developers.

Codestral’s Language Proficiency and Functionality

Codestral is trained on a diverse dataset covering more than 80 programming languages, including popular ones such as Python, Java, C, C++, JavaScript, and Bash, as well as more specialized ones such as Swift and Fortran. This broad coverage lets Codestral assist developers across a wide range of coding environments and projects. The model saves developers time and effort by completing functions, writing tests, and filling in partial code through a fill-in-the-middle mechanism. Working with Codestral can help developers improve their coding skills and reduce the risk of errors and bugs.
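Fill-in-the-middle means the model sees the code before a gap (the prefix) and the code after it (the suffix), and generates only what belongs in between. The minimal sketch below illustrates that framing with plain string slicing. The names `FimExample`, `make_fim_example`, and `fill` are hypothetical, and the sketch does not show the actual prompt format Codestral uses internally.

```python
from dataclasses import dataclass


@dataclass
class FimExample:
    """Hypothetical container for one fill-in-the-middle task."""
    prefix: str  # code before the gap (above the cursor)
    suffix: str  # code after the gap (below the cursor)
    middle: str  # the span a model would be asked to generate


def make_fim_example(source: str, start: int, end: int) -> FimExample:
    """Cut source[start:end] out as the 'middle' to be filled in."""
    if not 0 <= start <= end <= len(source):
        raise ValueError("invalid span")
    return FimExample(source[:start], source[end:], source[start:end])


def fill(example: FimExample, generated_middle: str) -> str:
    """Reassemble the file once a middle has been generated."""
    return example.prefix + generated_middle + example.suffix


source = "def add(a, b):\n    return a + b\n\nprint(add(2, 3))\n"
start = source.index("return")
end = source.index("\n", start)
ex = make_fim_example(source, start, end)
print(repr(ex.middle))                # 'return a + b'
print(fill(ex, ex.middle) == source)  # True
```

Only the middle is produced, so whatever the model generates must fit between code the developer has already written above and below the cursor.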

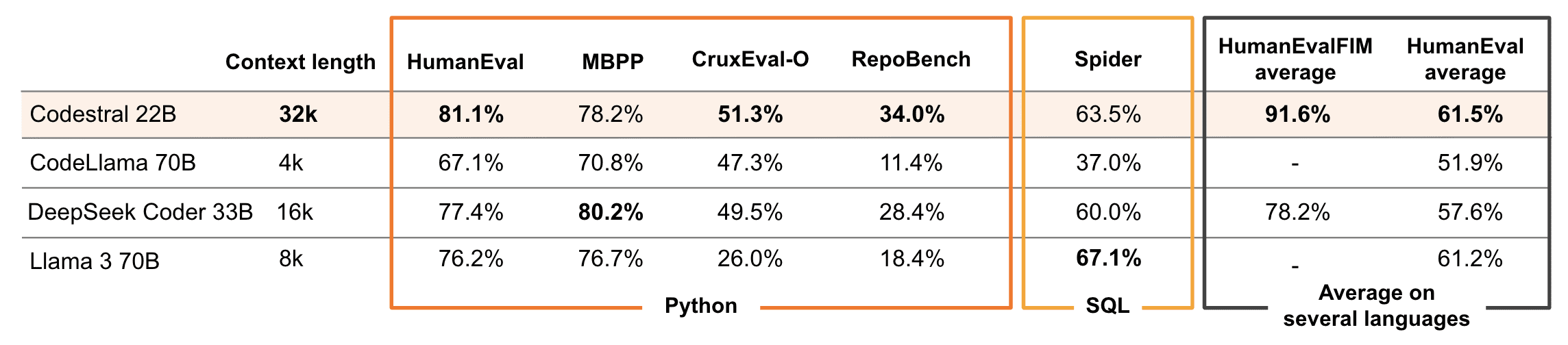

Codestral’s Performance and Benchmarking

According to Mistral AI, Codestral, a 22B-parameter model, sets a new standard on the performance/latency trade-off for code generation, outperforming previous coding models. Its 32k-token context window, larger than the 4k, 8k, or 16k of competing models, lets it outperform all other models on RepoBench, a long-range evaluation for code generation. Codestral has been evaluated in Python, SQL, and additional languages against existing code-specific models with higher hardware requirements. Its fill-in-the-middle performance has also been assessed in Python, JavaScript, and Java against DeepSeek Coder 33B.
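The practical effect of a larger context window is that more surrounding code can be supplied to the model in a single request. The sketch below is a hypothetical illustration, not how Codestral or RepoBench actually select context: it approximates tokens as whitespace-separated words (real tokenizers count differently) and greedily packs equally sized, priority-ordered snippets into budgets of 4k, 8k, 16k, and 32k tokens.

```python
def approx_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


def pack_context(snippets: list[str], budget: int) -> list[str]:
    """Keep snippets in priority order while the running total fits the budget."""
    packed, used = [], 0
    for snippet in snippets:
        cost = approx_tokens(snippet)
        if used + cost > budget:
            break  # stop at the first snippet that would overflow the window
        packed.append(snippet)
        used += cost
    return packed


# Ten hypothetical repository files of 3,000 "tokens" each, most relevant first.
files = [("tok " * 3000).strip() for _ in range(10)]
for window in (4_000, 8_000, 16_000, 32_000):
    print(window, len(pack_context(files, window)))
# 4000 1
# 8000 2
# 16000 5
# 32000 10
```

Under these assumptions, a 4k window holds one such file while a 32k window holds all ten, which is the kind of difference a long-range benchmark like RepoBench is meant to exercise.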

Getting Started with Codestral

Codestral is a 22B open-weight model released under the new Mistral AI Non-Production License, which permits its use for research and testing purposes. It can be downloaded from Hugging Face. With this release, Mistral AI has also introduced a new endpoint, codestral.mistral.ai, which is recommended for users who call the Instruct or Fill-In-the-Middle routes from inside their IDE. The API key for this endpoint is managed at the personal level and is not bound by the usual organization rate limits. Codestral is also available on the usual API endpoint, api.mistral.ai, where queries are billed per token.
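To make the two endpoints concrete, the sketch below builds (but does not send) a fill-in-the-middle HTTP request using only the Python standard library. The `/v1/fim/completions` path, the `codestral-latest` model name, and the `prompt`/`suffix`/`max_tokens` body fields are assumptions to verify against Mistral AI's API documentation before use, and `build_fim_request` is a hypothetical helper.

```python
import json
import urllib.request

# ASSUMPTIONS: the /v1/fim/completions path, the "codestral-latest" model name,
# and the body field names should be checked against Mistral AI's API docs.
CODESTRAL_BASE = "https://codestral.mistral.ai"  # personal-key endpoint for IDE use
GENERAL_BASE = "https://api.mistral.ai"          # usual endpoint, billed per token


def build_fim_request(api_key: str, prefix: str, suffix: str,
                      base_url: str = CODESTRAL_BASE,
                      max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a hypothetical fill-in-the-middle request."""
    body = {
        "model": "codestral-latest",
        "prompt": prefix,  # code before the cursor
        "suffix": suffix,  # code after the cursor
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/v1/fim/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_fim_request("YOUR_API_KEY", "def add(a, b):\n    ", "\n\nprint(add(2, 3))\n")
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```

Switching `base_url` to `GENERAL_BASE` targets the usual api.mistral.ai endpoint instead; the only difference in this sketch is which host (and therefore which key and billing model) the request goes to.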

Codestral’s Integration and Feedback from the Developer Community

Codestral has been integrated into popular tools for developer productivity and AI application development, such as LlamaIndex and LangChain. It is also available in VSCode and JetBrains IDEs through Continue.dev and Tabnine. The developer community has responded positively to Codestral's speed, quality, and performance. For instance, Nate Sesti, CTO and co-founder of Continue.dev, said that a public autocomplete model with this combination of speed and quality had not existed before, and that it would be a phase shift for developers everywhere. Vladislav Tankov, Head of JetBrains AI, expressed excitement about the capabilities Mistral has unveiled and its strong focus on code and development assistance.