The demand for faster data transfer in high-performance computing continues to grow alongside the increasing power of modern graphics processing units. Researchers including Gabin Schieffer, Jacob Wahlgren, and Ruimin Shi from KTH Royal Institute of Technology, together with colleagues at Lawrence Livermore National Laboratory, have investigated communication between multiple AMD MI300A Accelerated Processing Units (APUs), each of which combines CPU, GPU, and memory in a single package. Their work focuses on the Infinity Fabric interconnect, which links the four APUs in each node of leadership supercomputers such as El Capitan, and examines how efficiently data moves directly between the GPUs. By designing targeted benchmarks and comparing programming interfaces such as HIP, MPI, and RCCL, the team identifies key strategies for optimizing communication and improving the performance of demanding scientific applications, as demonstrated by their optimization of the Quicksilver and CloverLeaf codes.

MI300A APU Unified Memory Performance Analysis

This study presents a comprehensive performance analysis of the AMD MI300A APU, focusing on its unified memory architecture and interconnects. It investigates how sharing a single pool of physical memory between the CPU and GPU, a departure from traditional discrete GPU setups, affects performance. The researchers used a range of tests to characterize performance under different memory access patterns and communication scenarios. The MI300A delivers high memory bandwidth, but latency varies with how data is accessed and where it resides in the memory hierarchy: sequential access benefits greatly from the high bandwidth, while random access is more sensitive to latency. The unified memory architecture simplifies programming and data management by eliminating explicit data transfers between CPU and GPU memory. However, performance can vary significantly with the workload, the memory access pattern, and the system configuration. The research also compares the MI300A with traditional discrete GPU systems, highlighting the advantages and disadvantages of the unified memory approach.
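To see why the access pattern matters, here is a minimal latency-bandwidth sketch, not a model taken from the paper: each request pays a fixed latency and then streams its bytes at peak bandwidth. The latency and peak values are hypothetical placeholders, not MI300A measurements, and the model deliberately ignores the many requests a real GPU keeps in flight to hide latency. Small, scattered requests (typical of random access) spend most of their time waiting, while large, contiguous ones (typical of sequential access) approach peak.

```python
# Toy latency-bandwidth model. Parameters are hypothetical, not MI300A data,
# and requests are issued one at a time (no overlap of in-flight requests).


def effective_bandwidth(request_bytes: int, latency_s: float, peak_bytes_per_s: float) -> float:
    """Bytes/s achieved when every request pays `latency_s` before streaming at peak."""
    time_per_request = latency_s + request_bytes / peak_bytes_per_s
    return request_bytes / time_per_request


if __name__ == "__main__":
    LATENCY_S = 500e-9  # hypothetical per-request latency (500 ns)
    PEAK_BPS = 1e12     # hypothetical peak bandwidth (1 TB/s)
    # 64 B mimics a scattered cache-line access; larger sizes mimic streaming.
    for size in (64, 4096, 1 << 20, 1 << 26):
        bw = effective_bandwidth(size, LATENCY_S, PEAK_BPS)
        print(f"{size:>10} B requests -> {bw / PEAK_BPS:8.3%} of peak")
```

In this model the achieved fraction of peak depends only on how large each request is relative to the bandwidth-latency product, which is why coalescing accesses into large contiguous transfers pays off.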

The team developed specialized micro-benchmarks and profiling tools to analyze the MI300A's performance in detail. The resulting insights into the APU and its unified memory architecture are important for developers and system architects optimizing applications and systems for this platform. The MI300A design aims to overcome the bottlenecks that data movement between processors causes in high-performance computing applications: by co-locating processing units and memory, it reduces latency and increases bandwidth. A key innovation is an architecture that moves beyond traditional designs, which give the CPU and GPU separate memory spaces.

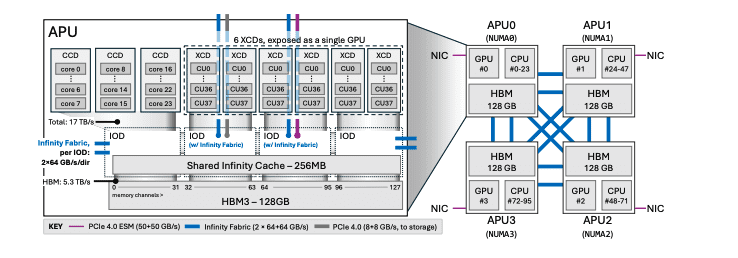

The MI300A uses a unified physical memory model in which the CPU and GPU access the same memory directly, simplifying data sharing and reducing overhead. This is enabled by a chiplet-based design that integrates CPU cores, GPU compute units, and memory controllers into a single package. The APU also includes a large "Infinity Cache" shared by the CPU and GPU, which further accelerates data access and reduces memory contention. To assess the system's performance comprehensively, the researchers designed benchmarks that target distinct communication scenarios.

These benchmarks cover the communication mechanisms available in a multi-APU node: direct memory access from the GPU, explicit memory transfers between APUs, and both point-to-point and collective inter-process communication, the latter involving all four APUs. The team compared the efficiency of the HIP, MPI, and RCCL programming interfaces to identify the most effective strategies for inter-APU communication, and also examined how the choice of memory allocator affects performance and scalability. The overarching goal is to understand and optimize data movement within multi-APU systems so that applications can fully exploit them. To demonstrate the practical value of the findings, the researchers optimized two real-world high-performance computing applications, Quicksilver and CloverLeaf; identifying and removing communication bottlenecks in these codes yielded substantial speedups.
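Collective operations such as all-reduce are where all four APUs communicate at once. The sketch below simulates a ring all-reduce in plain Python. Ring all-reduce is one of the algorithms collective libraries such as RCCL can use, but this is only an illustration of the communication pattern, not the paper's benchmark code, and `ring_allreduce` is a hypothetical helper. Each rank's buffer is split into one chunk per rank: a reduce-scatter phase passes partial sums around the ring, then an all-gather phase circulates the finished chunks.

```python
from typing import List, Tuple


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring all-reduce (sum); rank r only ever sends to rank (r + 1) % p."""
    p = len(buffers)
    n = len(buffers[0])
    # Chunk c covers elements [bounds[c][0], bounds[c][1]); sizes may differ by one.
    bounds = [(c * n // p, (c + 1) * n // p) for c in range(p)]
    data = [list(b) for b in buffers]  # copy so the inputs are not mutated

    def outgoing(chunk_of_rank) -> List[Tuple[int, List[float]]]:
        # Snapshot every rank's payload first: all sends in a step happen "simultaneously".
        sends = []
        for r in range(p):
            c = chunk_of_rank(r)
            lo, hi = bounds[c]
            sends.append((c, data[r][lo:hi]))
        return sends

    # Phase 1, reduce-scatter: after p-1 steps rank r holds the full sum of chunk (r+1) % p.
    for step in range(p - 1):
        for r, (c, payload) in enumerate(outgoing(lambda r: (r - step) % p)):
            lo, _ = bounds[c]
            dst = (r + 1) % p
            for i, v in enumerate(payload):
                data[dst][lo + i] += v

    # Phase 2, all-gather: forward completed chunks around the ring, overwriting.
    for step in range(p - 1):
        for r, (c, payload) in enumerate(outgoing(lambda r: (r + 1 - step) % p)):
            lo, hi = bounds[c]
            data[(r + 1) % p][lo:hi] = payload

    return data


if __name__ == "__main__":
    # Four ranks, mirroring the four APUs of one node; the values are arbitrary.
    ranks = [[float(r * 10 + i) for i in range(8)] for r in range(4)]
    print(ring_allreduce(ranks)[0])
```

With P ranks and N elements (N divisible by P), each rank sends 2(P-1)N/P elements in total, so per-rank traffic stays close to 2N as P grows. That property is why ring algorithms suit large, bandwidth-bound collectives.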

Using this purpose-built benchmark suite, the researchers measured data-movement performance through each software interface and compared the results against theoretical hardware limits. The study yields practical guidance for optimizing communication, including how to choose programming interfaces and memory allocation strategies. Notably, the research goes beyond the MI300A APU, providing comparative data from MI250X systems to highlight the advances offered by the integrated architecture. A significant finding concerns the impact of the unified memory architecture on communication efficiency.
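Comparing measurements against hardware limits comes down to two steps: time a transfer to obtain the achieved bandwidth, then divide by the theoretical peak. The sketch below shows that bookkeeping, with a host-memory copy standing in for a GPU or inter-APU transfer. `measure_copy_bandwidth` and `efficiency` are hypothetical helpers, and the peak value is a placeholder, not a published Infinity Fabric figure. Taking the best of several repetitions reduces noise from warm-up effects such as first-touch page faults.

```python
import time


def measure_copy_bandwidth(nbytes: int, repeats: int = 5) -> float:
    """Return the best-of-`repeats` bandwidth (bytes/s) of one bulk host copy.

    A host-to-host copy stands in for a device or inter-APU transfer here.
    """
    if nbytes <= 0 or repeats <= 0:
        raise ValueError("nbytes and repeats must be positive")
    src = bytearray(nbytes)
    dst = bytearray(nbytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # one in-place bulk copy of nbytes
        best = min(best, time.perf_counter() - start)
    # Guard against a zero reading from a coarse timer on very small copies.
    return nbytes / max(best, 1e-9)


def efficiency(measured_bps: float, peak_bps: float) -> float:
    """Fraction of the theoretical peak actually achieved."""
    return measured_bps / peak_bps


if __name__ == "__main__":
    PEAK_BPS = 100e9  # hypothetical link peak (100 GB/s), for illustration only
    for size in (1 << 16, 1 << 20, 1 << 24):
        bw = measure_copy_bandwidth(size)
        print(f"{size:>9} B: {bw / 1e9:7.2f} GB/s, {efficiency(bw, PEAK_BPS):6.1%} of peak")
```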

Unlike systems with separate CPU and GPU memory spaces, the MI300A's shared memory requires careful management to avoid bottlenecks. The researchers investigated how the memory allocation method, and whether data is first accessed by the CPU or the GPU, affect transfer rates, and identified strategies that maximize them. The study also evaluates hardware features such as the SDMA (System DMA) engines and the XNACK page-fault retry mechanism and their effect on communication performance. The benefits of these optimizations carry over to real applications: Quicksilver and CloverLeaf achieved up to a 2.15x speedup in communication performance. Across CPU-GPU transfers, direct GPU-to-GPU communication, and collective operations involving multiple GPUs, the results show that memory allocation strategy and programming interface both have a significant impact on data-movement performance within these systems.
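The 2.15x figure refers to communication, so its effect on total runtime depends on how much of a run is spent communicating. Amdahl's law captures this: if a fraction f of runtime is communication and that part becomes s times faster, the overall speedup is 1 / ((1 - f) + f / s). The communication fractions below are hypothetical; the article does not report them for Quicksilver or CloverLeaf.

```python
def overall_speedup(comm_fraction: float, comm_speedup: float) -> float:
    """Amdahl's law: whole-run speedup when only the communication part gets faster."""
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be in [0, 1]")
    if comm_speedup <= 0.0:
        raise ValueError("comm_speedup must be positive")
    return 1.0 / ((1.0 - comm_fraction) + comm_fraction / comm_speedup)


if __name__ == "__main__":
    COMM_SPEEDUP = 2.15  # best communication speedup reported in the article
    for f in (0.1, 0.3, 0.5):  # hypothetical shares of runtime spent communicating
        print(f"comm = {f:.0%} of runtime -> overall {overall_speedup(f, COMM_SPEEDUP):.2f}x")
```

For example, if half of a run were communication, a 2.15x communication speedup would shorten the whole run by roughly 1.37x.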

👉 More information

🗞 Inter-APU Communication on AMD MI300A Systems via Infinity Fabric: a Deep Dive

🧠 ArXiv: https://arxiv.org/abs/2508.11298